AI in the Creative Design Process: Guide (2026)

Explore the role of AI in the creative design process, from ideation and concept generation to prototyping and iteration.

Design teams at startups face a paradox. They have to ship distinctive products quickly with just a handful of people. Traditional creative workflows—concept sketches, prototypes, feedback loops and endless iterations—can be too slow. Meanwhile, computer‑generated images and text are everywhere. This overlap of pressure and possibility has kicked off a new conversation about how algorithms fit into day‑to‑day design.

In this article, I unpack why designers are experimenting with machine learning tools, which principles I’ve seen succeed at early‑stage startups and how to navigate the trade‑offs. You’ll walk away with a clear framework, examples and a forward‑looking view of how AI tools for the creative design process will evolve.

What does “creative design process” mean?

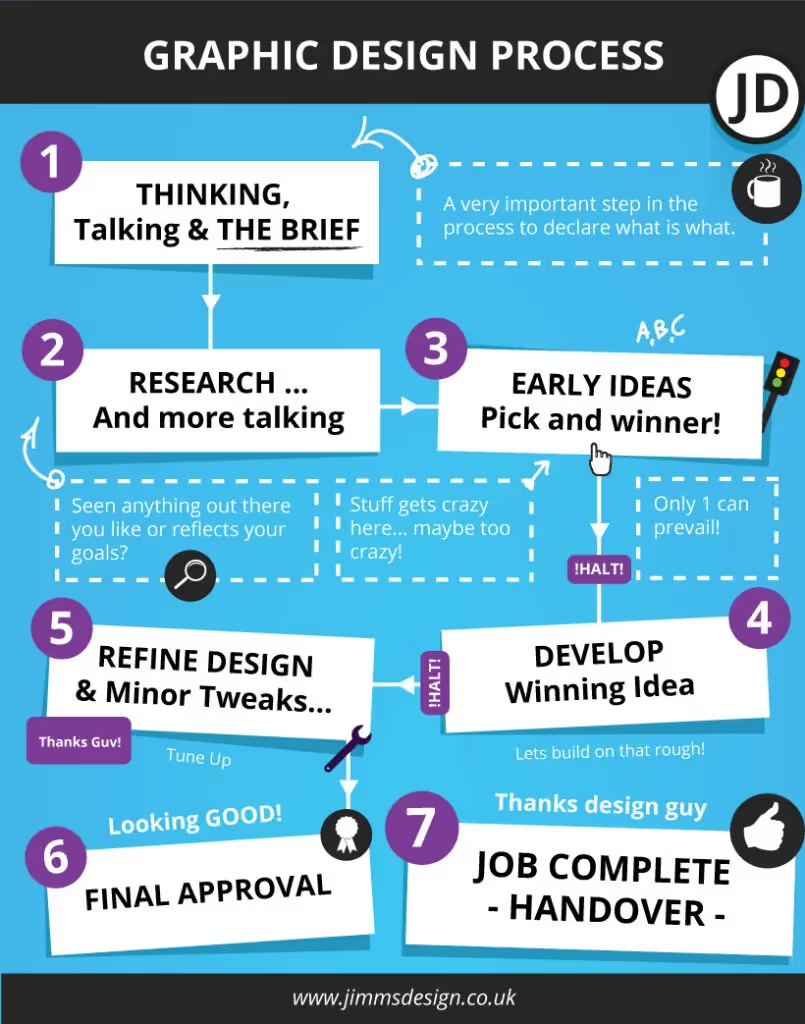

Most product teams follow a well‑established pattern:

- Concepting and sketches. Designers capture ideas as moodboards, rough sketches or wireframes.

- Prototyping. They translate a handful of concepts into interactive prototypes or visual compositions.

- User feedback. The team puts prototypes in front of real people, gathers reactions and adjusts.

- Production. Refined assets are passed to engineers for implementation.

- Iteration. Based on performance data and additional feedback, the cycle repeats.

These tasks rarely happen in isolation. Product managers set priorities, engineers identify feasibility constraints and stakeholders supply business context. At successful companies, everyone collaborates closely. When resources are tight, each specialist often wears multiple hats.

Why this process is under pressure

Several forces are squeezing traditional workflows:

- Speed to market. Users compare your product against the best in the world. Founders feel pressure to ship and adjust quickly.

- Personalization and content volume. Audiences expect tailored experiences and fresh visuals on every platform. That demands more design output than small teams can produce manually.

- Resource constraints. Early‑stage companies cannot hire large creative departments. A handful of designers handle brand, product and marketing.

These realities make it tempting to reach for automation. However, the hype around generative models can lead teams to unrealistic expectations. In 2024, the Nielsen Norman Group found that few design‑specific tools meaningfully enhanced workflows and that practitioners were mostly using chatbots for drafting copy and brainstorming. A 2025 follow‑up observed improvements but concluded that specialized features, not all‑in‑one generators, offered the most value. That distinction informs the advice that follows.

What artificial intelligence brings to design

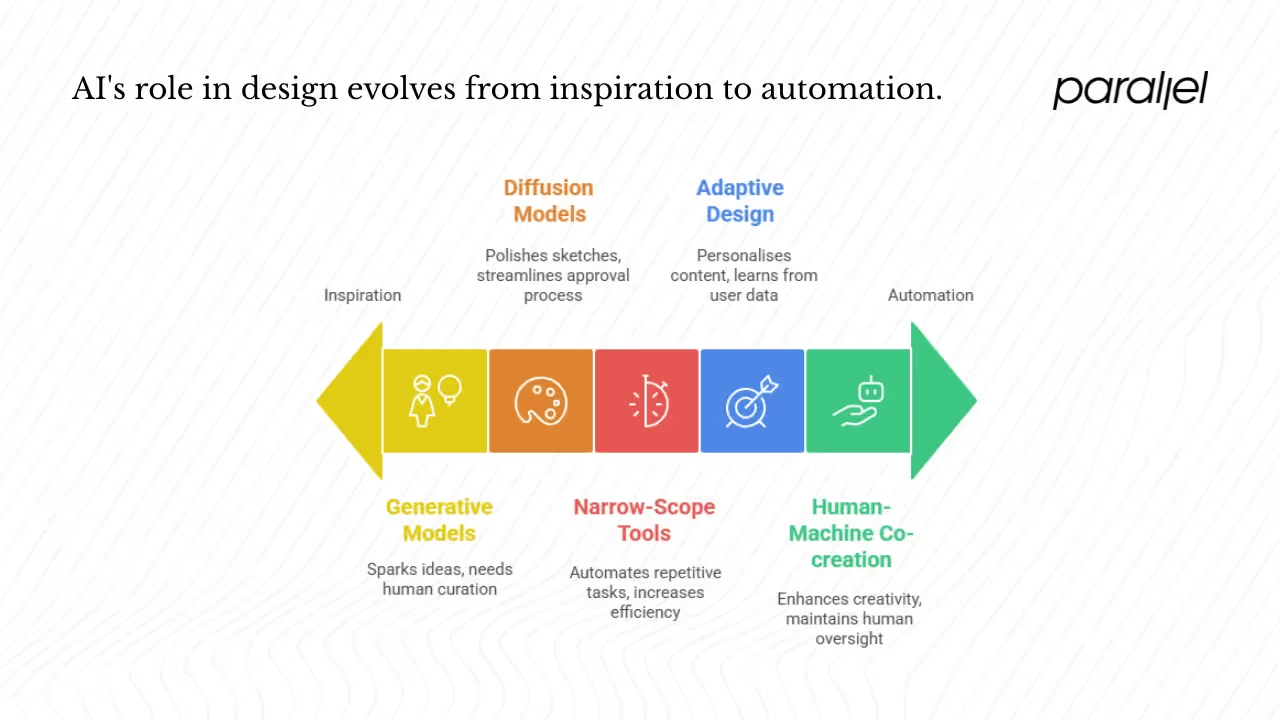

When I say “artificial intelligence” in this context, I’m referring to systems that learn from data and generate new outputs—images, text, layouts or recommendations. Three concepts matter most:

- Generative algorithms. Diffusion models and transformers create images or text from prompts; style‑transfer networks re‑render an existing image in the look of a reference style.

- Creative automation. Models that handle repetitive production tasks such as resizing, background removal or generating variants.

- Data‑driven guidance. Systems that analyse user behaviour and performance metrics to propose design adjustments.

Used thoughtfully, these tools extend human creativity rather than replace it. Even the skeptical 2024 study above acknowledged that text‑based chatbots help with brainstorming and that designers already use generative models for placeholder images. As the 2025 update notes, narrow features such as layer renaming and copy rewriting in Figma save time and let designers focus on higher‑value tasks.

This tension sets the stage for what adopting AI in the creative design process really means: a careful balance between using algorithms for speed and keeping human judgment at the centre. Teams that thrive treat artificial intelligence as a creative partner, not a replacement.

Key technologies and how they map into design

Artificial intelligence isn’t one monolithic capability. Different techniques support different stages of the design cycle. Below are the key categories and their pros and cons.

1) Concept generation and ideation

Generative models can produce hundreds of visual concepts or word clouds from a single prompt. Platforms like Adobe Firefly or Midjourney take written descriptions and output images with different colours and styles. In IDEO’s 2024 study of 1,000 business leaders, those who used algorithmically generated questions produced 56 percent more ideas, with a 13 percent increase in diversity and 27 percent increase in detail compared with a control group. Instead of staring at a blank page, teams can quickly explore a broad solution space.

That said, novelty comes with risk. Generators trained on public datasets may yield derivative or biased outcomes. In our work at Parallel, we use these tools as inspiration—not as final deliverables. Designers still curate, remix and inject their own perspective. Without that human filter, outputs can feel generic.

2) Visualization and prototyping

Once you have an idea, you need to make it tangible. Diffusion models and style‑transfer algorithms can turn prompts or rough sketches into polished visuals. In a case study from Adobe’s design team, a veteran poster designer replaced his usual sketching with Firefly prompts. He found that generative methods streamlined ideation, client approval and production, cutting the time from concept to completion and allowing him to explore more ideas than he could by hand. He still had to iterate prompts and manually refine the best outputs, but the overall timeline shrank.

Product teams can also use these models to generate quick prototypes with realistic content. Some plug‑ins for Figma or Webflow allow you to create entire screens from a brief description. The Nielsen Norman Group cautions that these end‑to‑end generators are still inconsistent—identical prompts can yield wildly different layouts. Therefore, treat these as starting points rather than finished designs.

3) Creative automation and production

Beyond concepting, many design tasks are repetitive. Resizing assets for different platforms, renaming layers and generating A/B variations consume time but don’t require deep creativity. Narrow‑scope tools excel here. Figma’s 2025 release includes features that automatically rename layers based on content and context, rewrite copy from a short prompt and find similar assets across files. Khroma uses pattern recognition to assemble colour palettes.
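Resizing is mostly geometry, which is why it automates well. The sketch below, written in Python with only the standard library, plans a centred crop and a final size for each output format from one master image. The format names and pixel sizes are illustrative assumptions, not official platform specifications; swap in the ones your channels actually use.

```python
from dataclasses import dataclass

# Example output formats. Names and pixel sizes are illustrative assumptions,
# not official platform specifications.
TARGETS = {
    "square_post": (1080, 1080),
    "story": (1080, 1920),
    "link_banner": (1200, 628),
}


@dataclass(frozen=True)
class CropPlan:
    name: str
    box: tuple[int, int, int, int]  # (left, top, right, bottom) in source pixels
    size: tuple[int, int]           # final output size after scaling


def center_crop_box(src_w: int, src_h: int, dst_w: int, dst_h: int) -> tuple[int, int, int, int]:
    """Largest centred region of the source that has the target aspect ratio."""
    # Compare aspect ratios by integer cross-multiplication to avoid float error.
    if src_w * dst_h > src_h * dst_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_w = src_h * dst_w // dst_h
        left = (src_w - crop_w) // 2
        return (left, 0, left + crop_w, src_h)
    # Source is taller than (or equal to) the target: keep full width, trim top and bottom.
    crop_h = src_w * dst_h // dst_w
    top = (src_h - crop_h) // 2
    return (0, top, src_w, top + crop_h)


def plan_exports(src_w: int, src_h: int, targets: dict[str, tuple[int, int]] = TARGETS) -> list[CropPlan]:
    """One crop-and-scale plan per target format for a single master image."""
    return [
        CropPlan(name, center_crop_box(src_w, src_h, w, h), (w, h))
        for name, (w, h) in targets.items()
    ]


if __name__ == "__main__":
    for plan in plan_exports(3000, 2000):
        print(plan)
```

If your pipeline uses Pillow, each plan maps onto `img.crop(plan.box).resize(plan.size)`. A centred crop is the simplest policy; it can cut off off‑centre subjects, so a designer should still review the results or set a focal point by hand.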

In advertising, platforms like AdCreative.ai generate campaign images and text variants at scale. We’ve seen marketing teams use such systems to produce dozens of ad banners in minutes, then iterate based on click‑through data. This is where artificial intelligence shows clear, quantifiable gains: tasks that took hours now take seconds.

4) Design optimisation and personalisation

The promise of adaptive design goes beyond asset creation. When connected to analytics, models can suggest layout adjustments, copy variations or personalised content based on user behaviour. These systems learn from real‑world data and feed it back into the design cycle. For instance, generative components in modern design systems allow multiple colour schemes or content arrangements depending on the viewer’s profile.

Emergent “generative user interfaces” adapt screens in real time. While still experimental, this shift means designers will create flexible frameworks rather than fixed mockups. It also raises new questions: How do we maintain consistency? When does adaptation cross into manipulation? Your team must stay in control.
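One way to keep that control is to let adaptive logic choose only among variants the design team has already approved. The Python sketch below assumes a simple viewer profile and an optional suggestion from some model; the profile fields, variant names and the 600‑pixel breakpoint are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Variants the design team has reviewed and approved (hypothetical names).
APPROVED_THEMES = {"default", "dark", "high_contrast"}
APPROVED_LAYOUTS = {"standard", "compact"}


@dataclass
class ViewerProfile:
    prefers_dark_mode: bool = False
    needs_high_contrast: bool = False
    screen_width_px: int = 1280


def choose_variant(
    profile: ViewerProfile,
    suggestion: Optional[Tuple[str, str]] = None,
) -> Tuple[str, str]:
    """Pick a (theme, layout) pair for one viewer."""
    # Rule-based baseline derived directly from the profile.
    theme = "dark" if profile.prefers_dark_mode else "default"
    layout = "compact" if profile.screen_width_px < 600 else "standard"

    # An adaptive model may propose a variant, but it is only used
    # when both parts come from the approved design-system set.
    if suggestion is not None:
        s_theme, s_layout = suggestion
        if s_theme in APPROVED_THEMES and s_layout in APPROVED_LAYOUTS:
            theme, layout = s_theme, s_layout

    # Accessibility needs override any adaptive choice.
    if profile.needs_high_contrast:
        theme = "high_contrast"
    return theme, layout
```

Rejecting anything outside the approved set is a deliberately blunt guardrail: it keeps the design system consistent, at the cost of ignoring novel suggestions until a designer adds them to the list.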

5) Collaboration and human–machine co‑creation

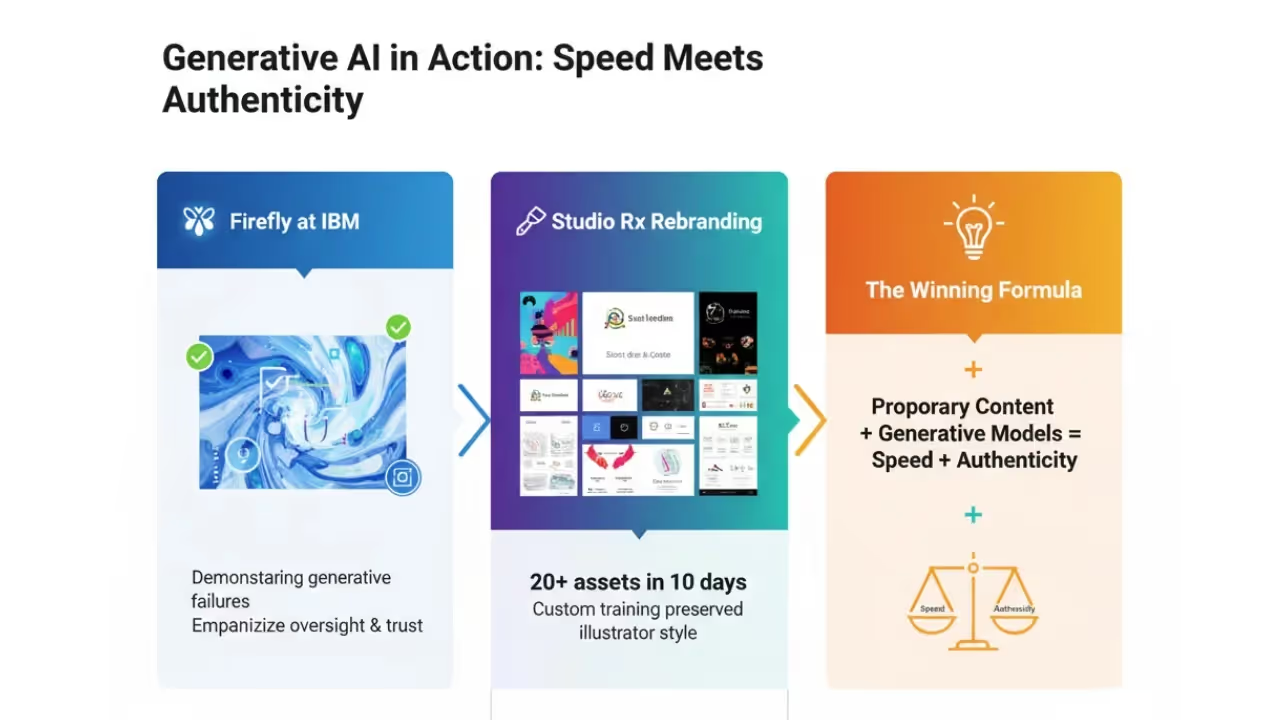

Successful projects treat algorithms as collaborators. The Firefly case studies illustrate this dynamic. IBM’s “Trust What You Create” campaign used generative models to visualise AI pitfalls while the creative team maintained oversight, emphasising safety and accuracy. Studio Rx used Firefly and custom training to turn hand‑drawn characters into polished assets, producing more than 20 high‑quality visuals in ten days while retaining the artist’s style. These stories show that when human intent guides the tool, output quality and authenticity remain high.

Co‑creation also changes team dynamics. Designers become prompt engineers, curators and editors. Product managers must understand how to harness generative output without derailing strategy. Engineers build the infrastructure to integrate models into the pipeline. Creating a shared vocabulary and process is as important as choosing the right tools.

Applying artificial intelligence in startups

Artificial intelligence is becoming a baseline expectation for venture‑backed companies. HubSpot’s 2025 survey of startup statistics reports that there are over 70,000 AI‑driven companies worldwide, and that these businesses accounted for more than 70 percent of venture capital activity in the first quarter of 2025. AI‑native firms achieve around $3.48 million revenue per employee, six times higher than other SaaS businesses, operate with 40 percent smaller teams and reach unicorn status a year faster. For early‑stage founders, the question isn’t whether to use algorithms but where to apply them.

My guidance is to align tool adoption with your product strategy:

- Ideation vs production vs optimisation. Identify the main bottleneck in your design process. If your team struggles to generate concepts, start with generative brainstorming. If asset production is the slowest step, focus on automation tools.

- Build vs buy. Off‑the‑shelf platforms like Firefly, Midjourney or AdCreative can deliver instant value. Building custom models may be justified if you have unique data or specific brand needs, but this demands significant investment and machine learning expertise.

- Return on effort. Begin with one or two high‑impact use cases. HubSpot’s data indicates that focusing on a few experiments leads to better outcomes than chasing every new capability.

Workflow integration

Integrating models into your process means changing how people work together. From experience, successful teams do the following:

- Write better briefs and prompts. Clear intent yields better outputs. The poster designer in Adobe’s case adjusted prompts every few rounds to converge on the desired style.

- Keep humans in the loop. Designers curate, edit and refine. This ensures consistency and avoids the randomness that currently plagues end‑to‑end generators.

- Collaborate across functions. Product, design and engineering need a shared understanding of how generative outputs map to requirements and technical constraints.

- Plan the tool stack. Decide which platforms handle ideation, production and analytics. For instance, you might use Firefly for concept images, Figma plug‑ins for layout automation and Amplitude or Mixpanel for performance data.
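Clear briefs are easier to keep consistent when the same fields turn into a prompt the same way every time. This Python sketch renders a structured brief as one text prompt; the field names and wording are hypothetical and not tied to any particular model’s prompt syntax.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DesignBrief:
    """Structured brief; every field name here is a hypothetical example."""
    subject: str
    audience: str
    style: str
    output_format: str
    must_include: List[str] = field(default_factory=list)
    avoid: List[str] = field(default_factory=list)


def brief_to_prompt(brief: DesignBrief) -> str:
    """Render the brief as a single text prompt, always in the same order."""
    parts = [
        f"{brief.subject}, for {brief.audience}",
        f"style: {brief.style}",
        f"format: {brief.output_format}",
    ]
    # Optional sections are only added when the brief provides them.
    if brief.must_include:
        parts.append("must include: " + ", ".join(brief.must_include))
    if brief.avoid:
        parts.append("avoid: " + ", ".join(brief.avoid))
    return "; ".join(parts)


if __name__ == "__main__":
    brief = DesignBrief(
        subject="Hero illustration of a person planning a trip",
        audience="first-time users of a travel app",
        style="flat vector, warm palette",
        output_format="16:9 landscape",
        must_include=["empty space on the left for a headline"],
        avoid=["text inside the image"],
    )
    print(brief_to_prompt(brief))
```

Keeping the brief as data means that when a designer adjusts prompts between rounds, as the poster designer in Adobe’s case did, the change shows up as an edited field rather than a rewritten paragraph.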

Case studies and lessons

1) Firefly at IBM and Studio Rx: IBM’s campaign demonstrating generative failures used Firefly to create imaginative visuals while emphasising oversight and trust. Studio Rx’s rebranding project produced over twenty assets in ten days with custom training and still maintained the illustrators’ style. The lesson? Combine proprietary content with generative models to balance speed and authenticity.

2) Paramount+ fan engagement: For the release of the film IF, the streaming service invited fans to describe imaginary friends; designers then generated over seventy custom illustrations in minutes, each consistent with the movie’s sketchbook aesthetic. This is a clear example of personalised content at scale.

3) AdCreative.ai for advertising: Marketing teams we’ve advised have used this tool to produce dozens of banner variations, test them and quickly swap out underperforming versions. Combined with analytics, this approach can lift conversion rates without adding headcount.
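Swapping out underperforming banners is a statistics question: is a variant’s click‑through rate genuinely lower, or just noisy? A minimal Python sketch, assuming you can export impressions and clicks per variant, compares each variant with the current leader using a pooled two‑proportion z‑test. The 0.05 threshold is a conventional default, not a recommendation from the tools named above.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Variant:
    name: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks divided by impressions."""
        return self.clicks / self.impressions if self.impressions else 0.0


def two_proportion_p_value(a: Variant, b: Variant) -> float:
    """Two-sided p-value for a CTR difference (pooled two-proportion z-test)."""
    if a.impressions == 0 or b.impressions == 0:
        return 1.0  # no data, so no evidence of a difference
    pooled = (a.clicks + b.clicks) / (a.impressions + b.impressions)
    se = math.sqrt(pooled * (1 - pooled) * (1 / a.impressions + 1 / b.impressions))
    if se == 0:
        return 1.0
    z = (a.ctr - b.ctr) / se
    # P(|Z| >= |z|) for a standard normal Z.
    return math.erfc(abs(z) / math.sqrt(2))


def underperformers(variants: List[Variant], alpha: float = 0.05) -> List[str]:
    """Names of variants whose CTR is significantly below the current leader."""
    best = max(variants, key=lambda v: v.ctr)
    return [
        v.name
        for v in variants
        if v is not best and two_proportion_p_value(best, v) < alpha
    ]
```

The normal approximation needs reasonably large click counts, and checking results repeatedly while a test runs, or comparing many variants at once, inflates false positives. Treat a flag as a prompt for a human decision rather than an automatic removal.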

Together, these stories illustrate how AI platforms in the creative design process can free up human talent for higher‑impact work. By offloading repetitive production tasks and augmenting ideation, they let small teams punch above their weight.

Benefits for startups

When used thoughtfully, algorithms offer concrete advantages:

- Speed. Generative brainstorming eases the blank‑canvas problem. Automation features remove repetitive tasks like layer naming, letting designers focus on bigger questions. According to Adobe’s design team, ideation phases can produce hundreds of images in hours rather than dozens over days.

- Scale. Personalisation at volume becomes possible. Firefly allowed Paramount+ to generate more than seventy unique fan illustrations in minutes. AdCreative platforms generate multiple ad variants with little manual labour.

- Efficiency. HubSpot notes that 80 percent of early‑stage SaaS startups use artificial intelligence tools, and 61 percent of those companies report profitability compared with 54 percent of non‑AI users. Automating low‑value tasks contributes directly to cost savings.

- Innovation. The IDEO study shows that algorithmic prompts can spark more ideas, and more varied ones. By exposing teams to unexpected combinations, generative models broaden the creative palette.

Risks and pitfalls

No tool is a cure‑all. Beware of the following:

- Loss of voice. Relying too much on generated content can flatten your brand’s unique tone. Always curate and adjust outputs.

- Ethical and IP issues. Designers interviewed by the Nielsen Norman Group worried about copyright risks when using generated assets. Choose tools whose training data is licensed, confirm you have rights to what you publish, and be transparent with clients about how assets are produced.

- Quality control. Models can hallucinate or produce inconsistent layouts. Human oversight is essential.

- Complex integration. Introducing new tools requires training, new processes and possibly new roles such as prompt engineers. Budget both time and money for this change.

A framework for implementation

Here is a practical, startup‑friendly approach for weaving AI into your organisation’s creative design process.

1) Assess readiness

Start by evaluating your current workflow. Where do projects stall? Is ideation slow? Are designers spending hours exporting assets for every ad platform? Identify the pinch points and estimate the potential impact of automation. If your bottleneck is concept generation, generative brainstorming may help. If production is slow, automation tools could yield quick wins. Use data to prioritise; for example, track the time spent on each stage and gather feedback from the team.
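Time tracking does not need special tooling to be useful. Assuming each designer logs entries as (stage, hours) pairs, for example from a spreadsheet export or a time tracker, this Python sketch totals time per stage and ranks the stages so the biggest pinch point comes first. The stage names are whatever your team already uses.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def hours_by_stage(entries: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Sum logged hours per workflow stage."""
    totals: Dict[str, float] = defaultdict(float)
    for stage, hours in entries:
        totals[stage] += hours
    return dict(totals)


def rank_bottlenecks(entries: Iterable[Tuple[str, float]]) -> List[Tuple[str, float, float]]:
    """Stages sorted by total hours, each with its share of all logged time."""
    totals = hours_by_stage(entries)
    grand_total = sum(totals.values())
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [
        (stage, hours, hours / grand_total if grand_total else 0.0)
        for stage, hours in ranked
    ]
```

Raw hours only show where time goes, not whether automation would help there; pair the ranking with the team feedback mentioned above before choosing a use case.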

2) Choose the right use cases

Avoid boiling the ocean. Pick one or two areas where artificial intelligence clearly adds value.

- Idea sketches. Use text‑to‑image models to generate rough compositions for moodboards.

- Style transfer. When you have a specific aesthetic in mind, style‑transfer tools apply it consistently across assets.

- Automation of variants. For ads or social posts, use creative‑automation platforms to produce multiple layouts and test them.

- Data‑driven adjustments. Implement analytics that feed back into design decisions; for example, adjusting button placement based on click‑through rates.
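Testing something like button placement only works if each user sees the same variant on every visit. A common approach is to hash a stable user ID together with an experiment name. This Python sketch assumes such an ID exists; the experiment and variant names in the usage are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(user_id: str, experiment: str, variants: Sequence[str]) -> str:
    """Deterministically map a user to one variant of an experiment."""
    if not variants:
        raise ValueError("need at least one variant")
    # Including the experiment name means a user's bucket in one experiment
    # does not determine their bucket in another.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an unsigned integer and reduce it to an index.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]
```

A call such as `assign_variant("user-42", "checkout-button-placement", ["above_fold", "below_summary"])` always returns the same label for that user. Because the assignment is a pure function, no lookup table needs to be stored.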

3) Select tools and build your process

Pick platforms that match your priorities:

- Ideation tools: Firefly, Midjourney, DALL‑E or open‑source diffusion models for concept generation.

- Automation tools: Figma plug‑ins for layer renaming and copy rewriting; Khroma for colour palettes; AdCreative.ai for generating ad variants.

- Visualisation tools: Firefly or custom models integrated with Photoshop for expanding images or changing aspect ratios.

- Analytics: Tools like Mixpanel, Heap or internal dashboards to monitor user behaviour and design performance.

Define roles in this new workflow. A designer or prompt engineer crafts requests to the model. The model generates options. The designer then refines and selects. Engineers build integration hooks, and product managers make sure the output aligns with the customer problem.

4) Close the loop with data

To make progress, you must measure. Establish metrics relevant to your design goals: conversion rates, engagement, time on task or qualitative feedback. Use these metrics to adjust both your designs and your prompts. The 2025 Stanford AI Index reports that private investment in generative models grew 18.7 percent from 2023 to 2024 and that 78 percent of organisations used some form of artificial intelligence in 2024. This trend means more tools will be available, but also more noise. Data‑driven iteration helps separate signal from hype.
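The simplest way to close the loop is to record the same metrics before and after a change and compare them. A minimal Python sketch, assuming you already collect each metric as one number per period; the metric names are hypothetical, and the caller states which ones should go down.

```python
from typing import Dict, Set, Tuple

# Metrics where a decrease counts as an improvement (hypothetical names).
LOWER_IS_BETTER: Set[str] = {"hours_per_asset", "time_on_task_s"}


def relative_change(before: float, after: float) -> float:
    """Fractional change from the baseline, e.g. -0.25 means 25 percent lower."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before


def compare_periods(
    before: Dict[str, float],
    after: Dict[str, float],
    lower_is_better: Set[str] = LOWER_IS_BETTER,
) -> Dict[str, Tuple[float, bool]]:
    """For each metric present in both periods: (relative change, improved?)."""
    report: Dict[str, Tuple[float, bool]] = {}
    for metric, baseline in before.items():
        if metric not in after:
            continue  # skip metrics that were not collected in both periods
        change = relative_change(baseline, after[metric])
        improved = change < 0 if metric in lower_is_better else change > 0
        report[metric] = (change, improved)
    return report
```

A before‑and‑after comparison cannot separate the effect of a new tool from seasonality or other changes shipped at the same time; where traffic allows, a controlled A/B test gives stronger evidence.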

5) Governance and ethics

Responsible use is non‑negotiable. Consider these guidelines:

- Intellectual property. Verify that your vendors train models on licensed data. Avoid models that scrape protected works without permission. The 2024 NN/G study highlighted practitioners’ ethical concerns about using content derived from unlicensed material.

- Human oversight. Do not “set and forget” generative systems. Always review outputs for accuracy and alignment with your brand and values.

- Bias and inclusivity. Generative outputs can mirror biases in training data. Build review loops to ensure representation. IBM’s campaign about generative pitfalls reminds us that quality and trust must coexist.

Emerging trends and the road ahead

Evolving technologies

Expect to see more sophisticated models that combine language, vision and interaction, enabling truly responsive interfaces. Small models are becoming more efficient; the Stanford AI Index notes that the inference cost of a system performing at GPT‑3.5 level fell more than 280‑fold between November 2022 and October 2024. As inference costs drop and open‑weight models close the performance gap, high‑quality generative tools will be accessible to smaller teams.

Style‑transfer and image‑expansion capabilities are also maturing. Designers will mix human sketches with algorithmic textures, producing hybrid aesthetics. Tools like Firefly’s Generative Expand let you reframe compositions in seconds. New plugins and models are likely to make real‑time adjustments possible.

Implications for design and product leadership

Skill requirements are shifting. Designers now need to craft prompts, understand model behaviour and interpret probabilistic outputs. They must also become comfortable with data analysis, since performance metrics feed back into design decisions. Product leaders should encourage experimentation, but anchor it in strategy. Coaching teams on ethics and bias will remain critical.

What startups should watch

- Tools geared toward lean teams. Many companies are launching products specifically for startups—offering free tiers, integrations with common design tools and simplified interfaces.

- Competitive advantage. Early adopters of AI design tools can differentiate through faster cycles and personalized experiences. The hype around generative features will settle; what remains will be a set of practical capabilities that you can build into your differentiation.

- Policy and regulation. The AI Index reported that U.S. federal agencies introduced 59 AI‑related regulations in 2024, more than double the number in 2023. Expect more rules around training data, disclosure and use of generated content. Stay informed and adjust your practices accordingly.

When evaluating the future of AI in the creative design process, remember that the goal isn’t to chase every new tool. Focus on approaches that help you solve real user problems while maintaining creative integrity.

Conclusion

The debate about AI in the creative design process often swings between hype and fear. The reality is more nuanced. Tools that generate images, text and layouts extend what small teams can achieve; they free up designers to focus on strategy and craft, and they let startups test ideas quickly. At the same time, these models rely on existing patterns and data, so human judgment and editing are essential. As the Nielsen Norman Group observed in 2025, narrow‑scope features like automated layer naming and copy rewriting deliver genuine value, but fully automatic design remains out of reach.

For founders, product managers and design leaders, the opportunity lies in thoughtful adoption: choose specific pain points, start small, measure outcomes and keep ethical guardrails in place. With that mindset, AI becomes a partner in your creative design process rather than a shortcut. I encourage you to experiment, learn and adapt. As generative models improve and costs fall, your ability to build delightful products will only grow, provided you continue to lead with human creativity.

FAQ

1) What exactly does “AI in the creative design process” mean?

It refers to using generative models, machine learning and data‑driven systems to support tasks like brainstorming, prototyping, production and optimisation within design workflows. These tools extend the capacity of designers rather than replace them.

2) Will machines replace human designers?

No. Current research suggests that design‑specific tools are still inconsistent and that practitioners rely on human curation and editing. They are tools, not substitutes.

3) What are good first use cases for startups?

Generating rough visuals from text prompts, automating repetitive tasks like layer naming or asset resizing, and producing multiple ad variants are common entry points. These deliver quick wins with minimal risk.

4) How do I choose which tools to use?

Match the tool to your bottleneck. Use generative platforms like Firefly or Midjourney for ideation; automation features in Figma for production; and analytics platforms to close the feedback loop. Prioritize ease of use and data transparency.

5) What risks should I be aware of?

Over‑reliance can erode your brand’s voice, and there are unresolved legal questions around training data. Always review outputs and ensure you have rights to what you publish. Address bias through human oversight.

6) How do I measure success?

Define metrics that matter: time saved, number of concepts explored, conversion rates, engagement or feedback quality. Compare performance before and after adopting generative tools, and adjust accordingly.

7) What design skills will become more important?

Prompt writing, data literacy and ethical judgment will join core visual and interaction skills. Designers who can combine intuition with analytical thinking will thrive.

8) How do we integrate these tools into our workflow?

Start with one project. Write clear prompts, generate options, curate the results and measure the impact. Collaborate across design, product and engineering to embed the process, and refine your approach based on feedback. Keep human judgment at the centre.
