AI‑Powered Prototyping Tools: Guide (2026)

Discover AI‑powered prototyping tools that accelerate design, generate mockups, and improve user testing.

Prototyping used to be a slow, costly step in product development. Teams would sketch, iterate and code for weeks before learning whether users even cared. AI‑powered prototyping tools change that calculus: tools such as Bolt, v0 and Banani can turn a plain idea into a working app within hours. For founders and design leaders at early‑stage start‑ups, this speed is more than a novelty; it is a survival skill. This article explains what these tools are, why they matter, how to use them, and which options fit different roles.

Quick Take

AI‑powered prototyping tools let early‑stage teams turn an idea into a working demo in hours. This guide explains what these tools do, where they help, how to fit them into your workflow and which options work best for founders, designers and engineers.

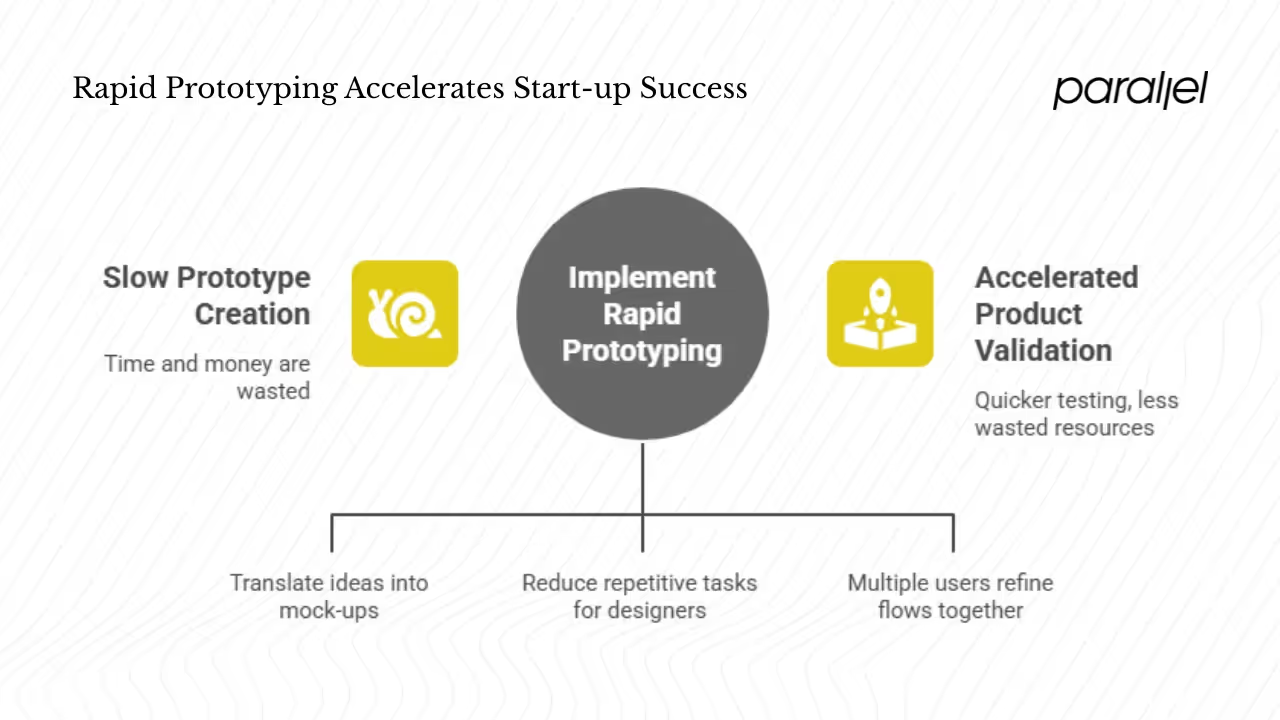

Why do rapid prototyping tools matter for start‑ups?

Traditional prototypes require multiple hand‑offs between product, design and engineering. Each iteration costs time and money, and by the time a usable mock‑up exists, the market may have shifted. Rapid, machine‑assisted tools address these issues by translating plain‑language descriptions or rough sketches into high‑fidelity mock‑ups and even working code. A designer in a Medium essay asked, “What if you could take an idea… and create a working prototype — all in just an hour?” Newer tools deliver on that promise: comparative tests found that teams can build usable prototypes in hours instead of weeks. Nielsen Norman Group’s evaluation shows that when prompts include a hand‑drawn sketch or a Figma frame, the resulting mock‑ups match the intended design more closely.

For founders and product managers, this acceleration means quicker validation and fewer resources wasted on ideas that will not connect with users. They can test assumptions, gather feedback and make go/no‑go decisions while conserving capital. For design leaders, automated layout generation and code output reduce repetitive tasks and free up capacity for strategic thinking. Machine learning models can build entire screens, generate layout options and even simulate interactions. They also process uploaded sketches or design references to improve accuracy. Because many tools run in the cloud, multiple users can refine flows together. For early‑stage teams that might not have a dedicated design system yet, the ability to create interactive prototypes with minimal code can mean the difference between shipping a product and missing the window.

Important concepts and terminology

These tools combine machine learning, natural‑language processing, code generation and design patterns. Understanding the vocabulary helps teams pick the right features:

- Design automation: Algorithms generate layouts and user‑interface components from plain language. Banani lets you describe a screen or flow and presents multiple high‑fidelity options side by side.

- Concept visualization: Turning ideas or sketches into visual mock‑ups quickly. Nielsen Norman Group found that adding a hand‑drawn wireframe or Figma link to a prompt leads to more accurate outputs.

- User interface simulation: Simulating screen behaviours without full implementation. Tools like Figma Make generate interactive flows from prompts, handling states and transitions automatically.

- Interactive prototypes: Clickable prototypes, generated with machine learning, that go beyond static mock‑ups. They let users click through flows and experience the product before any production code exists.

- Machine learning integration: The algorithms that power these tools. They are trained on millions of existing websites and UI patterns, which explains why outputs often follow mainstream conventions.

- Rapid prototyping: Compressing the time from idea to working prototype. In one experiment a simple memo‑sharing app was built in about an hour; in another, a Slack‑like messenger was built in hours.

- High‑fidelity mock‑ups: Polished screens created by the tool. Banani adapts your brand style when you upload a reference screenshot.

- Usability testing: Using prototypes to gather feedback. Machine‑generated screens still need real user testing because automated designs can miss subtle hierarchy and spacing.

- Collaborative design: Allowing design, product and engineering to work together. Cloud environments like v0 and Lovable allow multiple stakeholders to edit and test at the same time.

- Automation workflows: Embedding machine‑assisted steps into your process. Figma Make automates connecting frames and generating interactions.

What an AI‑powered prototyping workflow looks like

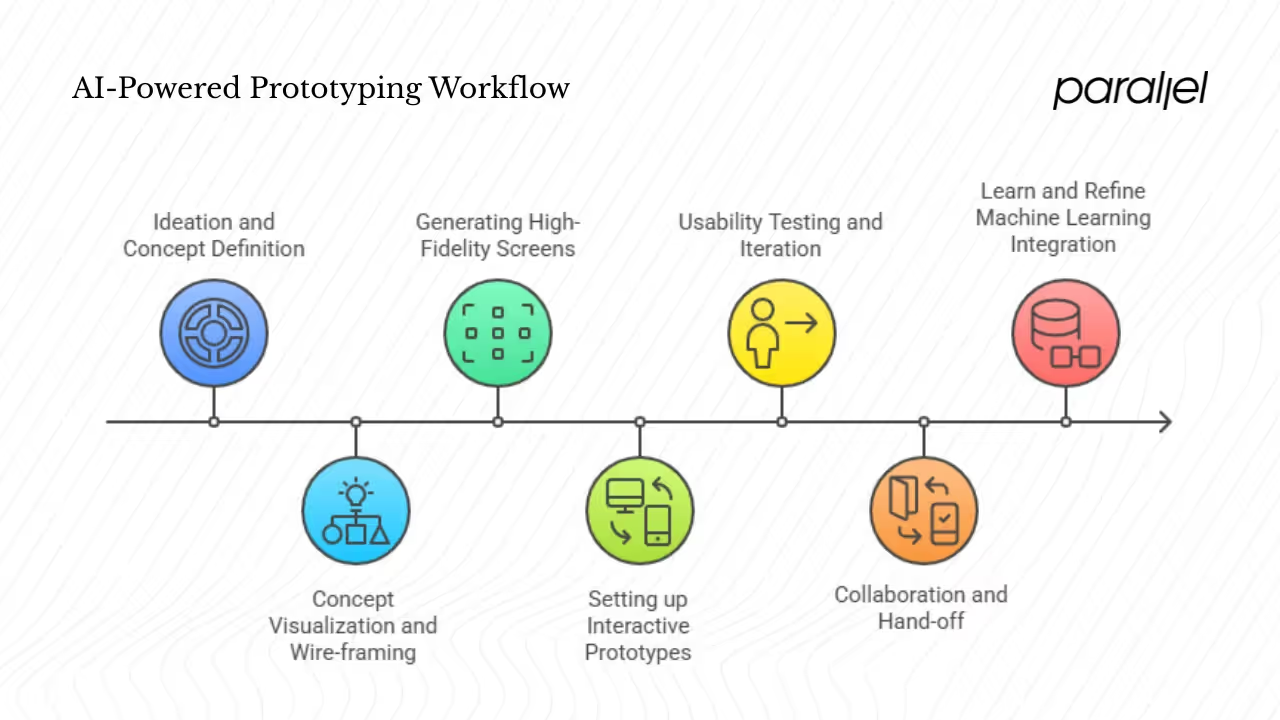

While every team’s process varies, a typical workflow for early‑stage startups using AI‑powered prototyping tools follows these steps:

1. Ideation and concept definition. Start with a clear product requirement document (PRD) or user flow. In a widely shared experiment, a designer used the Claude chatbot to draft a PRD describing core flows for a memo‑sharing app. The PRD spelled out pages and actions — write a memo, browse memos, interact with posts, view profiles — and served as the input for the prototyping tool.

2. Concept visualization and wire‑framing. Break down the PRD by page and feed pages into a wire‑frame generator. The same Medium experiment used UX Pilot’s Figma plugin to generate multiple wire‑frame options for each page. Alternatively, sketch on paper, take a photo and upload it — the Nielsen Norman Group found that prompts with attached images or a Figma link produce more accurate outputs.

3. Generating high‑fidelity screens. Once wire‑frames are chosen, use design automation to create polished screens. Banani lets you describe a screen or flow and produces multiple high‑fidelity options; it even adapts to your brand when you provide a reference image. MagicPatterns focuses on fitting generated screens into the design system already in your codebase.

4. Setting up interactive prototypes. Link screens and simulate behaviours. According to Lenny’s Newsletter, modern tools convert a sketch or PRD into a working app without coding. Figma Make’s machine‑learning toolkit generates complete interactive flows from a text description, creates states for components and suggests animations. Tools like v0 produce actual React code with interactions, while cloud environments like Lovable spin up back‑end services and authentication.

5. Usability testing and iteration. Use the prototype to test user flows early. Even though AI tools can get you close, they can miss subtle design details — the Nielsen Norman Group observed issues like poor color contrast, inconsistent spacing and lack of hierarchy in machine‑generated screens. Test with real users, collect feedback and adjust the prompts or edit the designs manually. Use this phase to refine copy, flows and interactions.

6. Collaboration and hand‑off. Decide how the prototype will connect with development. Some tools export production‑ready code: v0 outputs clean React components using shadcn/ui, MagicPatterns fits your existing tokens and components, and Replit lets you build full‑stack apps using JavaScript or Python. Others, like Figma Make, live entirely inside the design environment; you will still need to recreate the interactions in your codebase. Decide early whether the goal is a throwaway prototype for learning or a foundation for production.

7. Refine the process and plan machine learning integration. Over time, feed real user data and analytics back into your process. If your prototype includes machine learning features, treat them as a product in their own right: they need the right data pipeline and evaluation metrics. Consider responsible AI practices as well. Farsight, a tool from Georgia Tech, alerts prototypers when their prompts could be harmful and encourages them to think through affected stakeholders and potential harms. In a study with 42 prototypers, participants identified potential harms better after using Farsight.
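The first three steps hinge on turning a PRD into page‑level prompts. The sketch below is one hypothetical way to keep that structured, assuming a PRD can be modeled as a list of pages, each with the actions a user takes there. The `Page` and `PRD` classes, the `build_page_prompt` and `build_prompts` functions and the prompt wording are all illustrative assumptions, not any tool's API. Each page gets its own detailed prompt, optionally noting an attached sketch or Figma frame, since Nielsen Norman Group found that attached references improve accuracy.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Page:
    """One screen from a PRD: its name and the actions a user can take there."""
    name: str
    actions: list[str]
    reference_image: Optional[str] = None  # e.g. a photo of a paper sketch (hypothetical)


@dataclass
class PRD:
    """A deliberately minimal PRD: what the product is, who it is for, and its pages."""
    product: str
    audience: str
    pages: list[Page] = field(default_factory=list)


def build_page_prompt(prd: PRD, page: Page) -> str:
    """Compose one detailed, page-level prompt (the wording is an illustrative assumption)."""
    lines = [
        f"Design the '{page.name}' screen for {prd.product}, used by {prd.audience}.",
        "The user must be able to:",
    ]
    lines += [f"- {action}" for action in page.actions]
    if page.reference_image:
        # Point the tool at the attached sketch or frame to anchor the layout.
        lines.append(f"Follow the layout in the attached reference: {page.reference_image}")
    return "\n".join(lines)


def build_prompts(prd: PRD) -> dict[str, str]:
    """Break the PRD down by page (step 2) and return one prompt per page name."""
    return {page.name: build_page_prompt(prd, page) for page in prd.pages}


if __name__ == "__main__":
    # Pages and actions follow the memo-sharing example; the audience is made up.
    memo_app = PRD(
        product="a memo-sharing app",
        audience="people who share short notes with friends",
        pages=[
            Page("Write memo", ["write a memo"], reference_image="write-memo-sketch.jpg"),
            Page("Browse memos", ["browse memos", "interact with posts"]),
            Page("Profile", ["view profiles"]),
        ],
    )
    for prompt in build_prompts(memo_app).values():
        print(prompt, end="\n\n")
```

Keeping the PRD as data makes it easy to regenerate every page prompt after a single change, which fits the test‑and‑refine loop in step 5.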

What good looks like and what to avoid

When these steps are followed, prototypes can quickly validate ideas. But over‑automation is a real risk. Generic prompts produce generic outputs, so take the time to refine your prompts. Upload reference designs for better accuracy. Avoid skipping usability research; machine‑learning tools can produce polished screens but not the insight that comes from watching a user struggle. Also, don’t rely on these tools to handle complex applications without human oversight; the Nielsen Norman Group warns that machine‑generated designs often lack subtle hierarchy and may default to minimalistic styles.

Choosing the right tool

Not every AI‑powered prototyping tool fits every team. When evaluating a solution, consider the following criteria:

- Speed and ease of use. How quickly can you go from a prompt to a working prototype? GoPractice’s comparison found that AI prototyping tools let teams move from concept to functional prototypes in hours rather than weeks.

- Fidelity of outputs. Are the screens polished enough for your purpose? Banani’s multiple high‑fidelity options and style adaptation suit design‑conscious teams; MagicPatterns ensures consistency with an existing design system.

- Interactivity. Does the tool support multi‑screen flows, component states and transitions? Figma Make generates complete flows and interactions from natural language. v0 and Lovable can build working apps with complex logic.

- Machine‑assisted features. Some tools focus on layout generation; others can spin up a back end and database. Decide whether you need just screens or an end‑to‑end application.

- Integration with existing tools. Can you export code to your development stack? v0 outputs React components, MagicPatterns aligns with your design tokens and Banani exports to Figma.

- Testing and feedback loops. Look for tools that make it easy to test prototypes with users. Some integrate with analytics or feedback services.

- Hand‑off and export options. Decide whether you want a throwaway prototype or a starting point for production. Figma Make remains in the design environment and requires reimplementation, while Lovable and v0 produce deployable code.

- Team collaboration features. Can multiple users edit and comment? Cloud environments like v0 and Lovable allow real‑time collaboration.

- Cost and scalability. Early‑stage startups need to balance features against budget. Some tools offer free tiers for experimentation; others charge per seat or per project.

- Proof points and case studies. Check whether the tool has been tested on real projects. The Banani review compares multiple tools and singles out Banani as the best overall for design ideas.

- Risks and limitations. AI tools can produce generic designs, misinterpret prompts or fail in complex environments. Nielsen Norman Group notes that prompts with less detail produce widely varying outputs and that machine‑generated designs often lack subtle hierarchy. Figma Make can’t connect to your real APIs, so prototypes built with it remain disconnected from production data.
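One way to make these criteria comparable across candidates is a simple weighted decision matrix. This is a generic evaluation technique, not something the sources above prescribe; the criterion keys, the weights, the 1–5 scores and the placeholder names "Tool A" and "Tool B" are assumptions a team would replace with the results of its own trials.

```python
# Hypothetical weights: how much each criterion matters to *your* team (they need not sum to 1).
WEIGHTS = {
    "speed": 3,
    "fidelity": 2,
    "interactivity": 2,
    "code_export": 1,
    "cost": 2,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of 1-5 scores; a criterion with no score counts as 0."""
    total_weight = sum(weights.values())
    return sum(weights[c] * scores.get(c, 0) for c in weights) / total_weight


def rank_tools(
    candidates: dict[str, dict[str, float]], weights: dict[str, float]
) -> list[tuple[str, float]]:
    """Return (tool, score) pairs sorted from best to worst weighted score."""
    ranked = [(name, weighted_score(s, weights)) for name, s in candidates.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Placeholder scores from a hypothetical internal trial, not published benchmarks.
    trial = {
        "Tool A": {"speed": 5, "fidelity": 3, "interactivity": 4, "code_export": 4, "cost": 3},
        "Tool B": {"speed": 3, "fidelity": 5, "interactivity": 3, "code_export": 2, "cost": 4},
    }
    for name, score in rank_tools(trial, WEIGHTS):
        print(f"{name}: {score:.2f}")
```

A matrix like this does not replace hands‑on trials; it just makes trade‑offs explicit, for example when a founder weights speed heavily and an engineer weights code export.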

Important tools and emerging players in 2025

The market for AI‑powered prototyping tools is growing. As an adjacent indicator, the global virtual prototype market was valued at USD 597.2 million in 2023 and is projected to grow at a compound annual growth rate of 14.2% from 2024 to 2030. Below is a snapshot of popular tools and what they offer.

Other notable options include Replit, which allows building full‑stack apps in JavaScript or Python, and MagicPath, which offers canvas‑based screen generation. The right tool often depends on your role: founders and product managers may prioritise speed and ease, design leads may care about polished visuals and brand alignment, and engineers may need tools that export production‑ready code.
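The market figure quoted at the start of this section can be made concrete with a few lines of arithmetic. The sketch below compounds the 2023 value at 14.2% a year. It assumes seven annual compounding periods from the 2023 base to 2030, which may not match the report's own method, so the result (roughly USD 1.5 billion) illustrates how a CAGR works rather than restating the report's forecast.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual rate: base * (1 + cagr) ** years."""
    return base * (1 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Invert the projection: the constant annual rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    base_2023 = 597.2  # USD millions, the figure quoted in the text
    # Assumption: seven compounding periods (2023 -> 2030); the report may count differently.
    value_2030 = project_value(base_2023, 0.142, years=7)
    print(f"Implied 2030 value: about USD {value_2030:,.0f} million")
```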

Benefits and considerations for start‑up teams

The appeal of AI‑powered prototyping tools is clear. They compress the time from idea to working prototype and lower costs. A comparative study found that machine‑assisted tools let teams build functional prototypes in hours rather than weeks, and a simple memo‑sharing prototype was built in about an hour. By automating layout and code generation, these tools reduce manual design and development overhead. They also shorten iteration cycles: you can test an idea with users, adjust your prompt, regenerate screens and test again, all within a single day. Because many tools are cloud‑based, they help design, product and engineering teams work together and reduce friction at hand‑off.

There are also important considerations. High‑fidelity outputs may still miss fine details; the Nielsen Norman Group observed problems with hierarchy, spacing and color contrast in machine‑generated designs. Tools often default to generic styles because they are trained on common patterns. Over‑reliance on automated prototypes can tempt teams to skip user research. Machine‑learning features need a proper data foundation and can introduce ethical risks; Farsight encourages developers to consider potential harms and stakeholders. Team readiness matters: designers may need to adapt to new workflows, and developers must decide whether to integrate or rebuild machine‑generated code. Tool lock‑in is another risk, since prototypes built in one environment may not transfer easily to another. Finally, prototypes are not substitutes for production systems; Figma Make’s outputs don’t connect to real APIs or authentication, so additional engineering work is still required.
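Of the issues Nielsen Norman Group observed, color contrast is the one that can be checked mechanically. The sketch below implements the WCAG 2.x contrast‑ratio formula (relative luminance of the lighter color plus 0.05, divided by that of the darker color plus 0.05) so a team can spot‑check text and background colors taken from a generated screen. The function names and the example hex values are illustrative; a real accessibility audit covers far more than this one check.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#rrggbb' color, from 0 (black) to 1 (white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1:1 (identical colors) to 21:1 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """WCAG 2.x level AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    # Example pairs of the kind a generated screen might use: light grey text on white.
    for fg, bg in [("#777777", "#ffffff"), ("#767676", "#ffffff")]:
        verdict = "passes AA" if passes_aa(fg, bg) else "fails AA"
        print(f"{fg} on {bg}: {contrast_ratio(fg, bg):.2f}:1, {verdict}")
```

Passing this check does not fix hierarchy or spacing; those still need a designer's eye and real usability testing.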

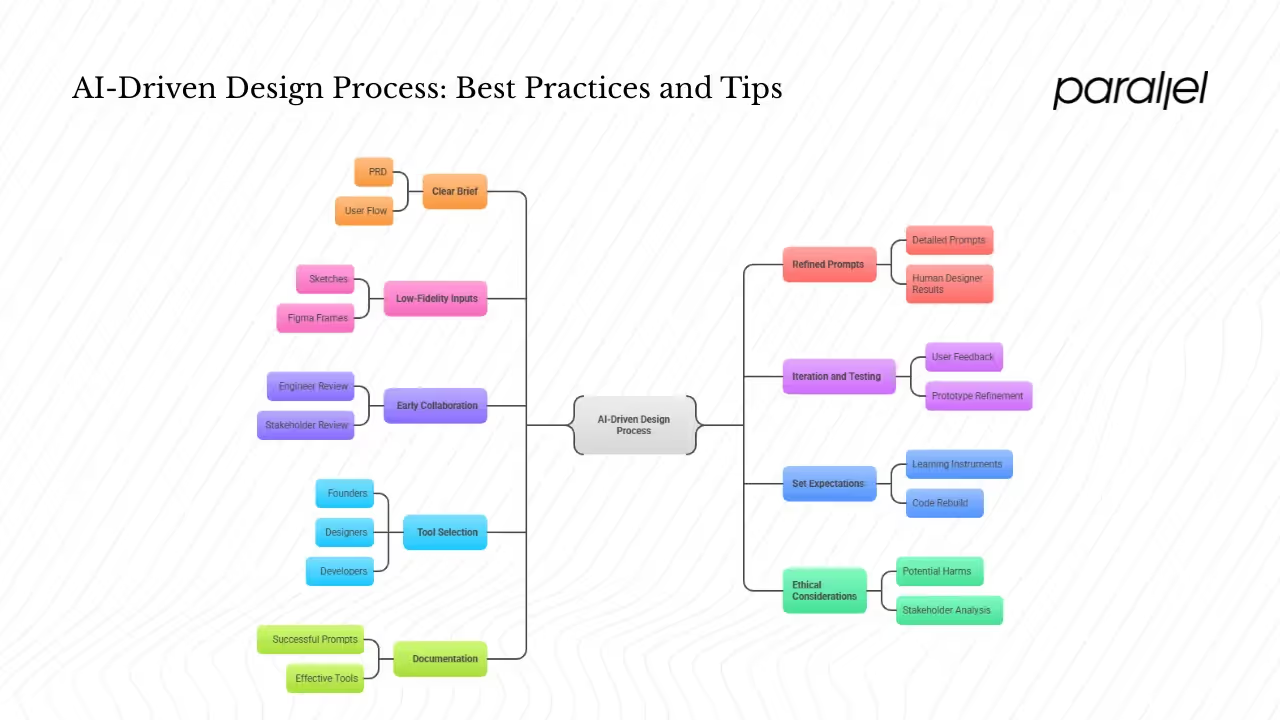

Practical tips and best practices

Here are some guidelines drawn from research and experience:

- Start with a clear brief. Draft a concise PRD or user flow before opening any tool. In the Medium experiment, the designer first asked Claude to generate a PRD and then simplified it to fit the project.

- Refine your prompts. Detailed prompts tend to yield better outputs. Nielsen Norman Group found that clear, detailed design requirements consistently produced results closer to those of human designers, while less detailed prompts produced widely varying outputs.

- Use sketches or low‑fidelity inputs. Uploading a sketch or Figma frame improves accuracy. Even a photo of a drawing can guide the tool.

- Iterate and test. Use the prototype with real users, observe where they struggle and refine. AI tools can get you close, but human feedback reveals what matters. Don’t be afraid to regenerate screens or adjust your prompts.

- Collaborate early. Invite engineers and stakeholders to review prototypes. Tools like v0 and Lovable allow shared editing, which reduces surprises at hand‑off.

- Set expectations. Prototypes are learning instruments, not final products. Figma Make prototypes remain inside the design environment and must be rebuilt in code. Communicate this to avoid false assumptions.

- Choose tools based on maturity and need. Founders may prefer fast, full‑stack tools like Lovable. Designers may opt for Banani to get polished visuals. Developers might pick v0 or Replit for production‑quality code.

- Keep ethics in mind. Use resources like Farsight to surface potential harms and think through stakeholders. Responsible design should accompany speed.

- Document what works. As you experiment, record which prompts, tools and hand‑offs succeed. This institutional knowledge will help your team refine its process.
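The last tip can start as something very small: a shared log of prompt experiments. The sketch below assumes a hypothetical record format (tool, prompt version, whether a reference image was attached, and how many test participants completed the target flow) and pools completion rates per prompt version. The `PromptTrial` class, `completion_by_prompt` function, field names and sample entries are illustrative, not drawn from any study or tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PromptTrial:
    """One hypothetical prototyping experiment, recorded after a usability session."""
    tool: str
    prompt_version: str
    used_reference_image: bool
    participants: int
    completed_flow: int  # participants who finished the target flow
    notes: str = ""


def completion_by_prompt(trials: list[PromptTrial]) -> dict[str, float]:
    """Pool trials per prompt version and return the share of participants who completed the flow."""
    done: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for t in trials:
        done[t.prompt_version] += t.completed_flow
        total[t.prompt_version] += t.participants
    # Skip versions with no participants to avoid dividing by zero.
    return {v: done[v] / total[v] for v in total if total[v] > 0}


if __name__ == "__main__":
    # Made-up entries showing the format, not real results.
    log = [
        PromptTrial("tool-x", "v1-short", False, participants=5, completed_flow=2),
        PromptTrial("tool-x", "v2-detailed", True, participants=5, completed_flow=4,
                    notes="attached a photo of the paper sketch"),
    ]
    for version, rate in completion_by_prompt(log).items():
        print(f"{version}: {rate:.0%} completed the flow")
```

With samples this small the numbers are directional at best; the value lies in having a record the team can revisit when choosing prompts and tools.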

Case study snapshots

From idea to prototype in an hour: In a Medium article, designer Xinran Ma showed how a simple memo‑sharing app could be prototyped quickly. She drafted a PRD using Claude, generated wire‑frames with a Figma plugin and then used Bolt to build the prototype. Within about an hour she had a working web prototype that could be scanned on a phone and shared via a URL. The experiment illustrates the full workflow: clear requirements, prompt refinement, design generation and rapid testing. It also highlights the need to simplify prompts and iterate, as the initial PRD was too detailed.

Comparative testing across tools: GoPractice tested seven popular tools — Lovable, Bolt, Replit, v0, Tempo Labs, Magic Patterns and Lovable Agent Mode — by asking each to build a Slack‑like messenger using the same screenshot and natural‑language prompts. Their test measured how quickly a prototype could be assembled, how convenient the interface was and what advantages or disadvantages each tool had. The main takeaway: machine‑assisted prototyping compresses time from weeks to hours. Some tools excelled at generating fully functional back ends, while others produced cleaner interfaces but required more manual correction. The details were behind a free login, but the public overview shows that matching tool capabilities to project goals is crucial.

Responsible prototyping with Farsight: Georgia Tech’s Farsight tool teaches developers responsible approaches to working with language‑model‑driven prototypes. It alerts prototypers when a prompt could be harmful and shows news incidents and potential misuse cases. In a user study of 42 prototypers, participants who used Farsight were better at identifying potential harms. By placing ethical guidance within the prototyping workflow, Farsight demonstrates how responsible design can coexist with rapid iteration.

The future of AI‑powered prototyping

As machine learning and design tools mature, the gap between prototype and production will continue to shrink. Algorithms will move from simply generating screens to simulating behaviour, connecting to real data and automating more of the back‑end. Tools like Figma Make already handle natural‑language interactions and state generation, while v0 and Lovable produce working code. Future tools may integrate user analytics directly into the prototyping environment, so that design iterations respond to real usage patterns.

Responsible design will also play a greater role. Farsight illustrates how in‑situ warnings and harm envisioning can help prototypers think through consequences. The IDEO research team suggests that experiential prototypes — using role‑play and imagined scenarios — can test emotional value before any technology is built. As generative models become more capable, designers must remain vigilant about bias, accessibility and the human impact of their creations.

For start‑ups, this means staying agile while adopting new tools. Focus on clear goals, user feedback and collaboration. Use machine‑assisted prototyping to test ideas quickly, but continue to invest in research, ethics and human‑centred design. With the right balance, AI‑powered prototyping tools can become a valuable ally rather than a gimmick.

Conclusion

Speed matters for early‑stage ventures. AI‑powered prototyping tools compress weeks of work into hours, giving founders and design leads the ability to test ideas, gather feedback and iterate rapidly. They automate much of the grunt work of design and coding, freeing teams to think strategically and enabling smoother collaboration. But speed without clarity is wasteful. The most successful teams start with a clear brief, refine their prompts, involve users early and maintain a healthy scepticism about machine‑generated outputs. As you experiment, you will also improve communication and coordination across product, design and engineering. By combining machine‑assisted prototyping with human judgment and ethical awareness, startups can turn ideas into products that connect with real people. Pick a tool that suits your role, set a small goal, such as validating a single user flow this week, and learn by doing.

FAQ

1) What is the best AI prototyping tool?

There is no single best tool for everyone. It depends on your role, workflow and fidelity needs. The Banani review notes that Banani is the best overall for design ideas, while v0 is ideal for complex interactions and MagicPatterns is great if you want prototypes aligned with your existing code. Founders may lean toward Lovable for its full‑stack capabilities, whereas designers might prefer Banani for its polished screens.

2) What is AI prototyping?

AI prototyping uses machine learning to automatically create interactive designs from simple text descriptions or sketches. Instead of manually connecting screens or coding interactions, you describe what you want in plain language and the tool generates screens, flows and even back‑end code.

3) How do you generate prototypes using AI?

Start with a clear requirement or user flow; draft a prompt detailing the screens and actions. Feed that prompt or a sketch into a prototyping tool. Use design automation to generate high‑fidelity screens, then link them into an interactive prototype. Test with users, refine your prompt and repeat. Some tools can export production‑ready code; others remain in the design environment and serve as a reference.

4) Is the Galileo tool worth it?

Galileo is one among many machine‑assisted prototyping tools. Whether it is worth your time depends on how well it fits your workflow, integration needs, interactivity requirements and budget. Evaluate Galileo the same way you would evaluate any tool: test its output quality, see whether it integrates with your design and code stack, examine how it handles complex flows and confirm that it aligns with your team’s process.
