AI for Qualitative Data Analysis: Guide (2026)

Explore AI tools for qualitative data analysis, enabling researchers to efficiently code and interpret unstructured data.

Every early‑stage team collects more feedback than it can easily digest: hours of interview audio, thousands of chat messages and survey responses quickly overwhelm even the most disciplined researcher.

That scaling problem is why founders, product managers and design leaders are turning to AI for qualitative data analysis—not as a magic wand, but as a way to sift through mountains of qualitative feedback. By applying machine‑learning and NLP to help code, cluster and visualise non‑numerical data, this approach uncovers patterns that might otherwise be missed.

This article explains how AI augments, rather than replaces, human analysis. It outlines a practical workflow, compares tools, weighs opportunities and risks, and offers a roadmap for early‑stage product and design teams. Purely quantitative analytics and synthetic user generation are out of scope.

Qualitative research and the traditional process

Qualitative research seeks to understand the “why” behind human behaviour. It relies on open‑ended methods such as in‑depth interviews, field observations, diaries, focus groups and user feedback. Classical qualitative analysis involves several steps: transcribing audio or video into text, open coding (creating labels for segments of data), axial coding (grouping codes into categories), selective coding (identifying core themes), clustering and memoing.

Researchers must interpret patterns, write memos, and validate findings with peers. This process is intellectually rewarding yet labour‑intensive and subjective. Inter‑coder reliability is hard to maintain, and scaling to hundreds of transcripts can take weeks.

A 2025 study in BMC Medical Informatics & Decision Making observed that computer‑assisted qualitative data analysis software (CAQDAS) saved 20–30% of the time typically spent on data management, storage and retrieval. Yet CAQDAS still requires manual coding. Enter AI/ML: researchers are applying machine‑learning to speed up transcription, suggest codes, cluster patterns and reduce bias. The goal is not to take humans out of the loop but to allow them to focus on synthesis and interpretation.

Machine learning, NLP and text mining for qualitative data

Understanding these fundamentals is critical because together they power AI for qualitative data analysis, enabling machines to parse and make sense of complex narratives.

Machine‑learning (ML) is a subfield of AI that enables computers to learn patterns from data without being explicitly programmed. It comes in two main flavours: supervised learning, where models learn from labelled examples (e.g., training a classifier to detect sentiment from tagged texts), and unsupervised learning, which finds structure in unlabelled data (e.g., clustering similar comments).
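To make the supervised case concrete, here is a minimal sketch of a multinomial Naive Bayes sentiment classifier in plain Python, trained on four hand‑labelled comments. The comments, labels and function names are invented for illustration; production tools train on far more data and typically use stronger models.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep runs of letters as tokens."""
    return re.findall(r"[a-z]+", text.lower())


def train(examples: list[tuple[str, str]]):
    """Learn label frequencies and per-label word counts from (text, label) pairs."""
    label_counts = Counter(label for _, label in examples)
    word_counts: dict[str, Counter] = defaultdict(Counter)
    vocab: set[str] = set()
    for text, label in examples:
        tokens = tokenize(text)
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return label_counts, word_counts, vocab


def predict(model, text: str) -> str:
    """Return the label with the highest log-posterior score."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = "", -math.inf
    for label, n_docs in label_counts.items():
        score = math.log(n_docs / total_docs)  # log prior
        n_words = sum(word_counts[label].values())
        for token in tokenize(text):
            # Laplace (add-one) smoothing so unseen words don't zero the score.
            score += math.log((word_counts[label][token] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


labelled = [
    ("The onboarding was confusing and slow", "negative"),
    ("I could not find the export button, very frustrating", "negative"),
    ("Setup was quick and the dashboard is great", "positive"),
    ("Love how easy it is to invite teammates", "positive"),
]
model = train(labelled)
print(predict(model, "onboarding felt confusing"))    # negative
print(predict(model, "great dashboard, easy setup"))  # positive
```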

Natural language processing applies ML to text. Fundamental techniques include tokenization (breaking text into words or tokens), embeddings (numeric vectors that capture semantic similarity), named‑entity recognition, part‑of‑speech tagging and parsing. These building blocks feed into tasks like topic modelling, association rule mining, and sentiment analysis.
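The idea behind embeddings, turning text into vectors whose closeness reflects similarity, can be illustrated with a much cruder stand‑in. The sketch below tokenizes comments, drops a small hand‑picked stopword list, builds bag‑of‑words count vectors and compares them with cosine similarity. It is a toy under stated assumptions: learned embedding models produce dense vectors and would also rate "signup" and "registration" as similar, which plain word counts cannot.

```python
import math
import re
from collections import Counter

# A tiny, hand-picked stopword list (illustrative only).
STOPWORDS = {"the", "a", "an", "is", "was", "and", "to", "it", "i", "of", "my"}


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens, dropping stopwords."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def vectorize(text: str) -> Counter:
    """Bag-of-words vector: token -> count."""
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


first = vectorize("The signup form was confusing")
second = vectorize("Signup was confusing and slow")
third = vectorize("Pricing page is clear")
print(round(cosine(first, second), 3))  # 0.667
print(cosine(first, third))             # 0.0
```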

Speech recognition transforms audio into text; concept extraction and entity linking connect phrases to broader concepts. Emotion detection goes beyond polarity to identify feelings like frustration or joy. When combined, these techniques enable automated transcription, assisted coding, theme discovery, summarization and visualisations such as word clouds or co‑occurrence matrices.
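A co‑occurrence matrix is simpler than it sounds: count how often two codes are applied to the same segment. A minimal sketch, assuming each coded segment is represented as a set of code names (the codes below are invented):

```python
from collections import Counter
from itertools import combinations


def co_occurrence(segments: list[set[str]]) -> Counter:
    """Count each unordered pair of codes that appears together in a segment."""
    counts: Counter = Counter()
    for codes in segments:
        # Sorting makes ("a", "b") and ("b", "a") the same key.
        for pair in combinations(sorted(codes), 2):
            counts[pair] += 1
    return counts


coded_segments = [
    {"onboarding", "confusion"},
    {"onboarding", "confusion", "pricing"},
    {"pricing", "trust"},
    {"onboarding", "delight"},
]
matrix = co_occurrence(coded_segments)
print(matrix[("confusion", "onboarding")])  # 2
print(matrix[("pricing", "trust")])         # 1
```

These counts are the raw cells of the matrix; high counts flag pairs worth rereading, not conclusions.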

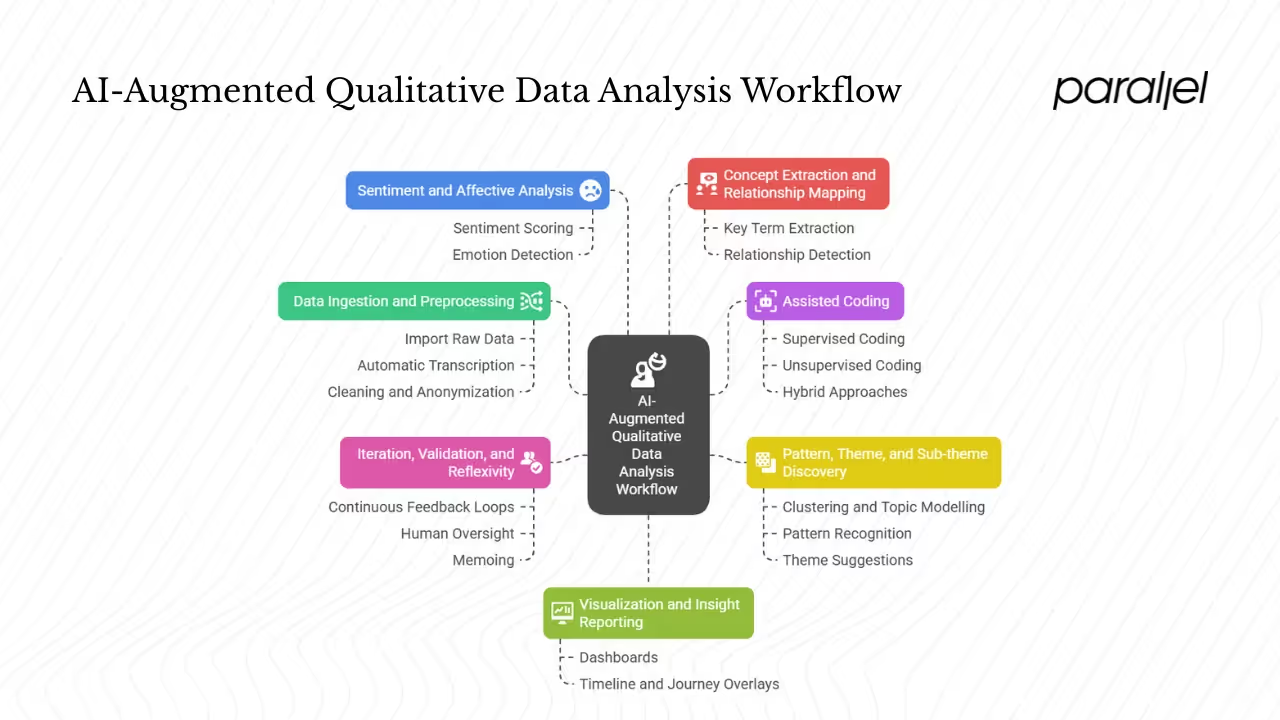

A practical AI‑augmented workflow

The following workflow illustrates how AI can support each stage of qualitative analysis while keeping researchers in control:

1. Data ingestion and preprocessing

- Import raw data – audio recordings, video files, transcripts, chat logs, survey responses and social media feedback.

- Automatic transcription – modern speech‑to‑text models offer >90% accuracy and support multiple languages. Tools like Looppanel transcribe calls and distinguish speakers; Dovetail’s transcription handles over 40 languages and produces accurate text.

- Cleaning and anonymization – remove filler words, correct punctuation, and strip personally identifiable information. Normalise text (lowercasing, stemming) and split long transcripts into utterances or sentences.
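The cleaning and anonymization step can be sketched with regular expressions. The patterns below are simplified assumptions: they mask e‑mail addresses and phone‑number‑like digit runs, drop a few filler words, lowercase the text and split it into sentences. Stemming is omitted, and regexes will not catch names or places, which need named‑entity recognition or a manual pass.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")     # digit runs of 9+ characters
FILLER = re.compile(r"\b(?:um+|uh+|erm)\b,?\s*")  # a few common fillers
SENTENCE_BREAK = re.compile(r"(?<=[.!?])\s+")


def clean_transcript(text: str) -> list[str]:
    """Lowercase, mask simple PII, strip fillers and split into sentences."""
    text = text.lower()
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    text = FILLER.sub("", text)
    text = re.sub(r"\s+", " ", text).strip()
    return [s for s in SENTENCE_BREAK.split(text) if s]


raw = ("Um, I signed up last week. Email me at jane.doe@example.com "
       "or call +1 415 555 0100! Uh the setup was confusing.")
for sentence in clean_transcript(raw):
    print(sentence)
```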

2. Assisted coding

- Supervised or unsupervised coding – AI can suggest codes based on existing codebooks or cluster data to propose new ones. MAXQDA’s AI Assist add‑on, released in 2023, generates document summaries, chats with coded data and suggests code ideas. It does not auto‑code entire projects; instead, it applies one well‑described code at a time and provides explanations for each segment. Researchers review these suggestions, refine descriptions and decide whether to adopt or reject them.

- Hybrid approaches – combine AI’s speed with human judgment. For example, a researcher might provide a set of sample coded segments to fine‑tune a model, review AI‑suggested labels, edit misclassifications and maintain an audit trail. Even tools that offer auto‑tagging stress that the human researcher must interpret the results.
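The review loop described above, where a tool proposes a well‑described code with an explanation and the researcher accepts or rejects it, can be sketched as follows. This is not MAXQDA's or any vendor's API: the codebook, keyword cues and field names are hypothetical, and simple keyword matching stands in for the language model a real tool would use.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical codebook: code -> (description, keyword cues).
CODEBOOK = {
    "onboarding_friction": ("User struggles to get started",
                            {"signup", "setup", "confusing", "stuck"}),
    "pricing_concern": ("User questions cost or value",
                        {"price", "expensive", "cost", "plan"}),
}


@dataclass
class Suggestion:
    segment: str
    code: str
    explanation: str


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, suggestion: Suggestion, decision: str, note: str = "") -> None:
        """Store the researcher's decision alongside what the tool proposed."""
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "segment": suggestion.segment,
            "code": suggestion.code,
            "explanation": suggestion.explanation,
            "decision": decision,  # "accept", "edit" or "reject"
            "note": note,
        })


def suggest_codes(segment: str) -> list[Suggestion]:
    """Propose codes whose keyword cues appear in the segment, with a reason."""
    words = set(re.findall(r"[a-z]+", segment.lower()))
    suggestions = []
    for code, (description, cues) in CODEBOOK.items():
        hits = sorted(words & cues)
        if hits:
            reason = f"{description}; matched: {', '.join(hits)}"
            suggestions.append(Suggestion(segment, code, reason))
    return suggestions


log = AuditLog()
segment = "Setup was confusing, so I picked the wrong plan"
for s in suggest_codes(segment):
    # A researcher makes this call in practice; it is hard-coded here.
    if s.code == "pricing_concern":
        log.record(s, "reject", note="mentions a plan, not its cost")
    else:
        log.record(s, "accept")
```

The rejected pricing suggestion shows why review matters: a keyword hit is not the same as the meaning the code describes.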

3. Pattern, theme and sub‑theme discovery

- Clustering and topic modelling – unsupervised algorithms group semantically similar excerpts. BMC’s 2025 study compared nine generative models (from ChatGPT to Claude) on 448 responses and found that some models achieved perfect concordance with manual analysis (Jaccard index = 1.0). Such clustering can surface latent themes, but humans still need to validate that the clusters are meaningful.

- Pattern recognition – AI identifies co‑occurrences, sequences and transitions in codes. Researchers can then refine subcodes and explore relationships (e.g., “because of X, users felt Y”).

- Theme suggestions – generative models can propose candidate themes. NVivo 15.2 and ATLAS.ti 25 introduced generative AI summarisation and code suggestions in 2024. These suggestions help researchers explore overlooked angles but should not replace manual thematic analysis.
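The Jaccard index cited above measures overlap between two sets: the size of their intersection divided by the size of their union, so 1.0 means identical sets and 0.0 means no overlap. A minimal sketch comparing a manual and an AI‑generated theme list (both invented) follows. It assumes equivalent themes have already been matched to the same label, which is itself a human judgement; how the BMC study operationalised its comparison is not reproduced here.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """|A ∩ B| / |A ∪ B|; two empty sets are treated as identical (1.0)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


manual_themes = {"onboarding confusion", "pricing anxiety", "missing integrations"}
ai_themes = {"onboarding confusion", "pricing anxiety", "performance"}

print(jaccard(manual_themes, ai_themes))           # 0.5
print(jaccard(manual_themes, set(manual_themes)))  # 1.0
```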

4. Sentiment and affective analysis

- Sentiment scoring – AI assigns polarity scores to segments or codes. Looppanel’s AI transcript analysis tool performs sentiment analysis with color‑coded positive and negative responses. Tools can map sentiment trends across participants or time, revealing when frustration spikes during onboarding.

- Emotion detection – some models infer emotions from acoustic cues such as tone of voice, speaking speed and pauses, offering nuance beyond simple sentiment.
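A lexicon‑based scorer is the simplest way to see how per‑segment polarity can be rolled up into a trend. The sketch below averages scores per onboarding stage and reports the lowest one. The lexicon, stages and comments are invented; commercial tools use trained models, and this text‑only approach cannot capture the acoustic cues that emotion detection relies on.

```python
import re
from collections import defaultdict
from statistics import mean

# Toy polarity lexicon (hypothetical): +1 positive, -1 negative.
LEXICON = {"love": 1, "easy": 1, "great": 1, "clear": 1,
           "confusing": -1, "stuck": -1, "slow": -1, "frustrating": -1}


def polarity(text: str) -> float:
    """Mean lexicon score of the words in a segment; 0.0 if none are known."""
    scores = [LEXICON[w] for w in re.findall(r"[a-z]+", text.lower()) if w in LEXICON]
    return mean(scores) if scores else 0.0


def sentiment_by_stage(segments: list[tuple[str, str]]) -> dict[str, float]:
    """Average segment polarity for each journey stage."""
    by_stage: dict[str, list[float]] = defaultdict(list)
    for stage, text in segments:
        by_stage[stage].append(polarity(text))
    return {stage: mean(scores) for stage, scores in by_stage.items()}


segments = [
    ("signup", "Signup was easy"),
    ("signup", "Great, clear form"),
    ("workspace setup", "I got stuck, so confusing"),
    ("workspace setup", "Slow and frustrating"),
    ("first project", "Love the templates"),
]
trend = sentiment_by_stage(segments)
print(trend)
print(min(trend, key=trend.get))  # workspace setup
```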

5. Concept extraction and relationship mapping

- Key term extraction – NLP systems identify recurring entities, actions and concepts. They can build concept maps or code graphs linking concepts and highlight causal relationships.

- Relationship detection – AI finds patterns like “because of X, users felt Y,” enabling more nuanced narratives.
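Relationship detection can be approximated, very roughly, with surface patterns. The sketch below pulls (cause, effect) pairs out of sentences built around "because"; the patterns are illustrative assumptions. Production systems rely on dependency parsing or language models, since people also express causation with "so", "since" or no connective at all.

```python
import re
from typing import Optional

# "Because (of) <cause>, <effect>", e.g. "Because of the long setup, I felt frustrated."
CAUSE_FIRST = re.compile(
    r"^because\s+(?:of\s+)?(?P<cause>.+?),\s*(?P<effect>.+?)[.!?]?$", re.I)
# "<effect> because (of) <cause>", e.g. "I gave up because the import kept failing."
CAUSE_AFTER = re.compile(
    r"^(?P<effect>.+?)\s+because\s+(?:of\s+)?(?P<cause>.+?)[.!?]?$", re.I)


def extract_relation(sentence: str) -> Optional[tuple[str, str]]:
    """Return (cause, effect) if the sentence matches a 'because' pattern."""
    text = sentence.strip()
    for pattern in (CAUSE_FIRST, CAUSE_AFTER):
        match = pattern.match(text)
        if match:
            return match.group("cause"), match.group("effect")
    return None


for s in ["I gave up because the import kept failing.",
          "Because of the long setup, I felt frustrated.",
          "The dashboard is great."]:
    print(extract_relation(s))
```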

6. Visualization and insight reporting

- Dashboards – AI tools produce interactive dashboards with code clouds, heatmaps and co‑occurrence matrices. Dovetail generates digestible reports and stakeholder‑friendly summaries.

- Timeline and journey overlays – map themes across user journeys and time. Quote linking makes it easy to trace insights back to raw excerpts.
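Quote linking and heatmaps both depend on one design choice: every coded excerpt keeps a pointer back to its source. A minimal sketch, with hypothetical field names and invented excerpts, that builds the theme‑by‑participant counts a heatmap would display and retrieves the linked quotes for a theme:

```python
from collections import defaultdict

# Each excerpt keeps its source location so insights stay traceable.
excerpts = [
    {"participant": "P1", "transcript": "int-01", "line": 42,
     "theme": "onboarding", "quote": "I didn't know where to start."},
    {"participant": "P1", "transcript": "int-01", "line": 88,
     "theme": "pricing", "quote": "The pro plan felt steep."},
    {"participant": "P2", "transcript": "int-02", "line": 15,
     "theme": "onboarding", "quote": "Setup took me an hour."},
    {"participant": "P3", "transcript": "int-03", "line": 9,
     "theme": "onboarding", "quote": "I almost gave up on day one."},
]


def theme_matrix(rows: list[dict]) -> dict[str, dict[str, int]]:
    """Count excerpts per theme and participant (the cells of a heatmap)."""
    counts: dict[str, dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for row in rows:
        counts[row["theme"]][row["participant"]] += 1
    return {theme: dict(cells) for theme, cells in counts.items()}


def quotes_for(rows: list[dict], theme: str) -> list[str]:
    """Return 'transcript:line "quote"' references for one theme."""
    return [f'{r["transcript"]}:{r["line"]} "{r["quote"]}"'
            for r in rows if r["theme"] == theme]


matrix = theme_matrix(excerpts)
participants = sorted({r["participant"] for r in excerpts})
for theme, cells in matrix.items():
    print(theme.ljust(12), " ".join(str(cells.get(p, 0)) for p in participants))
print(quotes_for(excerpts, "pricing"))
```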

7. Iteration, validation and reflexivity

- Continuous feedback loops – refine prompts, revisit codes and re‑run analyses. A reflexive journal documenting why AI‑generated themes were adopted or rejected helps maintain transparency.

- Human oversight – evaluate inter‑coder agreement and validate AI outputs. Delve’s guide warns that AI may produce hallucinations, cherry‑picking facts to please the prompt. Always double‑check outputs and record decisions.

- Memoing – researchers reflect on how AI suggestions influence interpretation. Document your own assumptions and ensure that final narratives reflect human judgement, not algorithmic patterns.
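Inter‑coder agreement is often summarised with Cohen's kappa, κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected by chance from each coder's label frequencies. A minimal sketch comparing a researcher's codes with AI‑suggested codes for the same six segments; the labels are invented, and one code per segment is assumed (multi‑code segments need a per‑code comparison).

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Chance-corrected agreement between two coders, one code per segment."""
    if not coder_a or len(coder_a) != len(coder_b):
        raise ValueError("need two non-empty label lists of equal length")
    n = len(coder_a)
    # p_o: share of segments where both coders chose the same code.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # p_e: agreement expected if each coder picked codes at their own base rates.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1:  # both coders used one identical code throughout
        return 1.0
    return (observed - expected) / (1 - expected)


researcher = ["friction", "friction", "pricing", "praise", "friction", "pricing"]
ai_assist = ["friction", "pricing", "pricing", "praise", "friction", "friction"]
print(round(cohens_kappa(researcher, ai_assist), 3))  # 0.455
```

Here the coders agree on four of six segments (p_o ≈ 0.67), but chance alone would produce p_e ≈ 0.39, so κ is noticeably lower than raw agreement. Acceptable thresholds vary by field; a low value is a cue to revisit code definitions.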

Use cases & examples from practice

The following use cases demonstrate the benefits and limitations of AI for qualitative data analysis across different contexts.

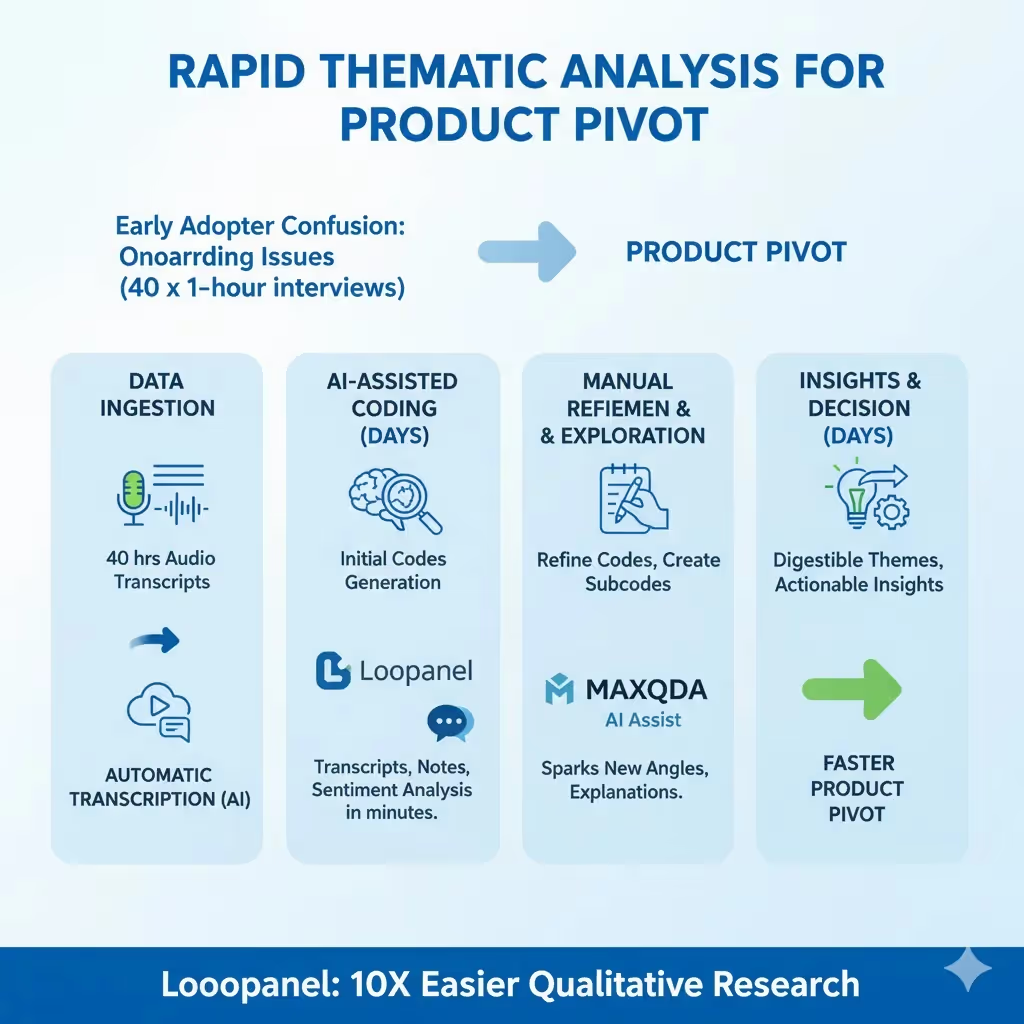

Rapid thematic analysis for a product pivot

Imagine your startup needs to pivot after learning that early adopters are confused about onboarding. You have 40 interview transcripts, each an hour long. By combining automatic transcription with AI‑assisted coding, you can narrow hours of audio into digestible themes within days instead of weeks. Looppanel’s platform promises to make qualitative research 10× easier by producing high‑quality transcripts, notes and sentiment analysis in minutes. In practice, you might run transcripts through Looppanel or Dovetail, generate initial codes, then refine them manually. Tools like MAXQDA’s AI Assist create subcodes and explanations that spark new angles.

Comparing AI‑assisted vs. manual coding

In 2024 a pilot study referenced in Delve’s guide observed that AI‑assisted coding can save time but still requires substantial human oversight. Researchers found that generative AI produced helpful surface‑level insights quickly but did not produce publishable codes; reviewing AI outputs sometimes took longer than manual coding. Similarly, Perpetual’s design team tested Dovetail’s AI features on five interviews and concluded that while transcription and data summaries were accurate, auto‑highlighting had a success rate of only 40–50%, often missing important points. Auto‑tagging improved with context but still mislabelled data. Data querying and insight generation were promising yet required manual confirmation. These experiences reinforce the need for human review.

Additional examples

- Academic evaluation of LLMs – The BMC study mentioned earlier compared nine generative models and reported that some achieved perfect concordance with manual thematic analysis. It concluded that AI models can be highly efficient and accurate but should be combined with human expertise to ensure methodological rigour.

- Industry feedback on Dovetail – A 2025 Dovetail article notes that the platform’s AI features automatically transcribe interviews, identify themes, perform sentiment analysis and generate digestible reports. However, Perpetual’s hands-on review shows the necessity of verifying auto‑highlighting and tags. Different perspectives help set realistic expectations.

Tools & platforms: comparison and pros/cons

Selecting the right platform for AI for qualitative data analysis depends on your goals, team size and budget. Here is a comparison of popular tools:

Pricing models vary: Dovetail and Looppanel operate on subscription plans with per‑seat costs; MAXQDA and NVivo require licences with optional AI add‑ons; Looppanel offers a free trial; Delve provides course‑based pricing; ATLAS.ti offers perpetual licences with optional AI features. Open‑source tools like Taguette or QDA Miner Lite provide manual coding support but lack advanced AI. Emerging platforms such as CoAIcoder and ScholarMate experiment with collaborative AI coding and prompt‑based analysis, though adoption remains nascent.

Opportunities, constraints and risks

Opportunities

- Time savings – AI speeds up transcription, basic coding and pattern detection. CAQDAS adoption already cuts 20–30% of data management time; AI tools promise even more. Researchers can re‑allocate time to interpretation and stakeholder communication. This efficiency is a core appeal of AI for qualitative data analysis.

- Consistency – Machine‑learning applies codes uniformly across large datasets, reducing variability caused by human fatigue. AI tools operate at scale without missing transcripts.

- Rapid iteration – Generative models can quickly suggest alternative codebooks or themes, enabling rapid pivoting in product development.

- Lowering barriers – Teams with limited qualitative expertise can leverage AI to handle tedious tasks and focus on decision‑making.

Constraints and challenges

- Accuracy and misclassification – Success rates for auto‑highlighting and auto‑tagging are modest: in Perpetual’s tests of Dovetail, auto‑highlighting succeeded only 40–50% of the time, and auto‑tagging still mislabelled data. Models may misinterpret nuance or apply generic tags. ChatGPT and similar models can hallucinate, cherry‑picking facts or inventing plausible‑sounding insights.

- Black‑box algorithms and interpretability – Many AI models operate opaquely. Without transparency, researchers struggle to justify analytic decisions. Delve’s guide warns that AI outputs must be double‑checked.

- Bias amplification – AI inherits biases from training data. Without diverse training examples and careful prompt design, it may reinforce stereotypes or ignore minority voices.

- Loss of nuance – Qualitative research values context and subtlety. AI tends to summarise, which can strip away emotion or situational detail. Inductive approaches that require “reading between the lines” are not well suited to current AI.

- Over‑reliance risk – There is a temptation to accept AI suggestions without question. Researchers must remain reflexive and maintain control.

- Privacy and ethics – Uploading sensitive transcripts to cloud‑based AI tools raises data security concerns. Perpetual’s team initially hesitated to adopt AI due to data privacy issues. Open‑source or locally hosted models may mitigate this risk.

Mitigation strategies and best practices

- Human in the loop – Always review AI‑suggested codes and themes; treat AI as an assistant, not a decision maker.

- Transparent documentation – Keep an audit trail of AI prompts, outputs and human decisions. Document the rationale for accepting or rejecting AI suggestions.

- Diverse training data – If training your own models, include diverse voices and contexts to reduce bias.

- Prompt engineering – Design clear, context‑rich prompts and refine iteratively. Provide definitions and examples to guide the model.

- Anonymize data – Remove identifying information before uploading to AI services. Consider local deployment for sensitive projects.

- Methodological transparency – Clearly describe AI use in reports. Explain how AI and human judgement interacted and reflect on the influence of AI on interpretation.

- Stay reflexive – Maintain memoing practices. Recognise how AI nudges your thinking and challenge its suggestions.
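Several of these practices can be folded into a reusable prompt template: context, code definitions with examples, a cap on suggestions, and a request for quoted evidence that makes outputs easier to audit. The sketch below only builds the prompt string; which model receives it, and how, is left open. The codebook entries, wording and the NONE convention are illustrative assumptions, not a vendor format.

```python
CODEBOOK = [
    {"code": "onboarding_friction",
     "definition": "The participant struggles to start using the product.",
     "example": "I couldn't figure out how to create my first project."},
    {"code": "pricing_concern",
     "definition": "The participant questions cost or value for money.",
     "example": "The team plan seems expensive for what we get."},
]


def build_coding_prompt(context: str, codebook: list[dict], segment: str,
                        max_codes: int = 2) -> str:
    """Assemble a prompt with context, definitions, examples and output rules."""
    lines = [f"Context: {context}", "", "Codebook:"]
    for entry in codebook:
        lines.append(f'- {entry["code"]}: {entry["definition"]} '
                     f'Example: "{entry["example"]}"')
    lines += [
        "",
        f"Apply at most {max_codes} codes from the codebook to the segment below.",
        "For each code, quote the words that justify it.",
        "If no code fits, answer NONE. Do not invent new codes.",
        "",
        f'Segment: "{segment}"',
    ]
    return "\n".join(lines)


prompt = build_coding_prompt(
    context="We are analysing support tickets for onboarding friction.",
    codebook=CODEBOOK,
    segment="I've been trying to invite my team for two days.",
)
print(prompt)
```

Storing each generated prompt next to the model's reply and the researcher's decision gives the audit trail recommended above.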

Methodological implications

How does AI fit with grounded theory, discourse analysis or phenomenology? Grounded theory emphasises emergence from data; AI can assist with open coding but may conflict with the iterative constant comparison central to the method. Discourse analysis examines language in context; AI’s summarisation might strip away discursive nuances. Phenomenological research seeks to understand lived experience, which AI cannot feel and can only approximate. Researchers should justify the appropriateness of AI and specify its role in the methodology section. There will likely be rich debates within academia about the epistemological implications of AI in qualitative research.

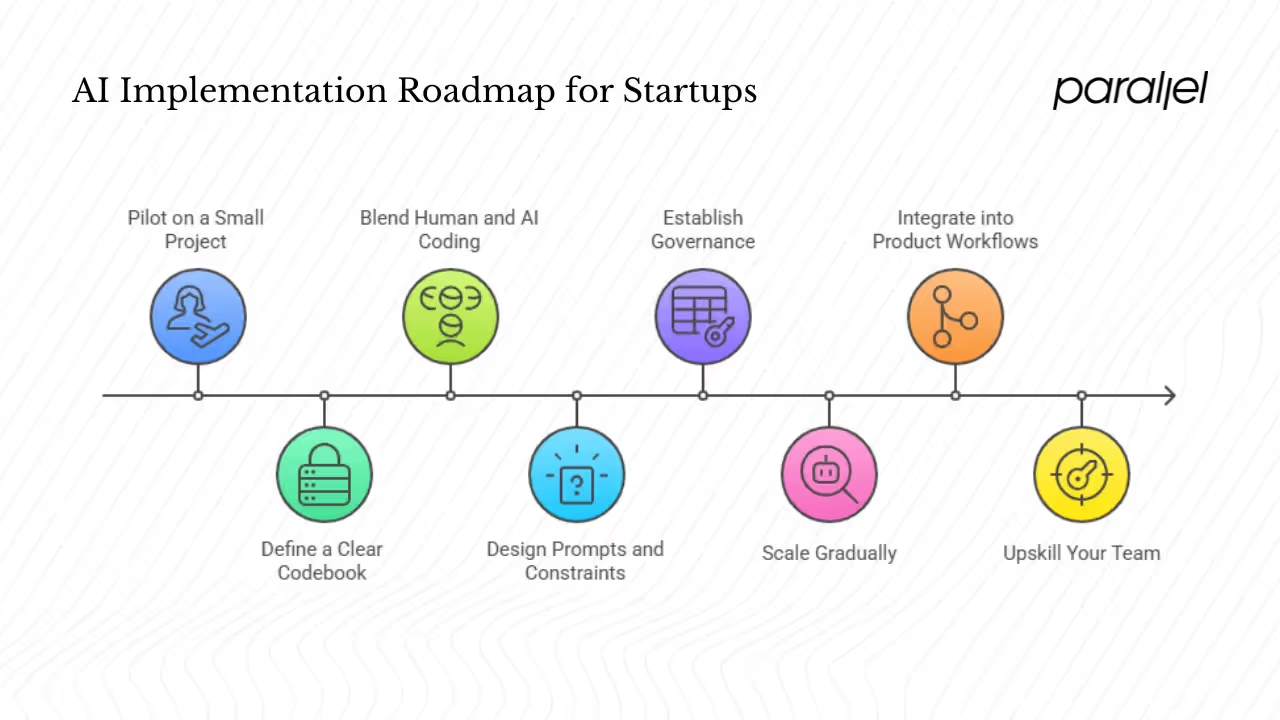

Implementation tips and roadmap for startups

When adopting AI for qualitative data analysis, start small and build confidence before scaling. The following roadmap can guide you:

- Pilot on a small project – Start with 10–20 interviews or support tickets. Choose a non‑critical project to explore workflows and measure benefits.

- Define a clear codebook – Whether deductive or inductive, decide on the codes you want to track. AI works best when guided by clear descriptions.

- Blend human and AI coding – Use AI for bulk coding but review suggestions before committing. Accept, reject or refine each suggestion. Monitor metrics such as the percentage of AI‑accepted codes and time saved.

- Design prompts and constraints – Provide context in prompts (e.g., “We are analysing support tickets for onboarding friction”). Limit the number of suggestions to avoid overwhelm.

- Establish governance – Maintain version control of codebooks and AI outputs. Implement audit logs to track changes.

- Scale gradually – After a successful pilot, roll out AI across projects. Use cross‑project codebooks to ensure consistency and adopt guidelines for when to update codes.

- Integrate into product workflows – Link research outputs into design and product management tools. Generate shareable reports and highlight quotes to ground decisions.

- Upskill your team – Provide training on prompt design, validation and interpretation. Encourage researchers to experiment and share learnings.
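The pilot metrics suggested in this roadmap are simple ratios. A minimal sketch, assuming each AI suggestion is logged as "accept", "edit" or "reject" and that the manual‑coding time is an estimate; the numbers in the example are invented:

```python
from collections import Counter


def pilot_metrics(decisions: list[str], manual_minutes: float,
                  assisted_minutes: float) -> dict[str, float]:
    """Summarise a pilot: share of suggestions accepted/edited and time saved."""
    counts = Counter(decisions)
    total = len(decisions)
    return {
        "acceptance_rate": counts["accept"] / total if total else 0.0,
        "edit_rate": counts["edit"] / total if total else 0.0,
        # Includes review time; a negative value means AI assistance was slower.
        "time_saved_pct": 100 * (manual_minutes - assisted_minutes) / manual_minutes,
    }


decisions = ["accept"] * 12 + ["edit"] * 5 + ["reject"] * 3
print(pilot_metrics(decisions, manual_minutes=600, assisted_minutes=450))
# {'acceptance_rate': 0.6, 'edit_rate': 0.25, 'time_saved_pct': 25.0}
```

A negative time saving is a legitimate pilot result: the Delve‑cited pilot found that reviewing AI output sometimes took longer than coding by hand.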

Future trends and research directions

Large language models will continue to improve. According to the AI Index, the share of SWE‑bench coding problems that AI systems could solve jumped from 4.4% in 2023 to 71.7% in 2024, and the cost of using powerful models plummeted from about $20 per million tokens in late 2022 to just a few cents by mid‑2024. The performance gap between open and closed models shrank to about 1.7% by February 2025.
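To see what that price drop means for a research team, consider the 40 hour‑long interviews from the pivot scenario earlier in this guide. Under two loudly labelled assumptions, about 150 spoken words per minute and roughly four tokens for every three English words (a common rule of thumb), one pass over all transcripts is about 480,000 input tokens. The sketch prices that at $20 per million tokens and at an assumed $0.05 per million standing in for "a few cents"; output tokens and repeated passes are ignored.

```python
def transcript_tokens(interviews: int, minutes_each: int,
                      words_per_minute: int = 150) -> int:
    """Estimate input tokens, assuming ~4 tokens per 3 English words."""
    words = interviews * minutes_each * words_per_minute
    return words * 4 // 3


def cost_usd(tokens: int, usd_per_million: float) -> float:
    """Price a token count at a given rate per million tokens."""
    return tokens * usd_per_million / 1_000_000


tokens = transcript_tokens(interviews=40, minutes_each=60)
print(tokens)                            # 480000
print(cost_usd(tokens, 20.0))            # 9.6
print(round(cost_usd(tokens, 0.05), 4))  # 0.024
```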

These trends mean that teams can run LLMs locally for privacy and at lower cost, lowering the barrier to using AI for qualitative data analysis at scale. Future research will likely develop end‑to‑end co‑ethnographer systems that integrate data collection, analysis and reporting.

Scholars such as Friese envision “conversational analysis to the power of AI”, in which researchers engage with AI in dialogue rather than treat it as an auto‑coder. Interpretability and explainability will become critical, pushing models to explain why they made certain suggestions. Hybrid human‑AI sense‑making tools like ScholarMate may support collaborative analysis and better reflect diverse viewpoints.

As AI becomes ubiquitous, we expect democratization: AI tools embedded in everyday product feedback channels will allow product teams to capture, analyse and act on user sentiment in real time. Ethical considerations will deepen – fairness, accountability, privacy, and transparency will shape the design and deployment of future qualitative analysis systems.

Conclusion

AI’s role in qualitative research is not to replace human insight but to liberate researchers from drudgery. For founders and product teams, adopting AI for qualitative data analysis can shorten feedback cycles, improve consistency and scale insight extraction. At the same time, the work of interpretation, narrative building and strategic decision‑making remains firmly human. If you are curious about AI, start with a small project. Define your codes, experiment with tools like Dovetail, Looppanel or MAXQDA, and keep track of what works and what doesn’t. Be transparent about how you use AI, monitor for bias, and maintain reflexivity. AI and human collaboration is a journey of continuous learning; the rewards lie in faster and more thoughtful product decisions.

Frequently asked questions

1) Can I use AI to analyze qualitative data?

Yes – with caveats. AI can assist with transcription, coding, pattern detection and summarisation. Tools like Dovetail and Looppanel process interviews, identify themes and perform sentiment analysis. However, AI is not a replacement for researchers. You must review suggestions, refine codes and interpret context. AI works best for deductive or semi‑structured analysis but struggles with deep interpretive nuance.

2) Can ChatGPT analyze qualitative data?

Large language models like ChatGPT can generate summaries, suggest codes and help brainstorm themes. The BMC study found that some generative models achieved perfect concordance with manual thematic analysis. Still, ChatGPT may hallucinate or mislabel data. The Delve guide notes that AI outputs may need as much time to verify as manual coding. For best results, use ChatGPT to augment your analysis: ask it to propose initial codes, summarise transcripts or highlight potential themes, then validate those outputs yourself.

3) Is NVivo an AI tool?

NVivo is a long‑standing qualitative analysis software that has gradually incorporated AI features. Since 2014 NVivo has offered machine‑learning features such as auto‑coding by theme and sentiment, as well as transcription. As of 2024 it includes generative‑AI summarisation and code suggestions. NVivo remains primarily a CAQDAS platform; its AI functions assist researchers but do not remove the need for manual coding and interpretation.

4) Is Atlas.ti free?

ATLAS.ti is a commercial qualitative analysis software. It offers perpetual licences and subscription plans; there is no fully free version, though students and academics may receive discounts. The latest release (ATLAS.ti 25) includes generative AI summarisation and “intentional AI coding” features. Open‑source alternatives like Taguette or QDA Miner Lite provide basic coding tools but lack advanced AI features.
