AI Qualitative Research: Complete Guide (2026)

Learn how AI supports qualitative research by analyzing text, audio, or video data to uncover patterns and insights.

Modern product work relies on understanding people. Qualitative research refers to methods like interviews, observation and open‑ended surveys that capture stories, feelings and context. Unlike quantitative studies that count and measure, qualitative studies look at meaning and pattern.

Large language models and other machine‑learning tools have opened up new possibilities for analysing unstructured text. Using AI qualitative research can make it possible to process more interviews in less time while still leaving room for human insight. For founders and product managers, shorter research cycles mean faster learning and stronger decisions.

This article lays out what AI qualitative research means, when to use it, and how to run studies responsibly. I draw on recent studies, industry practice and my own experience leading client projects at Parallel to offer a grounded view that cuts through hype. By the end you’ll know the upsides, the trade‑offs and the concrete steps to start experimenting.

What is “AI qualitative research”?

AI qualitative research blends human judgment with machine assistance.

- At one end of the spectrum are fully automated systems that classify or summarise text without human input.

- At the other end are human‑in‑the‑loop workflows in which a researcher guides the process, checks outputs and provides context.

Current practice favours the latter because even the best models still miss subtle meaning and context. Classic computer‑assisted qualitative data analysis software (CAQDAS) packages like NVivo, Atlas.ti and MAXQDA have offered semi‑automated coding for years. Newer tools integrate large language models to suggest themes, summarise passages or translate transcripts.

Important concepts and technologies

- Machine learning and deep learning: algorithms that find patterns in data. They enable models to learn from text and make predictions or classifications.

- Natural language processing (NLP): the branch of computing focused on understanding human language. NLP powers tasks like tokenisation, sentiment analysis and topic modelling.

- Semantic analysis and pattern recognition: techniques that spot clusters of meaning across responses, such as recurring motivations or concerns.

- Predictive modelling: still at an early stage in qualitative research, where models forecast likely behaviours from observed patterns.

- Automated coding and text analysis: systems that tag segments of text with tentative codes, saving researchers from manual first‑pass tagging. NVivo’s AI‑driven coding, for example, identifies themes but can't recognise sarcasm or slang, so researchers must stay critical of its suggestions.

Why now?

Earlier CAQDAS tools offered simple pattern‑based coding. With the release of large language models, the range of tasks machines can handle has expanded. Researchers can query models in everyday language, ask for summaries and get back clusters of themes almost instantly. In a 2024 JMIR study, generative models produced themes that matched 71% of the categories human analysts identified in an inductive thematic analysis. The models took a median of 20 minutes to code datasets that took human coders nearly ten hours. That kind of speed has inspired what some call a methodological turn in qualitative work. Researchers are no longer limited to manual coding; they can blend human interpretation with machine assistance to move quickly without giving up depth. Ethical questions remain, and we will return to them later.

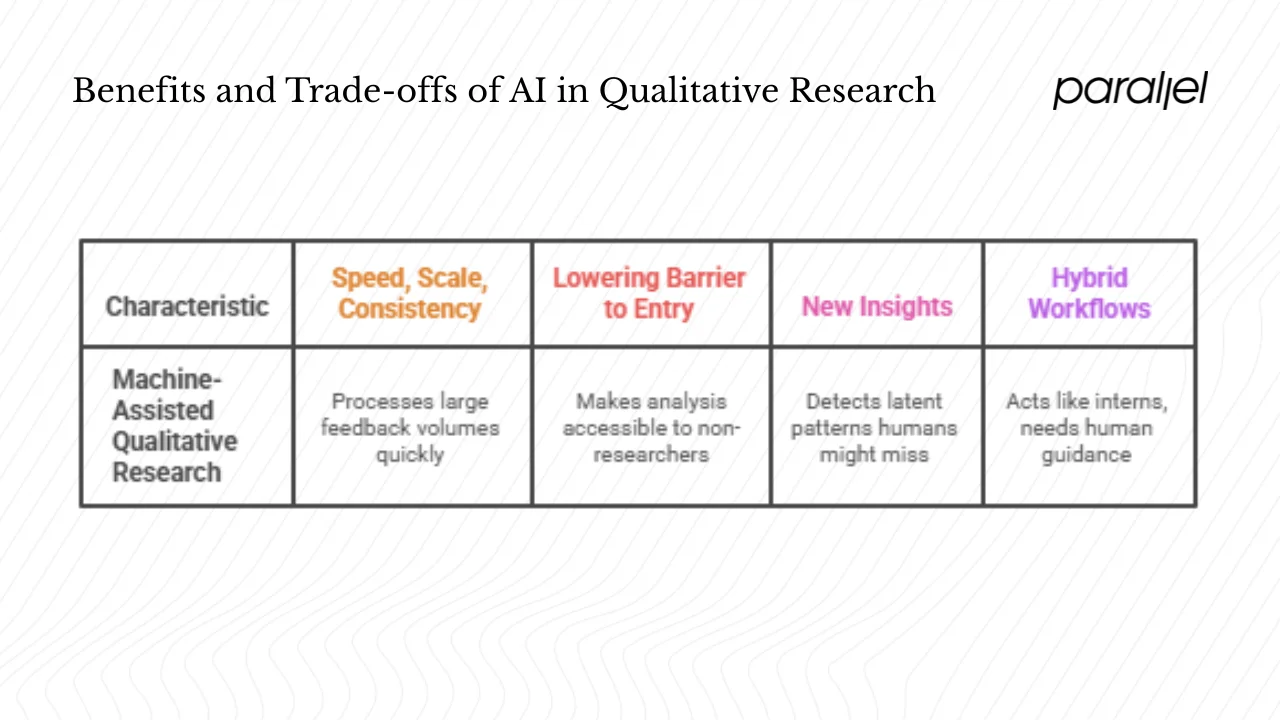

Benefits and trade‑offs

1) Speed, scale and consistency

For early‑stage teams juggling many experiments, machine‑assisted qualitative research shines when you need to process large volumes of feedback. A recent industry report found that more than half of UX researchers now use AI tools, a 36% increase from 2023. These tools handle tedious tasks like transcription, tagging and early synthesis. AI transcription services such as Whisper or Otter can turn an hour‑long interview into text in minutes, with error rates around 3% under good conditions. Automated coding platforms can cluster responses and suggest preliminary themes, cutting early coding time by 30% to 50%. In the 2024 JMIR study mentioned above, generative models matched most of the inductive themes and dramatically reduced analysis time.

2) Lowering the barrier to entry

AI assistants make analysis accessible to non‑researchers. Tools now offer built‑in prompt templates, plain‑language queries and integration with collaborative platforms. According to a 2024 article by Nielsen Norman Group, AI can help with planning studies, drafting interview questions and organising consent documents. It can serve as a desk researcher to gather background information, though the article cautions that you must check cited sources. For founders and product managers who may not have formal research training, this support can unblock early discovery work.

3) New insights from pattern detection

Large language models excel at clustering similar sentiments and surfacing latent patterns that humans might miss. Semantic analysis can reveal surprising connections across interviews, such as unexpected drivers of user behaviour. In our own projects at Parallel, we’ve seen AI‑assisted clustering bring up recurring metaphors or concerns we might have overlooked. These new themes become starting points for deeper human interpretation.

4) Hybrid workflows, not replacements

AI tools act like interns. They work best when guided with clear instructions and constraints (nngroup.com). The same Nielsen Norman Group article emphasises that high‑quality outputs still require human oversight and review. In the Standard Beagle case study, researchers used AI for transcription and synthesis, then layered human interpretation on top. The outcome was faster delivery without sacrificing depth. This pattern, in which machines handle repetitive work while humans provide context, is becoming the norm.

Risks and limitations

- Loss of context and subjectivity: Generative models operate from a “view from nowhere.” They handle every transcript as equal and may miss subtle cues about power dynamics, sarcasm or emotional undertones. A PLoS One paper warns that while generative models can process text quickly, many “Big Q” qualitative approaches like grounded theory or phenomenology should not be delegated to machines.

- Prompt sensitivity: The quality of output depends heavily on how you ask. A 2024 study on prompt design found that providing clear task descriptions, data structure and output structures made ChatGPT more transparent and reliable. Without such guidance, the model may hallucinate or omit critical information.

- Hallucinations and misinterpretations: Models can make up references or misinterpret context. Nielsen Norman Group notes that AI tools can generate plausible‑sounding but incorrect information, so you should always check primary sources.

- Bias and fairness: Models reflect their training data. If underlying data are skewed, the outputs will be too. The PLoS article urges caution about methodolatry—over‑reliance on standardised checklists and automated tools at the expense of reflexivity.

- Data privacy and consent: Uploading interview transcripts to third‑party services raises privacy and compliance concerns. Standard Beagle’s team mitigated risk by anonymising transcripts and choosing vendors with strong security guarantees.

- When not to use AI: For ethnographic studies that require immersion, or for sensitive topics where every word matters, manual analysis remains essential. Some research approaches, such as autoethnography or phenomenology, depend on researcher positionality and can't be outsourced to machines.

Designing and running an AI‑assisted study

This section offers practical steps for founders, product managers and design leads who want to try machine‑assisted qualitative analysis. Consider these guidelines rather than hard rules; the goal is to blend machine efficiency with human insight.

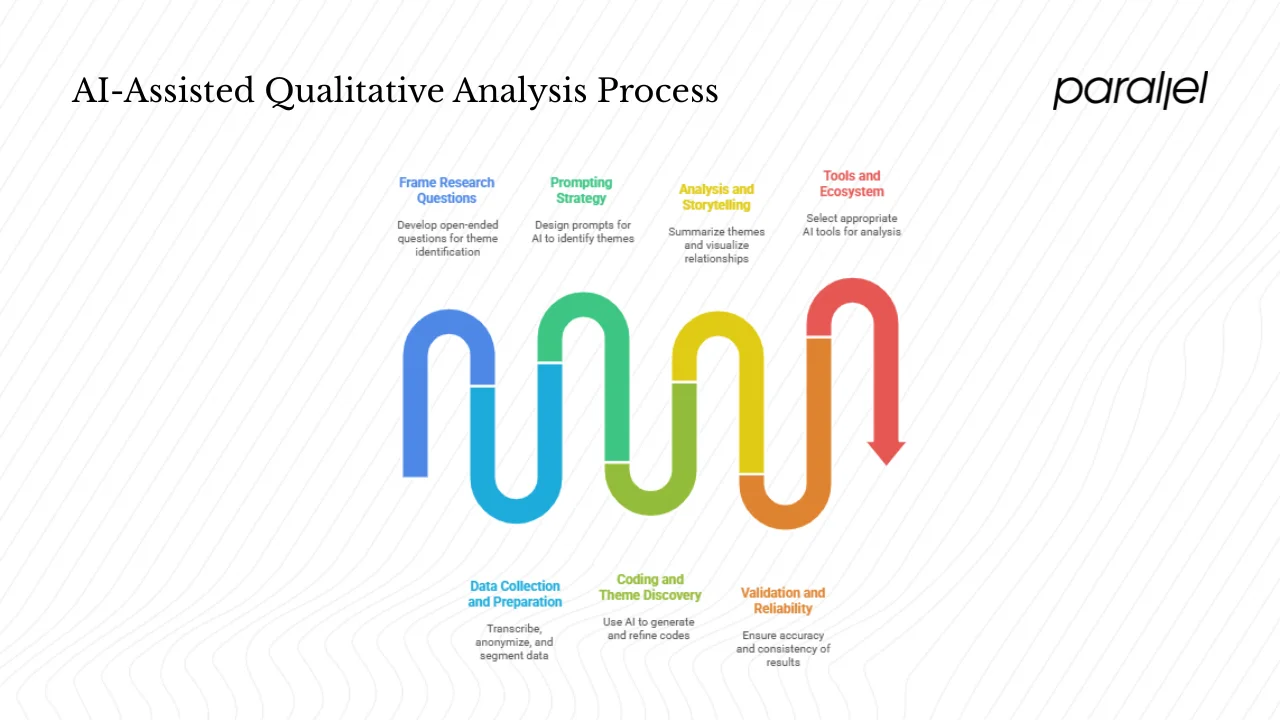

1) Framing your questions

Start with research questions that benefit from theme identification. Open‑ended questions like “Tell me about the last time you struggled with onboarding” generate narratives that machines can cluster. Decide whether your study is exploratory (open‑ended interviews, diary studies) or evaluative (targeted survey questions). Mixed methods can also work: for example, follow up a survey with a few interviews to add depth.

2) Data collection and preparation

- Transcription and cleanup: Use transcription tools to convert audio to text quickly. Check transcripts for accuracy, fix mis‑transcriptions and remove identifying details.

- Anonymisation: Strip names and sensitive details before uploading text to external services. If your team works with health data or regulated industries, choose closed systems approved by your institution.

- Segmentation: Break transcripts into meaningful segments (individual sentences or paragraphs). Models perform better when given shorter units to classify.

- Handling multiple languages: Use language‑specific transcription and translation services, then have a bilingual team member review the results. In the Standard Beagle story, researchers verified translations to catch mistakes before analysis.
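The anonymisation and segmentation steps above can be sketched in a few lines of Python. This is a minimal illustration, not a complete de‑identification pipeline: the regular expressions, the `anonymise` and `segment` helpers and the sample transcript are all hypothetical, and real projects need a reviewed list of identifiers plus a manual check.

```python
import re

# Hypothetical patterns for illustration; they will miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymise(text: str, names: list[str]) -> str:
    """Replace e-mails, phone numbers and known participant names with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    for name in names:
        # Whole-word, case-insensitive match on each known name.
        text = re.sub(rf"\b{re.escape(name)}\b", "[NAME]", text, flags=re.IGNORECASE)
    return text


def segment(text: str) -> list[str]:
    """Split a transcript into sentence-level segments after ., ! or ?."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


transcript = (
    "I'm Maria. Email me at maria@example.com or call 555-123-4567! "
    "Onboarding was confusing."
)
clean = anonymise(transcript, names=["Maria"])
segments = segment(clean)

for s in segments:
    print(s)
```

Sentence‑level splitting is only one choice; paragraph‑level or speaker‑turn segments may suit longer narratives better.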

3) Prompting strategy

Prompt design is at the heart of this approach. Here is a simple framework inspired by recent research:

- Set the task: Describe what you want (e.g., “Identify recurring themes in the following interview segments”).

- Provide context: Outline the research goal and any relevant background.

- Define output structure: Specify whether you want a table, bullet list or narrative synopsis.

- Flag uncertainty: Tell the model to mark segments it finds ambiguous so you can review them.

- Iterate: Start with a broad prompt, review the output, then refine. Researchers at Pennsylvania State University found that clear, structured prompts improved transparency and user trust. In their study, participants reported higher confidence when prompts included task background, output structure and data source information.

You can choose between zero‑shot prompts (no examples) and few‑shot prompts (provide a couple of coded examples). Few‑shot prompts often yield better results because the model has a pattern to emulate.
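One way to keep prompts consistent across runs is to assemble them from the same parts each time. The sketch below assumes a hypothetical `build_prompt` helper and made‑up example data; it only builds the prompt text and does not call any model API.

```python
def build_prompt(task, context, output_format, segments, examples=None):
    """Assemble a structured prompt: task, context, output format, uncertainty flag, data."""
    lines = [
        f"Task: {task}",
        f"Context: {context}",
        f"Output format: {output_format}",
        "If a segment is ambiguous, label it UNCERTAIN instead of guessing.",
    ]
    if examples:  # few-shot: show a couple of already-coded segments
        lines.append("Examples:")
        for text, code in examples:
            lines.append(f'- "{text}" -> {code}')
    lines.append("Segments:")
    for i, seg in enumerate(segments, start=1):
        lines.append(f"{i}. {seg}")
    return "\n".join(lines)


segments = [
    "I gave up on the signup form twice.",
    "Pricing was fine, I guess.",
]

# Zero-shot: no coded examples.
zero_shot = build_prompt(
    task="Identify recurring themes in the following interview segments",
    context="Exploratory interviews about onboarding for a B2B SaaS product",
    output_format="Table with columns: segment number, theme, supporting quote",
    segments=segments,
)

# Few-shot: same prompt plus two hand-coded examples for the model to emulate.
few_shot = build_prompt(
    task="Identify recurring themes in the following interview segments",
    context="Exploratory interviews about onboarding for a B2B SaaS product",
    output_format="Table with columns: segment number, theme, supporting quote",
    segments=segments,
    examples=[
        ("I could not find the invite button.", "onboarding friction"),
        ("The plan names made no sense to me.", "pricing confusion"),
    ],
)

print(few_shot)
```

Because the prompt is generated from named parts, each part can be changed on its own when you iterate, and the exact text can be stored alongside the results.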

4) Coding, theme discovery and triangulation

Begin with machine‑generated codes to map the terrain. Use topic modelling or semantic clustering to group similar responses. Review the suggested codes and merge or rename them to fit your conceptual framework. NVivo’s AI assistant offers preliminary themes but can't parse sarcasm or dialects, so manual review is vital. Compare AI codes with your own independent coding. In the JMIR study, generative models showed only fair to moderate intercoder reliability compared with humans, which suggests machine codes work as a first pass but still need human review. Triangulate by comparing AI outputs with literature, other datasets and team discussions.
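To put a number on how closely AI codes track your own, one common agreement statistic is Cohen's kappa, defined as (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The source does not say which statistic the JMIR study used, so treat this as an assumption; the labels below are invented for illustration.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assign one label per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of segments")
    n = len(coder_a)
    # Observed agreement: share of segments where both labels match.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's label proportions, summed over labels.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    labels = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if expected == 1.0:  # both coders used a single identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical codes for six segments.
human = ["pricing", "onboarding", "pricing", "support", "onboarding", "pricing"]
ai = ["pricing", "onboarding", "support", "support", "onboarding", "onboarding"]

kappa = cohens_kappa(human, ai)
print(round(kappa, 2))  # 0.52
```

A widely used rule of thumb reads values around 0.41 to 0.60 as moderate agreement, though thresholds vary by field and should not replace a look at the actual disagreements.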

5) Analysis and storytelling

Once codes are refined, ask the model to summarise each theme, pulling illustrative quotes. Use network graphs or co‑occurrence matrices to see how themes relate. For example, you might visualise how feelings of frustration coincide with particular parts of the onboarding flow. Lightweight predictive models can be used sparingly to hypothesise connections (e.g., “When users mention confusion about pricing, they are more likely to churn”). Keep in mind that correlation does not imply causation.
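A co‑occurrence count needs nothing more than the standard library. The sketch below assumes each segment has already been tagged with a set of codes; the `cooccurrence` helper and the sample codes are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(coded_segments):
    """Count how often each pair of codes appears on the same segment."""
    counts = Counter()
    for codes in coded_segments:
        # Sort so each pair has one canonical order, e.g. ("a", "b") and never ("b", "a").
        for pair in combinations(sorted(set(codes)), 2):
            counts[pair] += 1
    return counts


# Hypothetical coded segments from onboarding interviews.
coded = [
    {"frustration", "onboarding"},
    {"frustration", "pricing"},
    {"onboarding", "frustration", "signup form"},
    {"pricing"},
]

pairs = cooccurrence(coded)
for pair, count in pairs.most_common():
    print(pair, count)
```

The resulting pair counts can feed a heatmap or a network graph. They describe which themes appear together and say nothing about why.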

6) Validation and reliability

- Human review: Always double‑code a portion of the data manually. Compare your codes with machine suggestions and resolve differences in a consensus meeting.

- Log prompts and versions: Keep a record of the prompts used, model versions and dates. This audit trail helps you reproduce results and understand why certain themes surfaced.

- Sensitivity checks: Alter prompts slightly to see if the output remains stable. If small changes lead to different themes, be cautious.

- Ethics and consent: Maintain transparency about how you use participants’ data. Some participants may not want their words used to train models; honour withdrawal requests and check vendor policies.
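The audit trail and the sensitivity check above can both be kept lightweight. The sketch below assumes a hypothetical `make_log_line` helper that produces one JSON line per run, and uses Jaccard overlap between two theme sets as one possible stability measure; the model name, dates and themes are placeholders.

```python
import json


def make_log_line(prompt: str, model: str, run_date: str, themes: set[str]) -> str:
    """Serialise one analysis run as a JSON line for an append-only audit log."""
    record = {
        "date": run_date,
        "model": model,
        "prompt": prompt,
        "themes": sorted(themes),
    }
    return json.dumps(record, ensure_ascii=False)


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of themes common to both runs, out of all themes seen in either run."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Themes returned by two slightly different prompt wordings (made-up data).
themes_a = {"pricing confusion", "onboarding friction", "support delays"}
themes_b = {"pricing confusion", "onboarding friction", "missing integrations"}

line = make_log_line(
    prompt="Identify recurring themes in the following interview segments",
    model="example-model-v1",  # placeholder, not a real model name
    run_date="2026-01-15",
    themes=themes_a,
)
stability = jaccard(themes_a, themes_b)

print(line)
print(stability)  # 0.5
```

Appending each line to a `.jsonl` file gives a simple record of what was run and when. Exact string matching on theme names is strict, so a low overlap score is a prompt to read both outputs, since two runs may phrase the same theme differently.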

7) Tools and ecosystem

The ecosystem of AI‑assisted qualitative tools is evolving rapidly. Established platforms like Dovetail, Condens and EnjoyHQ now offer AI‑driven coding and synthesis features. At least one other tool markets itself as a virtual research assistant. Traditional QDA suites like NVivo and Atlas.ti have introduced AI add‑ons for auto‑coding and summarisation. Startups like Grain and Otter focus on real‑time transcription and summarisation for video calls. Decide whether to use cloud‑based tools or local models based on your data‑security needs.

Use cases and strategic implications

1) Large open‑ended surveys

Imagine a founder launches a new B2B SaaS product and collects thousands of open‑ended responses about pain points. Manual coding would take weeks. A hybrid approach allows the team to upload responses to an AI platform, generate initial clusters and then refine them. In our experience, this method surfaced patterns of confusion around onboarding steps that the team had not considered. Because the preliminary analysis was ready in hours, the team could run follow‑up experiments quickly.

2) Multi‑language user interviews

Standard Beagle’s story illustrates how AI qualitative research can handle interviews across languages. Their team processed twenty interviews—half in Spanish—using AI transcription and synthesis tools, verified the translations and then analysed the summaries. The result was on‑time delivery and actionable insights despite tight resources. The heart of the research—empathy and interpretation—remained human.

3) Social media and virtual ethnography

Product teams often monitor forums, app reviews or support tickets for new issues. Using large language models to cluster posts by sentiment and topic can flag new themes early. A caution: such data sources may include sarcasm, slang and coded language that models misread, so plan for human review. Keep track of data privacy rules; anonymise user names and strip metadata before analysis.

Published examples:

In the JMIR study, generative models achieved strong theme overlap with human coders. Another experiment by researchers at Pennsylvania State University showed that a structured prompt framework improved transparency and researcher trust in ChatGPT. However, the PLoS article warns that not all qualitative approaches should be automated and emphasises the need to protect reflexivity and context. These case studies show both the potential and the boundaries of the approach.

Strategic implications for startups:

For early‑stage companies, AI qualitative research offers a way to shorten the hypothesis‑validation loop. When teams can process user interviews in days instead of weeks, they can iterate on features faster. It also democratises access to insights; non‑research team members can run quick analyses and share results. However, over‑reliance on machine‑generated insights without human judgment risks misguided decisions. Build internal capability by training team members on prompt design, ethics and critical reading of AI outputs. Resist the temptation to outsource your understanding of users to a black box.

Future directions and best practices

Future trends

The next frontier for AI qualitative research includes tools that support co‑ethnography, where models suggest follow‑up questions in real time during interviews. Some platforms are experimenting with real‑time analytics that summarise live interviews and flag potential topics for deeper probing. Others work on domain‑specific models tuned to product or health contexts. Researchers are exploring ways to blend qualitative themes with quantitative predictive modelling, for example linking user stories to churn likelihood.

Ethics and governance are becoming pressing. As generative models make research faster, there’s a risk of methodolatry—using checklists and automated tools without reflecting on the underlying epistemology. Institutions are starting to write policies on consent, data retention and model training. When fine‑tuning domain‑specific models, guard against embedding biases from historical data.

Cheat sheet

- Prompt checklist: clearly state the task, provide context, define output structure, ask the model to flag ambiguity, iterate and refine.

- Human–machine split: let machines handle transcription, first‑pass coding and clustering. Reserve human effort for theme refinement, contextual interpretation and storytelling.

- Audit trails: record prompts, model versions and analysis decisions. This transparency aids replication and accountability.

- Validation heuristics: double‑code samples manually, conduct consensus meetings, adjust prompts and check stability.

- Data practices: anonymise transcripts, secure storage, confirm vendor compliance and honour participant withdrawal rights.

Open questions

Despite progress, many challenges remain. Bias and fairness of models are ongoing concerns. Interpretability of large language models is limited; we need better ways to understand why they group certain statements. Data privacy rules vary by region, and researchers must deal with them carefully. When models fail or mislead, teams need a fallback plan based on manual analysis. Philosophically, the tension between subjectivity and automation will continue to shape debates about the role of the researcher.

Conclusion

The rise of AI qualitative research is not about replacing human researchers. It’s about freeing them to focus on what matters: understanding people and telling compelling stories. Machine‑learning tools can process vast amounts of text, suggest patterns and save precious time, but they lack lived experience and context. Use them as partners rather than substitutes. Start small, design clear prompts, keep audit trails and stay curious about your own assumptions. When we combine human empathy with machine efficiency, we can build products that truly serve people.

FAQ

1) How is AI qualitative research used?

It assists researchers by transcribing interviews, tagging segments, clustering similar responses, detecting sentiment, suggesting themes and summarising findings. In hybrid setups, researchers review and refine these outputs.

2) Can ChatGPT do qualitative analysis?

ChatGPT can code, cluster and summarise textual data when prompted correctly. A structured prompt framework improves its transparency and user trust. However, it sometimes misinterprets context or fabricates details, so human validation is essential.

3) Will AI replace qualitative researchers?

No. Studies show that generative models match many human‑identified themes but still miss subtle meaning and require oversight. Human judgment, reflexivity and context remain central. AI tools function like assistants, not replacements.

4) Does NVivo use AI?

NVivo includes semi‑automated and AI‑driven auto‑coding tools that identify themes or sentiment. A recent chapter on NVivo and AI notes that while these tools accelerate coding, they rely on simple statistical associations and can't recognise sarcasm, slang or ambiguity. Researchers must remain critical of the suggestions.

5) How do I validate AI coding?

Always compare machine‑generated codes with human coding for a sample of your data. Log your prompts and model versions, conduct consensus meetings with your team and adjust prompts if results change dramatically. Use the machine outputs as a starting point, not an endpoint.
