AI User Research: Complete Guide (2026)

Discover how AI enhances user research, including data analysis, behavioral pattern recognition, and predictive insights.

Building features nobody needs is the quickest way to waste your runway. I’ve watched founders ship features based on hunches and then scramble when nobody uses them.

Research can feel like a luxury when you’re chasing product–market fit, yet guessing costs more. This is where AI user research helps. It isn’t magic: machine learning and automation handle tedious tasks so you can focus on talking to real people.

In this guide I’ll unpack what artificial intelligence can and can’t do for research, share practical methods you can adopt today, and explain why keeping users at the centre remains essential.

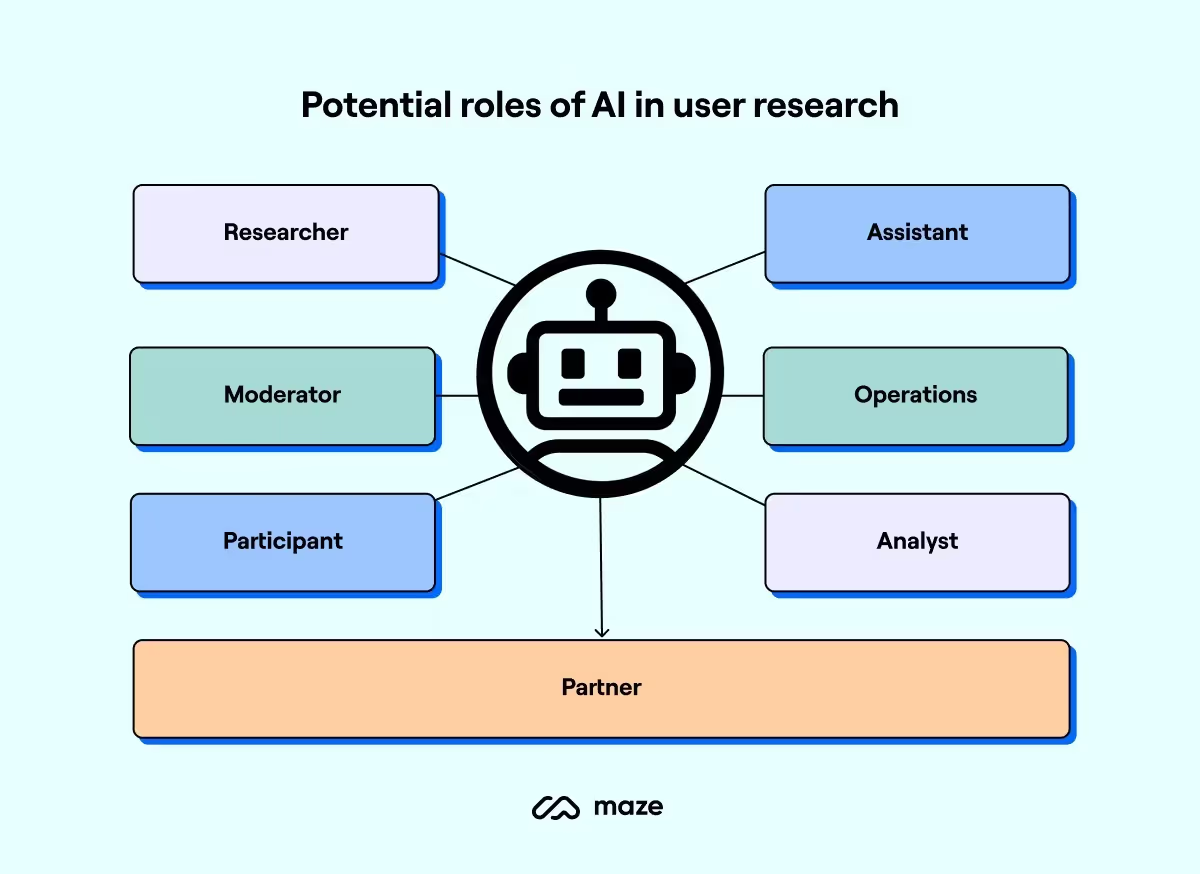

What is AI user research?

Traditional user research relies on interviews, surveys, usability tests and field studies to learn about people’s needs and behaviours. These methods produce valuable insights but they take time. Artificial intelligence can help by automating some of the slowest steps. In AI user research you still conduct interviews or run surveys, but large language models and other algorithms assist with summarising transcripts, grouping responses and even suggesting follow‑up questions. Maze’s 2025 report found that more than half of product teams surveyed already use such tools, mainly for analysing research data and transcription. When machines handle rote tasks, researchers can invest more of their energy in designing studies and engaging with participants.

It’s important to separate using artificial intelligence as a helper from outsourcing the entire job. Tools like ChatGPT can process text faster than any person, but they lack context and empathy. Good researchers design the study, engage with participants and decide what the findings mean. They use machines as an extra pair of hands rather than an oracle. For early‑stage teams with limited resources, this approach can lower the cost of entry. A founder who can’t hire a full‑time researcher might still run interviews and use a model to summarise them. A designer can run a survey with open‑ended questions and use a tool to spot themes quickly. The essential thing is to keep talking to real people.

Why use artificial intelligence in user research?

1) Speed is the first and most obvious advantage: Analysing qualitative data used to take days. In a 2024 interview, UX consultant Paul Boag said that machine‑assisted tools “made my job a lot easier” because they simplify research and offer new ways to gather and analyse data. He pointed out that sorting through hundreds of open‑ended survey responses can be intimidating, but a language model can surface common themes in seconds. In our own practice a transcript analysis tool cut the synthesis phase for a five‑interview study from several days to a single afternoon.

2) Scale is another benefit: When you build personas from a handful of interviews, the risk of bias is high. Algorithms can group feedback from hundreds of survey responses or support tickets and surface clusters that represent distinct user types. The Intive report on research in the era of artificial intelligence observes that machine learning can generate personas and experience maps based on data and predict user needs and behaviours. For small teams this means you can focus conversations with real customers on edge cases instead of reinventing the basics each time.

3) Automation also helps by collecting and analysing behaviour at scale: Research platforms record sessions, transcribe them and apply natural language processing to identify patterns in how people move through a product. This lets product managers review dozens of sessions without watching every minute of video. Tools can analyse heatmaps, click paths and user flows, revealing patterns that might be invisible in a handful of interviews.

4) Perhaps the most meaningful advantage is earlier insight: Maze’s research shows that teams deeply integrating research into their decisions achieve 2.7 times better outcomes. When you can see themes within hours instead of days, you can adjust prototypes, prioritise features and advocate for changes with data. The promise of AI user research lies not just in speed but in enabling faster, evidence‑based decisions.
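To make the scale point above concrete, here is a deliberately simple sketch of grouping open-ended survey responses by the words they share (Jaccard similarity). It is an assumption for illustration, not how Maze, Intive or any named tool works; production tools are more likely to use embeddings or topic models. The stopword list, the `group_responses` helper and the 0.2 threshold are all hypothetical choices.

```python
import re

# Hypothetical, minimal stopword list; a real study would use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "or", "to", "is", "it", "i", "of", "in",
             "on", "for", "my", "me", "too", "so", "was", "when", "with", "this", "that"}


def tokens(text: str) -> set[str]:
    """Lowercase a response and keep its non-stopword words."""
    words = re.findall(r"[a-z']+", text.lower())
    return {w for w in words if w not in STOPWORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two responses have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def group_responses(responses: list[str], threshold: float = 0.2) -> list[list[str]]:
    """Greedy single pass: each response joins the first group whose seed
    response is similar enough; otherwise it starts a new group."""
    groups: list[tuple[set[str], list[str]]] = []
    for response in responses:
        words = tokens(response)
        for seed_words, members in groups:
            if jaccard(words, seed_words) >= threshold:
                members.append(response)
                break
        else:
            groups.append((words, [response]))
    return [members for _, members in groups]


responses = [
    "Checkout is too slow on mobile",
    "The mobile checkout is slow",
    "I can't find the export button",
    "Where is the export button?",
]
for group in group_responses(responses):
    print(group)
```

The greedy pass keeps the example short and order-dependent; treat the groups as a starting point for a human to name and check, not as finished themes.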

Applications of artificial intelligence in user research

Artificial intelligence is starting to show up throughout the research workflow. It can help write better surveys by suggesting question variations, checking for leading language and generating follow‑up questions based on previous answers.

During usability tests, machines can analyse screen recordings and logs to flag where users hesitate and surface common error paths. Automated video analysis helps researchers spend their limited time watching the most informative clips rather than every second of footage.

Pattern detection at scale used to be reserved for organisations with data science teams. Now even a single product manager can cluster user sessions, identify drop‑off points or compare cohorts. Maze’s survey found that teams use artificial intelligence to analyse vast amounts of data, identify patterns and generate insights at scale.
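Identifying drop-off points often reduces to simple counting once sessions are logged as ordered lists of events. The sketch below assumes that log shape; the funnel step names and the `drop_off` helper are hypothetical, and real analytics platforms define funnels with their own rules (time windows, repeated steps, cohorts).

```python
from collections import Counter

# Hypothetical funnel for a sign-up flow; replace with your own event names.
FUNNEL = ["landing", "signup_form", "email_verified", "first_project"]


def drop_off(sessions: dict[str, list[str]], funnel: list[str]) -> list[tuple[str, int, float]]:
    """For each funnel step, count sessions that reached it in order, and the
    share of the previous step's sessions lost before reaching it."""
    reached = Counter()
    for events in sessions.values():
        step = 0
        for event in events:
            if step < len(funnel) and event == funnel[step]:
                step += 1
        # A session that got to step k counts toward every step up to k.
        for i in range(step):
            reached[funnel[i]] += 1

    report = []
    previous = None
    for name in funnel:
        count = reached[name]
        lost = 0.0 if not previous else 1 - count / previous
        report.append((name, count, round(lost, 2)))
        previous = count
    return report


sessions = {
    "s1": ["landing", "signup_form", "email_verified", "first_project"],
    "s2": ["landing", "signup_form", "email_verified"],
    "s3": ["landing", "signup_form"],
    "s4": ["landing", "pricing"],
}
for step, count, lost in drop_off(sessions, FUNNEL):
    print(f"{step}: {count} sessions, {lost:.0%} lost")
```

The numbers tell you where people leave, not why; that is the cue to watch those sessions or talk to those users.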

Algorithm‑driven persona synthesis can be helpful early in a project when you need a working model of your audience. However, as the Nielsen Norman Group explains, synthetic personas are “fake users generated by machines”. They should supplement, not replace, insights from talking to real people.

Some teams experiment with using agents to simulate interactions within a product. This can provide quick heuristics for concept testing, but we always validate those findings with real customers because models behave differently from humans. Each of these applications shows how automation blends with human judgment.

Methods and tools

One of the most tedious parts of research is turning hours of interviews into digestible insights. LexisNexis designer Tania Ostanina used an internal large language model to process eight interviews, each about an hour long. Normally synthesis would take about a week. By uploading transcripts and iteratively refining the model’s output with a set of prepared prompts, she reduced the processing time to just over an hour per interview and estimated a 20% time saving overall.

Her method involved a “prompt deck”: templates for tasks like summarising, classifying or extracting quotes. Grouping prompts into categories helped maintain control over the model. We build libraries of tested prompts for tasks like listing pain points or surfacing quotes and always keep a human in the loop.
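A prompt deck like the one described above can be as simple as a dictionary of templates grouped by category. This is a minimal sketch assuming Python’s standard `string.Template`; the categories, template wording and the `build_prompt` helper are hypothetical (not Ostanina’s actual prompts), and sending the prompt to a model is left out.

```python
from string import Template

# Hypothetical prompt deck: reusable templates grouped by task category.
PROMPT_DECK = {
    "summarise": {
        "themes": Template(
            "Summarise the main themes in this $study_type transcript in at most "
            "$max_items bullet points. Quote the participant for each theme.\n\n$transcript"
        ),
    },
    "extract": {
        "pain_points": Template(
            "List every pain point the participant mentions in this transcript. "
            "Use only what is said; write 'none found' if there are none.\n\n$transcript"
        ),
        "quotes": Template(
            "Extract up to $max_items verbatim quotes about $topic from this transcript.\n\n$transcript"
        ),
    },
}


def build_prompt(category: str, task: str, **values: object) -> str:
    """Fill a template. Template.substitute raises KeyError if a value is
    missing, which catches incomplete prompts before they reach a model."""
    return PROMPT_DECK[category][task].substitute(**values)


prompt = build_prompt(
    "extract", "quotes",
    max_items=5, topic="onboarding",
    transcript="P1: The setup wizard confused me.",
)
print(prompt)
```

Keeping the wording in one shared place makes it easier to version prompts that tested well and reuse them across studies.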

Working with large language models is an iterative process. The first outputs rarely capture the detail you need, so plan to refine your prompts and adjust based on what you learn. Having a clear goal for each prompt and experimenting with structure helps get better results.

You don’t need to build your own models. Many off‑the‑shelf platforms suited to beginners offer transcription, sentiment analysis and theme detection. For more control, some organisations build custom workflows on APIs from providers like OpenAI or Anthropic to refine prompts or integrate research into internal dashboards.

Start small. Upload a transcript to a model and ask it to summarise themes or use artificial intelligence features built into your existing tools. Avoid heavy integration until you’ve proved the value. Small experiments teach you more than any article.

Challenges and limitations

- False confidence in model output: Language models generate what sounds plausible, not necessarily what’s true. Synthetic testing can create the illusion of customer experiences that never happened.

- Overly positive personas: Synthetic participants often claim perfect success in tasks, unlike real users who report challenges or drop-offs. Relying on such data can mislead product decisions.

- Lack of real feedback: Without human input, research risks being based on fiction rather than lived experience.

- Privacy and data ethics: Automated tools handling recordings or transcripts raise issues around consent, data storage, and potential misuse for model training. Always anonymise data and secure explicit permission before using external services.

- Accuracy concerns: Models can misclassify, invent patterns, or miss emotional context. They can summarise but not truly interpret tone or motivation.

- Need for human oversight: Only humans can design studies, interpret findings, and connect them to real-world insights.

Best practices for early‑stage teams

- Combine human insight with machine efficiency: Use automation for transcriptions and summaries, then follow up with real interviews to probe deeper.

- Validate all automated outputs: Read the source data behind summaries, verify findings through user calls, and cross-check across multiple data points.

- Start small: Begin with a limited use case (like summarising interviews), measure how well it works, and scale gradually.

- Focus on prompt design: Refine prompts to improve accuracy and share successful examples within your team.

- Build shared research skills: Encourage product managers and designers—not just researchers—to understand prompt design and validation. This makes research a team effort.

- Protect participant data: Remove personal identifiers and review the privacy policies of all tools you use.

- Prioritise trust: Transparency and respect for user data are at the core of responsible research.
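Removing personal identifiers can start with a scripted first pass before transcripts go to any external service. A minimal sketch, assuming you have a list of participant names; the regex patterns and the `redact` helper are hypothetical and will miss addresses, employers and other indirect identifiers, so a person should still review every transcript.

```python
import re

# Hypothetical first-pass patterns; they are not exhaustive.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str, participant_names: list[str]) -> str:
    """Replace known participant names with [P1], [P2], ... and pattern
    matches with labelled placeholders."""
    for i, name in enumerate(participant_names, start=1):
        text = re.sub(rf"\b{re.escape(name)}\b", f"[P{i}]", text, flags=re.IGNORECASE)
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Hi, I'm Dana Lee. Email dana.lee@example.com or call +44 20 7946 0958.", ["Dana Lee"]))
```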

The future of artificial intelligence in user research

Adoption is already rising. Maze’s survey found that 58% of respondents use machine‑assisted tools, up sharply from the previous year. As models improve they will handle more complex tasks like summarising video clips, generating scenario‑based surveys and predicting drop‑off points. However, experts caution against expecting them to replace researchers. Nielsen Norman Group emphasises that artificial intelligence–generated profiles should complement, not substitute, real research.

I expect the skill set on research teams to evolve. Competence in statistics and human‑centered design will still matter, but so will the ability to write good prompts, validate outputs and understand biases. Tools will democratise access to insights, enabling product managers and designers to run studies without a dedicated researcher. Yet the most meaningful work will still involve talking to people, observing their behaviour and translating their needs into product decisions. Keeping humans in the loop ensures AI user research augments rather than erodes our connection to the people we serve.

Conclusion

Artificial intelligence is reshaping how we gather and make sense of user insights. When used thoughtfully, AI user research can speed up analysis, reveal patterns you might miss and free you to spend more time with real customers. Reports from Maze show that teams using these tools achieve higher efficiency and faster turnaround. Practitioners like Paul Boag and Tania Ostanina share concrete examples of time savings. At the same time, critics remind us that synthetic users are no substitute for human empathy.

My advice is simple: start small, keep a human in the loop and view artificial intelligence as a helper, not a replacement. The goal of user research hasn’t changed: understand people so you can build products they value. Machines help us get there faster, but they don’t absolve us from listening. By combining the best of both, we can make informed choices faster without losing our connection to the people we serve. So start experimenting today.

FAQs

1) How is artificial intelligence used in user research?

Machines speed up analysis and broaden reach. Examples include automated transcription and theme detection for interviews, clustering survey responses into personas and analysing behaviour patterns in usability tests.

2) Will UX research be replaced by machines?

No. Synthetic users are fake and can’t replace the depth and empathy gained from speaking with real people. Smashing Magazine warns that relying solely on machine‑generated insights creates an illusion of user experience.

3) How do I get into machine‑assisted research?

Learn the fundamentals of UX, then experiment with machine‑assisted tools. Practise writing prompts for large language models, try automated transcription and analysis platforms and learn basic data analysis. Tania Ostanina found that refining prompts was crucial to achieving real time savings.

4) Who are notable researchers in artificial intelligence?

Yann LeCun, Geoffrey Hinton and Yoshua Bengio shared the 2018 Turing Award for advances in deep learning. Fei‑Fei Li, co‑director of Stanford’s Human‑Centered Artificial Intelligence Institute, helped launch modern image recognition and is often called the “godmother of artificial intelligence”. Their work laid the groundwork for the tools we use in AI user research today.
