AI for UX Research: Complete Guide (2026)

Explore how AI enhances UX research through automated insights, user behavior analysis, and predictive analytics.

Speed and empathy are in constant tension on product teams. As a founder or PM at an early‑stage startup, you feel pressure to ship faster while still staying true to your users. As a design leader, you want rigorous evidence without bogging the team down. The explosion of generative AI and data‑driven tools promises to ease that tension, yet the hype can be dizzying.

In this guide I’ll share how we at Parallel have adopted AI for UX research pragmatically. You’ll find methods for planning, recruiting, analysis, and communication, along with tools, best practices, and pitfalls.

Why AI + UX research?

User‑research tasks—recruiting participants, moderating sessions, transcribing recordings, coding notes—are labour‑intensive. Meanwhile, digital products generate massive clickstreams, feedback and survey responses. The trade‑off is often between speed and depth. Recent research emphasises that AI can help researchers process large datasets quickly, reduce bias and provide real‑time insights. For example, the Innerview guide notes that AI helps sift through overwhelming amounts of user data and offers instant analysis.

What “AI for UX research” covers

When I say AI for UX research, I’m referring to a broad spectrum of technologies:

- Generative models that produce text, images or code. These can draft research questions, summary reports, and even wireframes.

- Natural language processing (NLP) to transcribe, clean and classify interview data. NLP can categorise themes and detect sentiment.

- Predictive models that analyse behavioural data to forecast churn, satisfaction, or conversion.

- Computer vision and emotion analysis to read facial expressions, gaze patterns or gestures during usability tests.

- Agent‑based simulations and synthetic users that simulate interactions with prototypes for early design feedback.

These technologies shine in planning, transcription, clustering and surface‑level pattern detection, but they remain weak at deep interpretive sense‑making. The UXR Guild’s quick guide warns that AI cannot determine appropriate sample sizes or correctly interpret nuanced sentiment without human oversight. Hallucination, bias and loss of context are real risks.

State of adoption

Adoption is accelerating but still uneven. A HubSpot survey cited by UXmatters reported that about 49% of UX designers were using AI to experiment with new design strategies or elements in late 2024. The same article notes that skepticism remains, yet practitioners appreciate AI’s ability to accelerate analysis and pattern recognition. Another study highlighted in the Innerview guide points out that AI can reduce the cost of research by automating time‑consuming tasks. In our conversations on design forums and with early‑stage teams, we see AI used most often for transcription, summarisation and initial thematic clustering, while the final sense‑making and design recommendations remain firmly human.

The AI‑augmented UX research workflow

Below is a practical walkthrough of the research lifecycle, highlighting where AI can meaningfully plug in.

Step #1: Planning and research design

Generative models can help draft research questions, hypotheses, screeners and discussion guides. The UXR Guild guide lists several areas where AI can offer “breadth” suggestions—generating additional possible questions or rephrasing close‑ended items into open‑ended ones—but cautions that AI cannot prioritise research questions or estimate risk and importance without human input. Based on our experience:

- Break down prompts. Don’t ask the model to “write a full research plan”; instead, provide context (product stage, audience, constraints) and ask for a few alternate questions or hypotheses.

- Inject domain knowledge. Provide the AI with background on your users and previous findings.

- Vet outputs manually. Check suggestions for bias, leading wording, or misalignment with your goals before using them.

- Keep critical decisions human. The guide emphasises that AI cannot determine which research methods are appropriate or match sample sizes to statistical tests. Treat AI as a brainstorming partner, not a strategist.
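To make "break down prompts" and "inject domain knowledge" concrete, here is a minimal sketch of a prompt template in Python. The `StudyContext` fields and the `build_question_prompt` helper are hypothetical names for illustration, not part of any tool, and the example values are made up; the resulting string can be pasted into whichever model you use.

```python
from dataclasses import dataclass


@dataclass
class StudyContext:
    """Hypothetical container for context a model needs but cannot infer."""
    product_stage: str   # e.g. pre-launch, growth
    audience: str        # who the participants are
    constraints: str     # time, budget, method limits
    prior_findings: str  # domain knowledge from earlier research


def build_question_prompt(ctx: StudyContext, goal: str, n: int = 3) -> str:
    """Compose one narrow, context-rich request instead of 'write a full research plan'."""
    return (
        f"Product stage: {ctx.product_stage}\n"
        f"Audience: {ctx.audience}\n"
        f"Constraints: {ctx.constraints}\n"
        f"Previous findings: {ctx.prior_findings}\n"
        f"Research goal: {goal}\n"
        f"Suggest {n} alternative open-ended interview questions. "
        "Avoid leading wording. Do not choose methods or sample sizes."
    )


# Made-up example values
ctx = StudyContext(
    product_stage="pre-launch beta",
    audience="finance managers at small companies",
    constraints="five 30-minute remote interviews",
    prior_findings="users export data to spreadsheets before month-end close",
)
prompt = build_question_prompt(ctx, goal="understand month-end reporting pain points")
print(prompt)
```

Keeping the final request narrow and explicitly leaving methods and sample sizes out of it mirrors the division of labour above: the model brainstorms, the researcher decides.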

Step #2: Recruitment and participant matching

Platform providers like User Interviews and UserTesting have begun integrating predictive models to help match participants to studies based on demographic and behavioural attributes. AI can flag potential fraud, suggest micro‑segments and even recommend recruitment channels. However, this uses personal data and thus raises privacy and consent concerns. We always inform participants when an algorithm helps select them and avoid black‑box models that might introduce bias. When building our own panels, we test AI‑driven screening questions by manually reviewing early matches to ensure the model’s predictions align with our criteria.
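One lightweight way to run that manual check, sketched under the assumption that the screener emits a simple accept/reject call per applicant: have a researcher review the same early applicants independently and measure how often the two agree. The function name, data shape and applicant IDs are illustrative.

```python
def screening_agreement(model_accepts: dict[str, bool],
                        reviewer_accepts: dict[str, bool]) -> dict[str, float]:
    """Compare an AI screener's accept/reject calls with a researcher's
    manual review of the same applicants."""
    shared = model_accepts.keys() & reviewer_accepts.keys()
    if not shared:
        raise ValueError("no applicants were reviewed by both")
    agreements = sum(model_accepts[a] == reviewer_accepts[a] for a in shared)
    # Accepted by the model but rejected by the researcher: the costly error
    false_accepts = sum(model_accepts[a] and not reviewer_accepts[a] for a in shared)
    return {
        "agreement": agreements / len(shared),
        "false_accept_rate": false_accepts / len(shared),
    }


# Hypothetical early applicants (True = accept into the study)
model = {"p1": True, "p2": True, "p3": False, "p4": True}
reviewer = {"p1": True, "p2": False, "p3": False, "p4": True}
print(screening_agreement(model, reviewer))
# {'agreement': 0.75, 'false_accept_rate': 0.25}
```

Low agreement, or any false accepts, is a signal to revisit the screener criteria before letting it run unattended.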

Step #3: Data collection, moderation and testing

AI brings efficiency to data capture. Tools with built‑in transcription convert interviews and usability sessions to text in real time. The Innerview guide highlights how NLP can categorise interview responses and organise qualitative data, saving researchers countless hours. We’ve seen success using Otter.ai and Dovetail to create nearly instant transcripts, freeing us to focus on rapport and follow‑up questions.

AI‑moderated sessions—bots that conduct interviews or usability tests—are emerging but still experimental. Synthetic users, such as LLM‑powered agents that click through prototypes, can provide early feedback on flows. In a 2025 pilot, one team we worked with used a simulated agent to test an onboarding flow, quickly uncovering a misaligned call‑to‑action before recruiting real participants. However, synthetic users cannot feel frustration or delight; they are best used for quick smoke tests. Real‑time nudges during sessions (e.g., suggested probes when the participant hesitates) can be helpful but should not distract the moderator.
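An LLM‑driven agent is hard to show in a few lines, but the structural part of a smoke test can be sketched deterministically. The example below is a rule‑based stand‑in, not an LLM: it assumes the prototype can be exported as a hypothetical map from each screen to the screens its calls to action lead to, then walks that map breadth‑first to find dead ends and screens a user can never reach. Like a synthetic user, it says nothing about frustration or delight.

```python
from collections import deque


def smoke_test_flow(screens: dict[str, list[str]], start: str, goal: str) -> dict:
    """Breadth-first walk over a prototype's screen graph.

    Reports whether the goal screen is reachable, which reachable screens are
    dead ends (no outgoing links, other than the goal), and which screens can
    never be reached from the start.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        screen = queue.popleft()
        for nxt in screens.get(screen, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return {
        "goal_reachable": goal in seen,
        "dead_ends": sorted(s for s in seen if s != goal and not screens.get(s)),
        "unreachable": sorted(set(screens) - seen),
    }


# Hypothetical onboarding prototype: screen -> screens its CTAs lead to
onboarding = {
    "welcome": ["sign_up"],
    "sign_up": ["verify_email"],
    "verify_email": ["help"],   # primary CTA wired to help, not profile setup
    "help": [],
    "profile_setup": ["dashboard"],
    "dashboard": [],
}
print(smoke_test_flow(onboarding, start="welcome", goal="dashboard"))
# {'goal_reachable': False, 'dead_ends': ['help'], 'unreachable': ['dashboard', 'profile_setup']}
```

Here the misrouted call‑to‑action on `verify_email` makes the dashboard unreachable, the kind of issue worth fixing before recruiting real participants.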

Step #4: Data processing and analysis

This is where AI shines. According to the UXR Guild guide, AI can generate word‑frequency counts, propose themes and group similar responses for manual tagging. The Innerview article notes that AI excels at processing and analysing large datasets, identifying segments, predicting behaviour and highlighting bottlenecks. Here’s how we incorporate AI:

- Transcription cleaning and anonymisation. AI can remove personally identifiable information and correct typos.

- Qualitative coding and clustering. Use topic‑modelling or clustering algorithms to propose initial themes. Always audit and refine them; AI may miscategorise nuanced sentiment.

- Sentiment and emotion analysis. AI can detect tone and emotions in text or voice. We use this as a high‑level signal rather than definitive truth.

- Behavioural data analysis. Predictive models can map funnels and detect drop‑offs and anomalies. For example, we built a logistic regression model to predict churn in a SaaS product based on clickstream features; this flagged high‑risk segments earlier.

- Survey and feedback synthesis. AI can summarise open‑ended responses, cluster topics and generate summarised narratives, accelerating report writing.

- Cross‑modal synthesis. Some platforms combine qualitative, quantitative and behavioural data into dashboards. We still cross‑check correlations and avoid drawing causal conclusions without triangulation.
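For the anonymisation step, a regex pass can strip the most mechanical identifiers before transcripts reach an AI tool. This is a minimal sketch with illustrative patterns for emails and phone numbers only; names, addresses and account IDs need more than regex (for example, a named‑entity model) plus a human check, and the phone pattern will also match some long digit strings that are not phone numbers.

```python
import re

# Illustrative patterns only: emails and phone-like digit runs.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each PII match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


line = "P3: you can reach me at jane.doe@example.com or +1 (555) 010-4477 after 5pm."
print(redact(line))
# P3: you can reach me at [EMAIL] or [PHONE] after 5pm.
```

Typed placeholders keep the transcript readable for coding ("the participant offered a phone number") without exposing the value itself.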

Step #5: Reporting, communication and decision‑making

Generative AI can produce first drafts of slide decks and executive summaries. In our team, we feed transcripts and coded themes into GPT‑4 to create narrative outlines, but we always edit the tone and add the context the model lacks. AI also helps tailor messages for different stakeholders: product managers may need actionable recommendations, while executives need strategic implications. Another useful application is generating backlog items or user stories directly from insights. However, transparency matters: we label AI‑generated content and keep an audit trail so that others can retrace the logic.
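A minimal sketch of the labelling and audit‑trail idea, assuming each report section is stored as a small record. The `ReportSection` class and its fields are hypothetical; the point is that AI provenance, and every human edit along with the text it replaced, travel with the content.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ReportSection:
    """A block of report text plus its provenance (hypothetical structure)."""
    text: str
    source: str                   # "ai_draft" or "human"
    model: Optional[str] = None   # model that produced the draft, if any
    # Each entry: (UTC timestamp, editor, note, text before the edit)
    history: list = field(default_factory=list)

    def edit(self, new_text: str, editor: str, note: str) -> None:
        """Apply a human edit, keeping the replaced text in the audit trail."""
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        self.history.append((stamp, editor, note, self.text))
        self.text = new_text

    def label(self) -> str:
        """Reader-facing label so AI-drafted text is never passed off as purely human."""
        if self.source == "ai_draft":
            suffix = ", human-edited" if self.history else ""
            return f"[AI-drafted ({self.model}){suffix}]"
        return "[Human-written]"


section = ReportSection("Users find setup hard.", source="ai_draft", model="GPT-4")
section.edit("Participants stalled at the integration step of setup.",
             editor="researcher", note="grounded in session transcripts")
print(section.label())   # [AI-drafted (GPT-4), human-edited]
```

Storing the replaced text, not just a timestamp, is what lets a colleague retrace how an AI draft turned into the final claim.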

Step #6: Post‑research: repository and scaling

Insight repositories are vital for scaling research. AI can automatically tag, classify and link artifacts. In our repository (built on Notion + custom scripts), we use embeddings to surface related past studies when we upload new notes. Recommendation systems can suggest relevant research to designers based on upcoming features. We’re exploring “insight agents” that proactively suggest follow‑up studies when certain patterns recur. Quality control is essential: we periodically audit tags and ensure that AI‑generated links make sense.
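The retrieval step behind "surface related past studies" is a similarity ranking. In the sketch below, simple word‑count vectors stand in for real embeddings (an assumption made so the example runs without an embedding model); with an embedding API you would replace `vectorize` with the model call and keep the same cosine ranking. Repository titles and summaries are made up.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding: lower-cased word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def related_studies(note: str, repository: dict[str, str], top_k: int = 2) -> list[tuple[str, float]]:
    """Rank past studies by similarity to a newly uploaded note."""
    query = vectorize(note)
    scored = [(title, cosine(query, vectorize(summary))) for title, summary in repository.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


# Hypothetical repository entries: study title -> short summary
repository = {
    "Onboarding interviews": "users confused by setup steps and missing integrations during onboarding",
    "Billing survey": "invoices pricing plans and payment failures",
    "Dashboard usability test": "users could not find export on the reporting dashboard",
}
for title, score in related_studies("new onboarding notes: setup steps confusing, integrations missing", repository):
    print(f"{score:.2f}  {title}")
```

Word counts treat "confused" and "confusing" as unrelated; handling near‑synonyms like these is exactly what embeddings add.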

Key themes and deep dives

Below are four domains where AI is particularly influential, along with potential benefits, methods, risks and use cases.

1) User‑experience optimisation

What AI brings: Predictive models can surface usability bottlenecks, suggest design changes and prioritise features. UXmatters explains that AI‑driven predictive analysis helps teams understand user behaviours and make data‑driven decisions. By analysing conversion rates and engagement patterns, AI can recommend which flows to refine or which content to personalise.

Methods/tools: Behaviour analytics platforms (Amplitude, Mixpanel), predictive modelling in Python/R, AI‑driven A/B testing tools.

Risks/mitigations: Overfitting to historical data can entrench existing biases. To mitigate, combine AI insights with qualitative feedback; treat predictions as hypotheses to test.

Example: A fintech startup noticed a 40 % drop‑off during onboarding. Using AI‑driven funnel analysis, they discovered that a mandatory ID verification step caused friction. They redesigned the flow to provide clear copy and optional deferment, improving completion by 25 %.
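Whatever tool does the flagging, funnel analysis rests on step‑to‑step conversion. A minimal sketch; the step names and counts are hypothetical and are not the startup's data.

```python
def funnel_dropoff(steps: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Share of users lost between each consecutive pair of funnel steps."""
    report = []
    for (prev_name, prev_n), (name, n) in zip(steps, steps[1:]):
        lost = 1 - n / prev_n if prev_n else 0.0
        report.append((f"{prev_name} -> {name}", round(lost, 3)))
    return report


# Hypothetical counts, for illustration only
steps = [("start", 1000), ("profile", 900), ("id_verification", 540), ("done", 500)]
for transition, lost in funnel_dropoff(steps):
    print(f"{transition}: {lost:.0%} lost")
```

The largest step‑to‑step loss shows where to look; interviews or session recordings are still needed to learn why users leave there.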

2) Behaviour analysis and clickstream analysis

What AI brings: AI can parse event logs, sequence user actions, detect hidden flows and correlate behaviours with outcomes. The Innerview guide emphasises that machine learning can sift through millions of data points to identify segments and highlight areas for improvement.

Methods/tools: Sequence clustering, Markov models, anomaly detection, time‑series analysis.

Risks/mitigations: Correlation isn’t causation; cross‑check with qualitative observations. Ensure data privacy, especially when combining datasets.

Example: We analysed clickstream data from a learning platform using hidden Markov models. The model suggested that users who skipped the tutorial were twice as likely to abandon within two sessions. This insight prompted us to redesign the tutorial as an opt‑in mini‑game, reducing early churn.
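The example above used hidden Markov models. As a simpler, fully observed stand‑in, the sketch below estimates a first‑order Markov chain from hypothetical event sequences: P(next | current) = count(current → next) / count(current → anything). An HMM adds latent states (for example "engaged" versus "at risk") fitted with an algorithm such as Baum‑Welch, which this sketch does not attempt.

```python
from collections import Counter, defaultdict


def transition_probabilities(sessions: list[list[str]]) -> dict[str, dict[str, float]]:
    """Estimate first-order Markov transitions P(next | current) by counting
    consecutive event pairs and normalising each row."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for events in sessions:
        for current, nxt in zip(events, events[1:]):
            counts[current][nxt] += 1
    probs = {}
    for state, row in counts.items():
        total = sum(row.values())
        probs[state] = {nxt: n / total for nxt, n in row.items()}
    return probs


# Hypothetical sessions; "exit" marks a session that ended without returning
sessions = [
    ["home", "tutorial", "lesson", "lesson"],
    ["home", "skip_tutorial", "lesson", "exit"],
    ["home", "skip_tutorial", "exit"],
    ["home", "tutorial", "lesson", "exit"],
]
probs = transition_probabilities(sessions)
print(probs["skip_tutorial"])  # {'lesson': 0.5, 'exit': 0.5}
```

With real logs you would compare, say, how often `exit` follows `skip_tutorial` versus `tutorial`, then check any difference with users before redesigning.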

3) Data‑driven design and personalisation

What AI brings: AI can suggest interface variants tailored to segments, enabling adaptive experiences. The IJRPR paper notes that AI creates personalised experiences by adjusting recommendations, interface layouts and text suggestions.

Methods/tools: Recommendation engines, reinforcement‑learning bandits, dynamic UI frameworks.

Risks/mitigations: Cold‑start issues for new users, fairness concerns when recommendations skew towards certain demographics. Mitigate by blending AI with rule‑based defaults and offering opt‑outs.

Example: A health‑app team used a contextual bandit algorithm to customise dashboard widgets based on predicted interests. Early trials showed a 15 % increase in daily active use but surfaced equity concerns—the algorithm recommended high‑intensity workouts to users with limited mobility. Adding guardrails and user‑controlled settings resolved this.
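A minimal sketch of a contextual bandit with a guardrail, assuming discrete contexts (such as a self‑reported mobility level) and an engagement reward between 0 and 1. This is a per‑context epsilon‑greedy policy, one of the simplest contextual bandits, and not necessarily the algorithm the team used; the class, widget and context names are hypothetical. The guardrail removes blocked widgets before the bandit chooses, so exploration can never surface them.

```python
import random
from collections import defaultdict


class GuardedEpsilonGreedy:
    """Per-context epsilon-greedy bandit with a hard guardrail.

    Each discrete context keeps its own running mean reward per widget.
    Widgets blocked for a context are removed before any choice is made.
    """

    def __init__(self, widgets, epsilon=0.1, blocked=None, seed=None):
        self.widgets = list(widgets)
        self.epsilon = epsilon
        self.blocked = blocked or {}            # context -> widgets never to show
        self.rng = random.Random(seed)
        self.counts = defaultdict(lambda: defaultdict(int))
        self.means = defaultdict(lambda: defaultdict(float))

    def allowed(self, context):
        return [w for w in self.widgets if w not in self.blocked.get(context, set())]

    def choose(self, context):
        options = self.allowed(context)
        if self.rng.random() < self.epsilon:
            return self.rng.choice(options)     # explore among allowed widgets
        return max(options, key=lambda w: self.means[context][w])  # exploit

    def update(self, context, widget, reward):
        """Incremental mean: m_n = m_(n-1) + (reward - m_(n-1)) / n."""
        self.counts[context][widget] += 1
        n = self.counts[context][widget]
        self.means[context][widget] += (reward - self.means[context][widget]) / n


bandit = GuardedEpsilonGreedy(
    ["hiit_workout", "gentle_stretching", "sleep_summary"],
    blocked={"limited_mobility": {"hiit_workout"}},
    seed=7,
)
widget = bandit.choose("limited_mobility")   # never "hiit_workout"
bandit.update("limited_mobility", widget, reward=1.0)
```

Per‑context epsilon‑greedy learns nothing across contexts; feature‑based methods such as LinUCB generalise between similar users at the cost of more machinery, but the guardrail belongs outside the learner either way.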

4) Customer feedback and survey analysis

What AI brings: NLP can categorise sentiment, extract themes and summarise open‑ended responses. The UXR Guild guide lists capabilities such as generating word‑frequency counts and clustering similar responses. Innerview highlights that AI helps organise qualitative data and ensures valuable insights aren’t overlooked.

Methods/tools: Topic modelling (LDA), zero‑shot classification, sentiment analysis, summarisation models.

Risks/mitigations: Models may misclassify slang or sarcasm. Always review clusters and refine training data.

Example: For a B2B SaaS platform, we processed 500 NPS comments with a zero‑shot classifier, grouping feedback into themes like “onboarding complexity” and “reporting features.” Human review corrected misclassifications (e.g., sarcasm in “love the bugs!”). The resulting themes guided Q2 road‑map priorities.
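A sketch of how the human‑review step can be organised, assuming the classifier returns one theme and a confidence score per comment (the Hugging Face `zero-shot-classification` pipeline, for instance, returns candidate labels with scores). Every low‑confidence prediction goes to a researcher, and a random sample of confident ones is spot‑checked too, because sarcasm is often classified confidently and wrongly. The `Prediction` type, thresholds and comments are illustrative.

```python
import math
import random
from typing import NamedTuple


class Prediction(NamedTuple):
    comment: str
    theme: str
    score: float   # classifier confidence for the chosen theme, 0 to 1


def review_queue(preds: list[Prediction], threshold: float = 0.7,
                 spot_check: float = 0.1, seed: int = 0) -> list[Prediction]:
    """All low-confidence predictions, plus a random sample of confident ones."""
    low = [p for p in preds if p.score < threshold]
    high = [p for p in preds if p.score >= threshold]
    rng = random.Random(seed)
    sample = rng.sample(high, math.ceil(len(high) * spot_check))
    return low + sample


# Hypothetical classifier output
preds = [
    Prediction("Setup took our admins two weeks", "onboarding complexity", 0.93),
    Prediction("Can't schedule reports to email", "reporting features", 0.88),
    Prediction("love the bugs!", "praise", 0.91),          # sarcasm, confidently wrong
    Prediction("Pricing page is unclear on seats", "reporting features", 0.41),
]
for p in review_queue(preds):
    print(p.score, p.theme, "|", p.comment)
```

A 10 % spot‑check may miss the sarcastic comment in a batch this small; raise `spot_check` until reviewers stop finding confident errors.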

Tools and platforms

The ecosystem of AI‑enabled UX research tools is growing rapidly. Below is a curated overview by function. Tools marked with an asterisk are ones we’ve used.

From our experience, domain‑specific tools such as Dovetail or Condens integrate well with existing design stacks and allow manual override, which is critical. When evaluating, consider the tool’s ability to integrate with your current workflows, transparency of its models, cost structure, and support for human oversight. The UXTweak article notes that AI tools enhance data processing and enable personalised segmentation, but human judgment remains vital.

Best practices and workflow guidelines

Working effectively with AI for UX research requires more than just picking a tool. Below are principles we follow at Parallel.

- Human‑in‑the‑loop. Always review AI outputs. Use them as drafts or hypotheses rather than final answers. The UXR Guild emphasises that AI cannot accurately prioritise research questions or determine appropriate methods.

- Prompt engineering. Provide context and constraints in your prompts. For example, specify the user segment, product maturity and research goal. Break big tasks into smaller, modular prompts.

- Versioning and transparency. Keep an audit trail of AI‑generated and human‑edited content. Label what is machine‑produced.

- Ethical guardrails. Anonymise data, obtain informed consent for recording and clearly communicate when AI is involved. Audit models for bias and fairness.

- Methodological rigor. Combine qualitative and quantitative data; triangulate across multiple sources. Don’t let AI replace user empathy.

- Team upskilling. Train researchers and designers in AI literacy. Teach them to critique outputs and understand limitations.

- Change management. Expect workflow shifts—less time spent on transcription and more on strategic synthesis. Involve stakeholders early to build trust.

Challenges, limitations and risks

Despite the excitement, there are serious risks that we need to anticipate.

- Hallucinations and inaccuracies. Generative models sometimes fabricate plausible yet false information. Always cross‑validate with raw data.

- Bias and overgeneralisation. AI may amplify existing biases in training data. For example, predictive models might under‑represent minority groups if not carefully balanced. Conduct bias audits and diversify datasets.

- Loss of nuance and empathy. AI struggles to interpret context, sarcasm or edge cases. Maintain human review to capture emotional nuances.

- Privacy and security. Storing recordings and behavioural logs can pose risks. Ensure encryption, minimisation of personal data and compliance with regulations.

- Tool lock‑in and black boxes. Proprietary models may limit transparency. Prefer tools that allow export of raw data and provide explainability.

- Dependency and skill erosion. Over‑reliance on AI might reduce researchers’ craft. Encourage manual analysis alongside automated methods.

- Cultural barriers. Teams may resist adopting AI due to fear of job replacement. Communicate clearly that AI augments rather than replaces roles.
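For the hallucination risk, one cheap cross‑check is to confirm that every direct quote in an AI‑generated summary actually appears in the source transcripts. A minimal sketch, assuming quotes are wrapped in straight double quotes and transcripts are plain text; it catches invented quotes, not paraphrased claims that distort meaning. The transcript lines are made up.

```python
import re


def normalise(text: str) -> str:
    """Lower-case, straighten curly apostrophes and collapse whitespace."""
    text = text.replace("\u2019", "'").lower()
    return re.sub(r"\s+", " ", text).strip()


def unverified_quotes(summary: str, transcripts: list[str]) -> list[str]:
    """Quoted passages in an AI summary that appear in none of the transcripts."""
    corpus = [normalise(t) for t in transcripts]
    quotes = re.findall(r'"([^"]+)"', summary)
    return [q for q in quotes if not any(normalise(q) in doc for doc in corpus)]


transcripts = [
    "P1: Honestly, the setup wizard lost me at the API key step.",
    "P2: I never found the export button.",
]
summary = ('Participants struggled with setup ("the setup wizard lost me") '
           'and exporting ("I gave up on exporting").')
print(unverified_quotes(summary, transcripts))  # ['I gave up on exporting']
```

Any quote this returns should be traced back to the recording or removed before the summary is shared.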

Use cases and examples

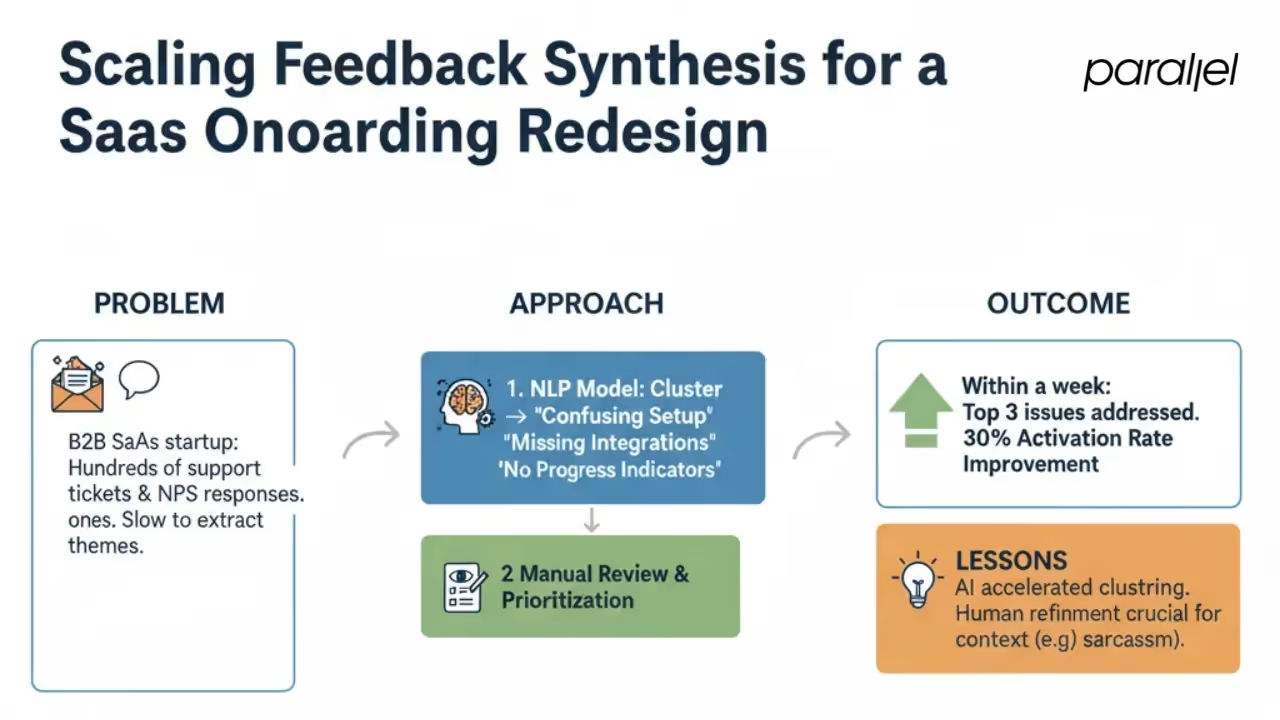

Scaling feedback synthesis for a SaaS onboarding redesign

- Problem: A B2B SaaS startup collected hundreds of support tickets and NPS responses about its onboarding but struggled to extract themes quickly.

- Approach: We used an NLP model to cluster comments into themes like “confusing setup instructions,” “missing integrations,” and “lack of progress indicators.” After manual review, we summarised each cluster and prioritised fixes.

- Outcome: Within a week, the team addressed the top three issues and saw a 30 % improvement in activation rate.

- Lessons: AI accelerated the initial clustering but needed human refinement to avoid misclassification of sarcastic remarks.

Detecting drop‑off paths with behavioural modelling

- Problem: An e‑commerce platform noticed high cart abandonment and wanted to identify hidden friction.

- Approach: We fed clickstream logs into a sequence‑mining algorithm. The model highlighted that users who added a coupon code were twice as likely to abandon when shipping costs appeared at checkout.

- Outcome: Updating the cart page to display estimated shipping earlier reduced abandonment by 18 %.

- Lessons: Predictive modelling revealed a non‑obvious bottleneck. However, we validated the hypothesis through follow‑up interviews to understand the underlying frustration.
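Sequence mining surfaces candidate patterns; checking one is simple arithmetic. The sketch below compares abandonment among sessions that reached the shipping display, with and without a coupon. The sessions and event names (`coupon_added`, `shipping_shown`, `purchase`) are hypothetical, and the result is an association only, which is why follow‑up interviews were still needed.

```python
def abandonment_by_flag(sessions: list[list[str]], flag: str,
                        stage: str = "shipping_shown",
                        outcome: str = "purchase") -> dict[str, float]:
    """Among sessions that reached `stage`, compare the share that never reached
    `outcome` for sessions with and without the `flag` event."""
    reached = [s for s in sessions if stage in s]
    groups = {
        "with": [s for s in reached if flag in s],
        "without": [s for s in reached if flag not in s],
    }
    return {
        label: sum(outcome not in s for s in group) / len(group) if group else float("nan")
        for label, group in groups.items()
    }


# Hypothetical sessions as ordered event lists
sessions = [
    ["cart", "coupon_added", "shipping_shown"],
    ["cart", "coupon_added", "shipping_shown", "purchase"],
    ["cart", "coupon_added", "shipping_shown"],
    ["cart", "coupon_added", "shipping_shown", "purchase"],
    ["cart", "shipping_shown", "purchase"],
    ["cart", "shipping_shown", "purchase"],
    ["cart", "shipping_shown"],
    ["cart", "shipping_shown", "purchase"],
]
rates = abandonment_by_flag(sessions, flag="coupon_added")
print(rates, rates["with"] / rates["without"])
```

On real traffic, check group sizes before reading much into a ratio; a handful of sessions can produce a dramatic but meaningless difference.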

Simulated user testing before going live

- Problem: A healthcare startup needed to validate a high‑risk dashboard but had limited time to recruit participants.

- Approach: We used an LLM‑driven agent to navigate the prototype and report on task completion. The agent flagged an inaccessible colour contrast on a critical graph. We fixed it before real users encountered it.

- Outcome: The early fix improved accessibility compliance and reduced rework.

- Lessons: Simulated agents are useful for catching low‑hanging issues, but they can’t assess trust or emotional reactions. We still ran moderated tests with clinicians before launch.
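Whatever flags it, a contrast issue like this comes down to the WCAG 2.x contrast‑ratio formula: compute relative luminance from linearised sRGB channels, then (L_lighter + 0.05) / (L_darker + 0.05). WCAG asks for at least 4.5:1 for normal text (SC 1.4.3) and 3:1 for graphical objects such as chart elements (SC 1.4.11). A minimal sketch; the colours in the usage line are illustrative.

```python
def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of an sRGB colour given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    channels = [int(h[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Linearise each gamma-encoded sRGB channel
    r, g, b = [c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4 for c in channels]
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(a: str, b: str) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05), ranging from 1 to 21."""
    lighter, darker = sorted((relative_luminance(a), relative_luminance(b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_non_text(a: str, b: str) -> bool:
    """WCAG 2.1 SC 1.4.11: graphical objects need at least 3:1 against adjacent colours."""
    return contrast_ratio(a, b) >= 3.0


# Illustrative colours: a light grey chart line on a white background
print(round(contrast_ratio("#AAAAAA", "#FFFFFF"), 2), passes_non_text("#AAAAAA", "#FFFFFF"))
```

A check like this is cheap enough to run on every design token, leaving the simulated agent and real users to find the problems a formula cannot.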

Future trends and research agenda

Looking ahead, we see several exciting directions:

- Multimodal models: Combining text, audio, video and biometric signals will enable richer context understanding. For instance, models that analyse voice tone and facial expressions concurrently could detect frustration mid‑session.

- Real‑time adaptive research: AI agents that adjust interview questions or tasks on the fly based on participant responses.

- Synthetic users and digital twins: More powerful simulated personas that mirror specific user segments for early prototype testing.

- Human‑AI co‑creation: Tools that allow researchers to collaborate with AI on study design, analysis and storytelling.

- Evaluation frameworks: Academic research will continue examining how AI affects research quality, ethics and researcher roles. We need methods to evaluate AI‑assisted workflows for rigor and fairness.

Implementation strategy for startups and teams

For founders and PMs eager to experiment, here’s a pragmatic roadmap:

- Identify a pain point. Pick one aspect of your research workflow that feels heavy—often transcription, coding or survey analysis.

- Pilot a tool. Run a small project using an AI tool in parallel with your existing process. Track time saved and insights generated.

- Validate outputs. Compare AI results with manual work. Adjust prompts and parameters until you trust the quality.

- Measure ROI. Calculate quantitative metrics (e.g., time saved, cost reduction) and qualitative ones (stakeholder satisfaction).

- Upskill the team. Provide training on AI literacy and prompt writing. Encourage cross‑functional collaboration.

- Scale gradually. Integrate AI into more stages only once you have built confidence. Document guidelines and maintain governance.

- Monitor ethics and compliance. Regularly audit data handling, consent processes and algorithmic fairness.

This measured approach helps build trust and prevents over‑reliance. Remember, the goal is to augment your team, not automate them away.
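For the "Measure ROI" step, the quantitative side can be as simple as comparing hours spent on the manual process with hours on the AI‑assisted one, including the time spent reviewing and correcting AI output. A sketch with hypothetical pilot figures and a hypothetical `pilot_roi` helper; the qualitative side (stakeholder satisfaction) still has to be gathered separately.

```python
def pilot_roi(hours_manual: float, hours_with_ai: float,
              hourly_cost: float, tool_cost: float) -> dict[str, float]:
    """Compare a manual baseline with an AI-assisted run of the same task.

    hours_with_ai should include time spent reviewing and correcting AI output.
    """
    if tool_cost <= 0:
        raise ValueError("tool_cost must be positive to compute ROI")
    hours_saved = hours_manual - hours_with_ai
    net_savings = hours_saved * hourly_cost - tool_cost
    return {
        "hours_saved": hours_saved,
        "net_savings": net_savings,
        "roi": net_savings / tool_cost,
    }


# Hypothetical pilot: synthesising one round of interviews
print(pilot_roi(hours_manual=20, hours_with_ai=8, hourly_cost=60, tool_cost=300))
# {'hours_saved': 12, 'net_savings': 420, 'roi': 1.4}
```

If review time eats most of the savings, that is a useful finding in itself: the tool may suit a different stage of the workflow.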

Wrapping up and next steps

AI’s role in UX research is unmistakably growing. It accelerates data processing, surfaces patterns and opens up new possibilities like synthetic users and adaptive tests. Yet, its value lies in augmentation, not replacement. Human intuition, empathy and methodological rigor remain irreplaceable. As you integrate AI for UX research into your practice, start small, validate thoroughly and preserve the human touch.

Starter checklist

- Define a research pain point where AI could help.

- Choose a tool aligned with your workflow and evaluate its transparency.

- Draft prompts that include context and constraints.

- Pilot the tool alongside existing methods and compare outcomes.

- Review results manually and iterate on the process.

- Document learnings and share them with your team.

- Repeat with another research stage once confident.

FAQ

1) How is AI used in UX research?

AI enhances planning, recruiting, data collection, analysis and reporting. For example, AI can generate additional research questions, supply first‑draft survey items and transcribe interviews. Predictive models analyse clickstream data to identify patterns, and NLP tools cluster qualitative feedback into themes.

2) Will AI take over UX research?

Full takeover is unlikely. AI can automate repetitive tasks and aid analysis, but it cannot fully interpret nuance, context or human emotions. As UXmatters notes, AI cannot replace the need for human intervention in the design process. The future is human + AI collaboration, with researchers guiding, critiquing and applying outputs responsibly.

3) Is there an AI for UX design?

Yes. Generative design tools create images, wireframes or even code snippets, and predictive models inform layout decisions. However, they remain unreliable for end‑to‑end design without human oversight. Use them for inspiration and rapid prototyping rather than finished solutions.

4) How do I use AI ethically in UX research?

Obtain informed consent, anonymise data, and be transparent about AI’s role. Audit models for bias and fairness and ensure data privacy. Keep humans in the loop to maintain empathy and context.

5) What’s a good starting point for integrating AI?

Begin with tasks that are time‑consuming but low‑risk, like transcribing interviews or clustering survey responses. Use AI suggestions as drafts and validate with human judgment. Over time, integrate predictive modelling and generative reports as your team becomes comfortable.
