Designing for AI Transparency and Trust: Guide (2026)

Explore design strategies that make AI transparent, so users can understand its decisions and trust them.

Trust in artificial intelligence feels fragile. People have seen what it can do, but they are unsure whether to rely on it. In early‑stage startups, this uncertainty is magnified because you lack the brand recognition of a large company. According to Zendesk, artificial intelligence transparency means understanding how systems make decisions, why they produce specific results and what data they use. This article looks at designing for AI transparency and trust from a product perspective: why it matters, the core principles behind it and the practical steps you can take in your startup to put these ideas into practice.

Why transparency and trust in AI are critical for startup products

Artificial intelligence usage is rising rapidly. The AI Index report notes that by 2024, 78% of organisations were using artificial intelligence. Yet only 46% of people globally say they trust artificial intelligence systems, and in high‑income countries the figure drops to 39%. This gap between adoption and trust is dangerous for new products. Many businesses worry that a lack of openness will drive customers away; a Zendesk survey found that three quarters of companies believe an absence of transparency could lead to churn. For startups, that kind of reputational damage may be existential. Regulatory pressure is also increasing. The EU AI Act, which entered into force in 2024, requires providers of high‑risk systems to document training data, model architecture and performance metrics. Governments worldwide are considering similar rules.

The goal is not just compliance; it is confidence. Without trust, users will not adopt your tool. McKinsey notes that over 40% of business leaders see lack of explainability as a key risk of artificial intelligence, yet only 17% are addressing it. Addressing this risk early can set your product apart. Founders and product managers must also consider fairness and ethical implications: bias in training data can lead to discrimination, and without processes to identify and mitigate it, you risk harming users and violating laws. Building transparent systems takes work, but it differentiates you from competitors who treat it as an afterthought.

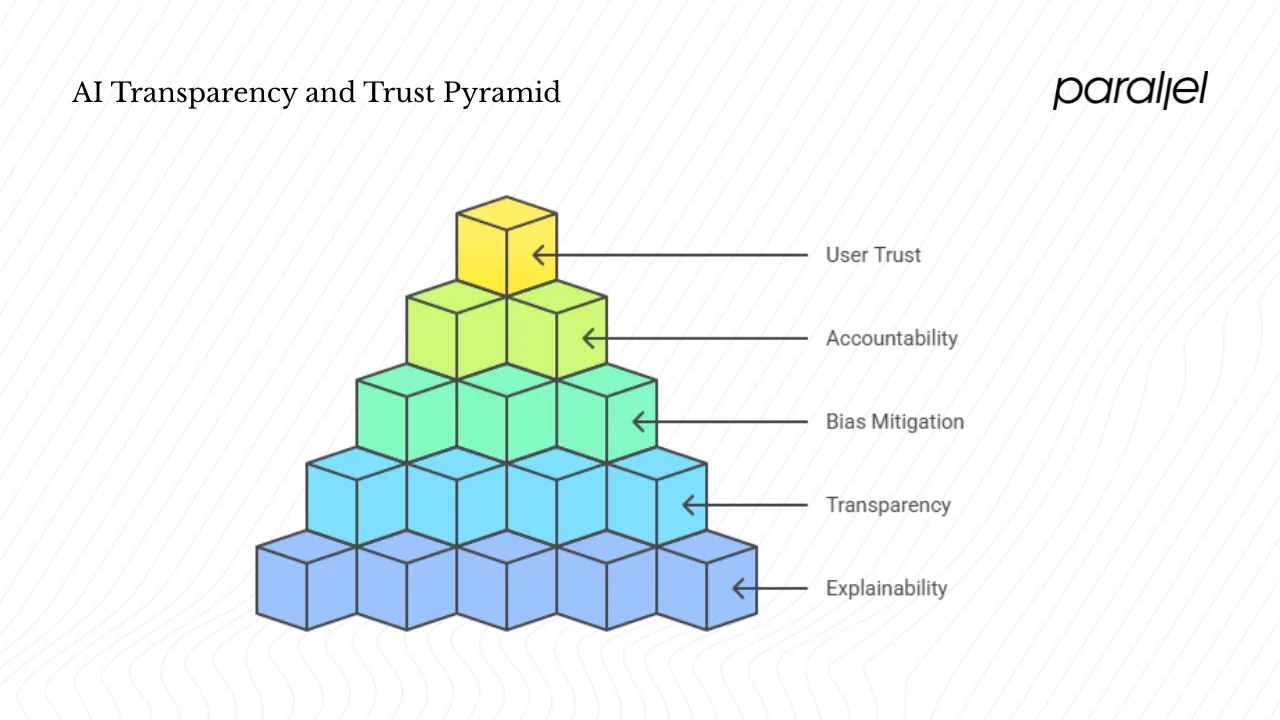

Core foundations of transparency and trust in AI

Strong design rests on clear principles. Five pillars can guide your efforts.

1) Explainability and model interpretability

Explainability means being able to describe why a model produced a particular outcome in a way that is understandable to a human. Interpretability involves understanding the mechanics of the model itself. TechTarget explains that artificial intelligence transparency encompasses explainability, governance and accountability. Users and regulators should have visibility into the machine learning model, the data it is trained on and its error rate. Differentiating between interpretability and explainability matters. Interpretability helps engineers debug models; explainability helps users trust the decisions. Ensure your product offers clear rationales without overwhelming people with technical details.
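
One lightweight way to give users a rationale without exposing model internals is to turn per‑feature contributions into plain‑language "reason codes". The sketch below assumes a simple linear scoring model whose weights are known; the feature names, weights and reason templates are hypothetical. For complex models you would swap in attributions from a method such as SHAP rather than raw weights.

```python
from dataclasses import dataclass

# Hypothetical linear model: score = BIAS + sum(weight * feature value).
WEIGHTS = {
    "on_time_payments": 0.8,
    "credit_utilisation": -1.2,
    "account_age_years": 0.3,
}
BIAS = -0.5

# Hypothetical plain-language phrases shown to users instead of feature names.
REASONS = {
    "on_time_payments": "your history of on-time payments",
    "credit_utilisation": "how much of your available credit you use",
    "account_age_years": "how long your account has been open",
}


@dataclass
class Explanation:
    score: float
    top_reasons: list[str]


def explain(features: dict[str, float], top_k: int = 2) -> Explanation:
    """Score one input and describe the features that moved the score most."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    # Rank by absolute contribution so strong negative drivers are shown too.
    ranked = sorted(contributions, key=lambda n: abs(contributions[n]), reverse=True)
    reasons = []
    for name in ranked[:top_k]:
        direction = "raised" if contributions[name] > 0 else "lowered"
        reasons.append(f"{REASONS[name]} {direction} this score")
    return Explanation(score=score, top_reasons=reasons)
```

The user only sees the top reasons; engineers can still inspect the full contribution table when debugging, which mirrors the split between explainability and interpretability.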

2) Algorithm transparency and decision auditability

Algorithm transparency involves revealing the inputs, logic and outputs of an algorithm. Decision auditability means you can trace the steps leading to a decision and challenge them if needed. A Frontiers article notes that explainable AI methods such as LIME and SHAP produce local explanations of individual predictions, but complex models remain hard to interpret as a whole. The EU AI Act requires providers of high‑risk systems to document training data, model architecture and performance metrics, and proposals such as the U.S. Algorithmic Accountability Act would require impact assessments of automated decision systems. For early‑stage companies, logging model decisions and versioning both models and documentation can build confidence internally and externally. When designing for AI transparency and trust, include audit trails from the start so you can trace how each output was created.

3) Bias mitigation and ethical AI

Bias undermines credibility. Models trained on imbalanced data may reproduce existing inequalities. Ethical artificial intelligence encompasses fairness, inclusivity and a broader understanding of potential harm. Zendesk warns that biases in artificial intelligence models can unintentionally discriminate. Mind the Product advises designers to clearly communicate how results are ranked and let users adjust inputs to reduce bias. Transparent systems support ethics by allowing inspection of data sources and decision logic. In your startup, commit to bias mitigation techniques like balanced datasets, adversarial de-biasing and fairness metrics. Share these efforts with your users; being open about your process builds credibility.
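
Fairness metrics turn a general commitment into something you can check on every release. The sketch below computes two common group metrics for binary decisions: the demographic parity difference (the gap in positive‑outcome rates between groups) and the disparate impact ratio (the lowest group rate divided by the highest). The sample data and the 0.8 threshold, a rule of thumb from U.S. employment‑selection guidance often called the four‑fifths rule, are illustrative assumptions; the right metric and threshold depend on your domain and legal context.

```python
from collections import defaultdict


def selection_rates(decisions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive decisions (1 = positive, 0 = negative) per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(decisions: list[int], groups: list[str], min_ratio: float = 0.8) -> dict:
    """Summarise group outcome rates and flag a low disparate impact ratio."""
    rates = selection_rates(decisions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "disparate_impact_ratio": ratio,
        "passes": ratio >= min_ratio,
    }
```

Running a report like this on every model version, and publishing a summary, is one concrete way to share your bias‑mitigation efforts with users.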

4) Accountability and regulatory compliance

Accountability means being clear about who is responsible for the outcomes of your artificial intelligence system. Data protection laws, such as the GDPR, contain provisions giving individuals the right to know how automated decisions are made. The EU AI Act categorises certain applications as high‑risk and demands transparency around training data and model architecture. Startups need to document their design decisions, data sources and model changes. Assign a person or team responsible for compliance. When designing for AI transparency and trust, build internal processes to respond to user queries and regulator requests. Keep a record of model updates and communication logs.
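
A lightweight model register can answer the questions users and regulators are most likely to ask: which version made this decision, what data it was trained on, what changed and who is accountable. The sketch below assumes a simple in‑memory register; the field names are hypothetical and loosely inspired by model cards, not a format required by any regulation.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class ModelRelease:
    version: str
    released: date
    owner: str                     # person or team accountable for this release
    data_sources: tuple[str, ...]  # where the training data came from
    change_summary: str            # plain-language note for users and auditors


@dataclass
class ModelRegister:
    releases: list[ModelRelease] = field(default_factory=list)

    def add(self, release: ModelRelease) -> None:
        if any(r.version == release.version for r in self.releases):
            raise ValueError(f"version {release.version} already registered")
        self.releases.append(release)

    def lookup(self, version: str) -> ModelRelease:
        """Answer 'which model made this decision, and who owns it?'"""
        for release in self.releases:
            if release.version == version:
                return release
        raise KeyError(version)

    def changelog(self) -> list[str]:
        """Newest-first lines suitable for a public change log."""
        ordered = sorted(self.releases, key=lambda r: r.released, reverse=True)
        return [f"{r.released.isoformat()} {r.version}: {r.change_summary}" for r in ordered]
```

Combined with decision logs that store the model version, this lets you trace any output back to a named owner and a documented set of data sources.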

5) User trust, privacy protection and feedback mechanisms

Trust is not only technical; it is relational. Zendesk’s report emphasises that transparency about data usage is a defining element in building and maintaining customer trust. Users must know what data you collect, why you collect it and how you secure it. Respecting privacy—collecting only what is necessary and using secure practices—is essential. Feedback mechanisms help users feel heard and can surface problems you might miss. Mind the Product encourages continuous feedback loops, such as version control for prompts, input management and correction workflows. When designing for AI transparency and trust, build channels for users to ask questions, flag problems and see how their feedback influences improvements.
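
Feedback loops build trust only when users can see what happened to their report. The sketch below models a minimal correction workflow: each report is tied to the model version that produced the flagged output and moves through explicit statuses, so you can show users when their feedback led to a fix. The statuses, transitions and names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    RECEIVED = "received"
    IN_REVIEW = "in review"
    FIXED = "fixed"
    DECLINED = "declined"


# Allowed transitions; anything else is rejected so the history stays meaningful.
TRANSITIONS = {
    Status.RECEIVED: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.FIXED, Status.DECLINED},
    Status.FIXED: set(),
    Status.DECLINED: set(),
}


@dataclass
class Feedback:
    user_id: str
    model_version: str  # which model produced the output being flagged
    comment: str
    status: Status = Status.RECEIVED
    notes: list[str] = field(default_factory=list)  # updates shown back to the user

    def move_to(self, new_status: Status, note: str) -> None:
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot go from {self.status.value} to {new_status.value}")
        self.status = new_status
        self.notes.append(note)


def user_view(items: list[Feedback], user_id: str) -> list[str]:
    """What one user sees: each of their reports, its status and the latest note."""
    lines = []
    for item in items:
        if item.user_id != user_id:
            continue
        latest = item.notes[-1] if item.notes else "awaiting review"
        lines.append(f"'{item.comment}': {item.status.value} ({latest})")
    return lines
```

Recording the model version on each report also tells you whether a complaint was already addressed by a later release.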

Practical design and product strategies for startups

Principles only matter if they translate into real practice. Below are strategies drawn from research and my experience working with early‑stage SaaS teams.

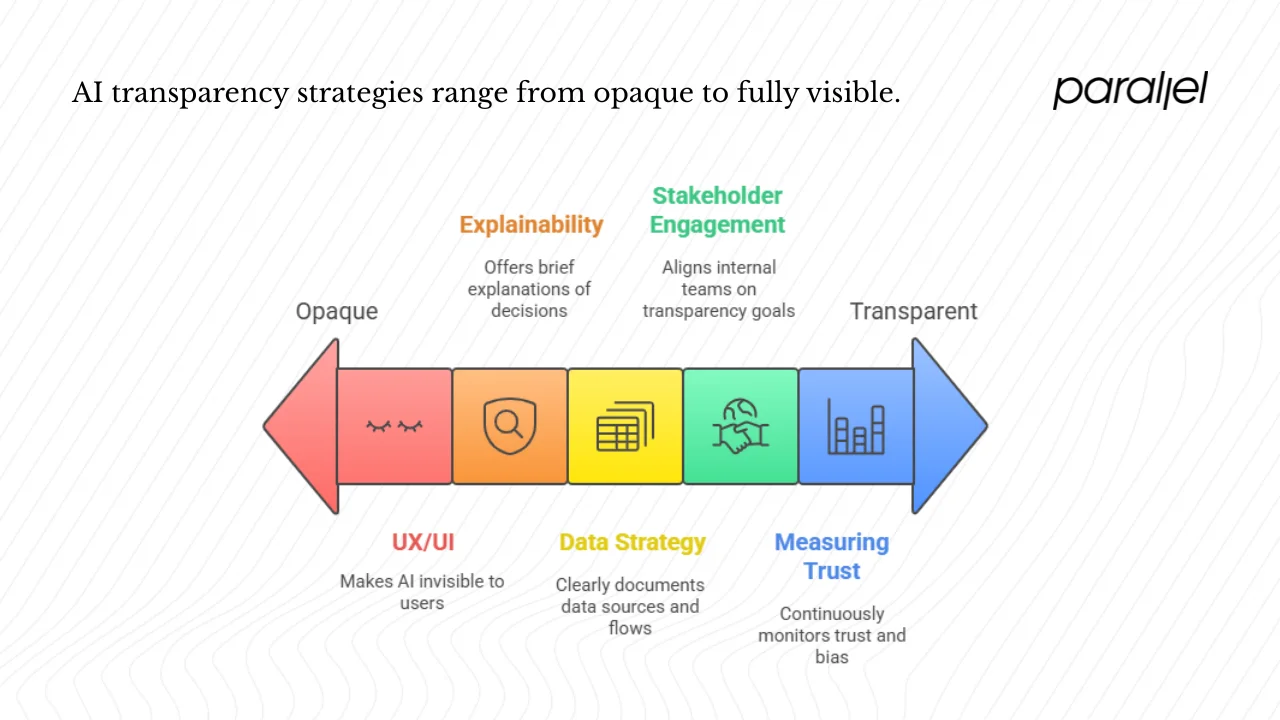

1) UX and UI considerations for transparent AI

Make artificial intelligence visible. An article on UXmatters notes that clear visibility helps users understand how artificial intelligence contributes to their experience and what results to expect. Show when a feature is driven by algorithms and offer concise explanations of its purpose. Use simple language. Provide “why this recommendation” links or tooltips summarising the reasons behind an output. Design interactive explanations that let curious users drill down for more detail. Avoid dark patterns, and let users undo or override automated actions when appropriate. Designed this way, your interface becomes a guide rather than a black box.
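
Two of these ideas, labelling AI‑driven output and letting users undo an automated action, depend on the interface having the right data. The sketch below assumes a hypothetical backend that records every automated edit with a one‑line reason for a tooltip and the previous value, kept on an undo stack. Class and field names are illustrative, and manual user edits are not modelled.

```python
from dataclasses import dataclass


@dataclass
class AutomatedChange:
    field_name: str
    old_value: str
    new_value: str
    reason: str  # one-line text for a "why this?" tooltip


class Document:
    """Hypothetical record that an AI feature may edit; manual edits are not modelled."""

    def __init__(self, **fields: str) -> None:
        self.fields = dict(fields)
        self.changes: list[AutomatedChange] = []  # undo stack of AI edits

    def apply_ai_change(self, field_name: str, new_value: str, reason: str) -> None:
        self.changes.append(
            AutomatedChange(field_name, self.fields[field_name], new_value, reason)
        )
        self.fields[field_name] = new_value

    def undo_last(self) -> None:
        """Let the user reverse the most recent automated change."""
        if self.changes:
            change = self.changes.pop()
            self.fields[change.field_name] = change.old_value

    def badge(self, field_name: str) -> str:
        """UI label shown while a field's current value still comes from the AI."""
        for change in reversed(self.changes):
            if change.field_name == field_name:
                return f"Suggested by AI: {change.reason}"
        return ""
```

Undoing in last‑in, first‑out order keeps the badge honest: a field is labelled as AI‑generated only while an AI edit is still in effect.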

2) Designing for explainability without overwhelming users

Too much information can confuse; too little can erode confidence. A layered approach works well: offer a brief summary of why a decision was made, with an option to see more context for those who want it. For example, a recommendation engine might say, “We recommended this because you previously chose similar items,” with a link to view specific factors. UXmatters warns that users do not need to see complex algorithms but should be able to understand the reasoning. Be upfront about limitations: artificial intelligence models can hallucinate or misinterpret data. Honesty about weaknesses builds credibility.
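
The layered approach can be expressed as a small payload: a one‑sentence summary that is always visible, details revealed on request and an explicit limitations note. The sketch below is one assumed way to structure it, not a standard format; the function, field names and the similarity‑based recommender it describes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LayeredExplanation:
    summary: str        # layer 1: always visible, one sentence
    details: list[str]  # layer 2: shown when the user asks "why?"
    limitations: str    # honest note about what the model can get wrong

    def render(self, expanded: bool = False) -> str:
        if not expanded:
            return f"{self.summary} (see why)"
        bullet_lines = "\n".join(f"- {d}" for d in self.details)
        return f"{self.summary}\n{bullet_lines}\nNote: {self.limitations}"


def explain_recommendation(
    item: str, similar_past_choices: list[str], max_details: int = 3
) -> LayeredExplanation:
    """Build a layered explanation, capping details so the second layer stays short."""
    shown = similar_past_choices[:max_details]
    return LayeredExplanation(
        summary=f"We recommended {item} because you previously chose similar items.",
        details=[f"You chose {c}" for c in shown],
        limitations=(
            "Recommendations are based only on your history in this app "
            "and can miss recent changes in your interests."
        ),
    )
```

Capping the number of details is a deliberate choice: the second layer should answer "why" without turning into a data dump.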

3) Product architecture and data strategy

Transparency starts with data. Make clear what data your model uses, what is collected and how it is stored. Mind the Product suggests citing sources to reassure users that outputs are grounded in reliable data. Use data lineage tools to track how information flows through your system. Enable logs and audit trails so decisions can be traced. Version your models; record changes and communicate them to stakeholders. Build infrastructure that supports auditability and clear documentation.
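
Data lineage tools differ, but the core idea is a graph recording which inputs each dataset or model was derived from. The sketch below is a minimal in‑memory version with hypothetical artefact names; it traces any artefact back to its raw sources, which is also what you would cite when users ask where an output came from.

```python
from collections import deque


class Lineage:
    """Minimal lineage graph: each artefact maps to the inputs it was built from."""

    def __init__(self) -> None:
        self.parents: dict[str, list[str]] = {}
        self.steps: dict[str, str] = {}

    def register(self, artefact: str, inputs: list[str], step: str) -> None:
        for name in inputs:
            if name not in self.parents:
                raise KeyError(f"unknown input {name!r}; register it first")
        self.parents[artefact] = list(inputs)
        self.steps[artefact] = step

    def upstream(self, artefact: str) -> list[str]:
        """Breadth-first list of the artefact and everything it was derived from."""
        order, queue, seen = [], deque([artefact]), {artefact}
        while queue:
            current = queue.popleft()
            order.append(current)
            for name in self.parents[current]:
                if name not in seen:
                    seen.add(name)
                    queue.append(name)
        return order

    def raw_sources(self, artefact: str) -> set[str]:
        """Upstream artefacts that have no inputs of their own."""
        return {a for a in self.upstream(artefact) if not self.parents[a]}

    def trace(self, artefact: str) -> list[str]:
        """Human-readable provenance: one line per derived artefact upstream."""
        return [
            f"{a} <- {', '.join(self.parents[a])} ({self.steps[a]})"
            for a in self.upstream(artefact)
            if self.parents[a]
        ]
```

Requiring inputs to be registered before anything derived from them keeps the graph complete: no artefact can appear without a documented origin.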

4) Stakeholder engagement and internal culture

Trust begins inside your company. Founders, product managers and designers must align on transparency goals, and cross‑functional collaboration among design, product, engineering, legal and ethics teams is essential. Educate internal teams about what data is used and why, so everyone tells the same story; a misaligned story about data use can erode trust.

5) Measuring and monitoring trust and transparency

You cannot improve what you do not measure. Track adoption, churn and override rates, and ask users if they understand decisions. Watch support tickets to spot trust issues. Continuously evaluate bias and drift, set thresholds for error and fairness, and adjust as needed.
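
Two of these signals are easy to compute from data you probably already log. The sketch below calculates an override rate (how often users reject or change an AI output) and the population stability index (PSI), one common way to flag drift between the score distribution at launch and today. The bin edges, sample data and alert levels (30% overrides, PSI above 0.2, a frequently quoted rule of thumb rather than a standard) are assumptions to tune for your product; samples are assumed non‑empty.

```python
import math
from bisect import bisect_right


def override_rate(events: list[str]) -> float:
    """Share of AI outputs users overrode; events are 'accepted' or 'overridden'."""
    if not events:
        return 0.0
    return events.count("overridden") / len(events)


def _bin_shares(values: list[float], edges: list[float]) -> list[float]:
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # A small floor avoids log(0) for empty bins.
    return [max(c / len(values), 1e-6) for c in counts]


def population_stability_index(
    baseline: list[float], current: list[float], edges: list[float]
) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = _bin_shares(baseline, edges)
    actual = _bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def trust_alerts(
    events: list[str],
    baseline: list[float],
    current: list[float],
    edges: list[float],
    max_override: float = 0.3,
    max_psi: float = 0.2,
) -> list[str]:
    alerts = []
    rate = override_rate(events)
    if rate > max_override:
        alerts.append(f"override rate {rate:.0%} above {max_override:.0%}")
    psi = population_stability_index(baseline, current, edges)
    if psi > max_psi:
        alerts.append(f"score drift PSI {psi:.2f} above {max_psi}")
    return alerts
```

A rising override rate often signals a trust problem before churn does, while drift alerts tell you when explanations written for the launch model may no longer hold.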

Scenario and use‑case considerations for early‑stage startups

Not all applications carry the same risk. A product that recommends movies differs from one that screens job candidates or approves loans. High‑stakes applications require stricter oversight and human review; low‑stakes ones can use simpler explanations and controls. Startups pivot quickly, so modular architecture helps you adapt while keeping your explanations intact. Balance performance, transparency and speed—sometimes a simpler, more explainable model is the better choice.
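
One way to encode stricter oversight for high‑stakes uses is a routing rule that decides, per output, whether it can be applied automatically or must go to a person. The sketch below assumes each use case is assigned a risk tier during scoping and that the model reports a confidence score; the tiers, thresholds and use‑case names are illustrative, not recommendations.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"    # e.g. movie recommendations
    HIGH = "high"  # e.g. screening job candidates or approving loans


# Hypothetical mapping from use case to risk tier, decided during scoping.
USE_CASE_RISK = {
    "movie_recommendation": Risk.LOW,
    "candidate_screening": Risk.HIGH,
    "loan_approval": Risk.HIGH,
}

LOW_RISK_CAVEAT_BELOW = 0.5  # illustrative: flag uncertain low-stakes outputs
HIGH_RISK_AUTO_MIN = 0.9     # illustrative: minimum confidence to skip review


def route(use_case: str, confidence: float, adverse: bool = False) -> str:
    """Return 'auto', 'auto_with_caveat' or 'human_review' for one model output."""
    risk = USE_CASE_RISK.get(use_case, Risk.HIGH)  # unknown use cases are treated as high risk
    if risk is Risk.LOW:
        # Low stakes: act automatically, but tell the user when the model is unsure.
        return "auto" if confidence >= LOW_RISK_CAVEAT_BELOW else "auto_with_caveat"
    # High stakes: adverse outcomes and uncertain outputs always go to a person.
    if adverse or confidence < HIGH_RISK_AUTO_MIN:
        return "human_review"
    return "auto"
```

Keeping the tier table separate from the model code is one way to stay modular: when the product pivots, you update the mapping rather than rewrite the oversight logic.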

Framework: a startup checklist for designing transparent and trustworthy AI

Based on our work with startup clients and research, here is a checklist you can use:

- Define the scope and risk. Identify the system’s purpose and potential impact on people.

- Map stakeholders and expectations. List everyone affected and gather their expectations for openness.

- Design transparency mechanisms. Plan how to show explanations and provide feedback loops and audit logs.

- Implement bias mitigation and ethics review. Check data for bias and review ethical implications.

- Design user flows with openness in mind. Weave transparency into onboarding, status indicators and “why this result” features.

- Monitor and iterate. Track trust, adoption and feedback, and update models and explanations.

- Communicate externally. Publish privacy policies and change logs to keep people informed.

Use this checklist to make sure you cover the essentials, and adapt it to your specific context.

Common pitfalls and how to avoid them

Teams often fall into traps that undermine openness:

- Over‑claiming transparency. Promising full explainability when you cannot deliver sets false expectations. Be honest about what you can show.

- Using jargon. Technical language can alienate users. Translate complex concepts into plain terms.

- Ignoring user control. If users cannot question or override an artificial intelligence output, trust erodes. Offer ways to seek clarification or opt for human review.

- Neglecting measurement. Without metrics, you cannot know if transparency efforts work. Monitor trust indicators and adjust.

- Treating transparency as a one‑off. Openness is an ongoing process. As models evolve, your explanations must evolve too.

- Forgetting compliance. Keep track of laws like the EU AI Act to avoid legal risks.

Avoid these pitfalls by building humility into your process.

Conclusion

Trust is earned, not given. As artificial intelligence becomes part of everyday products, people will choose tools that respect them. My experience with early‑stage teams shows that clarity about how models work and empathy for users must be built in from the start. Designing for AI transparency and trust is not optional; it is the foundation for adoption, engagement and ethical standing. Take the principles and checklist above and make them part of your design and product process. When you embed openness into your work, you help ensure that artificial intelligence serves people rather than surprises them.

FAQ

Q1. What are the five pillars of artificial intelligence?

A common framework includes Data (quality and governance), Algorithms (model design and correctness), Infrastructure (compute and operations), Trust/Transparency/Ethics (explainability, accountability and fairness) and Adoption/Usage (human involvement and interface). Each pillar should be considered in your product development.

Q2. How can we ensure transparency when using artificial intelligence?

Be clear about what your system does and does not do. Provide layered explanations for decisions. Offer users control and feedback mechanisms. Maintain audit logs and model traceability. Engage stakeholders early to align expectations.

Q3. What are the components of trust in artificial intelligence?

Research on trust in automated systems often describes three components: competence (the system works well), predictability or consistency (it behaves as expected) and integrity or ethics (it behaves fairly and responsibly).

Q4. How do we build trust in artificial intelligence?

Combine strong performance with clear, user‑friendly explanations. Allow users to query and adjust the system. Monitor for bias and drift. Communicate openly about data usage and model updates. Create feedback loops so users feel heard.
