Designing Interfaces for AI Products: Guide (2026)

Learn best practices for designing interfaces for AI products, focusing on usability, transparency, and human‑AI interaction.

Artificial intelligence has slipped quietly into our everyday tools. Large language models write emails, computer vision sorts photos and suggestion engines pick our next track.

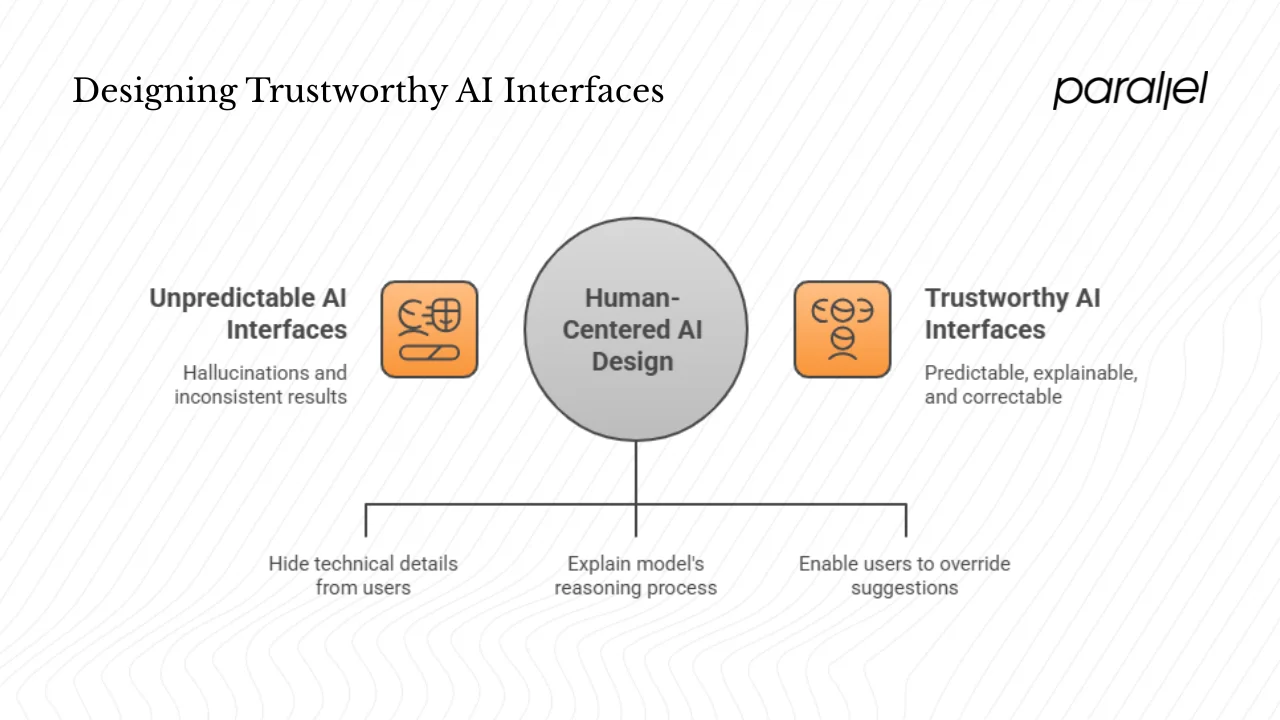

Yet when you begin designing interfaces for AI products you soon realise it’s nothing like wiring up deterministic flows—you are choreographing a conversation between messy human intent and a probabilistic model that might hallucinate.

In this article I’ll explain why this work is different, share principles from early‑stage projects and suggest patterns to help founders, product managers and design leads build trustworthy artificial‑intelligence products.

Why artificial‑intelligence interfaces feel different

1) Deterministic software meets probabilistic models

Traditional interfaces follow repeatable logic: click a button and a data‑entry page opens; submit the form and you see a result. Generative artificial intelligence breaks that predictability. Researchers at the Nielsen Norman Group note that these systems are known for producing “hallucinations — untruthful answers (or nonsense images)” (nngroup.com). A hallucination occurs when a model generates output that seems plausible but is incorrect or nonsensical (nngroup.com). Because the model’s output is a statistical guess rather than a hardcoded rule, the same prompt can yield wildly different results. In its April 2024 study of artificial‑intelligence design tools, Nielsen Norman Group found that a single ChatGPT prompt for a front‑end design produced three drastically different layouts. This inherent randomness means there are no “happy paths” in the conventional sense; designers must anticipate variance and design around it.
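
To make that variance concrete, here is a deliberately simplified Python sketch. It is not how any particular model works: real language models sample one token at a time, whereas this toy samples a whole "layout" from three hypothetical candidates with made-up scores (`LAYOUTS`, `SCORES` and `sample_layout` are illustrative names). The underlying idea is similar, though: a softmax turns scores into probabilities, a `temperature` parameter controls how spread out they are, and repeated draws for an identical input can differ.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_layout(options, logits, temperature, rng):
    """Pick one option at random, weighted by the softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(options, weights=probs, k=1)[0]


# Hypothetical candidate layouts and scores for one fixed prompt.
LAYOUTS = ["two-column grid", "single hero card", "sidebar + feed"]
SCORES = [2.0, 1.6, 1.4]

if __name__ == "__main__":
    rng = random.Random(7)
    # Same prompt, same scores: repeated calls can still disagree.
    print([sample_layout(LAYOUTS, SCORES, temperature=1.0, rng=rng) for _ in range(5)])
```

Lowering `temperature` concentrates probability on the top candidate and makes output more repeatable, but in this sketch it never quite eliminates variance, which is why the interface has to be ready to absorb it.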

2) Interfaces as the visible layer of complex systems

An artificial‑intelligence interface sits on top of a mountain of hidden complexity: data pipelines, model training, inference infrastructure, privacy safeguards and tuning logic. The person on the other side doesn’t care about any of this; they care about outcomes and feedback. Human‑centred artificial‑intelligence guidelines emphasise that such products must prioritise human needs, values and capabilities, and aim to augment human abilities rather than replace them. That means we must abstract away technical complexity and give users just enough of a mental model to predict what will happen next.
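
One way to apply this is to keep internal pipeline stages out of the UI and translate them into a few plain-language states. The sketch below assumes a hypothetical pipeline with the stage names shown; your real stages and wording will differ. Two internal stages deliberately share one message, since that distinction rarely helps the user predict what happens next.

```python
from enum import Enum


class PipelineStage(Enum):
    """Hypothetical internal stages of an AI request; users never see these names."""
    QUEUED = "queued"
    RETRIEVING_CONTEXT = "retrieving_context"
    RUNNING_INFERENCE = "running_inference"
    SAFETY_FILTER = "safety_filter"
    DONE = "done"
    FAILED = "failed"


# Plain-language messages: several internal stages collapse into one user-facing idea.
USER_MESSAGES = {
    PipelineStage.QUEUED: "Getting started...",
    PipelineStage.RETRIEVING_CONTEXT: "Reading your documents...",
    PipelineStage.RUNNING_INFERENCE: "Drafting a response...",
    PipelineStage.SAFETY_FILTER: "Drafting a response...",
    PipelineStage.DONE: "Done. Review the draft below.",
    PipelineStage.FAILED: "Something went wrong. You can retry or continue manually.",
}


def status_for(stage: PipelineStage) -> str:
    """Return the message the UI should show for an internal stage."""
    return USER_MESSAGES[stage]
```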

3) New risks: opacity, trust and failure modes

Because artificial‑intelligence systems can surprise us, their risk profile is very different from that of classic software. People rely on such systems in sensitive domains such as healthcare, finance and hiring; if the system produces a bad suggestion, the consequences can be serious. Users also struggle to spot machine mistakes because the output often looks confident (nngroup.com). When the system presents wrong information with the authority of a polished UI, users may assume it is correct. This creates two design challenges: first, how do we give users insight into why the model made a suggestion, and second, how do we let them correct or override it? The remainder of this article offers principles and patterns to address both.

Core principles for designing artificial‑intelligence interfaces

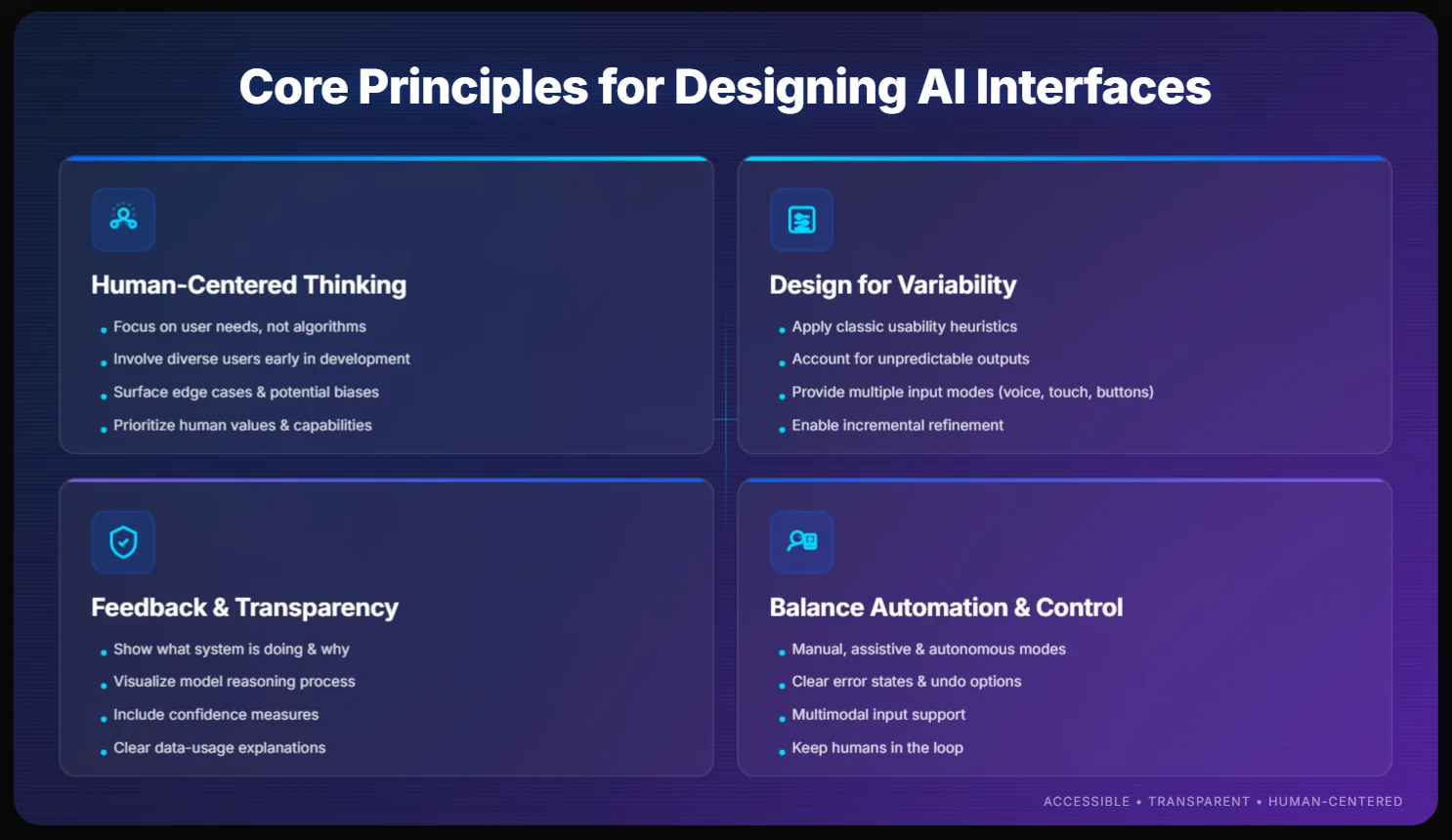

Designing interfaces for AI products begins with human‑centred thinking. Focus on the people who will use the system rather than the algorithm itself. Prioritise human needs, values and capabilities and involve users from varied backgrounds early to surface edge cases and biases.

Usability matters even in a world of unpredictable outputs. Classic heuristics like learnability and error prevention still apply, but your flows must account for variability. Don’t assume the model will always produce a neat answer; instead, design incremental responses that users can refine or reject. As the Nielsen Norman Group study mentioned earlier showed, today’s AI‑powered design tools deliver inconsistent results. Provide multiple input modes (buttons, sliders, voice) to capture intent, plus simple controls for tweaking parameters.
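
A minimal sketch of "incremental responses that users can refine or reject": keep every draft in a session so a rejection never loses work and each refinement builds on the latest draft. `RefinementSession` is a hypothetical name, and `generate` stands in for whatever model call you use; here it is any function that takes the previous text and the user's instruction.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Draft:
    text: str
    status: str = "pending"  # "pending", "accepted" or "rejected"


@dataclass
class RefinementSession:
    """Hypothetical session that keeps every draft so users can refine, reject or go back."""
    drafts: List[Draft] = field(default_factory=list)

    def propose(self, text: str) -> Draft:
        """Add a new draft that awaits the user's decision."""
        draft = Draft(text)
        self.drafts.append(draft)
        return draft

    def refine(self, instruction: str, generate: Callable[[str, str], str]) -> Draft:
        """Ask the stand-in model to revise the latest draft using the user's instruction."""
        return self.propose(generate(self.drafts[-1].text, instruction))

    def reject_latest(self) -> None:
        self.drafts[-1].status = "rejected"

    def accept_latest(self) -> Draft:
        self.drafts[-1].status = "accepted"
        return self.drafts[-1]

    def last_accepted(self) -> Optional[Draft]:
        """Most recent accepted draft, or None if the user has accepted nothing yet."""
        for draft in reversed(self.drafts):
            if draft.status == "accepted":
                return draft
        return None
```

Because rejected drafts stay in `drafts`, the UI can offer "go back to the previous version" without calling the model again.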

Feedback, transparency and accessibility are critical. A good interface continually tells users what the system is doing and why, and it listens when they correct it. Dashboards that visualise the model’s reasoning, thumbs‑up/down ratings and “Why this?” links support this two‑way conversation. Transparent designs that reveal both the “what” and the “why” build trust, as do clear confidence measures and data‑usage explanations; 79% of consumers worry about how brands handle their data. Accessibility isn’t optional: offer keyboard navigation, screen‑reader support and adjustable automation settings so personalisation doesn’t exclude anyone.
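
The two-way conversation described above needs a data shape behind it. Below is a minimal sketch, assuming each suggestion carries short plain-language reasons and a list of the user data that informed it, so a "Why this?" link and a data-usage note can be rendered from the same object, while thumbs-up/down feedback (with an optional correction) is captured as an event. All names are illustrative, not a specific library's API.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Suggestion:
    id: str
    text: str
    reasons: List[str]    # short, plain-language reasons for the "Why this?" panel
    data_used: List[str]  # which user data informed it, for data-usage transparency


@dataclass
class FeedbackEvent:
    suggestion_id: str
    rating: str                # "up" or "down"
    correction: Optional[str]  # the user's fixed version, if they supplied one
    at: datetime


def why_this(s: Suggestion) -> str:
    """Render the text shown behind a "Why this?" link."""
    reasons = "; ".join(s.reasons)
    data = ", ".join(s.data_used) if s.data_used else "no personal data"
    return f"Suggested because: {reasons}. Based on: {data}."


def record_feedback(s: Suggestion, rating: str, correction: Optional[str] = None) -> FeedbackEvent:
    """Capture a thumbs-up/down rating, optionally with the user's correction."""
    if rating not in ("up", "down"):
        raise ValueError("rating must be 'up' or 'down'")
    return FeedbackEvent(s.id, rating, correction, datetime.now(timezone.utc))
```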

Finally, balance automation with human oversight. Decide which tasks the system should perform and when the person stays in control. Offer manual, assistive and autonomous modes and let users switch between them as trust grows. Design clear error states and give people the ability to undo or override automated actions. Multimodal input (voice, touch, vision) and accessible defaults broaden who can use your product, but always provide an escape hatch when the model is uncertain. Keep the human in the loop and treat automation as a collaborator—not a replacement.
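
Here is one way to express those modes as a policy, sketched under assumptions: three hypothetical modes, a made-up 0.85 confidence threshold, and a rule that high-stakes or low-confidence outputs are always downgraded to a suggestion the person must confirm. That downgrade acts as the escape hatch: no mode setting lets the system act alone when it is unsure or the stakes are high.

```python
from enum import Enum


class Mode(Enum):
    MANUAL = "manual"          # model stays silent; the user does the task
    ASSISTIVE = "assistive"    # model offers a suggestion; the user must confirm
    AUTONOMOUS = "autonomous"  # model may act on its own when confident


def decide(mode: Mode, confidence: float, high_stakes: bool, threshold: float = 0.85) -> str:
    """Return "none", "suggest" or "auto_apply" for one model output.

    High-stakes actions and low-confidence outputs always fall back to a suggestion,
    so the person keeps control regardless of the chosen mode.
    """
    if mode is Mode.MANUAL:
        return "none"
    if mode is Mode.AUTONOMOUS and not high_stakes and confidence >= threshold:
        return "auto_apply"
    return "suggest"
```

Letting users pick the `Mode` while the product owns the `high_stakes` flag is one way to split the decision: comfort level is personal, but risk classification should not be.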

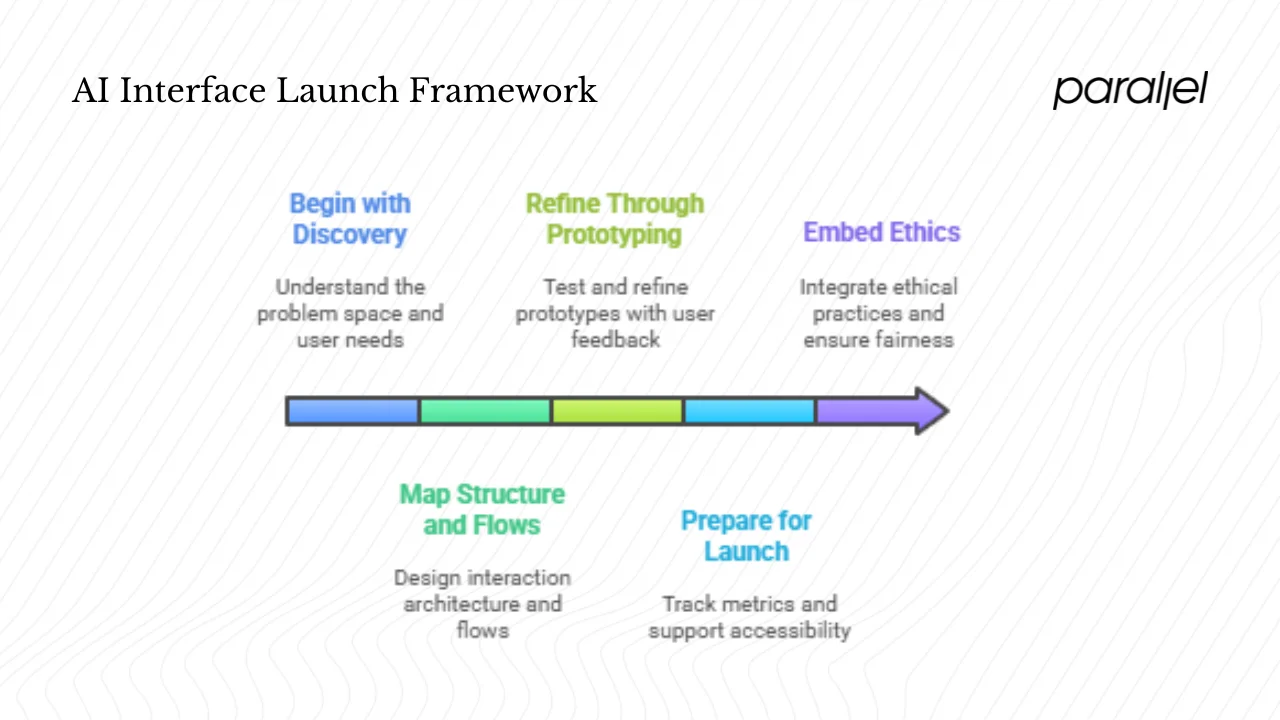

A step‑by‑step framework for launching an artificial‑intelligence interface

1. Begin with Discovery

Before jumping into design or prototyping, invest time in understanding the problem space.

- Talk to real users: Observe how they currently complete tasks and where their friction points lie.

- Identify true value: Look for areas where a model can meaningfully improve efficiency, accuracy, or satisfaction.

- Understand mental models: Learn how users think about automation, decision-making, and control.

- Define the use-case: Be precise about what the interface will do and what it should not do.

- Select the interaction style:

  - Assistive – the model helps users perform actions faster.

  - Suggestive – the model recommends options but leaves the final choice to the user.

  - Autonomous – the model takes action on its own in low-risk contexts.

  - Collaborative – the system and user share control in ongoing, adaptive ways.

- Clarify control boundaries: Document who—human or model—makes decisions at each step. Always ensure a human can intervene in high-stakes situations.
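
Control boundaries are easier to review when they are written down as data rather than prose. The sketch below records, for a hypothetical hiring-screen workflow, who decides each step and whether a human can intervene, then flags any high-stakes step that lacks an override. The workflow and field names are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Step:
    name: str
    decided_by: str           # "human", "model" or "shared"
    high_stakes: bool
    human_can_override: bool


def boundary_violations(steps: List[Step]) -> List[str]:
    """List high-stakes steps where no human can intervene."""
    return [s.name for s in steps if s.high_stakes and not s.human_can_override]


# Hypothetical hiring-screen workflow, written down during discovery.
WORKFLOW = [
    Step("parse resume", "model", high_stakes=False, human_can_override=True),
    Step("rank candidates", "shared", high_stakes=True, human_can_override=True),
    Step("send rejection email", "model", high_stakes=True, human_can_override=False),
]
```

Running `boundary_violations(WORKFLOW)` during design review surfaces "send rejection email" as a step that needs a human checkpoint before launch.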

2. Map the Structure and Flows

Once the use-case is defined, outline how people and the system will interact.

- Design the information architecture: Identify the main screens, inputs, and outputs.

- Plot interaction flows: Map triggers, responses, and feedback loops.

- Account for uncertainty: Create branching paths for when the system lacks confidence or the user rejects its output.

- Prototype with realism: Use data that reflects real-world noise and edge cases to reveal weak spots early.

- Surface usability issues: Watch for confusing prompts, unclear hand-offs, or unpredictable model behavior.
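
The branching paths for uncertainty can be made explicit in code, which also gives prototypes a single place to exercise noisy test data. A minimal sketch, assuming the model call returns a confidence score (or nothing on failure) and using a hypothetical 0.6 threshold and made-up screen names:

```python
from typing import Optional


def next_screen(confidence: Optional[float], user_rejected: bool, threshold: float = 0.6) -> str:
    """Pick which branch of the flow to show after a model call.

    confidence is None when the model call failed outright.
    """
    if confidence is None:
        return "error_with_manual_fallback"  # never leave the user at a dead end
    if user_rejected:
        return "refine_or_do_it_manually"
    if confidence < threshold:
        return "low_confidence_review"       # show the output, but ask for a check
    return "show_result"
```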

3. Refine Through Prototyping and Testing

Turn insights into working prototypes and iterate quickly.

- Test with actual users: Observe where they hesitate, misunderstand, or mistrust the system.

- Refine interaction details:

  - Add loading indicators to set expectations.

  - Use confidence badges or “Why this?” explanations to make reasoning transparent.

  - Offer simple, direct controls (accept, reject, edit) to keep users in charge.

- Adjust tone and feedback: Ensure the interface communicates clearly, even under uncertainty or failure.
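
A confidence badge is ultimately a mapping from a score to a label and a colour. This sketch assumes scores in [0, 1] and uses hypothetical cut-offs (0.85 and 0.6); raw model scores are often poorly calibrated, so in practice the cut-offs should be tuned against observed accuracy. It always returns a text label alongside the colour so the badge does not rely on colour alone.

```python
from typing import Tuple


def confidence_badge(score: float) -> Tuple[str, str]:
    """Map a model score in [0, 1] to a (label, colour) pair for a UI badge.

    The 0.85 and 0.6 cut-offs are hypothetical placeholders.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= 0.85:
        return ("High confidence", "green")
    if score >= 0.6:
        return ("Worth a check", "amber")
    return ("Low confidence: please review", "red")
```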

4. Prepare for Launch and Continuous Improvement

Launch isn’t the end—it’s the start of a feedback loop.

- Track experience metrics: Monitor completion rates, error frequency, user retention, and satisfaction.

- Track model metrics: Measure accuracy, confidence calibration, and user corrections.

- Close the loop: Feed validated user feedback into model retraining.

- Support accessibility: Provide adaptable defaults for automation level, font size, and contrast.
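
Two of the model metrics above can be computed directly from interaction logs. The sketch below assumes each shown output is logged as "accepted", "edited" or "rejected", and that for calibration you have (confidence, was_correct) pairs. Calibration is measured here with expected calibration error (ECE) over equal-width bins, one common choice among several: a well-calibrated model's 80%-confidence outputs are right about 80% of the time, giving an ECE near zero.

```python
from typing import List, Tuple


def correction_rate(outcomes: List[str]) -> float:
    """Share of shown outputs that users edited or rejected."""
    if not outcomes:
        return 0.0
    corrected = sum(1 for o in outcomes if o in ("edited", "rejected"))
    return corrected / len(outcomes)


def expected_calibration_error(preds: List[Tuple[float, bool]], bins: int = 5) -> float:
    """Weighted average gap between stated confidence and observed accuracy, per bin."""
    if not preds:
        return 0.0
    buckets: List[List[Tuple[float, bool]]] = [[] for _ in range(bins)]
    for conf, correct in preds:
        index = min(int(conf * bins), bins - 1)  # confidence 1.0 goes in the top bin
        buckets[index].append((conf, correct))
    ece = 0.0
    for bucket in buckets:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += len(bucket) / len(preds) * abs(avg_conf - accuracy)
    return ece
```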

5. Embed Ethics, Privacy, and Accountability

From the first design discussion, integrate responsible practices.

- Be transparent: Explain what data is collected, how it’s used, and who can access it.

- Offer user control: Include opt-outs or limited data-sharing modes.

- Ensure fairness: Regularly audit outcomes for bias or disproportionate errors.

- Escalate when needed: Always allow users to reach a human reviewer in sensitive or high-impact cases.

- Keep records: Log important interactions and decisions for traceability and accountability.
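
A bias audit can start as simply as comparing error rates across user groups in your decision logs. The sketch assumes logs reduced to (group, was_error) pairs and flags any group whose error rate exceeds the best-performing group's by more than a hypothetical ratio of 1.25; that threshold is a placeholder, not a legal or regulatory standard, and deciding which groups to compare is itself an ethics and privacy question.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def error_rates_by_group(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the error rate per group from (group, was_error) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for group, was_error in records:
        totals[group] += 1
        if was_error:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


def flag_disparities(rates: Dict[str, float], max_ratio: float = 1.25) -> List[str]:
    """Flag groups whose error rate exceeds the lowest group's rate by more than max_ratio."""
    if not rates:
        return []
    best = min(rates.values())
    if best == 0:
        # Any errors at all are a disparity when another group has none.
        return sorted(g for g, r in rates.items() if r > 0)
    return sorted(g for g, r in rates.items() if r / best > max_ratio)
```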

Patterns and a design‑pattern library for artificial‑intelligence interfaces

Designers have catalogued patterns specific to designing interfaces for AI products. Vitaly Friedman’s research shows that chat‑based interfaces are giving way to more structured controls like prompts, sliders and templates. Here are five patterns we use frequently:

- Expressing intent and presenting output. Help users articulate what they want with pre‑prompts, query builders and voice input. When showing results, avoid raw text—use tables, cards or dashboards to make differences clear.

- Refinement and control. Few people accept the first result. Provide sliders, presets and editable text so they can tweak outputs. Always include undo and “Why this?” links to maintain transparency.

- Machine actions and context. Let the system complete tasks such as scheduling or summarising, but embed these actions where users already work—inside dashboards or editors—rather than in a separate chat window. Offer logs and review options to maintain trust.

- Confidence and human override. Show confidence measures with colour or labels and allow users to set how aggressive automation should be, from manual to autonomous. This acknowledges varying comfort zones and builds trust gradually.

- Multimodal and accessible inputs. Combine text, voice and file uploads in one panel so that people can communicate in the way that’s easiest for them.
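
For the machine-actions pattern, "logs and review options" can be as simple as recording each action together with a way to reverse it. A minimal sketch with hypothetical names; the `undo` callables stand in for whatever reversal your product actually supports, such as cancelling an invite or restoring a document version.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LoggedAction:
    description: str          # shown to the user in the activity log
    undo: Callable[[], None]  # how to reverse the action
    undone: bool = False


class ActionLog:
    """Hypothetical log of actions the system took, so users can review and reverse them."""

    def __init__(self) -> None:
        self.entries: List[LoggedAction] = []

    def record(self, description: str, undo: Callable[[], None]) -> None:
        self.entries.append(LoggedAction(description, undo))

    def undo_last(self) -> str:
        """Reverse the most recent action that has not been undone yet."""
        for entry in reversed(self.entries):
            if not entry.undone:
                entry.undo()
                entry.undone = True
                return entry.description
        raise LookupError("nothing to undo")

    def review(self) -> List[str]:
        """Lines for an activity panel, marking reversed actions."""
        return [("[undone] " if e.undone else "") + e.description for e in self.entries]
```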

Challenges and pitfalls

When designing interfaces for AI products, remember that no interface is foolproof. Common pitfalls include over‑automation (users feel powerless), cognitive overload (too many options crowd the screen), opacity (black‑box behaviour erodes trust), and the inevitable hallucinations or biases that come from imperfect models (nngroup.com). Personalisation can inadvertently exclude users with disabilities, and constant data collection can wear people out. Design adaptable flows, provide clear escape hatches and transparency, and test with varied users to surface these issues early.

Future trends and how to prepare

Looking ahead, multimodal inputs (text, images and audio) will become standard, and systems will increasingly anticipate intent rather than wait for explicit commands. Human–machine collaboration will deepen as tools enable real‑time co‑creation instead of one‑way automation. Transparency will differentiate products—Lumenalta notes that clients and stakeholders demand visibility into the artificial‑intelligence‑driven design process. Finally, agents will live across devices and contexts, so design with portability and shared state in mind.

Checklist for founders, PMs and design leads

To ground these ideas, here’s a checklist you can use when designing interfaces for AI products:

- Define the user benefit. Are you solving a real problem with artificial intelligence, or adding it because it’s trendy?

- Map user flows and model touchpoints. Identify where the system intervenes and how users transition between model‑driven and manual steps.

- Clarify control. Explicitly state what the user controls versus what the model controls. Offer override options.

- Show system state and confidence. Use visual cues to indicate when the model is working, its confidence measure and any errors.

- Embed accessibility and personalisation early. Include settings for degrees of automation, preferences and accessibility modes from day 1.

- Measure both UX and model metrics. Track task success, satisfaction and retention alongside model accuracy and correction rates.

- Design for transparency and trust. Provide explanations, show data usage and let users opt out of personalisation.

- Plan to iterate. Collect feedback and refine both the model and the interface continuously after launch.

- Consider ethics and privacy. Evaluate bias, fairness and data usage. Ensure you have consent flows and fallback procedures for failures.

Closing thoughts

Designing interfaces for AI products is as much about people as it is about algorithms. The probabilistic nature of models introduces unpredictability, and hallucinations are a real issue (nngroup.com). With thoughtful design, clear communication and human‑centred principles, we can turn that complexity into usable value. These systems should augment human capabilities, not eclipse them. Use the patterns and principles shared here as a starting point, test them with real users and adapt them to your context. Teams that invest in empathy, explainability and collaboration will build the most enduring products. Stay curious, and keep iterating as the technology matures.

FAQ

1) What does “designing interfaces for AI products” actually mean?

It refers to designing the user interface and interaction flow for software whose core functionality is powered by artificial intelligence. The work spans visual design, interaction design and ethics: how the model behaves, how users interact with it, how feedback is collected, how errors are handled, and how the product builds trust, personalises experiences and stays accessible.

2) How is artificial‑intelligence interface design different from regular UI/UX design?

Artificial intelligence introduces unpredictability and opacity. Outputs are probabilistic, so flows must accommodate varied responses. Designers must build transparency and trust mechanisms, allow overrides and handle complex failure modes. Traditional UI/UX often assumes deterministic flows with clear success and error states.

3) How much should users control versus how much should the system automate?

It depends on the context. Give users meaningful control and the ability to override. Offer modes ranging from manual to autonomous and let people choose their comfort zone. In high‑stakes domains, lean towards human oversight; for low‑risk tasks, automation can be more aggressive.

4) What are best practices for handling errors from artificial‑intelligence systems in the interface?

Expect errors and design for them. Surface low‑confidence states, provide clear error messages and offer fallback options. Allow users to correct outputs and feed those corrections back to the model. In critical scenarios, require human review before acting.

5) How can we build trust in artificial‑intelligence interfaces?

Be transparent about how the system works and what data it uses. Provide plain‑language explanations for suggestions. Show confidence measures and limitations. Offer user controls for personalisation and privacy. Handle failures gracefully and involve humans in the loop.

6) What role does personalisation play in artificial‑intelligence interface design?

Personalisation tailors experiences to individual users, increasing relevance and engagement. However, it must not diminish user agency or exclude people with different abilities. Allow users to adjust personalisation settings, explain how data is used and respect privacy.
