Ethical Considerations in AI Design: Guide (2026)

Understand ethical considerations in AI design, such as fairness, transparency, bias mitigation, and user privacy.

As a designer and founder working with AI, I constantly see the tension between pushing a product forward and ensuring it behaves responsibly. Modern AI systems can amplify our work at an incredible scale, but they also have the power to harm if we neglect the human impacts.

This guide is for early‑stage founders, product managers and design leaders who want to build ethical considerations into their AI products from day one. Getting it wrong is costly: public confidence in AI companies declined in 2024, with global confidence that AI companies protect personal data dropping from 50% in 2023 to 47% in 2024. Building responsibly is not just about compliance; it is a chance to earn trust, stand out in a crowded market and build resilience for the long haul.

TL;DR

Ethical AI design isn’t optional—it’s a must for building user trust, avoiding harm, and meeting emerging regulations. This guide explains why ethics should be built into AI products from day one and outlines clear steps for early‑stage founders, designers, and PMs to prevent bias, protect privacy, ensure transparency, and build accountability. Backed by research and real‑world practices, it offers a practical framework for integrating responsible AI principles into everyday development.

Why should ethics be built into AI design from day one?

When AI products are rushed to market without ethical guardrails, the consequences land on users and the organisation. Biased training data, incomplete models and poor oversight create systems that reinforce discrimination. A review of healthcare AI found that machine‑learning models can base predictions on non‑causal factors like gender or ethnicity, perpetuating prejudice and inequality. The same review highlighted privacy breaches where patient data was shared without consent and emphasised that AI systems must be protected from breaches to avoid psychological and reputational harm.

Beyond harming users, ethical failures erode trust and attract regulatory scrutiny. Stanford’s 2025 AI Index shows that optimism about AI’s benefits increased, but confidence that AI systems are unbiased declined and fewer people trust AI companies to protect their data. Policymakers have responded: the UNESCO Recommendation on the Ethics of Artificial Intelligence, adopted by 193 Member States, places human rights and oversight at the centre of AI governance and calls for mechanisms that ensure auditability and traceability throughout an AI system’s life cycle.

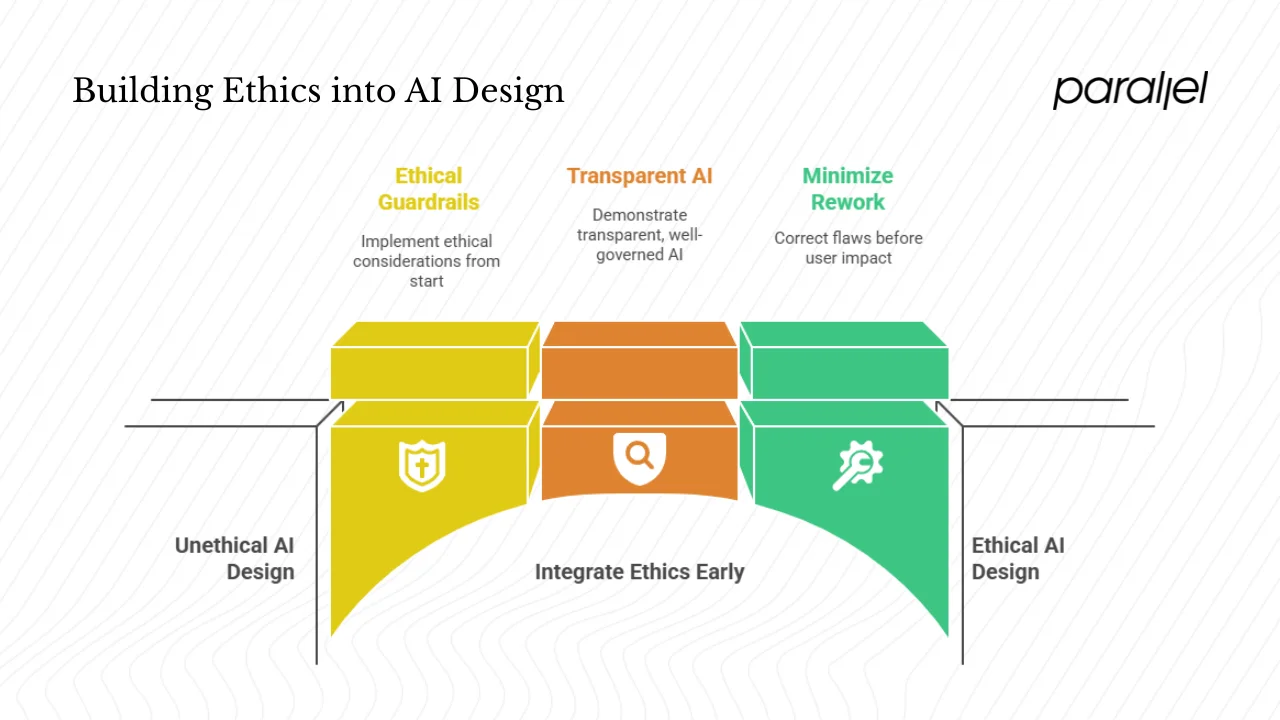

Ethics as a competitive advantage for startups

Startups often view ethics as a later problem, but my experience shows that integrating ethics early can differentiate a young company. Responsible AI practices build user trust and reduce the risk of public backlash. According to McKinsey’s 2024 research, 91% of executives doubt their organisations are “very prepared” to implement and scale generative AI safely, yet only 17% are actively working on explainability. This gap represents an opportunity for startups: if you can demonstrate transparent, well‑governed AI, you will stand out. Ethical design also minimises rework later; it’s much cheaper to correct a bias or privacy flaw before your product touches thousands of users.

Defining your ethical baseline

An ethical baseline clarifies what your team stands for and how you expect your product to behave. Three concepts anchor this baseline:

- Moral responsibility: Acknowledging that decisions made by AI systems reflect human choices and values. The UNESCO recommendation states that responsibility and liability for AI decisions must always be attributable to natural or legal persons. Startups should assign clear accountability for any harm caused by their models.

- User consent: People must know when AI is involved and must have control over their data. The UNESCO guidance calls for data governance that keeps control in users’ hands, allowing them to access and delete information.

- Autonomy: AI should augment rather than replace human agency. Your product should preserve the ability for users or stakeholders to override recommendations or opt out of automated decisions.

These principles align with broader frameworks, including UNESCO’s emphasis on human rights, transparency and fairness. They set the stage for the more specific considerations below.

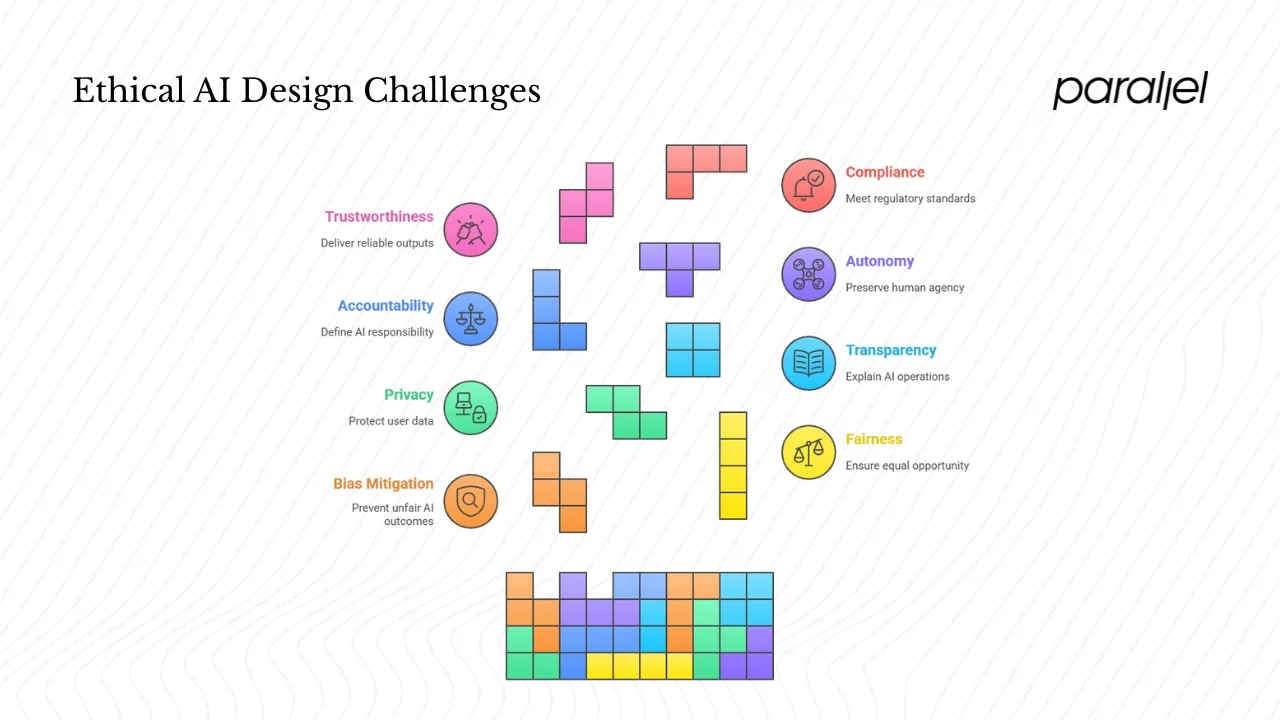

Key ethical considerations in AI design

Each of the themes below is a lens through which you can assess your AI product. I’ll describe the problem, share practical guidance and relate it to early‑stage startup realities.

1) Bias mitigation and discrimination prevention

Bias occurs when training data, model design or proxy variables lead to systematic unfairness. Healthcare studies show that models trained on unrepresentative data can under- or over‑estimate risks in specific populations. Preventing discrimination means ensuring that your AI doesn’t disadvantage or exclude groups based on race, gender, age or other attributes.

Practical steps include:

- Collect diverse data: Ensure your training data represents the full spectrum of your user base. This may mean deliberately sourcing data from under‑represented communities.

- Audit models regularly: Use fairness metrics (e.g., equal error rates across groups) to evaluate performance. In our client work at Parallel, we’ve found that simple stratified testing surfaces issues early.

- Test edge cases: Run scenarios that stress the model beyond typical inputs. Include adversarial testing to uncover hidden bias.

- Invite diverse voices: Include people from different backgrounds in user research and model reviews. Even small teams can bring in advisors or testers from outside the core demographic.
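A stratified audit of the kind described above can start very small. The sketch below assumes binary labels and a single group attribute per record; the toy data and the `TOLERANCE` value are hypothetical placeholders, not recommended settings. It computes the misclassification rate per group and the largest gap between groups, which is one way to check "equal error rates across groups".

```python
from collections import defaultdict

# Hypothetical tolerance: the largest error-rate gap your team will accept.
TOLERANCE = 0.05

def error_rates_by_group(y_true, y_pred, groups):
    """Return the misclassification rate for each group label."""
    errors = defaultdict(int)
    counts = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        counts[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / counts[g] for g in counts}

def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())

# Toy labels: 1 = approved, 0 = declined (hypothetical data)
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)                 # {'A': 0.25, 'B': 0.5}
print(max_error_gap(rates))  # 0.25
if max_error_gap(rates) > TOLERANCE:
    print("Error rates diverge across groups: investigate before release")
```

With real data you would also look at false-positive and false-negative rates separately, since a model can have equal overall error rates while making different kinds of mistakes for different groups.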

2) Fairness and inclusive design

Fairness in AI goes beyond mathematical parity. It’s about giving equal opportunity and correcting historical disadvantages. PMI’s ethics guide urges designers to scrutinise training data and refine models to prevent discrimination based on race, gender or socioeconomic status. Inclusive design ensures that under‑represented user groups are considered in every step, from research to consent flows.

To practice fairness and inclusion:

- Conduct inclusive user research: Recruit participants across age, ability, gender and socio‑economic backgrounds. Use inclusive methods such as remote interviews and accessible prototypes.

- Design for accessibility: Follow Web Content Accessibility Guidelines (WCAG) and design for assistive technologies. Inclusive design also covers cognitive load—ensuring instructions are clear and jargon‑free.

- Monitor outcomes: Track how different groups use and experience your AI. If error rates or satisfaction levels diverge, investigate why.
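Outcome monitoring can begin as a simple comparison of each group against the overall average. This is a minimal sketch, assuming you collect 1 to 5 satisfaction ratings tagged with a user segment; the segment names, ratings and the 0.5-point tolerance are all hypothetical.

```python
from statistics import mean

def flag_diverging_groups(scores_by_group, tolerance=0.5):
    """Return groups whose mean score differs from the overall mean by more than `tolerance`."""
    all_scores = [s for scores in scores_by_group.values() for s in scores]
    overall = mean(all_scores)
    flagged = {}
    for group, scores in scores_by_group.items():
        gap = mean(scores) - overall
        if abs(gap) > tolerance:
            flagged[group] = round(gap, 2)  # negative = less satisfied than average
    return flagged

# Hypothetical 1-5 satisfaction ratings collected per user segment
ratings = {
    "screen_reader_users": [2, 3, 2, 3],
    "age_18_34": [4, 5, 4, 4],
    "age_65_plus": [4, 4, 3, 4],
}
print(flag_diverging_groups(ratings))
# {'screen_reader_users': -1.0, 'age_18_34': 0.75}
```

A flag is a prompt to investigate, not a verdict: with small samples like these, differences can be noise, so in practice you would wait for enough responses per group or add a significance test.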

3) Privacy protection and data security

Privacy is fundamental. The healthcare review emphasises that respecting patient confidentiality and acquiring informed consent for data use are ethically required. It warns that misuse of personal data, like the Cambridge Analytica scandal or the sharing of patient data without consent, undermines trust.

For startups, this means:

- Privacy by design: Build privacy into the architecture. Use anonymisation and pseudonymisation where possible. Avoid storing sensitive data when you don’t need it.

- Restrict access: Limit who can view training data and model outputs. Implement role‑based access controls and encryption at rest and in transit.

- Clear consent flows: Communicate what data you collect, why and how long you keep it. Provide opt‑in and opt‑out options.
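Pseudonymisation and data minimisation can be applied at the point where records enter your training pipeline. The sketch below is one possible approach, not a complete privacy programme: it assumes a secret key held outside the data store, and the field names and allow-list are hypothetical. It uses a keyed hash (HMAC-SHA256 from Python's standard library) so the same person maps to the same token without the raw email being kept.

```python
import hashlib
import hmac

# Assumption: in production this key comes from a secrets manager, never from code.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-vault"

# Hypothetical allow-list: the only fields the model actually needs.
ALLOWED_FIELDS = {"age_band", "region", "plan_type"}

def pseudonymise(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same input always yields the same token, so records can still be joined,
    but the token cannot be recomputed or linked back without the key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def minimise(record: dict) -> dict:
    """Keep only allow-listed fields and swap the email for a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    cleaned["user_token"] = pseudonymise(record["email"])
    return cleaned

raw = {"email": "ada@example.com", "full_name": "Ada L.", "age_band": "35-44",
       "region": "EU", "plan_type": "pro", "phone": "+44 20 0000 0000"}
print(minimise(raw))  # name, phone and raw email are dropped
```

Note that under the GDPR pseudonymised data is still personal data, so access controls and retention limits continue to apply to the output.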

4) Transparency and explainability

Transparency means telling users and stakeholders how your AI operates. Explainability goes further: providing understandable reasons for specific outputs. The PMI blog notes that transparency builds trust and urges teams to be upfront about how AI systems work and how user data is used. McKinsey’s research underscores the importance of explainability, pointing out that 40% of organisations in 2024 identified explainability as a key risk but only 17% were working to address it.

In practice:

- Expose high‑level logic: Share simplified descriptions of how your model arrives at recommendations. Use analogies and visualisations rather than technical jargon.

- Use explainable models when possible: Favour interpretable techniques (decision trees, linear models) in high‑impact contexts. When using complex models, provide feature importance scores or example‑based explanations.

- Create model cards: Document your models’ purpose, training data, performance metrics and limitations. This is part of internal governance and external transparency.

- Log and monitor decisions: Maintain audit trails that capture inputs, outputs and key steps in the reasoning process. This aids debugging and accountability.
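Linear models are called interpretable because the score is just a bias plus a sum of weight × value terms, so each term is an exact contribution you can show to a user. The weights and features below are hypothetical and purely illustrative; for complex models, post-hoc methods such as SHAP aim to produce similar per-feature attributions.

```python
# Hypothetical weights for a linear credit-limit score (illustrative only).
WEIGHTS = {"income_k": 0.04, "years_customer": 0.3, "missed_payments": -1.2}
BIAS = 0.5

def explain(features: dict) -> tuple[float, list[tuple[str, float]]]:
    """Return the score and each feature's additive contribution, largest first."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

score, reasons = explain({"income_k": 50, "years_customer": 2, "missed_payments": 1})
print(f"score: {score:.2f}")
for name, value in reasons:
    print(f"{name}: {value:+.2f}")
```

The ranked list maps directly onto a plain-language explanation ("your income raised the score most; one missed payment lowered it"), which is the kind of high-level logic the first bullet suggests exposing.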

5) Accountability and human oversight

Accountability refers to who is responsible when an AI system errs. The healthcare review notes that holding AI systems accountable is challenging because liability may be unclear. The UNESCO recommendation insists that ultimate responsibility for AI decisions must reside with people.

To ensure accountability:

- Define roles: Assign an ethics champion or product owner responsible for the model’s impact. Establish escalation paths for issues.

- Keep humans in the loop: PMI emphasises that there is no “set it and forget it” with AI; human oversight is essential. For high‑stakes decisions (hiring, lending, healthcare), ensure that a human reviews the AI’s output or can override it.

- Document decisions: Capture reasoning, assumptions and data sources so that issues can be traced. This helps respond to user complaints and regulatory inquiries.
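Keeping humans in the loop often comes down to a routing rule in front of the model. This is a minimal sketch under stated assumptions: the set of high-stakes domains and the 0.85 confidence threshold are hypothetical placeholders you would tune for your own product.

```python
from dataclasses import dataclass

# Assumptions: these domains and the 0.85 threshold are placeholders to tune per product.
HIGH_STAKES = {"hiring", "lending", "healthcare"}
CONFIDENCE_THRESHOLD = 0.85

@dataclass
class Decision:
    domain: str
    prediction: str
    confidence: float

def route(decision: Decision) -> str:
    """Decide whether a model output can be applied or needs a human reviewer."""
    if decision.domain in HIGH_STAKES:
        return "human_review"          # a person always signs off
    if decision.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"          # the model is unsure
    return "auto_apply_with_override"  # applied, but the user can still appeal

print(route(Decision("lending", "approve", 0.97)))       # human_review
print(route(Decision("content_tagging", "spam", 0.60)))  # human_review
print(route(Decision("content_tagging", "spam", 0.93)))  # auto_apply_with_override
```

Keeping the rule explicit in code also documents the decision: anyone auditing the system can see exactly when a person is involved.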

6) Autonomy and user consent

Autonomy means preserving human agency when AI makes recommendations. Users should know when they are interacting with AI and should be able to opt out. The UNESCO recommendation calls for data governance that keeps control in users’ hands and emphasises stronger consent rules. PMI’s guidelines add that transparency is what makes informed consent possible.

Practical steps include:

- Clear disclosures: Indicate when an AI is generating content or making decisions. Use plain language labels such as “AI‑generated suggestion”.

- Opt‑out mechanisms: Allow users to revert to human assistance or manual processes. In our work, we’ve found that offering an override builds trust.

- Granular consent: Let users choose which types of data they share and what they receive in return. For instance, allow them to provide anonymised data for model improvement while withholding personal identifiers.
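Granular consent works best when it is enforced in the data pipeline, not only in the interface. The sketch below assumes a hypothetical per-purpose consent record and a default of "no consent" for any purpose a user has not opted into; the purpose names and identifier fields are placeholders.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRecord:
    """Hypothetical per-user consent settings, one flag per purpose."""
    user_id: str
    purposes: dict = field(default_factory=dict)  # e.g. {"model_improvement": True}

    def allows(self, purpose: str) -> bool:
        # Default to no consent: anything not explicitly opted into is denied.
        return self.purposes.get(purpose, False)

IDENTIFIERS = {"user_id", "email", "name"}

def training_rows(events, consents):
    """Yield events usable for model improvement, stripped of direct identifiers."""
    for event in events:
        consent = consents.get(event["user_id"])
        if consent is None or not consent.allows("model_improvement"):
            continue  # no opt-in, so the event is excluded
        yield {k: v for k, v in event.items() if k not in IDENTIFIERS}

consents = {
    "u1": ConsentRecord("u1", {"model_improvement": True}),
    "u2": ConsentRecord("u2", {"personalisation": True}),
}
events = [
    {"user_id": "u1", "email": "a@example.com", "query": "reset password"},
    {"user_id": "u2", "email": "b@example.com", "query": "cancel plan"},
]
print(list(training_rows(events, consents)))  # [{'query': 'reset password'}]
```

Dropping identifier fields is not full anonymisation: free-text fields can still contain personal details, so they may need separate review or redaction.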

7) Trustworthiness and moral responsibility

Trustworthiness is earned by consistently delivering reliable, safe outputs. It goes hand in hand with moral responsibility: design teams must consider the broader societal impacts of their AI. The IDEO ethics cards emphasise respecting privacy and the collective good, and remind designers not to presume AI’s desirability. They also stress that data is not truth: data is incomplete and can be biased.

From a startup perspective:

- Be honest about limitations: Acknowledge uncertainties and potential errors. Setting realistic expectations builds credibility.

- Prioritise human values: Use your company’s core values to guide decisions. Harvard’s ethics blog argues that a values‑based approach, grounded in organisational culture, is more flexible and responsive than a rigid principles‑based approach.

- Engage stakeholders: Values‑based ethics encourage active stakeholder engagement, ensuring diverse perspectives shape your AI.

8) Regulatory compliance and broader governance

Globally, AI regulation is evolving. UNESCO’s recommendation calls for oversight, impact assessments, audit mechanisms and user data control. The EU AI Act and other regional frameworks impose transparency, documentation and risk management requirements. McKinsey notes that the EU AI Act classifies high‑risk systems (e.g., recruiting software) and requires companies to disclose capabilities, limitations and logic.

For product leaders:

- Stay ahead of regulations: Track local and international rules, including data protection laws like the GDPR and India’s Digital Personal Data Protection Act. Build compliance into your roadmap.

- Design for auditability: Adopt processes that will stand up to regulatory scrutiny. Model cards, data lineage documentation and fairness metrics should be ready for review.

- Scale governance: As your startup grows, formalise your ethics processes. A lightweight ethics checklist may suffice initially, but you’ll need more robust governance, such as a cross‑functional ethics review board, as you scale.

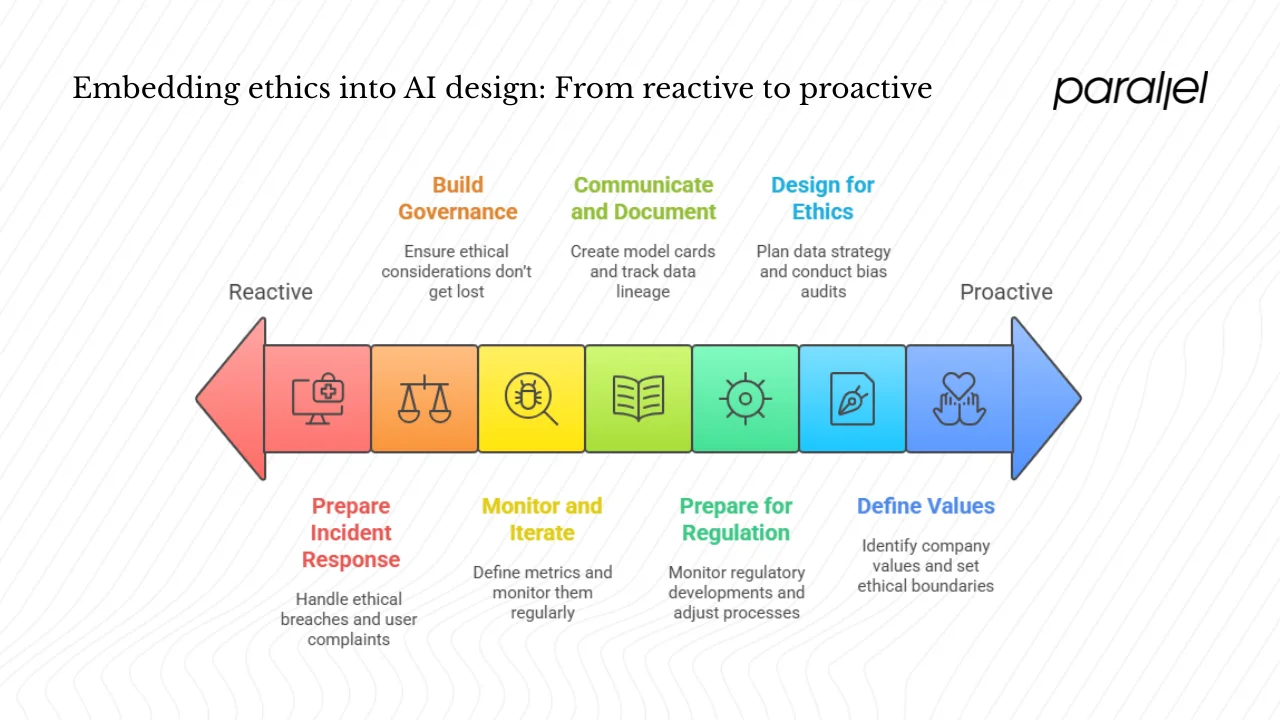

A practical framework for embedding ethics into AI design

Ethical design is not a one‑off task. It’s an ongoing practice that should be integrated into your product development cycle. Here’s a step‑by‑step framework based on what has worked for our team.

1. Define values and principles upfront

Start with introspection. Identify the values your company stands for and use them to set ethical boundaries. Harvard’s values‑based approach emphasises aligning decision‑making with core organisational values. Tools like IDEO’s ethics cards can prompt fruitful conversations about data, consent and unintended consequences.

2. Map stakeholder impact and risk

Identify who your AI will affect. Users are obvious, but consider non‑users who may be impacted indirectly (e.g., job applicants rejected by an algorithm). List potential harms (bias, privacy loss, autonomy erosion) and estimate their likelihood and severity. This risk mapping should inform design choices and testing priorities.
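A risk map can live in a spreadsheet, but keeping it as structured data makes it easy to sort and revisit. This sketch assumes a common likelihood × severity scoring on 1 to 5 scales; the stakeholders, harms and scores are hypothetical examples for an imagined recruiting tool.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    stakeholder: str
    harm: str
    likelihood: int  # 1 (rare) to 5 (almost certain); assumed scale
    severity: int    # 1 (minor) to 5 (severe); assumed scale

    @property
    def score(self) -> int:
        return self.likelihood * self.severity

register = [
    Risk("job applicants (non-users)", "qualified candidates screened out by biased ranking", 3, 5),
    Risk("recruiters (users)", "over-reliance on the AI shortlist", 4, 3),
    Risk("all applicants", "CV data retained longer than needed", 2, 4),
]

# Highest-scoring risks get design attention and test coverage first.
ranked = sorted(register, key=lambda r: r.score, reverse=True)
for risk in ranked:
    print(f"{risk.score:>2}  {risk.stakeholder}: {risk.harm}")
```

The numbers are judgement calls, not measurements; the value is in forcing the team to discuss them and to revisit the ranking as the product changes.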

3. Design for fairness, privacy and transparency from the start

Ethics cannot be bolted on. During the data collection and model development phases:

- Plan your data strategy: Document sources, ensure diversity and check for bias. Aim for representativeness and quality, as advocated by UNESCO’s new data governance model.

- Conduct bias audits: Use fairness metrics and cross‑group comparisons. Document assumptions and decisions.

- Build consent and logging into the interface: Let users know how their data is used and provide them with control. Create logging mechanisms for traceability.
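Traceability starts with an append-only record of what the model decided and which model version decided it. The sketch below assumes a JSON Lines file and hypothetical field names; a production system would more likely write to a database or log service with access controls.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone

def log_decision(path, model_version, inputs, output, reviewer=None):
    """Append one traceable decision record as a JSON line and return it."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # A hash of the inputs keeps personal data out of the log itself.
        "input_hash": hashlib.sha256(json.dumps(inputs, sort_keys=True).encode()).hexdigest(),
        "output": output,
        "human_reviewer": reviewer,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record

# Demo location and values are hypothetical.
log_path = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
entry = log_decision(log_path, "credit-model-1.3", {"income_k": 50, "region": "EU"}, "refer_to_human")
print(entry["model_version"], entry["output"])
```

Hashing inputs lets you confirm later that a given input produced a logged decision, but not reconstruct it; if you need to replay decisions during an investigation, you will have to retain the inputs themselves under stricter access rules.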

4. Build governance and human oversight

Create structures that ensure ethical considerations don’t get lost amidst deadlines:

- Define roles and responsibilities: Assign an ethics champion and set up an ethics review process. Keep it lightweight initially; a monthly review may suffice.

- Incorporate ethics into sprints: Add ethical impact as a criterion during planning. For instance, treat bias checks as tasks in your backlog.

- Prepare incident response: Define how you will handle ethical breaches or user complaints. Plan escalation steps and communications strategies.

5. Monitor, measure and iterate

Ethical design is iterative. Define metrics—fairness scores, user trust indicators, transparency metrics—and monitor them regularly. The healthcare review highlights that AI systems can evolve, raising new risks. Model drift can reintroduce bias; continuous monitoring and retraining help mitigate this. Use user feedback and quantitative metrics to drive improvements.
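One common way to notice the kind of drift described above is the Population Stability Index (PSI), which compares how inputs were distributed at training time with how they are distributed now. This is a minimal sketch; the age-band counts are hypothetical, and the 0.1 alert threshold is an industry rule of thumb rather than a standard.

```python
import math

def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions: sum of (a - e) * ln(a / e) over bin shares."""
    e_total, a_total = sum(expected), sum(actual)
    psi = 0.0
    for e, a in zip(expected, actual):
        e_share = max(e / e_total, eps)  # eps avoids log(0) for empty bins
        a_share = max(a / a_total, eps)
        psi += (a_share - e_share) * math.log(a_share / e_share)
    return psi

training_mix = [300, 400, 300]  # hypothetical counts per age band at training time
last_month = [150, 400, 450]    # hypothetical counts in production last month
score = population_stability_index(training_mix, last_month)
print(round(score, 3))  # 0.165
if score > 0.1:  # assumed threshold; 0.1 to 0.25 is often read as a moderate shift
    print("Input drift detected: re-run fairness audits and consider retraining")
```

A shift in who is using the product is exactly when per-group error rates can change, so a drift alert is a natural trigger for repeating the bias audit.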

6. Communicate and document

Documentation is part of transparency and accountability. Create model cards for each model, track data lineage and record design decisions. Communicate your ethics policies publicly; this builds trust and invites feedback. The UNESCO recommendation urges Member States to implement oversight and accountability measures, and although it is addressed to governments, it is a sensible benchmark for private companies too.
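A model card does not need special tooling; storing it as structured data next to the model keeps it versioned and reviewable. The fields below loosely follow the sections proposed in "Model Cards for Model Reporting" (Mitchell et al.), trimmed for a small team. The model name, owner and metric values are hypothetical placeholders, not real results.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ModelCard:
    name: str
    version: str
    owner: str  # the accountable person or role
    intended_use: str
    out_of_scope: list[str]
    training_data: str
    metrics: dict[str, float]  # include per-group metrics, not just overall ones
    limitations: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)

card = ModelCard(
    name="support-ticket-router",
    version="0.4.0",
    owner="product-owner@yourstartup.example",
    intended_use="Suggest a queue for incoming support tickets; agents confirm.",
    out_of_scope=["Automated refunds", "Account closures"],
    training_data="Anonymised historical tickets (describe sources and known gaps here)",
    metrics={"accuracy_overall": 0.91, "accuracy_non_native_speakers": 0.84},  # placeholders
    limitations=["Lower accuracy on non-English or mixed-language tickets"],
)
print(card.to_json())
```

Because the card is data, a release script can refuse to ship a model whose card is missing fields or per-group metrics.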

7. Prepare for regulation and scalable governance

As you scale, your governance should mature. Monitor regulatory developments and adjust processes accordingly. Use frameworks like UNESCO’s recommendation and the EU AI Act to anticipate compliance requirements. Invest in tools for bias detection, privacy management and explainability; these will help you meet external audits and build resilient products.

Case studies and startup‑specific considerations

Where AI ethics failed

Several real‑world failures illustrate the cost of ignoring ethics. In criminal justice, a 2016 ProPublica investigation of the COMPAS risk‑assessment algorithm used in US courts found that Black defendants were far more likely than white defendants to be incorrectly flagged as likely to reoffend. In healthcare, unrepresentative data has led to misdiagnoses and inequitable treatment. These cases show that biased models can harm people and damage reputations.

Pragmatic ethics in early‑stage startups

Resource constraints are real. Early teams juggle fundraising, product-market fit and growth. Here are pragmatic tips:

- Minimal viable governance: Start with a simple ethics checklist and a monthly review meeting. Document decisions and revisit them as you learn.

- Integrate ethics into your backlog: Treat bias tests, consent flows and privacy reviews like any other feature. Allocate time for them in sprints.

- Cross‑functional collaboration: Engage legal counsel, domain experts and user researchers early. Diversity of expertise helps identify blind spots.

- Lightweight audit checklists: Before each release, run through a short list: Did we test for bias? Do we have a consent banner? Is there human oversight where needed? Iterate and expand as you grow.
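The release checklist above can be turned into a small gate that your team runs before each deploy. This is a hypothetical sketch: the questions mirror the ones listed, the answers would come from your team's review, and any unanswered item is treated as not done.

```python
# Hypothetical release checklist; answers come from the team's pre-release review.
CHECKLIST = [
    "Did we test for bias?",
    "Do we have a consent banner?",
    "Is there human oversight where needed?",
]

def release_ready(answers: dict[str, bool]) -> tuple[bool, list[str]]:
    """Return (ready, open_items); any unanswered item counts as not done."""
    open_items = [item for item in CHECKLIST if not answers.get(item, False)]
    return (not open_items, open_items)

ready, open_items = release_ready({
    CHECKLIST[0]: True,
    CHECKLIST[1]: True,
})
print(ready)       # False
print(open_items)  # ['Is there human oversight where needed?']
```

Starting with a short list and a strict "unanswered means no" default keeps the check lightweight while making skipped items visible; new questions can be appended as the product grows.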

Conclusion

Designing AI ethically is not optional—it's a fundamental requirement for building products that users trust and regulators will accept. Failing to address ethics invites bias, discrimination and privacy breaches, and the resulting reputational damage is hard to recover from. Global public confidence in AI fairness and data protection is already declining, and organisations that ignore ethics will be left behind.

But there is a bright side: embedding ethical considerations in AI design can be your advantage. By defining your values, mapping stakeholders, designing for fairness and transparency, building governance, monitoring outcomes and preparing for regulation, you lay the foundation for resilient, trustworthy AI. This not only mitigates risk but also differentiates your product, attracts conscientious users and supports long‑term success.

As you move forward with your AI project, ask yourself: How will your product impact the people who use it? What values do you refuse to compromise? The answers will guide you to build responsible AI that serves both your business and society. Let’s choose to build with care and set a standard that future products will follow.

FAQ

Q1. What are the most common ethical issues in AI design?

Bias and discrimination arise when models are trained on unrepresentative data, leading to unfair outcomes. Lack of transparency and explainability erodes trust, while data privacy breaches and inadequate human oversight are recurring issues. Organisations must also consider moral responsibility and accountability.

Q2. How do I start implementing ethical considerations in a small startup?

Begin by defining your organisation’s values and ethical baseline. Map stakeholder impacts, design for fairness and privacy from the start, and create a simple governance process (e.g., an ethics checklist and regular review). Include ethics tasks in your backlog and engage cross‑functional partners early.

Q3. What is the difference between transparency and explainability in AI?

Transparency means being upfront about how your AI works and how data is used. Explainability goes further: it provides understandable reasons for specific outputs, helping users and regulators trust and verify the system.

Q4. How can we measure fairness in our AI system?

Use metrics appropriate to your domain: equal error rates across demographic groups, demographic parity or equal opportunity metrics. Monitor these regularly, run stratified tests and compare outcomes across segments to detect disparities.
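As an illustration, demographic parity compares how often each group receives a positive prediction, while equal opportunity compares true-positive rates (how often genuinely positive cases are correctly identified). This sketch assumes binary labels and predictions with hypothetical toy data; libraries such as Fairlearn offer tested implementations of these metrics.

```python
def rate(values):
    """Share of 1s in a list; returns 0.0 for an empty list (see note below)."""
    return sum(values) / len(values) if values else 0.0

def fairness_report(y_true, y_pred, groups):
    """Demographic parity and equal opportunity gaps between groups (binary labels)."""
    selection, tpr = {}, {}
    for g in sorted(set(groups)):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        selection[g] = rate([y_pred[i] for i in idx])
        positives = [i for i in idx if y_true[i] == 1]
        # A group with no actual positives gets 0.0 here; real code should flag it.
        tpr[g] = rate([y_pred[i] for i in positives])
    return {
        "selection_rate": selection,
        "true_positive_rate": tpr,
        "demographic_parity_diff": max(selection.values()) - min(selection.values()),
        "equal_opportunity_diff": max(tpr.values()) - min(tpr.values()),
    }

# Hypothetical toy data: 1 = positive outcome
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(fairness_report(y_true, y_pred, groups))
```

Here group A is selected 75% of the time and group B 25%, and qualified members of group B are found only half as often; which gap matters most depends on your domain, and the two metrics can conflict.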

Q5. Does regulatory compliance mean we just follow laws?

No. Laws are evolving. The UNESCO recommendation calls for broader oversight, audit and due diligence mechanisms, and the EU AI Act imposes transparency requirements for high‑risk systems. Aim for ethical best practices—fairness, privacy, accountability—beyond legal minimums to build resilience.

Q6. Who is responsible if an AI system causes harm?

Humans are ultimately responsible. The UNESCO recommendation states that responsibility and liability for AI decisions must be attributable to natural or legal persons. Define accountability within your organisation and ensure human oversight.

Q7. How do we balance innovation speed and ethical safeguards?

Embed ethics into your development process instead of treating it as an afterthought. Create lightweight governance, integrate ethics tasks into sprints, and use frameworks like values-based ethics to guide decisions. In my experience, early investment in ethics prevents costly fixes later and builds long‑term trust.
