How to Recruit Participants for a Research Study (2026)

Learn how to recruit participants for a research study, including defining criteria, outreach methods, and incentives.

The way you recruit people for product research is as important as the number of people you involve. If you field the wrong group, your data will mislead your product, wasting precious time and money. As a founder at Parallel, I’ve seen early-stage teams chase volume instead of relevance, only to realise that the real question isn’t “How many?” but “How do you recruit participants who actually match your goals?” This article answers that question with principles, methods and examples that work in 2025.

Step #1: Define your research objective and target population

Before thinking about platforms or incentives, be clear about what you hope to learn. Are you testing a prototype’s usability? Exploring behavioural patterns? Seeking feedback on features? Articulate the decision you need to make. Without a clear objective, you’ll gather random opinions that don’t inform product strategy.

Next, identify your target population—people whose experiences matter to your decision. Consider:

- Demographics: age, location, education or job role.

- Behaviours: frequency of product use, workflows, tasks.

- User segments: novices vs power users, paying customers vs prospects, early adopters vs hold‑outs.

Your target population is not everyone. The US National Institutes of Health emphasises that recruitment must be equitable and reflect the population needed to answer the research question. Defining inclusion criteria ensures you invite people who meet key characteristics. Exclusion criteria remove those who might distort findings or face undue risk—for example, participants who speak a different language or have health conditions that make participation unsafe.

If you build a budgeting app and want to improve onboarding for first‑time users, your inclusion criteria might be “people who started using a budgeting tool within the last month.” Exclusion might be “anyone with prior experience building financial software.” Having a crisp target population keeps recruitment aligned with your objectives and sets the stage for quality data.

Step #2: Design your sample and choose sampling methods

Sampling is how you decide which members of your target population to invite. It influences representativeness, bias, cost and speed. There are two broad categories: probability sampling and non-probability sampling. In probability sampling, every member of the population has a known, non-zero chance of being selected. Simple random sampling draws participants at random from a complete list of the population. Stratified sampling divides the population into sub-groups (e.g., by age or experience level) and samples randomly within each stratum so that smaller sub-groups are still represented.
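As a rough illustration of stratified sampling, the sketch below groups a hypothetical participant list by experience level and draws the same number at random from each group. The field names (`id`, `level`), the pool sizes and the fixed seed are assumptions made for the example, not part of any particular tool.

```python
import random
from collections import defaultdict


def stratified_sample(people, stratum_of, per_stratum, seed=0):
    """Draw up to `per_stratum` people at random from each stratum."""
    rng = random.Random(seed)  # fixed seed so the draw is repeatable
    strata = defaultdict(list)
    for person in people:
        strata[stratum_of(person)].append(person)

    sample = []
    for key in sorted(strata):
        members = strata[key]
        # Never ask for more people than the stratum contains.
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample


# Hypothetical pool: 8 novices and 2 power users.
people = [{"id": i, "level": "novice" if i < 8 else "power"} for i in range(10)]
picked = stratified_sample(people, lambda p: p["level"], per_stratum=2)
print([(p["id"], p["level"]) for p in picked])
```

A simple random draw of four people from this pool could easily contain no power users; sampling within each stratum guarantees that both groups appear.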

Non‑probability methods are more common in lean product research. Convenience sampling selects whoever is available, making it quick and inexpensive but potentially biased. Purposive sampling targets individuals with specific knowledge or experience that matches the research goal. Snowball sampling enlists participants to refer peers, useful for hard‑to‑reach groups. Quota sampling recruits to fill quotas for certain segments (e.g., equal numbers of iOS and Android users). When resources are tight, I often combine purposive and convenience sampling—start with known users who meet the criteria, then expand through referrals.
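Quota sampling can be sketched in a few lines. The example below accepts applicants in the order they arrive until each segment is full; the `platform` field, the applicant names and the quota sizes are hypothetical.

```python
def fill_quotas(applicants, quotas):
    """Accept applicants in arrival order until each segment's quota is full."""
    remaining = dict(quotas)  # copy so the caller's quotas are not modified
    accepted = []
    for person in applicants:
        segment = person["platform"]
        if remaining.get(segment, 0) > 0:
            accepted.append(person)
            remaining[segment] -= 1
    return accepted, remaining


applicants = [
    {"name": "A", "platform": "iOS"},
    {"name": "B", "platform": "iOS"},
    {"name": "C", "platform": "iOS"},
    {"name": "D", "platform": "Android"},
]
accepted, remaining = fill_quotas(applicants, {"iOS": 2, "Android": 2})
print([p["name"] for p in accepted])  # ['A', 'B', 'D']
print(remaining)                      # {'iOS': 0, 'Android': 1}
```

The leftover count shows which segment still needs outreach, which is usually the more useful output when recruitment is uneven.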

Choosing a method depends on the question, timeline and resources. If you need statistically generalisable results, probability sampling is the gold standard; for exploratory design research, purposive or snowball sampling often suffices. The key is to remain transparent about limitations and avoid overstating findings.

Selecting the right sampling method is part of understanding how to recruit participants for a research study. When resources and timelines are tight, clearly communicating the trade-offs between representativeness and speed helps stakeholders appreciate why a certain approach was chosen.

Step #3: Ethical and institutional oversight

Even small studies involve human subjects; ethics matter. Institutional Review Boards (IRBs) or Human Research Protection Programs (HRPPs) oversee recruitment and protect participants. Brown University’s policy states that recruitment materials must be reviewed and approved by the IRB, must provide sufficient time for participants to consider participation, and must avoid undue influence or guarantees of benefits. The NIH policy manual forbids finders’ fees and recruitment bonuses and requires compensation plans to be described in the protocol and consent forms.

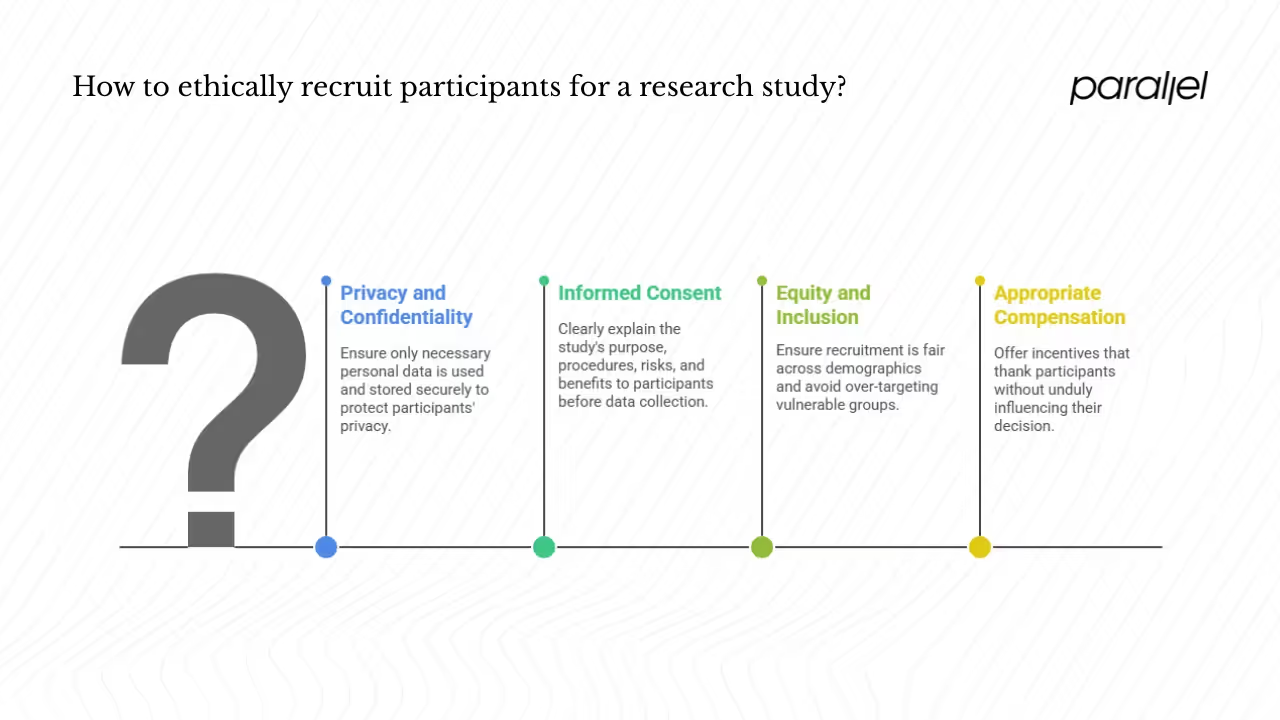

Ethical recruitment means:

- Privacy and confidentiality: use only necessary personal data and store it securely.

- Informed consent: clearly explain the purpose, procedures, risks and benefits before any data is collected.

- Equity and inclusion: ensure recruitment is fair across demographics and avoid over‑targeting vulnerable groups. Brown’s guidance emphasises equitable recruitment and warns against multiple contacts that could exert undue pressure.

- Appropriate compensation: incentives should thank participants for their time without unduly influencing their decision.

When working out how to recruit participants for a research study in a way that respects people, always start with ethics. IRBs exist to ensure that our recruitment practices serve participants’ interests and protect their rights.

If your study involves sensitive data or certain populations (children, prisoners, pregnant women), consult your IRB early. Even for product research, it’s wise to adopt ethical practices—transparency builds trust with participants and your team.

Step #4: Choose recruitment channels and platforms

Now that you know how to recruit participants for a research study conceptually, let’s discuss where to find them. There are two main avenues: offline outreach and online platforms.

Offline outreach

Even in 2025, offline channels matter, especially when your target includes people less active online or with limited English proficiency. Flyers, posters and postcards at community centers, libraries or coffee shops can attract participants. A pilot trial article suggests creating simple, visually appealing materials and distributing them through bulletin boards, local events, social organisations and listservs. Working with community gatekeepers—respected leaders or organisations within a community—builds trust and helps reach underrepresented groups. Spend time volunteering or engaging with the community before recruiting; trust cannot be rushed.

Online recruitment platforms

Most product research now happens online. Platforms like Prolific, Respondent and UserTesting offer large pools of pre‑screened participants. Use prescreening filters to match your inclusion criteria and avoid unqualified respondents. According to a 2025 Userlytics report, 55% of companies recruit from internal user databases and 49% use external agencies. That means your own customer base is a powerful source—send in‑app invitations or email campaigns targeting users who fit your criteria. Social media ads and forums (e.g., Reddit communities, Slack groups) can reach specific segments quickly. However, volume doesn’t guarantee quality: only 3–20% of contacted individuals actually participate in studies. Plan for a low response rate and over‑recruit accordingly.
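The 3–20% participation range translates directly into how many invitations to send. A minimal sketch, assuming you want 10 completed sessions (a hypothetical target) and treating the participation rate as a whole-number percentage:

```python
import math


def invitations_needed(target_participants, participation_pct):
    """Invitations to send so that roughly `target_participants` take part."""
    if not 0 < participation_pct <= 100:
        raise ValueError("participation_pct must be between 0 and 100")
    # Round up: a fractional invitation still means one more person to contact.
    return math.ceil(target_participants * 100 / participation_pct)


for pct in (3, 20):
    print(f"{pct}% participation -> {invitations_needed(10, pct)} invitations")
```

At the pessimistic end of the range, 10 participants require 334 invitations; at the optimistic end, 50. Planning against the lower figure avoids a second outreach round.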

When using any platform, craft clear messages that convey:

- Benefits of participating: what they’ll learn or how their feedback will influence the product.

- Time commitment: session length and tasks.

- Privacy assurances: data protection and confidentiality.

- Incentives: type and amount.

User research is about building relationships, not just collecting data. Even automated recruitment can be personalised—address prospective participants by name, mention how they were selected, and invite questions. Avoid jargon and be transparent about any compensation and privacy policies.

Step #5: Create a thoughtful screening process and incentives

After capturing interest, you need to filter people who truly match your inclusion criteria. Screening questionnaires are the first line of defence. Keep them short and focused on behaviours relevant to your objective: ask about recent product use, experience levels and demographics. Use multiple choice questions rather than open text; they are easier to analyse and reduce attrition. When screening for a budgeting app onboarding study, questions could include:

- How long have you used your current budgeting tool? (less than 1 month, 1–6 months, more than 6 months)

- How often do you log into your budgeting app? (daily, weekly, monthly)

- Have you designed or built a finance app before? (yes/no)
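The questions above can be expressed as a small data-driven screener. This is a sketch under assumptions: the question ids, the choice of which answers qualify, and treating login frequency as context only (every option qualifies) are illustrative rather than a prescribed rule set.

```python
SCREENER = [
    {
        "id": "tenure",
        "options": ["less than 1 month", "1-6 months", "more than 6 months"],
        "qualifying": {"less than 1 month"},  # inclusion: new users only
    },
    {
        "id": "frequency",
        "options": ["daily", "weekly", "monthly"],
        "qualifying": {"daily", "weekly", "monthly"},  # collected for context
    },
    {
        "id": "built_finance_app",
        "options": ["yes", "no"],
        "qualifying": {"no"},  # exclusion: finance app builders
    },
]


def screen(answers):
    """Return (passed, reasons) for one respondent's multiple-choice answers."""
    reasons = []
    for question in SCREENER:
        answer = answers.get(question["id"])
        if answer not in question["options"]:
            reasons.append(f"{question['id']}: missing or invalid answer")
        elif answer not in question["qualifying"]:
            reasons.append(f"{question['id']}: '{answer}' does not match criteria")
    return (not reasons, reasons)


print(screen({"tenure": "less than 1 month", "frequency": "weekly",
              "built_finance_app": "no"}))
print(screen({"tenure": "less than 1 month", "frequency": "daily",
              "built_finance_app": "yes"}))
```

Returning the reasons alongside the pass/fail result makes it easier to spot a question that is disqualifying more people than intended.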

Screening is not a bureaucratic hoop; it’s at the core of recruiting participants who align with your research goals. Thoughtful questions prevent misaligned participants from slipping through and respect their time by disqualifying early when necessary.

Multiple screening stages can improve quality but slow down recruitment. For short studies, one stage is enough; for sensitive research, follow up with a 5‑minute call to confirm suitability. Keep in mind that 46% of companies cite difficulty finding participants and 44% cite lack of resources—efficient screening helps you manage limited budgets.

Incentives and motivations

Compensation matters, but it shouldn’t coerce. The University of Oregon notes that compensation can be monetary (cash, gift cards, vouchers) or non‑monetary (trinkets, course credit), and it should not be so large that it unduly influences decision‑making. Payments should be pro‑rated if participants withdraw early and should never be considered benefits of participation.
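One simple way to pro-rate is by the share of the scheduled session a participant completed. That linear rule is an assumption for illustration; your protocol or IRB may define pro-rating differently. Amounts are kept in cents to avoid floating-point rounding.

```python
def prorated_payment_cents(full_payment_cents, minutes_completed, minutes_scheduled):
    """Pay in proportion to the time completed, rounded down to a whole cent."""
    if minutes_scheduled <= 0:
        raise ValueError("minutes_scheduled must be positive")
    # Clamp so nobody is paid less than zero or more than the full amount.
    completed = max(0, min(minutes_completed, minutes_scheduled))
    return full_payment_cents * completed // minutes_scheduled


# A $20 incentive for a 60-minute session, participant withdraws after 30 minutes.
print(prorated_payment_cents(2000, 30, 60))  # 1000
```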

Monetary rewards are effective for general consumer studies; however, non‑monetary motivations can be powerful. Many early‑stage SaaS users appreciate early access to new features, the opportunity to influence product direction, or the chance to learn something new. For enterprise research, emphasise the professional benefit—how participation can improve their workflows or decision‑making.

We’ve found that modest gift cards or product credits accompanied by a sincere thank‑you work well. Avoid paying participants more than you can justify; high payouts can distort responses and raise ethical concerns.

Step #6: Manage logistics and participant experience

Organising sessions requires more than calendars. You must coordinate schedules, accommodate time zones (especially when recruiting globally), and consider participants’ comfort. Remote research—through video or unmoderated tools—offers convenience but increases dropout risk. A 2025 viewpoint on digital trials notes that remote participants are more likely to disengage because of unclear requirements, lack of rapport and technical challenges. To mitigate this:

- Send clear instructions and reminders: outline expectations, provide links and test equipment ahead of time.

- Host orientation or warm‑up sessions: build rapport and reduce anxiety.

- Have a contingency plan: schedule buffers and over‑recruit to account for no‑shows.

For in-person sessions, ensure locations are accessible, safe and comfortable. Offer flexible scheduling (including evenings or weekends), and provide translation for participants with limited English proficiency and sign-language interpretation for those who need it. The AMA journal highlights underrepresentation of people with limited English proficiency and calls for early engagement, inclusive recruitment and language assistance. Address these needs proactively; they are not afterthoughts.

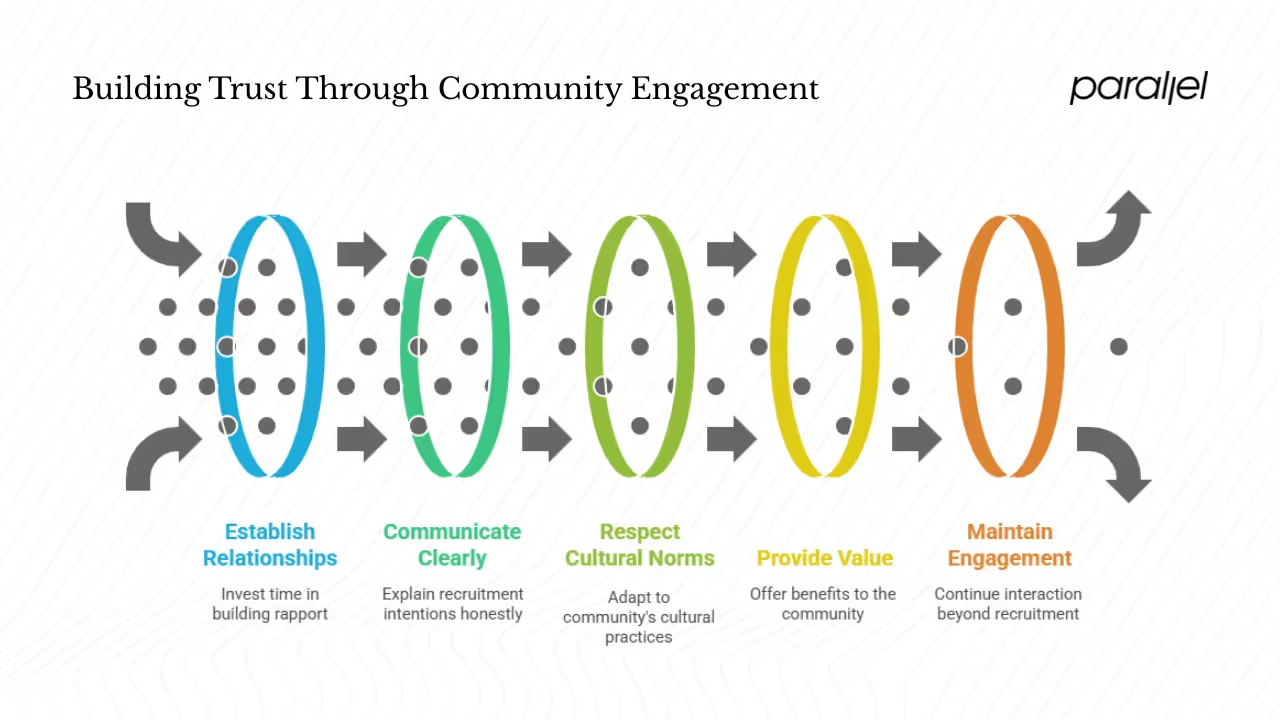

Step #7: Engage communities and build trust

Traditional recruitment overlooks marginalised groups or those without digital access. Community engagement helps bridge this gap. According to the pilot trials article, researchers should identify gatekeepers—formal or informal leaders who influence communities—and invest time in relationships. Gatekeepers can introduce you, vouch for your intentions and help you reach participants who might otherwise ignore a flyer or ad.

Building trust involves:

- Being clear and honest: explain why you’re recruiting in their community, what you’ll do with the information and how it might benefit them.

- Respecting cultural norms: ask gatekeepers about appropriate language, venues and timing.

- Providing value: volunteer, sponsor an event or share findings with the community. People are more willing to participate when they see mutual benefit.

Always remember that communities are not recruitment channels; they are partners. Engagement is ongoing—return results, thank participants publicly and maintain the relationship beyond a single study.

Step #8: Optimise online platforms and prescreening

Online tools simplify how you recruit participants for a research study at scale. Here’s how to use them effectively:

- Choose the right platform: Prolific is strong for academic and behavioural studies; Respondent is good for professional B2B participants; UserTesting or PlaybookUX specialise in usability testing. Each has different cost structures and prescreening capabilities.

- Apply prescreening filters: filter on demographics, occupation, product usage or country to narrow the pool. This reduces screening workload and increases match rates.

- Balance reach and qualifications: a broad search may yield more candidates but lower quality. Narrow filters improve quality but may slow recruitment. Start broad, then tighten criteria if you have too many unqualified applicants.

- Protect data quality: include attention checks or validation questions during screening to weed out bots or inattentive respondents.
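A data-quality filter for screener responses might look like the sketch below. Both checks are assumptions for illustration: an instructed-response item ("select 'strongly agree' for this question") and a minimum completion time, with hypothetical field names and a hypothetical 20-second threshold that you would tune to your own screener.

```python
def passes_quality_checks(response, expected_answer="strongly agree", min_seconds=20):
    """Flag responses that fail the attention item or finish implausibly fast."""
    answer = response.get("attention_check", "").strip().lower()
    attention_ok = answer == expected_answer
    not_too_fast = response.get("seconds_to_complete", 0) >= min_seconds
    return attention_ok and not_too_fast


responses = [
    {"id": 1, "attention_check": "Strongly agree", "seconds_to_complete": 95},
    {"id": 2, "attention_check": "Agree", "seconds_to_complete": 80},
    {"id": 3, "attention_check": "strongly agree", "seconds_to_complete": 4},
]
kept = [r["id"] for r in responses if passes_quality_checks(r)]
print(kept)  # [1]
```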

Internal user databases remain one of the richest sources—55% of companies use them. Integrate analytics to identify active users who match your criteria. Respect privacy laws (GDPR, CCPA) and obtain consent before contacting users. Use a personalised tone, thank them for their loyalty and explain how their feedback will shape improvements.

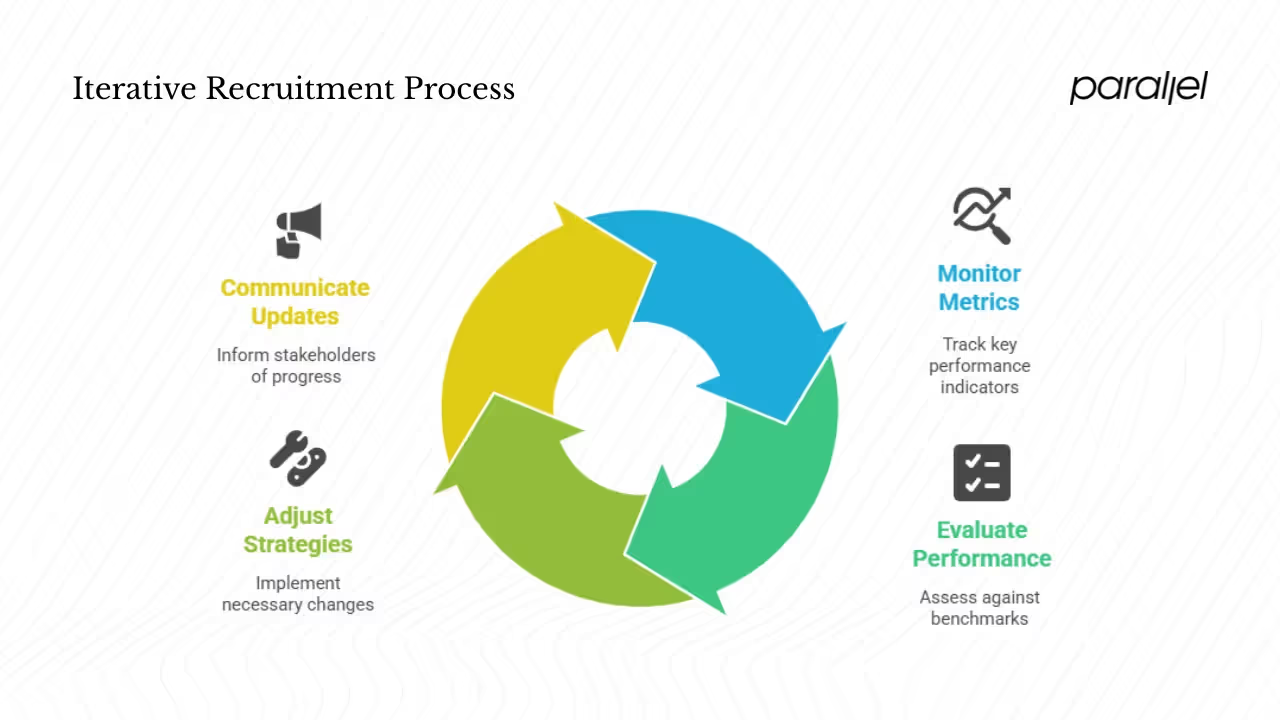

Step #9: Monitor, evaluate and adjust your recruitment process

Recruitment is iterative. Monitor metrics like:

- Response rate: number of people who respond to invitations vs those contacted.

- Eligibility rate: ratio of respondents who pass screening.

- Cost per recruit: total spending divided by number of participants.

- Time‑to‑recruit: days from initial outreach to session completion.

- Participant quality: quality of feedback or usability metrics.
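The first three metrics are simple ratios and can be tracked with a small helper. The sketch below uses the funnel from the B2B SaaS example in this article (100 invitations, 20 responses, 12 qualified and completed interviews); the total spend of 600 is a made-up figure, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RecruitmentFunnel:
    contacted: int
    responded: int
    qualified: int
    completed: int
    total_spend: float

    @property
    def response_rate(self):
        """Share of contacted people who responded."""
        return self.responded / self.contacted if self.contacted else 0.0

    @property
    def eligibility_rate(self):
        """Share of respondents who passed screening."""
        return self.qualified / self.responded if self.responded else 0.0

    @property
    def cost_per_recruit(self):
        """Total spend divided by participants who completed a session."""
        return self.total_spend / self.completed if self.completed else 0.0


funnel = RecruitmentFunnel(contacted=100, responded=20, qualified=12,
                           completed=12, total_spend=600.0)
print(f"response rate:    {funnel.response_rate:.0%}")
print(f"eligibility rate: {funnel.eligibility_rate:.0%}")
print(f"cost per recruit: {funnel.cost_per_recruit:.2f}")
```

Time-to-recruit and participant quality need dates and qualitative judgement, so they are left out of this sketch.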

Continuous monitoring and adjustment is part of recruiting participants for a research study in a professional way. By treating recruitment as an ongoing process rather than a one-off task, you can refine methods and outcomes over time.

Low response or eligibility rates may indicate that your outreach channels aren’t reaching the right people or that your screening is too restrictive. If recruitment is too slow, adjust inclusion criteria (while staying true to the research objective) or try new channels. Keep budgets and timelines aligned; when they slip, inform stakeholders early.

Case studies and examples

Shoestring budgeting app pilot: A startup in our portfolio wanted to test a new onboarding flow for people new to budgeting apps. They defined inclusion as “users with < 1 month of using their current budgeting tool” and excluded those with prior finance app development experience. They recruited through their existing email list, social media ads targeting “personal finance beginners” and partnerships with finance bloggers. Screening questions filtered out experienced developers. Participants were thanked with $20 gift cards. The team over-recruited by 30% to absorb no-shows: 3 people did not show up, and 10 remote usability sessions were completed. Feedback helped them simplify onboarding screens, reducing time-to-setup by 30%. This small pilot shows that you can recruit participants for a research study without spending heavily.

Community partnership for language inclusion: A bilingual product team wanted feedback on a healthcare appointment scheduler from Spanish‑speaking adults. Rather than rely on general panels, they partnered with a local community health centre. Gatekeepers at the centre introduced them to potential participants, explained the study in Spanish and helped schedule sessions. The team provided language assistance and delivered results back to the community. Recruitment took longer than sending out a survey but resulted in higher engagement and more relevant insights. The process embraced the AMA’s call for early engagement and inclusive recruitment of people with limited English proficiency.

Internal database for B2B SaaS: Another early‑stage SaaS company used its product analytics to identify customers who had recently churned after a poor onboarding experience. They filtered the database by industry, company size and role (product manager, data analyst) and sent personalised invitations. Out of 100 invitations, 20 responded; 12 qualified and completed interviews. Insights revealed a confusing data import workflow. By addressing it, they reduced churn by 20%. This illustrates the power of tapping your own users and underscores why 55% of companies recruit from internal databases.

Conclusion: recruitment is an investment, not a chore

Good research starts with the right people. If you skip the upfront work of defining your objective, identifying your target population, choosing suitable sampling methods and planning ethically, you risk learning the wrong lessons. Recruitment is not just a logistical step; it shapes the quality and credibility of your insights.

At Parallel, we treat recruitment as an investment. We use a mix of sampling methods, engage communities, apply rigorous screening, offer fair incentives and constantly evaluate our process. We never forget that participants are people with their own time and expertise. Respecting them is key to unlocking insights—and the answer to “how do you recruit participants for a research study” lies in that respect. Follow the principles and examples here, adjust them to your context and you’ll increase both the relevance and impact of your research.

FAQ

1) What is the recruitment method in research?

It’s the plan for identifying and inviting people to participate in a study. It includes the sampling strategy (probability vs non‑probability sampling), outreach channels (internal databases, social media, panels), screening procedures and consent process.

2) What is the fastest way to recruit quality research participants?

Use your existing networks—customers, colleagues, friends of friends—and leverage platforms with pre‑qualified participants. Targeted ads on social media can generate leads quickly. But speed often trades off with representativeness; set realistic expectations and over‑recruit to account for drop‑outs.

3) How do you recruit users for user research?

Clarify your user segments and behaviours that matter. Use product analytics or customer data to find users who match those criteria. Reach out via email or in‑app invitations, emphasise the benefit of participating, and offer fair compensation. You can also use third‑party platforms, but always maintain control over screening and consent.

4) What is an example of participant recruitment?

A startup testing a mobile budgeting app defined their target as new users (< 1 month of usage) and excluded people with finance app development experience. They recruited via their email list, social media ads and partnerships with bloggers, screened respondents with brief questions, provided $20 gift cards and ran remote usability sessions. This example shows how to recruit participants for a research study effectively on a tight budget.
