How Does GenAI Work? Complete Guide (2026)

Understand how generative AI works, including neural networks, training data, and applications across industries.

Generative artificial intelligence (GenAI) isn’t just another hype cycle. In the last two years, private investment in this space surged almost 20%, with more than US$33.9 billion flowing into generative models in 2024. Business adoption is keeping pace: a McKinsey survey published in May 2024 found 65% of organisations now use generative systems in at least one function, up from a third of firms less than a year earlier.

As I’ve worked with early‑stage software teams, these numbers match what we see on the ground—everyone wants to know how GenAI work and whether it’s the right fit for their product. This piece covers the mechanics behind GenAI, explains its place within artificial intelligence, and gives practical guidance for founders, product managers and design leaders.

What makes GenAI different?

Traditional artificial intelligence systems classify, predict or rank. Generative artificial intelligence systems go further. They take patterns learned from large datasets and produce new content—text, images, code, audio, video or structured data—rather than just evaluating input. Large language models (LLMs) such as GPT‑4, Claude, Gemini or Llama are prime examples: they process a sequence of tokens and suggest a plausible next token based on probabilities learned during training. This simple mechanism can produce paragraphs of coherent prose, drawings or computer programs. The term GenAI has become shorthand for this class of models; when I use it here, I mean any artificial intelligence model that synthesises new content from learned patterns.

Practical examples

A few real‑world examples show GenAI’s versatility:

- Text generation: Tools like ChatGPT, Claude and Copilot write summaries, marketing copy or internal knowledge base articles. In our studio, we’ve used ChatGPT to produce a draft UX copy for onboarding flows; we then refine tone and clarity based on our brand’s voice.

- Image creation: Diffusion‑based models such as DALL·E and Stable Diffusion turn text descriptions into illustrations. Designers can quickly try out mood boards or test icon concepts without commissioning artwork.

- Audio and video: Models like Suno or Runway Gen‑2 generate music or short video clips for prototyping motion design.

- Synthetic data: When user data is sensitive or scarce, generative models can create synthetic user responses or datasets; however, as IDEO researchers show, synthetic personas seldom match the richness of real participants.

Relevance to start‑ups and product design

Early‑stage teams move quickly and must iterate. GenAI can accelerate ideation and reduce overhead by automating small tasks: drafting error messages, generating icons, synthesising research transcripts or even producing SQL queries. We’ve seen teams reduce time‑to‑value by nearly 30% by using large language models for initial documentation and support responses.

But generative systems are not a replacement for human insight—synthetic users often gloss over subtle needs. Used well, GenAI extends human creativity; used carelessly, it can mislead product direction. As you read on, keep asking yourself: how does GenAI work in your context?

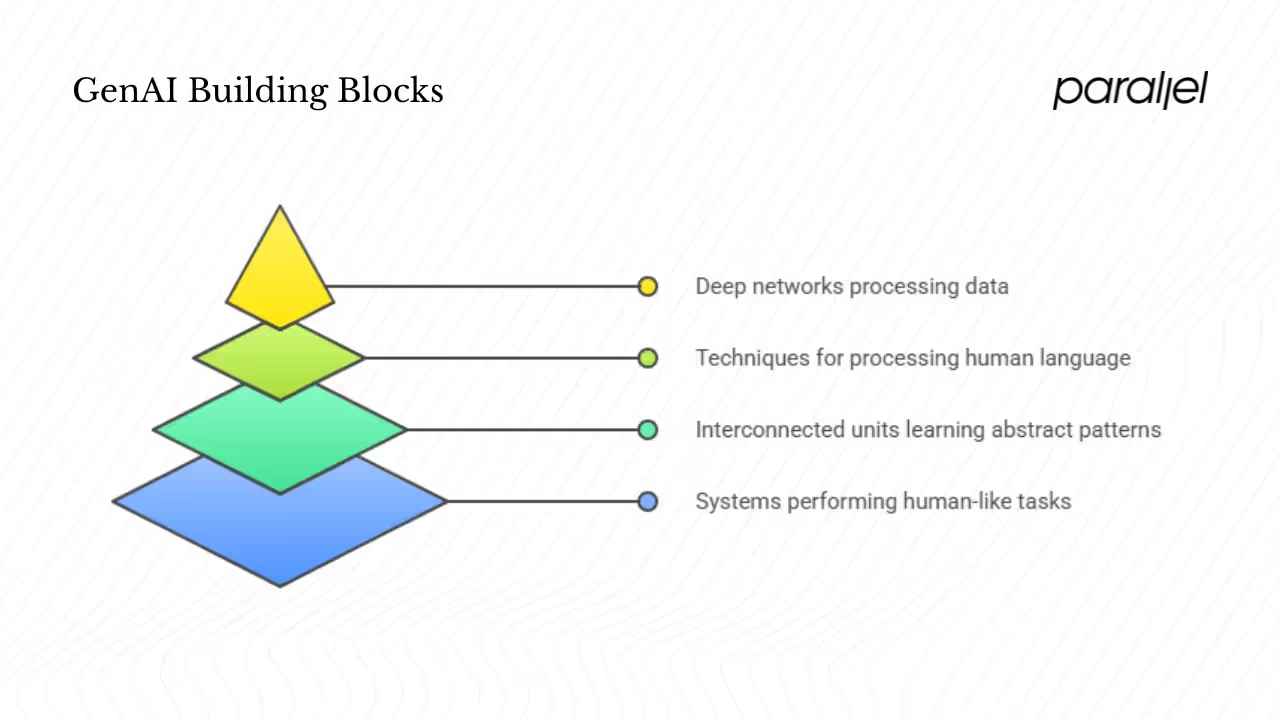

Important concepts and building blocks

Artificial intelligence and machine learning

Artificial intelligence is a broad field of computer science concerned with building systems that perform tasks normally requiring human cognition—recognition, reasoning or decision‑making. Machine learning is a subset where models learn patterns from examples rather than being explicitly programmed. The MIT Sloan Management Review explains machine learning as algorithms that “learn without explicitly being programmed” and are “as good as the data and the models that we have”. Machine learning includes supervised, unsupervised and reinforcement methods.

Neural networks and deep learning

Most generative models are built from artificial neural networks. These networks consist of layers of interconnected units (neurons) with trainable parameters. A simple network might have one hidden layer, but deep learning refers to architectures with many layers, enabling models to learn abstract representations. The depth and width of a network influence its capacity; deeper models can capture complex patterns but need more data and compute to train. An important innovation behind GenAI is the transformer architecture, which uses attention mechanisms to weigh connections between tokens in a sequence and supports massive parallelism.

Understanding these building blocks helps answer the central question: how does GenAI work when deep networks process data?

Natural language processing (NLP)

NLP encompasses techniques for working with human language. Tokenization (splitting text into units), embeddings (turning tokens into vectors) and language modeling (predicting the next token) are building blocks of large language models. GPT‑style models use unsupervised training on billions of tokens, learning to predict the next word in a sentence. Fine‑tuning and reinforcement learning from human feedback refine these models for specific tasks or safety requirements.

Architecture and model types

1) Transformer models and large language models

The transformer architecture underpins most modern language models. It replaces recurrence with self‑attention, allowing models to consider all tokens in a context simultaneously. Multi‑head attention and positional encoding let transformers capture both local and global connections. Large language models (LLMs) such as GPT‑4 or Claude consist of stacked transformer layers with billions of parameters. They generate text by sequentially sampling tokens from a probability distribution conditioned on a prompt and the model’s internal state. Scale is a major differentiator—larger models with more parameters generally perform better at a wider range of tasks, but they also require substantial compute resources for training and inference.

2) Generative adversarial networks and diffusion models

For images and other modalities, models like generative adversarial networks (GANs) and diffusion models excel. GANs pit two networks against each other: a generator that creates images and a discriminator that tries to distinguish generated images from real ones. Through this adversarial process, the generator learns to create realistic outputs. Diffusion models start with random noise and gradually denoise it into a coherent image through a series of learned steps; they have become popular for their stability and creative flexibility.

3) Variational autoencoders and multimodal models

Variational autoencoders (VAEs) learn latent representations of input data by encoding and decoding it through probabilistic layers. They are often used for controllable generation where smooth interpolation between inputs is needed. Multimodal models integrate text, images and audio, enabling cross‑modal tasks (e.g., generating images from text or describing images in natural language). As generative systems expand, models that integrate multiple modalities will be important for product design—think chat interfaces that can return visual suggestions or voice prototypes.

4) Training data and preparation

Generative models depend on data scale and quality. The Stanford index reports that training compute doubles every five months and datasets double every eight months, reflecting the rapidly increasing appetite for data. The source, variety and quality of training data all influence model performance and biases. Data preprocessing includes cleaning, filtering and tokenisation. Models trained on labelled data (supervised) can learn task‑specific patterns, while unsupervised or self‑supervised models learn general language or image representations. Label scarcity often leads to synthetic pretraining followed by fine‑tuning on smaller labelled datasets.

This preparation process is part of understanding how does GenAI work end‑to‑end.

How does GenAI work under the hood?

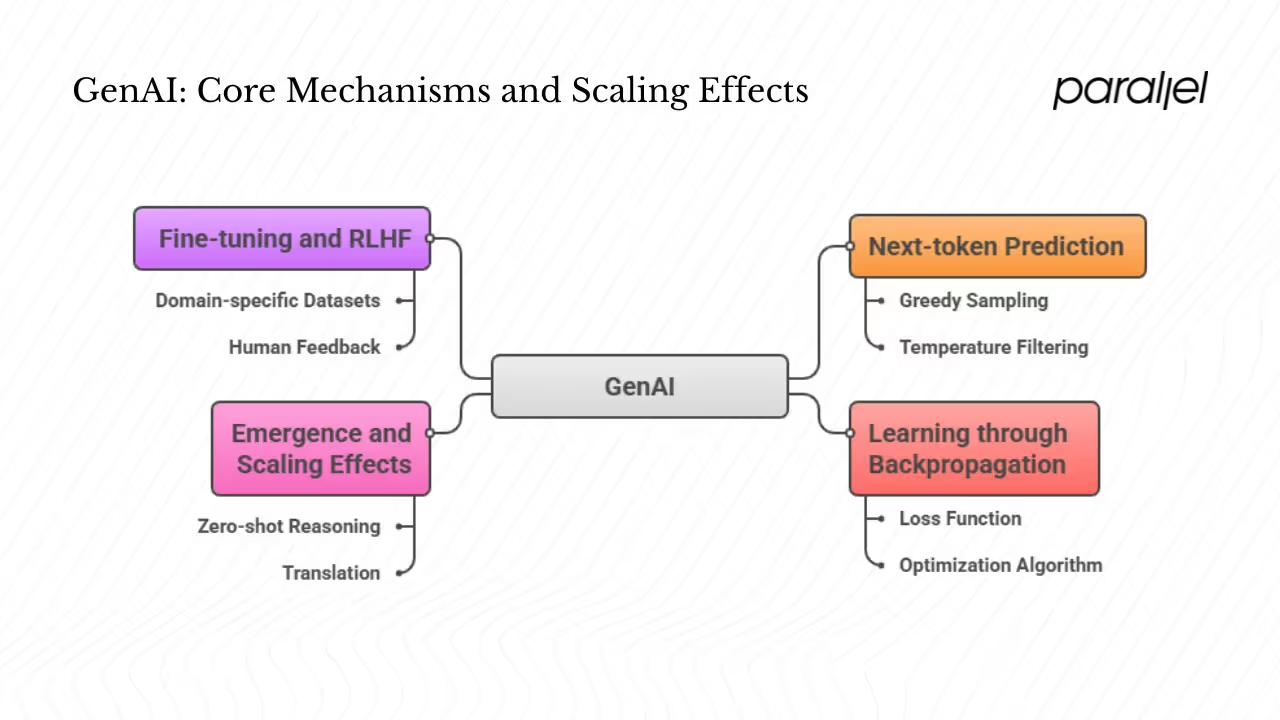

1) Next‑token prediction

At its core, text generation models perform next‑token prediction. Given a context of tokens, the model computes a probability distribution over the vocabulary for the next token. It then samples the most likely token (greedy), chooses a random token according to probability (sampling), or uses temperature or top‑k filtering to balance creativity and coherence. This process repeats until a stopping condition is met. Even though the mechanism appears straightforward, emergent behaviours arise when models are trained at scale.

2) Learning through backpropagation

Neural networks learn via backpropagation: after a forward pass produces a prediction, a loss function measures the error between the prediction and the target. The network computes gradients of this loss with respect to each parameter, and an optimisation algorithm such as stochastic gradient descent or Adam adjusts the parameters to minimise the loss. In large models, this process requires distributed training across many GPUs or specialised hardware. Training is expensive: the inference cost for a system performing at a GPT‑3.5 tier dropped 280‑fold between November 2022 and October 2024, thanks to more efficient small models and hardware improvements, but cost remains a major consideration for start‑ups.

3) Emergence and scaling effects

One of the most fascinating aspects of large generative models is emergent capabilities—skills that were not present in smaller models or explicitly programmed. As models scale in parameters and data, they begin to perform tasks such as zero‑shot reasoning, translation or code generation without direct supervision. The Stanford index notes that artificial‑intelligence systems made major strides in generating high‑quality video and even outperforming humans in limited programming tasks. Such emergence is both an opportunity and a risk; the behaviour is unpredictable and often hard to explain.

4) Fine‑tuning and RLHF

Most start‑ups will use pre‑trained models rather than training from scratch. Fine‑tuning adapts a model to a specific domain or tone by training on a smaller, domain‑specific dataset. Reinforcement Learning from Human Feedback (RLHF) further refines behaviour by rewarding desirable outputs and penalising harmful or irrelevant ones. RLHF is essential for controlling the tone of chatbots and aligning them with organisational values. Teams can also prompt tune or few‑shot prompts a model to change output using carefully crafted examples. These methods are cheaper than full training but still require careful dataset curation and evaluation.

How does GenAI compare with related technologies?

Traditional predictive models

To grasp how does GenAI work relative to traditional approaches, it helps to contrast generative systems with predictive models. Traditional machine learning systems excel at classification and regression. They predict outcomes based on input features—credit scoring, image recognition, product recommendations. Generative models share many techniques but generate new content rather than only predicting. According to MIT experts, machine learning “makes decisions that generalise patterns that we would not have found otherwise” and suits situations with abundant structured data. Generative models build upon these foundations but produce wholly new outputs, which is both powerful and risky.

Simpler NLP systems

Before transformers, many chatbots relied on rule‑based scripts or simple recurrent networks. These systems were limited to defined intents and couldn’t handle open‑ended dialogue. Modern generative models produce fluid responses because they learn from vast amounts of text. However, they may hallucinate facts or stray from context. The difference between scripted bots and GenAI is like the difference between reading a menu and being served an improvised meal; one is predictable, the other can surprise you—for better or worse.

Connection to data analysis

GenAI and predictive models often work together. As MIT’s Rama Ramakrishnan explains, generative models can “squeeze a little bit more” from structured data by inferring connections outside what traditional models detect. At Parallel, we’ve used generative models to summarise user research transcripts, then fed those summaries into clustering algorithms to detect themes. The generative step aids comprehension; the predictive step quantifies patterns.

What are the challenges, limitations and trade-offs of GenAI?

1) Hallucinations and accuracy issues

Generative models sometimes hallucinate—they produce plausible‑sounding but false or irrelevant content. In the 2024 McKinsey survey, inaccuracy was the most recognised risk, and organisations were more likely than the year before to invest in mitigating it. Hallucinations arise because models optimise for plausibility rather than truth; they can’t verify facts. For product teams, this means any GenAI output used in a user‑facing context must be validated. We’ve built guardrails such as post‑processing rules, retrieval‑augmented generation (using trusted documents) and human review to reduce false outputs.

2) Bias, fairness and ethics

Generative models reflect the biases of their training data. If the underlying data over‑represents certain demographics or viewpoints, the output will mirror those biases. IDEO researchers warn that synthetic users lack a variety of experiences and can lead to misguided decisionsideo.com. Additionally, generative models can reproduce harmful stereotypes or produce disallowed content. Product leaders must invest in fairness audits, careful data selection and fair design.

3) Compute cost and infrastructure

Training large models is resource‑intensive. The Stanford report shows that U.S. private investment in artificial intelligence reached US$109.1 billion in 2024, but early‑stage firms rarely have budgets for such computers. Even inference can be costly when dealing with high traffic. Options include using smaller open‑source models, distilling larger models into lightweight versions, or relying on API‑based access from providers. We’ve also seen success with hybrid architectures: simple heuristics filter requests, and only complex queries are sent to a generative model.

4) Interpretability and black‑box problems

Neural networks operate in high‑dimensional spaces; their internal states are not easily interpretable. This opacity makes it hard to diagnose errors or ensure compliance. Tools like attention visualisation and feature attribution help, but there is still a gap between what designers need to know and what they can observe. Without clear mental models, users may trust outputs too much or too little. The IBM design principles emphasise the need to design for appropriate trust and reliance.

5) Data privacy, IP and legal issues

GenAI models often train on public data, raising questions about copyright. When a model outputs material similar to proprietary content, who owns the rights? Regulatory frameworks are still evolving. Product teams must ensure that training data, fine‑tuning datasets and prompts do not expose confidential information. Internal governance, access controls and user education are essential. In some domains (health care, finance), using generative models may trigger regulatory obligations.

What should founders and product/design leads consider?

Questions to ask when using GenAI features

- Do we have the right data? Generative models need quality training or fine‑tuning data. Ask whether the data is representative and free of sensitive information.

- How will we validate outputs? Establish a review process—automated tests plus human oversight—before exposing results to users.

- What are our guardrails? Define unacceptable outputs, set up content filters and use retrieval‑augmented generation to ground responses.

- How will users interact with it? Input design (prompts, controls) and output presentation (confidence indicators, options to refine) matter for user trust.

- What happens when it fails? Create fallback options—offer a manual flow or redirect to a human agent when the model can’t answer.

Trade‑offs

- Speed vs quality vs cost: Smaller models respond quickly but may be less accurate. Larger models provide higher quality but consume more compute and have higher latency. Domain‑specific fine‑tuning improves relevance but increases maintenance overhead.

- Off‑the‑shelf vs custom models: Off‑the‑shelf LLMs offer quick time‑to‑market but limit customisation. Building an in‑house model offers control over data and tone but demands expertise and resources. We often start with hosted models and move to fine‑tuned open‑source models once we understand the problem.

- General vs domain‑specific: General models handle wide topics but may hallucinate in specialised domains. Domain‑specific models perform better on narrow tasks but can’t answer outside that scope. A hybrid approach uses a general model for broad queries and a fine‑tuned model for specialised questions.

UX implications

The People + artificial intelligence Guidebook and IBM’s research emphasise designing for mental models, co‑creation and imperfection. Practical tips:

- Prompt design: Provide context and examples in prompts. Offer suggestions or templates to help users craft effective queries.

- User feedback: Let users rate responses, flag issues or request a revision. This feedback can inform future fine‑tuning.

- Confidence and transparency: Display uncertainty (e.g., “I’m 70% sure”) and allow users to see sources when possible.

- Control and exploration: Give users the ability to refine outputs, choose between multiple suggestions and co‑edit generated content.

Operational considerations

- Monitoring and safety: Track model performance, latency, and incident reports. Set up automated alerts for unusual behaviour or drift.

- Compliance: Stay aware of emerging regulations (EU act on artificial intelligence, data protection laws). Document model development and testing processes.

- Team training: Educate everyone on how generative models work and their limitations. Encourage cross‑functional collaboration between engineering, design, research and legal teams.

What do case studies and real-world examples show?

IDEO’s cautionary tale on synthetic users

IDEO experimented with ChatGPT and other generative tools to create synthetic users for a rural health project. After three weeks, they realised these synthetic personas lacked subtlety; a single real interview uncovered rich, unexpected insights that the synthetic data missed. The authors outline limitations such as missing tangents, lack of emotion and shallow context. The lesson: generative tools can speed up tasks like summarising transcripts, but they can’t replace conversations with real users.

Adoption statistics from the Stanford index

According to the 2025 Stanford index, 78% of organisations report using artificial intelligence in at least one function, up from 55% the previous year. Generative usage jumped from 65% to 71% in 2024, and private investment in generative models reached US$33.9 billion. For start‑ups, this means that generative capabilities are quickly becoming table stakes. Those who adopt early can differentiate; those who wait risk falling behind.

Adoption across industries

The Netguru overview notes that generative adoption grew from 33% in 2023 to 71% in 2024. The same article points out that organisations are scaling across functions and that most use generative artificial intelligence in at least one business area. Meanwhile, the MIT Sloan survey found that 64% of senior data leaders believe generative models could be the most transformative technology of our generation.

Lessons from our projects

Within Parallel, we’ve integrated generative models into user research analysis, early content drafting and design asset generation. In one project, we used LLMs to summarise dozens of customer interviews and cluster themes. The process cut synthesis time by about half and freed up researchers to think critically about next steps. However, we still validate insights through real user sessions and cross‑check generative summaries against raw data. Another project attempted to generate onboarding flows for a B2B SaaS product. The first drafts looked polished, but they lacked context; we had to revise them to reflect the problem space. The message: use generative tools to augment, not replace, your human expertise.

What are the future trends and directions in GenAI?

1) Research frontiers

The Stanford report shows rapidly improving benchmarks in reasoning and video generation. Expect continued work on multimodal models, enabling systems that can understand and generate across text, image, audio and video. New architectures, such as Mixture‑of‑Experts and sparsely activated transformers, aim to reduce compute cost without sacrificing performance.

2) Democratization and smaller models

Inference costs are falling: the cost of running GPT‑3.5 tier models dropped over 280× from late 2022 to late 2024. Companies are releasing smaller open‑source models that run on laptops or edge devices. This trend will allow start‑ups to deploy generative features locally, preserving privacy and reducing reliance on external vendors. Domain‑specific models will proliferate, enabling vertical applications in health care, law or engineering.

3) Regulation and ethics

Governments around the world are increasing their oversight; the U.S. introduced 59 rules related to artificial intelligence in 2024, and global cooperation is intensifying. As regulation evolves, product teams must be proactive: implement transparency, risk assessments and fairness audits now rather than waiting for enforcement.

4) Toward explainability and user control

The IBM design principles stress co‑creation, generative variability and imperfection. Future research will likely focus on making model decisions more interpretable, giving users more control over outputs, and developing standards for responsible deployment. Human–model collaboration will shift from one‑off prompts to continuous, interactive workflows where models and people co‑author solutions.

Conclusion

Understanding how does GenAI work is now part of product literacy. Generative models rely on deep neural architectures, massive training data and probabilistic token prediction. They open up new possibilities for content creation and ideation, but they also introduce risks: hallucination, bias, cost and opacity. For founders, product managers and design leaders, GenAI is a tool—not a silver bullet. Use it to accelerate early drafts, summarise research and inspire concepts. Combine it with predictive models and human judgment. Set up guardrails, validate outputs and design interfaces that respect users’ mental models. And, above all, keep talking to real people. That’s how we turn innovation into real impact.

FAQ

1) How does GenAI work technically?

Generative models are neural networks trained on large datasets to predict the next token or generate new data. They use architectures such as transformers, GANs or diffusion models, learning patterns through backpropagation and optimisation. During inference, the model takes an input prompt, calculates a probability distribution over possible outputs and samples from that distribution to produce new content. Fine‑tuning and reinforcement learning can adapt the model to specific domains or tone.

2) How does generative artificial intelligence actually work?

In practical terms, a generative model encodes input into high‑dimensional vectors, processes them through layers of attention or convolution, and decodes them into output. For text, this means turning words into tokens, mapping them to embeddings, computing self‑attention to weigh context and sampling the next token. For images, diffusion models start from random noise and iteratively denoise it to produce an image. The same underlying principles apply: learn patterns from data and then generate new data based on those patterns.

3) How is GenAI different from ChatGPT?

GenAI is the broader category encompassing any model that can generate new content. ChatGPT is a specific product built on a large language model. It combines a base transformer model with reinforcement learning from human feedback and safety filters. Other Genartificial‑intelligence systems include image generators, music models and code assistants. While ChatGPT is a well‑known interface, GenAI also refers to the technology powering many different applications.

4) How does GenAI work for beginners?

Imagine you have read thousands of books and can guess what word comes next in a sentence. A generative model does something similar: it reads huge amounts of text or images during training and learns statistical patterns. When you ask it a question, it looks at your prompt, uses its learned patterns to guess what a good response might look like and writes an answer one word at a time. The magic comes from the scale of data and the architecture that allows the model to consider many possibilities quickly.

5) What should product managers do when incorporating GenAI?

Start small. Identify a clear problem where generative models can save time or inspire creativity—drafting help‑desk responses, synthesising customer feedback or generating test data. Ensure you have quality input data, set up validation workflows, and include designers and researchers in prompt and interface design. Keep an eye on cost and compute constraints. Always plan for fallback flows and human oversight when the model gets it wrong.

.avif)