How to Do Usability Testing: Complete Guide (2026)

Discover how to perform usability testing, from planning and recruiting participants to analyzing results and improving design.

Imagine two founders launching an app they are sure is easy to use. They watched their designer click through every screen, and every control seemed obvious. When they invited real people to try it, the first task took twice as long as expected, error messages appeared in places no one anticipated, and frustration grew. Their story illustrates a common pattern for young products: assumptions about ease of use seldom hold up when people outside the team get involved. Knowing how to do usability testing early can prevent costly rework and lost trust.

Usability testing matters because small teams have limited resources. A modest investment in observing users pays back many times over: Nielsen Norman Group notes that spending about 10% of a design budget on usability can more than double quality metrics. A widely cited industry figure, often attributed to Forrester, goes further and claims that every dollar invested in user experience returns roughly $100, a 9,900% return; treat that number as a headline estimate rather than a guarantee. Usability testing is not about asking people whether they like your idea. It is about watching them try to complete realistic tasks and learning where they struggle. This guide explains the process, from planning and recruiting to running sessions, measuring outcomes, analysing findings and iterating.

What is usability & why it matters

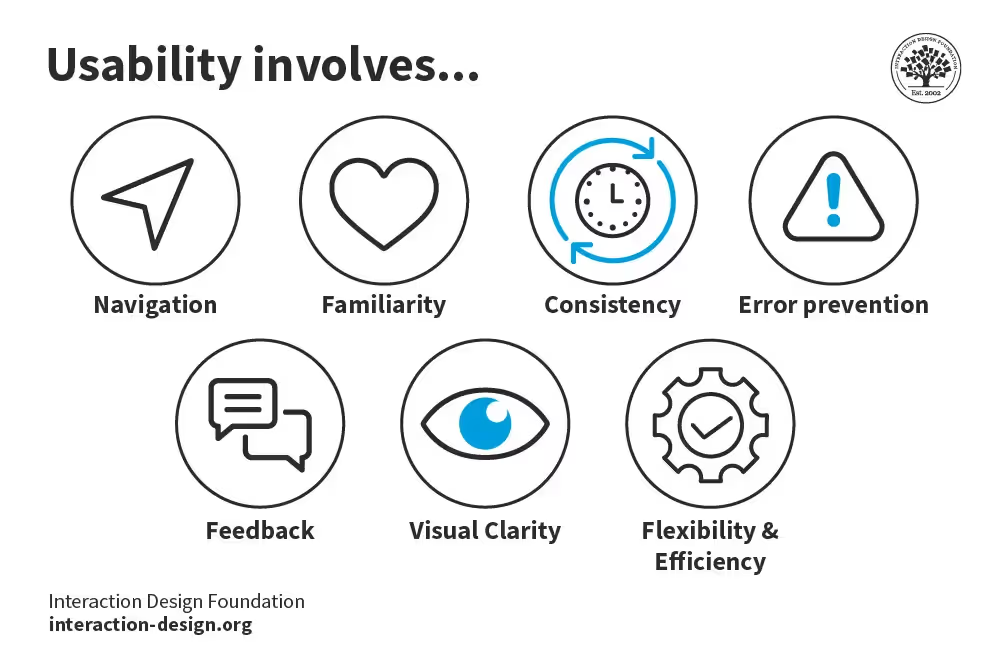

Usability assesses how effectively and pleasantly someone can use a product. Jakob Nielsen defines it by five quality components: learnability (can newcomers complete basic tasks?), efficiency (how quickly do people work once they’ve learned the interface?), memorability (can returning users regain proficiency?), errors (how many mistakes happen and how easily can users recover?) and satisfaction (is the experience enjoyable?). These components highlight that usability goes beyond aesthetics; it's about reducing cognitive load and enabling people to accomplish their goals without friction. Utility—the feature set—and usability must both be present for a product to be useful. Poor usability leads to abandonment: on the web, visitors simply leave when they get lost.

Usability testing fits within the wider practice of user research and product development. It complements market research, analytics and customer feedback by giving a direct view of how people interact with the interface. Unlike focus groups, it requires observing individual participants attempting tasks while thinking aloud. It doesn't replace discovery work that uncovers deeper motivations, but it surfaces concrete issues that hinder task completion. It also has limitations: it cannot tell you if your feature solves a strategic problem, and it won't reveal underlying emotional drivers. However, it is indispensable for catching friction points in flows you have already defined.

When & why you should do it

Usability testing is valuable at multiple stages:

- Prototype: early sketches, paper mock‑ups or clickable prototypes reveal misunderstandings before code is written. Low‑fidelity tests are quick and flexible, allowing you to change layouts between sessions.

- Minimum viable product (MVP): once a basic working version exists, testing reveals whether the core value proposition can be accessed without confusion. At this stage, improvements can still be made without upsetting a large user base.

- Live product: for released software, tests identify pain points that analytics may flag but not explain. They also help prioritise improvements that drive retention.

- New features: each new capability should be tested to avoid introducing friction.

Nielsen's research suggests that testing with around five participants per segment often uncovers most usability problems, which is why several small, iterative studies are more efficient than one large study. Testing early also saves development costs, since problems found in a prototype are far cheaper to fix than problems found after launch.
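The five-participant guideline rests on a simple problem-discovery model described by Nielsen and Landauer: if each participant has probability p of running into a given problem, n participants are expected to find a share of 1 - (1 - p)^n of the problems. The sketch below assumes Nielsen's often-quoted average of p = 0.31; your own product's value may be lower, especially for problems that only affect some users, and the function name is illustrative.

```python
def share_of_problems_found(n_participants: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems found by n participants.

    Uses the problem-discovery model 1 - (1 - p)^n and assumes every problem
    has the same detection probability p (0.31 is Nielsen's published average,
    not a universal constant).
    """
    if not 0.0 <= p_detect <= 1.0:
        raise ValueError("p_detect must be between 0 and 1")
    return 1.0 - (1.0 - p_detect) ** n_participants


if __name__ == "__main__":
    # Print the diminishing returns of adding more participants.
    for n in (1, 3, 5, 8, 15):
        print(f"{n:>2} participants -> {share_of_problems_found(n):.0%} of problems")
```

Under these assumptions five participants find about 84% of problems, and each additional participant adds less than the one before, which is why a second small round after fixing the first batch of issues is usually a better use of sessions than one large round.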

There are different modes of testing:

- Moderated vs unmoderated: moderated sessions involve a facilitator guiding the participant, while unmoderated tests let participants complete tasks at their own pace using software. NN/g describes unmoderated tests as remote, asynchronous studies where tasks are set up in a tool and users’ screens and voices are recorded.

- Remote vs in‑person: remote sessions are conducted via screen sharing, enabling geographic diversity and lower logistics, while in‑person testing allows richer observation of body language and easier troubleshooting.

Selecting a mode depends on stage, budget and goals. Early concept tests often use moderated sessions to gain richer feedback. Later, unmoderated studies scale to larger samples for quantitative measures.

Test planning & preparation

Knowing how to do usability testing starts with clear objectives and thoughtful planning. Rushing straight into sessions without goals risks collecting unfocused data.

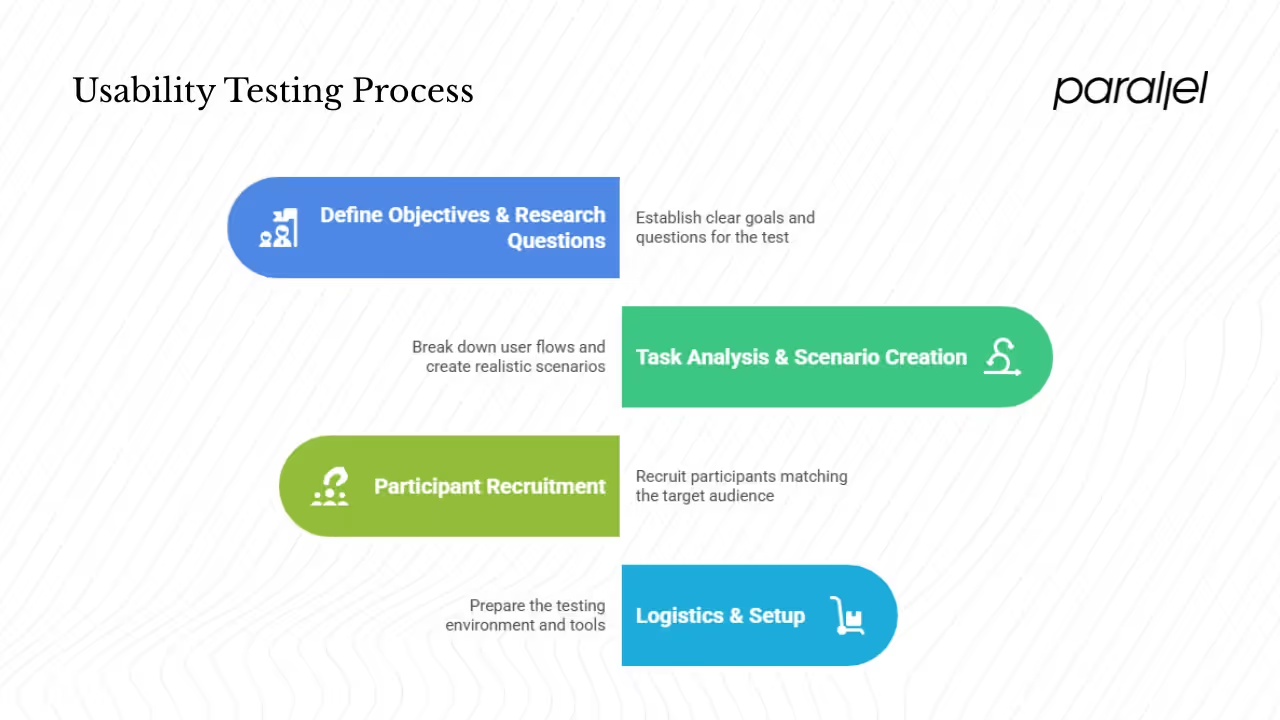

1) Define objectives & research questions

Begin by tying the test to business and product goals. For example, a SaaS team might want to reduce time‑to‑value, so a test objective could be: “Can new users complete the onboarding in under three minutes?” Other common goals include checking whether a feature is discoverable, identifying steps where users drop off, or validating assumptions about workflow. From goals, formulate questions such as:

- Do participants find the coupon code field during checkout?

- How many missteps occur during the payment flow?

- Where does confusion arise when configuring settings?

Write hypotheses you hope to validate or disprove. A hypothesis might be: “Users will notice the onboarding wizard and complete it without assistance.” Testing will show whether this holds true.

2) Task analysis & creating test scenarios

Task analysis involves breaking down user flows into discrete actions. Map out the critical paths through your product: sign‑up, onboarding, purchasing, settings adjustments. Identify which tasks are high‑risk (likely to cause abandonment) or high‑value (directly tied to revenue or activation). Select a handful of tasks—usually 3–5—to focus on in a single session.

Create scenarios that reflect real‑world contexts: “You need to reorder your monthly coffee subscription but change the delivery date to next week.” Provide enough background to make the task meaningful without giving away the solution. Instructions should be neutral, so avoid words that appear on buttons. For example, instead of saying “Click ‘Buy now’,” say “Please purchase a product priced around ₹500.” Always pilot your tasks with colleagues to ensure clarity and completeness.

3) Participant recruitment

Define your target users based on personas or segments. Early‑stage founders often recruit existing customers or friends, but it’s vital to find people who match the intended audience. Options include:

- Existing users: reaching out via mailing lists or in‑app messages.

- User panels: services that recruit participants matching demographic criteria.

- Social channels or communities: targeted posts in relevant forums.

- Agencies: for specialised populations or when your own network is small.

Use screeners to filter participants based on relevant traits (e.g., experience level, role). For qualitative testing, NN/g suggests that five to eight participants per segment provide sufficient coverage. If testing distinct user groups (e.g., novices vs experts), test each separately. Offer incentives such as gift cards or product credits, and schedule sessions at convenient times. Provide clear instructions and consent forms ahead of time.
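A screener is essentially a filter over applicants' answers. The sketch below uses hypothetical screener fields (`role`, `years_experience`, `is_employee`) and an assumed novice/expert split at one year of experience; it keeps qualifying applicants and caps each segment at five, in line with the guidance above. Recruitment panels usually apply screeners for you, so treat this as an illustration of the logic rather than a tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Applicant:
    # Hypothetical screener answers; replace with your own questions.
    name: str
    role: str
    years_experience: float
    is_employee: bool  # staff (and close friends of the team) are excluded


def segment_of(applicant: Applicant) -> str:
    # Assumed split: under one year of experience counts as a novice.
    return "novice" if applicant.years_experience < 1 else "expert"


def screen(applicants, target_roles, per_segment=5):
    """Return qualifying applicants grouped by segment, capped per segment."""
    selected = defaultdict(list)
    for applicant in applicants:
        if applicant.is_employee or applicant.role not in target_roles:
            continue  # fails the screener
        group = selected[segment_of(applicant)]
        if len(group) < per_segment:
            group.append(applicant)
    return dict(selected)
```

Keeping segments in separate groups makes it easy to run and analyse each segment as its own small study, as recommended for distinct user groups.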

4) Logistics & setup

Decide on moderated or unmoderated testing. Moderated remote sessions require reliable video conferencing and screen sharing. Unmoderated tests rely on specialised tools like Maze, UXTweak or Hotjar; NN/g notes that these tools record screens and voice, allow timestamped notes, and often provide participant panels. They may lack advanced mobile app support, so check capabilities.

Prepare your environment: ensure prototypes are stable, load any test data, and remove obvious bugs. Draft an introduction script that explains the purpose (improving the product) without biasing behaviour. Remind participants there are no right or wrong answers. Provide a think‑aloud prompt: ask them to verbalise thoughts and expectations. Have recording tools ready—video, audio and note‑taking software.

Running the test

Moderation & facilitator role

The moderator is a guide, not a teacher. Begin by welcoming the participant, reviewing consent, and explaining that the session is about the product, not their performance. Encourage them to think aloud—share what they expect to happen and why they choose certain actions. During tasks, observe quietly; avoid leading or correcting. If a participant gets stuck for an extended period, you can prompt with “What are you thinking?” or remind them they can skip, but resist the urge to intervene prematurely. After each task, ask short follow‑up questions like “What did you expect to happen?” or “How easy or hard was that?”

Moderators should avoid influencing outcomes. NN/g emphasises that you must let users solve problems on their own. Common mistakes include over‑explaining, reacting emotionally to errors or defending design decisions. Use warm‑up questions to put participants at ease, then move into tasks. Be mindful of silent pauses; they often indicate cognitive processing. Keep sessions to 45–60 minutes to avoid fatigue.

Observation & data capture

During sessions, record both what participants say and what they do. Key observations include:

- Behavioural indicators: hesitation, misclicks, retries, backtracking and points of confusion.

- Performance metrics: whether tasks are completed, time taken, number of clicks or steps, error counts.

- Subjective feedback: verbal comments, frustration signals, satisfaction ratings.

Use tools for screen and voice recording; take timestamped notes so you can cross‑reference later. Eye‑tracking and cursor heatmaps can reveal where attention goes, but they are optional. After each task, provide a short questionnaire or Likert scale rating (e.g., Single Ease Question) asking participants to rate ease and confidence. For overall evaluation, the System Usability Scale is a widely used 10‑item survey that turns user perceptions into a 0–100 score; a score of 68 is the industry average.
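SUS scoring follows a fixed rule: each of the ten answers is on a 1–5 scale, odd-numbered (positively worded) items contribute the answer minus 1, even-numbered (negatively worded) items contribute 5 minus the answer, and the 0–40 total is multiplied by 2.5. A minimal sketch, where `sus_score` is an illustrative name:

```python
def sus_score(responses):
    """Score one participant's System Usability Scale questionnaire.

    `responses` holds the ten answers in questionnaire order, each on the
    standard 1-5 scale (1 = strongly disagree, 5 = strongly agree).
    """
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten answers between 1 and 5")
    total = 0
    for item_number, answer in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += answer - 1  # odd items are positively worded
        else:
            total += 5 - answer  # even items are negatively worded
    return total * 2.5  # rescale the 0-40 sum to 0-100
```

For example, `sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2])` returns `75.0`. Average the per-participant scores to get a study-level SUS and compare it with the benchmark of 68.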

Handling unmoderated tests

In unmoderated testing, you are not present to clarify instructions, so tasks must be self‑explanatory. Write clear, concise prompts and avoid ambiguous language. Embed feedback forms or micro‑surveys after each task. Monitor completion rates and drop‑off; high abandonment may indicate confusing instructions. Choose tools that allow you to filter out invalid sessions and contact participants if needed. If participants misinterpret tasks, adjust your wording and test again.
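Filtering invalid sessions and spotting confusing prompts can be sketched as below. The session format, the outcome labels and the 120-second minimum are all hypothetical assumptions; unmoderated tools export results in their own formats, so check your tool's actual export before adapting this.

```python
from dataclasses import dataclass, field

MIN_DURATION_SECONDS = 120  # hypothetical threshold; tune to your study length


@dataclass
class Session:
    participant_id: str
    duration_seconds: float
    # One entry per task the participant reached: "completed", "failed" or "abandoned".
    outcomes: list = field(default_factory=list)


def valid_sessions(sessions):
    """Drop sessions that were too fast to be genuine or never started a task."""
    return [s for s in sessions
            if s.duration_seconds >= MIN_DURATION_SECONDS and s.outcomes]


def abandonment_by_task(sessions, n_tasks):
    """For each task, the share of participants who reached it and abandoned it there."""
    rates = []
    for i in range(n_tasks):
        reached = [s for s in sessions if len(s.outcomes) > i]
        abandoned = sum(1 for s in reached if s.outcomes[i] == "abandoned")
        rates.append(abandoned / len(reached) if reached else 0.0)
    return rates
```

A task whose abandonment rate stands out from the others is a good candidate for rewording before you conclude that the design itself is at fault.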

Usability metrics & quantitative measures

Combining qualitative insights with quantitative metrics yields a more complete picture. Common metrics include:

- Success rate / task completion: the percentage of participants who finish a task correctly. It reflects effectiveness.

- Time on task: how long participants take to complete a task. Shorter times often indicate efficiency but must be interpreted alongside accuracy.

- Error rate: count of incorrect actions or failed attempts. High error rates suggest unclear labels or flows.

- Number of steps or clicks: how many actions participants take compared to the optimal path.

- Path deviation: how far participants stray from the intended sequence. It can highlight navigation issues.

- Satisfaction / ease rating: participants’ subjective ratings via Likert scales or Single Ease Question.

- System Usability Scale (SUS): a 10‑question survey that produces a score from 0 to 100; the average across industries is about 68.

- Net Promoter Score (NPS): although more common in general customer experience, NPS can be used after usability tests to measure how likely participants are to recommend the product. Respondents answer on a 0–10 scale and are grouped into detractors (0–6), passives (7–8) and promoters (9–10); the score is the percentage of promoters minus the percentage of detractors, so it ranges from –100 to 100.
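Most of these metrics are simple ratios. The sketch below computes completion rate, mean time on task (counting successful attempts only) and NPS from per-participant data; the function names and input shapes are illustrative assumptions.

```python
from statistics import mean


def completion_rate(successes):
    """Share of participants who completed the task (list of True/False)."""
    return sum(successes) / len(successes)


def mean_time_on_task(times_seconds, successes):
    """Mean time in seconds over successful attempts only; None if nobody succeeded."""
    successful = [t for t, ok in zip(times_seconds, successes) if ok]
    return mean(successful) if successful else None


def net_promoter_score(ratings):
    """NPS from 0-10 'how likely are you to recommend' ratings."""
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)
```

Task times are usually right-skewed, so some teams report the median or the geometric mean (`statistics.geometric_mean`) instead of the arithmetic mean used here.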

Use benchmarks to compare versions or competitor products. For example, if the first iteration has a 60% task completion rate and the next reaches 80%, you can quantify improvement. But remember that metrics alone don’t explain why issues occur; they should accompany qualitative observation. Also, be cautious when interpreting small samples: with six participants, a single person moves the completion rate by about 17 percentage points, so report percentages alongside the raw counts.
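One way to make small-sample uncertainty visible is to report a confidence interval around each completion rate. A common choice for small usability samples is the adjusted Wald (Agresti–Coull) interval, sketched here for an approximate 95% level (z = 1.96); the function name is illustrative.

```python
import math


def adjusted_wald_interval(successes, n, z=1.96):
    """Approximate confidence interval for a completion rate (adjusted Wald)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    # Add z^2/2 successes and z^2/2 failures before computing a standard Wald interval.
    n_adj = n + z ** 2
    p_adj = (successes + z ** 2 / 2) / n_adj
    margin = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    # Clip to the valid range of a proportion.
    return max(0.0, p_adj - margin), min(1.0, p_adj + margin)
```

For 3 successes out of 6 participants the interval runs from roughly 19% to 81%, and even 6 out of 6 only supports a completion rate above roughly 56%, which is a useful reminder when comparing two small rounds.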

Analysing data & reporting findings

1) Organising & synthesising data

After the sessions, transcribe recordings and compile notes. Categorise issues by task and severity. Use coding schemes—such as labeling according to usability heuristics or flow steps—to spot patterns. Group similar observations into themes using affinity mapping or digital whiteboards. Look for recurrent pain points, as well as isolated but severe problems. Document positive findings too: features that worked well are important to preserve.

2) Prioritising issues

Not all issues carry equal weight. Prioritise based on severity (does it block task completion?), frequency (how many participants encountered it?), and impact on business outcomes. Evaluate effort required to fix: some issues are quick copy adjustments; others need deeper structural changes. Aim for a balanced roadmap with quick wins and strategic improvements.
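One lightweight way to apply these criteria is a score that multiplies severity, frequency and business impact, then breaks ties by effort. The scales and the multiplication below are hypothetical choices, not a standard formula; the aim is to make the trade-offs explicit so the team debates the inputs rather than the ranking.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    severity: int         # assumed scale: 1 = cosmetic ... 4 = blocks task completion
    affected: int         # participants who hit the issue
    business_impact: int  # assumed scale: 1 = low, 2 = medium, 3 = high (team judgement)
    effort_days: float    # rough estimate to fix


def priority(issue: Issue, participants: int) -> float:
    """Hypothetical score: severity x share of participants affected x business impact."""
    frequency = issue.affected / participants
    return issue.severity * frequency * issue.business_impact


def rank(issues, participants):
    # Highest score first; among equal scores, cheaper fixes (quick wins) come first.
    return sorted(issues, key=lambda i: (-priority(i, participants), i.effort_days))
```

Sorting ties by effort surfaces quick wins naturally, while high-scoring but expensive issues stay near the top as candidates for the strategic part of the roadmap.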

3) Heuristic evaluation (as a complement)

Heuristic evaluation is an expert review method in which usability specialists examine the interface against established principles. Nielsen's ten heuristics are:

- Visibility of system status: the interface should keep users informed about what is happening.

- Match between the system and the real world: use language and conventions familiar to users.

- User control and freedom: provide clear exits and undo mechanisms.

- Consistency and standards: use the same patterns throughout.

- Error prevention: anticipate mistakes and design to prevent them.

- Recognition rather than recall: minimise memory load by keeping options and information visible.

- Flexibility and efficiency of use: offer shortcuts for experienced users.

- Aesthetic and minimalist design: remove unnecessary elements.

- Help users recognise, diagnose and recover from errors: use plain-language error messages that suggest a solution.

- Help and documentation: provide concise, task-focused help when it is needed.

Conducting a heuristic evaluation alongside usability testing helps cross‑validate issues and uncover problems that might not appear during limited sessions.
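When several evaluators review the interface independently (Nielsen recommends three to five), their findings need to be merged. The sketch below assumes each finding is recorded as an (evaluator, heuristic, issue, severity) tuple on Nielsen's 0–4 severity scale, and that duplicate issues have already been given identical wording; in practice that de-duplication is usually done by hand.

```python
from collections import defaultdict
from statistics import mean


def merge_findings(findings):
    """Combine (evaluator, heuristic, issue, severity) tuples from several reviewers.

    Severity uses Nielsen's 0-4 scale (0 = not a usability problem,
    4 = usability catastrophe). Issues are matched on identical wording.
    """
    grouped = defaultdict(list)
    for evaluator, heuristic, issue, severity in findings:
        if not 0 <= severity <= 4:
            raise ValueError(f"severity out of range: {severity}")
        grouped[(heuristic, issue)].append((evaluator, severity))

    summary = []
    for (heuristic, issue), ratings in grouped.items():
        summary.append({
            "heuristic": heuristic,
            "issue": issue,
            "evaluators": len({evaluator for evaluator, _ in ratings}),
            "mean_severity": mean(severity for _, severity in ratings),
        })
    # Most severe issues first.
    return sorted(summary, key=lambda row: row["mean_severity"], reverse=True)
```

Issues flagged by several evaluators and also observed in usability sessions are the strongest candidates for the top of the fix list.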

4) Reporting & presenting to stakeholders

Prepare a concise report or presentation that starts with an executive overview summarising objectives, methods and top findings. Include a prioritised list of issues with recommendations. Use quotes, video clips or screenshots to illustrate problems—seeing real users struggle is persuasive. Present quantitative metrics (success rates, time on task, SUS scores) in charts. Close with a proposed action plan: which fixes to implement now, which require further exploration, and whether additional research is needed. Append detailed transcripts and raw data for reference.

Iteration & follow‑up testing

Usability testing is most effective when integrated into an iterative design process. After addressing identified issues, run another round of testing to verify improvements and catch new problems. The Rapid Iterative Testing and Evaluation (RITE) method formalises this: after each participant, the team decides whether to adjust the design immediately. MeasuringU explains that in RITE, if a single participant exposes a clear problem and a fix is obvious, testing pauses so the team can implement the change before resuming. This approach accelerates learning but requires a team capable of rapid updates.

When scoping iterative cycles, focus on the most critical flows rather than testing everything. Start with low‑fidelity prototypes, refine through several rounds and then test high‑fidelity versions. After releasing improvements, conduct regression tests to ensure fixes didn’t introduce new issues. Over time, run longitudinal usability studies to see how usability evolves as features accumulate.

Special topics & advanced considerations

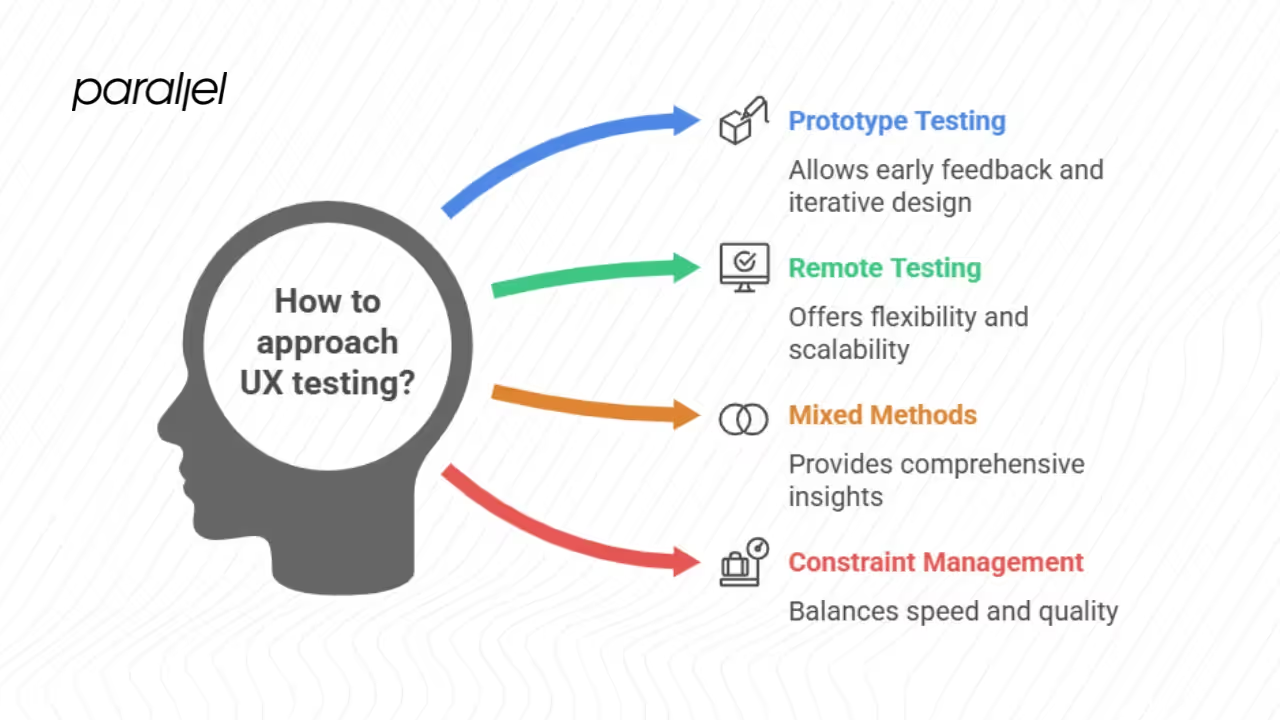

1) Prototype testing

Testing prototypes allows teams to gather feedback before investing in full development. Low‑fidelity prototypes (paper sketches, wireframes) are quick to build and change. Mid‑fidelity prototypes add basic interaction, while high‑fidelity versions simulate realistic visuals and flows. When prototypes lack backend logic, use “Wizard of Oz” techniques where the moderator manually triggers responses. Ensure participants know the prototype may not function fully. Avoid placeholders that confuse participants; label unavailable features clearly.

2) Remote & unmoderated testing tools

Remote tools have matured rapidly. According to NN/g, unmoderated testing platforms typically record participants’ screens and voices, provide timestamped notes, transcripts and highlight reels. Many tools also offer their own participant panels, while others (e.g., Lookback) rely on integrations with recruitment platforms. When choosing a tool, consider cost, integrations, analysis features and mobile support. Tools like Maze or UXTweak are popular with small teams because they are easy to set up and include built-in analytics. Always test your prototype within the chosen tool to ensure it behaves as expected.

3) Combining methods: mixed approaches

Usability testing doesn’t exist in isolation. Combining qualitative observations with surveys and analytics offers a richer picture. For example, follow up a usability test with a satisfaction survey or NPS to gauge overall sentiment. Pair think‑aloud sessions with eye tracking to see where participants look. After confirming usability basics, use A/B testing to fine‑tune UI variants at scale. Mixed methods help validate findings and reduce bias.

4) Handling constraints & trade‑offs

Startups often face tight budgets and time constraints. Prioritise tasks that have the greatest impact on activation or revenue. When you can’t recruit ideal participants, guerrilla testing—approaching people in cafés or coworking spaces—can uncover obvious issues quickly. Balance speed against data quality; quick tests may reveal major problems but miss nuanced patterns. Accept that you won’t catch everything; the goal is continuous improvement, not perfection.

Example workflow / case study

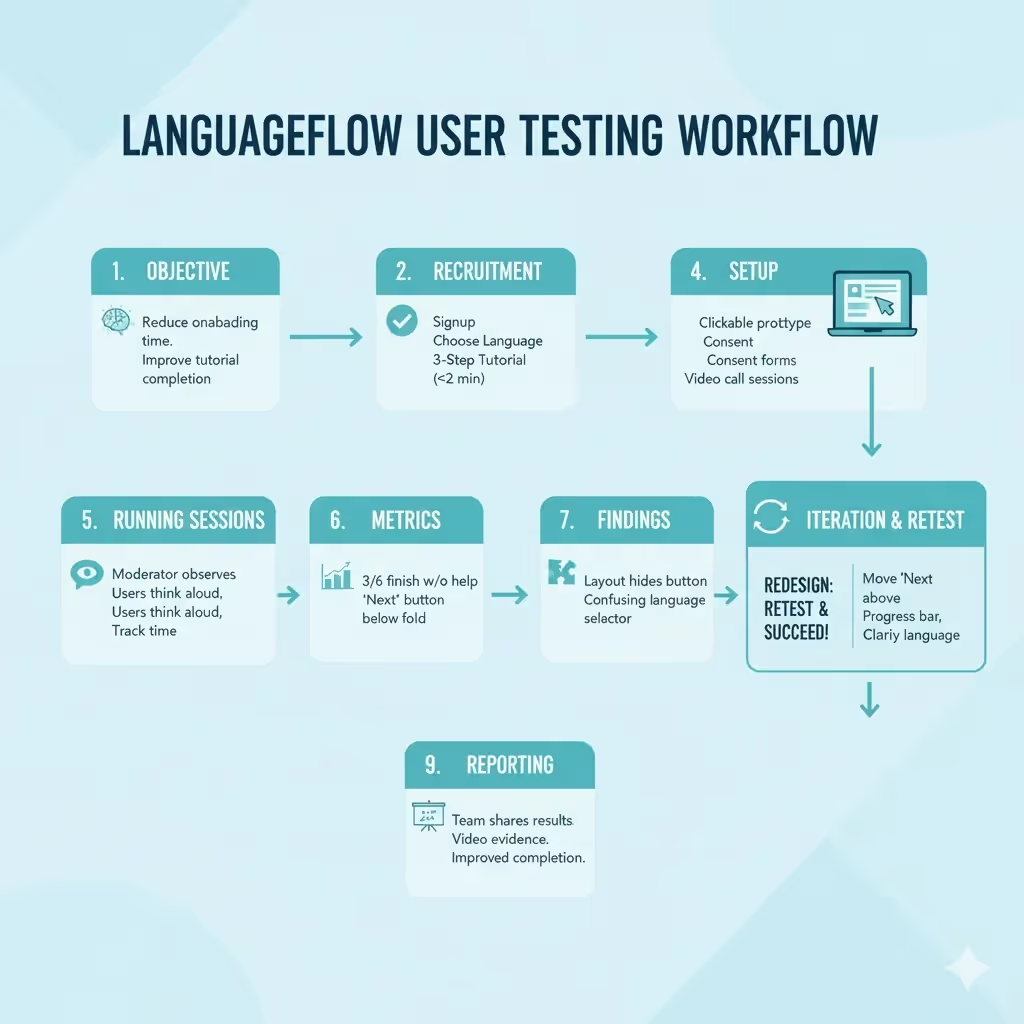

To illustrate how to do usability testing, consider a fictional SaaS startup offering a subscription for learning languages. They notice many users sign up but few complete the onboarding tutorial. Here’s how they approached the issue:

- Objective: Reduce time to complete onboarding and increase tutorial completion rate.

- Tasks: Ask participants to sign up with email, choose a language, and finish the three‑step tutorial. Hypothesis: the tutorial is clear and takes under two minutes.

- Recruitment: They recruit six new users through a panel, matching the demographics of their target audience.

- Setup: A clickable prototype is prepared. Moderators send consent forms and instructions. Sessions take place via video call.

- Running sessions: Moderators encourage think‑aloud, observe where participants pause, and record time to completion.

- Metrics: Only three out of six users complete the tutorial without help; average completion time is four minutes. Two participants miss the “Next” button because it is below the fold.

- Findings: The team identifies that the page layout pushes the primary action off screen on smaller devices. They also observe that the language selector is preselected, causing confusion about whether a choice is required.

- Iteration: Designers move the “Next” button above the fold, add a progress indicator and clarify the language selection. They fix these issues within a day and re‑test with three more participants, in the spirit of the RITE method. All three complete the tutorial in under two minutes. The team then rolls out the change to production.

- Reporting: They prepare a report summarising the problem, evidence (video clips), the fix and the improved metrics. Stakeholders see clear value and allocate time for further testing.

This example shows the power of small, focused tests and quick iterations. Even minor interface adjustments can dramatically improve completion rates.

Conclusion

Usability testing is a critical tool for founders, product managers and design leaders seeking to build products that people love. It delivers insight into real behaviour, surfaces hidden friction points and prevents expensive redesigns later.

By understanding how to do usability testing—defining objectives, crafting tasks, recruiting appropriate participants, moderating sessions thoughtfully, capturing meaningful metrics, analysing data, applying heuristics and iterating—you set your team up for continuous improvement. Start small, test often and use evidence to inform decisions.

In the end, the goal is not to prove your design perfect but to learn where it falls short and make it better. Invite your colleagues to observe sessions, share raw user feedback and advocate for changes. Finally, schedule your own test session soon; watching someone else use your product will change how you think about it.

FAQ

1) What are the five components included in usability testing?

One lens comes from Nielsen Norman Group’s definition of usability: learnability, efficiency, memorability, errors and satisfaction. Another way to think about a test includes five elements: participants, tasks and scenarios, environment/setup, observation and metrics, and analysis/reporting.

2) What technique do usability tests use?

The core technique involves real users performing realistic tasks while thinking aloud; researchers observe behaviour, record qualitative and quantitative data (success rates, time on task, error counts) and collect feedback. Variations include moderated or unmoderated sessions, remote or in‑person tests and prototype testing, and teams often complement them with expert reviews such as heuristic evaluation.

3) What is an example of a usability test?

Imagine you’ve built an e‑commerce checkout flow. You recruit six participants, ask them to purchase a ₹500 product, apply a coupon and complete payment. You measure how many finish successfully, how long they take, where they struggle and ask them to rate ease. When several users cannot find the coupon field, you adjust the interface and test again. This is a typical example of how to do usability testing.

4) How do you create a usability test?

First define what you need to learn. Choose key tasks and write scenarios. Recruit participants who match your target audience. Decide whether to conduct moderated or unmoderated sessions and select tools. Prepare scripts, consent and think‑aloud instructions. Pilot the test, then run sessions, observe and collect data. Analyse findings, prioritise issues, report to stakeholders and iterate until major problems are resolved.
