How to Test a Prototype: Complete Guide (2026)

Learn how to test a prototype using user feedback, usability testing, and iterative improvements to validate design and functionality.

Building the wrong thing is expensive. I’ve seen early‑stage teams spend months polishing a feature nobody needed. When users finally tried it, the friction was obvious and the budget was gone. Prototype testing is cheap insurance: it surfaces issues while they’re still a drag‑and‑drop in Figma, not a multi‑sprint rewrite. In this guide I’ll show how to test a prototype end‑to‑end so you can reduce risk and build with conviction. We’ll cover methodology, best practices, pitfalls to avoid and a few frequently asked questions—all grounded in experience and research.

What is prototype testing and why does it matter?

A prototype is an early representation of a product. As Dovetail notes, prototypes range from paper sketches that test basic navigation to fully interactive models that closely resemble the final product. Prototype testing means putting these representations in front of users to validate design decisions before development. The goal is to uncover issues like confusing navigation, unclear interfaces or inefficient workflows while changes are still inexpensive.

Prototype testing is distinct from user testing. User testing asks “do people need or want this product?”; prototype testing asks “can people use this design?”. It sits between ideation and development and is a core practice in design thinking and lean methodology. RealEye’s 2024 guide explains that testing an early model helps designers see the product through users’ eyes, while Loop11’s research shows that prototype testing minimizes workload and saves time by catching issues early.

So why test before you build? Because the cost of a wrong bet isn’t just code rework—it’s opportunity cost. Dovetail highlights that changes made at the prototype stage are far less costly than post‑launch revisions. Early testing shortens development cycles, provides evidence over gut feel and aligns stakeholders around data. Small, lean teams can’t afford weeks of misdirected engineering. They need to validate fast, iterate and move on.

Timing matters. There’s a sweet spot between testing too early and too late. Useful trigger points include:

- Early conceptual stage: Low‑fidelity sketches reveal foundational issues.

- During design and development: Clickable wireframes refine interactions.

- Before major milestones: High‑fidelity prototypes verify details before code.

- After major updates: Test new features before roll‑out.

If everything is still conceptual, test internally first. If you’re essentially doing QA on a finished product, you’re too late for prototype testing.

Planning and building your prototype test

Before diving into how to test a prototype, take time to plan. Clarity here saves hours later.

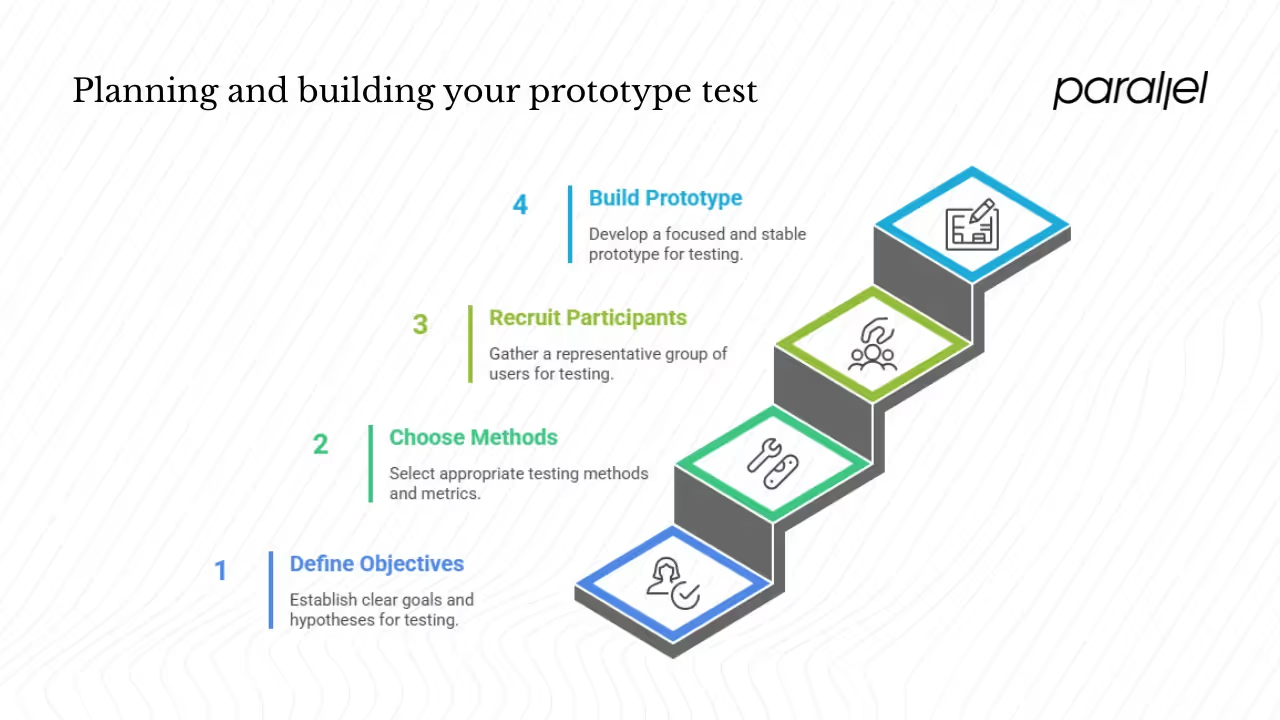

1) Define objectives and hypotheses

Every test needs a clear objective. What do you want to learn? Can users find feature X? Does this navigation make sense? Translate objectives into testable hypotheses, such as “80% of first‑time users will complete sign‑up without assistance.” Prioritize what you need to test: usability, performance, design aesthetics or functional feasibility. Writing hypotheses forces you to focus and helps determine tasks, metrics and participants.
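Writing a hypothesis down as data makes the pass/fail rule explicit before any session runs. Here is a minimal sketch, assuming you record one true/false outcome per participant; `Hypothesis` and `evaluate` are hypothetical names for illustration, not part of any testing tool.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """Hypothetical record: at least `threshold` of participants achieve the outcome."""
    statement: str
    threshold: float  # minimum share of participants, e.g. 0.80 for 80%


def evaluate(hypothesis: Hypothesis, outcomes: list[bool]) -> tuple[float, bool]:
    """Return the observed success share and whether it meets the threshold."""
    if not outcomes:
        raise ValueError("need at least one participant outcome")
    share = sum(outcomes) / len(outcomes)
    return share, share >= hypothesis.threshold


signup = Hypothesis(
    statement="First-time users complete sign-up without assistance",
    threshold=0.80,
)

# Made-up results for ten participants: True = completed sign-up unassisted.
outcomes = [True, True, False, True, True, True, True, True, False, True]
share, supported = evaluate(signup, outcomes)
print(f"{share:.0%} completed unassisted; hypothesis supported: {supported}")
```

With ten participants, each session moves the share by ten points, so read a pass or fail alongside the sample-size caveats in step 3.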

2) Choose methods and metrics

No single method fits every question. Choose a mix based on your goals:

- Usability testing: Moderated sessions let you observe behavior and ask follow‑up questions; unmoderated sessions scale quickly but provide less context.

- A/B testing: Compare design variations when evaluating specific UI choices.

- Benchmarking: Measure your design against a baseline or competitor.

- Surveys and post‑task questionnaires: Gather satisfaction scores and subjective feedback.

- Wizard‑of‑Oz: Simulate backend logic manually when features aren’t built.
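For the A/B comparisons in the list above, one common way to check whether two variants' task success rates really differ is a two-proportion z-test. This is a sketch using only Python's standard library and made-up counts. The normal approximation is shaky at the five-to-eight-user scale of qualitative rounds; there, an exact test (such as Fisher's) or simply more participants is the safer choice.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided pooled z-test for a difference between two success rates.

    Returns (z, p_value). Relies on the normal approximation, so it is only
    reasonable when each variant has a decent number of successes and failures.
    """
    rate_a = success_a / n_a
    rate_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation: every participant succeeded, or every one failed")
    z = (rate_b - rate_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Made-up counts: variant A (current) vs variant B (redesign), 40 participants each.
z, p = two_proportion_z_test(success_a=24, n_a=40, success_b=33, n_b=40)
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```

A positive z means variant B's success rate is higher; a small p-value says the gap is unlikely to be noise alone, not that it is large enough to matter.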

Combine quantitative metrics (success rate, time on task, error counts) with qualitative feedback. Define success criteria up front, for example “At least 80% of participants complete checkout in under two minutes,” so you know when to iterate or move on.
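To make the checkout criterion concrete, here is a small sketch that derives the three metrics named above from per-session records. The `Session` fields, the error definition and the data are assumptions for illustration; a session only counts as a success if the task was completed within the two-minute limit.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Session:
    participant: str
    completed: bool
    seconds: float  # time on task
    errors: int     # e.g. wrong taps or backtracks, as defined in your test plan


def summarize(sessions: list[Session], max_seconds: float = 120.0) -> dict:
    """Success rate (completed under the time limit), median completion time, mean errors."""
    completed = [s for s in sessions if s.completed]
    on_time = [s for s in completed if s.seconds < max_seconds]
    return {
        "success_rate": len(on_time) / len(sessions),
        "median_seconds": statistics.median([s.seconds for s in completed]) if completed else None,
        "mean_errors": statistics.mean([s.errors for s in sessions]),
    }


# Made-up checkout sessions.
sessions = [
    Session("P1", True, 95, 0),
    Session("P2", True, 130, 2),
    Session("P3", True, 80, 1),
    Session("P4", False, 200, 4),
    Session("P5", True, 110, 1),
]
summary = summarize(sessions)
meets_criterion = summary["success_rate"] >= 0.80  # "80% complete checkout in under two minutes"
print(summary, "criterion met:", meets_criterion)
```

Here P2 finished but took too long, so only three of five sessions count and the round misses the 80% bar. The median time and error count then point to where to look in the recordings.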

3) Recruit participants and decide sample size

Recruit users who resemble your target personas, and include edge cases such as power users and people with accessibility needs. Sample size depends on your goals. The famous five‑user rule (five participants uncover about 85% of usability issues) has caveats: it comes from Nielsen and Landauer's model, which assumes each participant reveals roughly 31% of the problems, and it applies to qualitative problem discovery, not to measuring metrics. UXArmy notes that qualitative tests can start with five to eight users, but quantitative studies need larger samples, potentially 30 or more. Unmoderated remote tests often require slightly more participants to account for low‑quality sessions. Incentivize participants appropriately and use screeners to ensure fit.
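The five-user rule comes from a simple model: if each participant reveals a share p of the problems, n participants are expected to reveal 1 - (1 - p)^n of them. The sketch below uses Nielsen and Landauer's average p = 0.31 as an assumption, plus the usual normal-approximation margin of error for a measured success rate (worst case at a 50% rate) and a hypothetical 25% discard rate for unmoderated sessions. Treat the outputs as planning aids, not guarantees.

```python
import math

# Nielsen and Landauer's average share of problems one participant reveals.
# Your product's rate may differ a lot; 0.31 is an assumption, not a constant.
DETECTION_RATE = 0.31


def share_found(n_users: int, p: float = DETECTION_RATE) -> float:
    """Expected share of problems seen by at least one of n users: 1 - (1 - p)^n."""
    return 1 - (1 - p) ** n_users


def users_needed(target_share: float, p: float = DETECTION_RATE) -> int:
    """Smallest n whose expected problem discovery reaches target_share (< 1)."""
    return math.ceil(math.log(1 - target_share) / math.log(1 - p))


def margin_of_error(n: int, rate: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a success rate measured on n people."""
    return z * math.sqrt(rate * (1 - rate) / n)


def recruit_count(usable_target: int, discard_rate: float) -> int:
    """People to recruit so about usable_target sessions survive quality screening."""
    return math.ceil(usable_target / (1 - discard_rate))


print(f"5 users find ~{share_found(5):.0%} of problems")      # ~84%
print(f"30 users: success rate +/- {margin_of_error(30):.0%}")  # +/- 18%
print("recruit for 20 usable unmoderated sessions:", recruit_count(20, 0.25))  # 27
```

Five users finding about 84% of problems is where the “85%” figure comes from. A success rate measured on 30 people still carries roughly a ±18-point margin, which is why quantitative targets need larger samples than qualitative rounds.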

4) Build only what you need

When building the prototype, scope ruthlessly. Early questions about navigation or concept validation often require low‑fidelity sketches or wireframes; performance or flow tests need higher fidelity and realistic data. RealEye distinguishes between low‑fidelity paper prototypes and detailed digital models. Don’t overbuild: every extra screen is another bug waiting to happen.

Use stubs or mocks to simulate backend logic. Wizard‑of‑Oz techniques let you test flows without full engineering. Clean up your prototype—remove dead ends, fix broken links and ensure accessibility. Instrument it with analytics tags to capture clicks and timing. A stable prototype respects participants’ time and yields better data.
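Most prototyping tools ship their own analytics, so this only matters if your prototype is code you control. A minimal sketch, assuming a hypothetical `PrototypeEventLog` that the prototype calls on task start, each click and task end; the event names are illustrative, and the clock is injectable so the example stays deterministic.

```python
import time
from collections import defaultdict


class PrototypeEventLog:
    """Hypothetical in-memory log of (participant, task, event, timestamp) tuples."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # injectable so tests can use a fake clock
        self.events = []

    def track(self, participant: str, task: str, event: str) -> None:
        self.events.append((participant, task, event, self._clock()))

    def time_on_task(self) -> dict:
        """Seconds from each 'task_start' to the matching 'task_end'."""
        starts, durations = {}, {}
        for participant, task, event, ts in self.events:
            key = (participant, task)
            if event == "task_start":
                starts[key] = ts
            elif event == "task_end" and key in starts:
                durations[key] = ts - starts.pop(key)
        return durations

    def clicks(self) -> dict:
        """Number of 'click' events per (participant, task)."""
        counts = defaultdict(int)
        for participant, task, event, _ in self.events:
            if event == "click":
                counts[(participant, task)] += 1
        return dict(counts)


# Usage with a fake clock (timestamps in seconds) so the output is predictable.
ticks = iter([0.0, 2.0, 5.0, 31.5])
log = PrototypeEventLog(clock=lambda: next(ticks))
log.track("P1", "checkout", "task_start")
log.track("P1", "checkout", "click")
log.track("P1", "checkout", "click")
log.track("P1", "checkout", "task_end")
print(log.time_on_task())  # {('P1', 'checkout'): 31.5}
print(log.clicks())        # {('P1', 'checkout'): 2}
```

In a real prototype you would persist these events rather than keep them in memory, but the shape (who, which task, what happened, when) is what the analysis step needs.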

Running the test and analyzing results

The execution phase is where you put your plan into practice. Choose between moderated and unmoderated sessions. Moderated tests provide rich insight: you can observe body language, encourage participants to think aloud and probe deeper. They are time‑intensive but ideal for complex flows. Unmoderated tests scale quickly and let participants use your prototype in their natural context. However, you have less control and may need to discard incomplete or low‑quality sessions.
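Deciding which unmoderated sessions to discard is easier to defend when the rules are written down before the data arrives. This sketch assumes three hypothetical rules (every task attempted, a minimum plausible duration, a passed attention-check question); the field names and thresholds are illustrative and should be tuned to your study.

```python
from dataclasses import dataclass


@dataclass
class RemoteSession:
    participant: str
    tasks_attempted: int
    total_seconds: float
    passed_attention_check: bool  # hypothetical check question in the post-test survey


def is_usable(s: RemoteSession, n_tasks: int, min_seconds: float = 60.0) -> bool:
    """Keep sessions that attempted every task, were not implausibly fast,
    and passed the attention check. All thresholds are assumptions to tune."""
    return (
        s.tasks_attempted == n_tasks
        and s.total_seconds >= min_seconds
        and s.passed_attention_check
    )


def split_sessions(sessions, n_tasks, min_seconds=60.0):
    """Return (kept, discarded) so the discards can still be reviewed by hand."""
    kept = [s for s in sessions if is_usable(s, n_tasks, min_seconds)]
    discarded = [s for s in sessions if not is_usable(s, n_tasks, min_seconds)]
    return kept, discarded


sessions = [
    RemoteSession("R1", 4, 540.0, True),
    RemoteSession("R2", 2, 300.0, True),   # abandoned halfway
    RemoteSession("R3", 4, 35.0, True),    # clicked through too fast
    RemoteSession("R4", 4, 610.0, True),
    RemoteSession("R5", 4, 480.0, False),  # failed attention check
]
kept, discarded = split_sessions(sessions, n_tasks=4)
print([s.participant for s in kept], [s.participant for s in discarded])
```

Returning the discards instead of silently dropping them matters: a session abandoned halfway may be evidence of a real blocker, not just a low-effort participant.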

1) Facilitate with care

Be a neutral observer. Explain the purpose—“We’re testing the design, not you”—and encourage participants to think aloud. Avoid leading questions; instead ask, “What would you do next?” or “What was confusing?” Record sessions (screen, audio and video) and take notes. Capture direct quotes; they humanize the data and resonate with stakeholders. After each task, use frameworks like “I Like / I Wish / What If” to structure feedback. Short surveys capture satisfaction or Net Promoter Score.
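If a post-session survey includes the standard 0 to 10 “How likely are you to recommend…” question, Net Promoter Score is the percentage of promoters (9 or 10) minus the percentage of detractors (0 to 6). A small sketch with made-up answers:

```python
def net_promoter_score(scores: list[int]) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6), on a -100 to 100 scale."""
    if not scores:
        raise ValueError("no responses")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("NPS answers must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Made-up answers to "How likely are you to recommend this to a friend?" (0-10)
print(net_promoter_score([10, 9, 8, 7, 6, 3, 9, 10]))  # 25.0
```

With the five to eight participants of a typical qualitative round, one answer can swing NPS by more than ten points, so treat it as directional and lean on the quotes behind the scores.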

2) Handle surprises gracefully

Participants will deviate from the script. If they wander off flow, gently redirect, but note the deviation—it may reveal hidden problems. Have backup tasks in case something breaks. Be prepared for technical issues (network drop, incompatible device) with contingency plans and buffer time.

3) Consolidate and analyze

Once testing is complete, consolidate quantitative and qualitative data. Look for patterns: where did multiple users stumble? Did specific metrics fall below thresholds? Use heatmaps or clickstream analytics to visualize behavior. Classify each issue as critical, major or minor and perform root‑cause analysis—was it terminology, layout or missing feedback? Prioritize fixes based on impact versus effort. Benchmark results against previous iterations or competitors to gauge progress.
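Impact versus effort can be made concrete with a simple score. This is one possible weighting, not a standard: the severity weights (critical 3, major 2, minor 1), the effort scale and the issues themselves are hypothetical. Impact here is the severity weight times the share of participants who hit the issue.

```python
from dataclasses import dataclass

# Hypothetical weights for the critical / major / minor classification.
SEVERITY_WEIGHT = {"critical": 3, "major": 2, "minor": 1}


@dataclass
class Issue:
    title: str
    severity: str          # "critical", "major" or "minor"
    participants_hit: int  # how many participants ran into it
    effort: int            # rough estimate, e.g. 1 (hours) to 5 (sprints)


def prioritize(issues: list[Issue], n_participants: int) -> list[tuple[str, float]]:
    """Rank issues by impact / effort, highest first."""
    scored = []
    for issue in issues:
        impact = SEVERITY_WEIGHT[issue.severity] * issue.participants_hit / n_participants
        scored.append((issue.title, impact / issue.effort))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


issues = [
    Issue("Back button loses form data", "major", 3, 3),
    Issue("Jargon in empty state", "minor", 4, 1),
    Issue("Pricing step unclear", "critical", 6, 2),
]
for title, score in prioritize(issues, n_participants=8):
    print(f"{score:.2f}  {title}")
```

Note that a cheap minor fix can outrank an expensive major one; that is the point of dividing by effort, but a critical issue that blocks the core task should usually be fixed regardless of its score.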

4) Iterate and decide when to stop

Prototype testing is inherently iterative. Incorporate findings into a revised prototype and test again, focusing each cycle on the riskiest assumptions. Use A/B or multivariate tests when comparing specific changes. Stop testing when metrics meet your success criteria (e.g., 90% success rate), when two consecutive rounds uncover no new major issues, or when business constraints dictate. Document what you tested, decisions made and rationales to preserve institutional memory.
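The first two stopping rules can be written as a small check you run after each round. This sketch assumes you record, per round, the task success rate and the number of new major or critical issues; the `Round` structure and the 90% default are illustrative, and business constraints are deliberately left to human judgment.

```python
from dataclasses import dataclass


@dataclass
class Round:
    success_rate: float
    new_major_issues: int  # critical or major issues not seen in earlier rounds


def should_stop(rounds: list[Round], target_rate: float = 0.90) -> tuple[bool, str]:
    """Apply two stopping rules; business constraints are left to human judgment."""
    if not rounds:
        return False, "no rounds run yet"
    if rounds[-1].success_rate >= target_rate:
        return True, "latest round meets the success criterion"
    if len(rounds) >= 2 and all(r.new_major_issues == 0 for r in rounds[-2:]):
        return True, "two consecutive rounds found no new major issues"
    return False, "keep iterating"


history = [Round(0.55, 4), Round(0.75, 0), Round(0.85, 0)]
print(should_stop(history))
```

Returning the reason alongside the decision makes it easy to record in your test log why a round of iteration ended.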

Advanced considerations, trends and pitfalls

1) Remote testing and accessibility

Remote tools like Maze or UXtweak let you reach participants anywhere. They’re invaluable when you need a larger sample or can’t bring users into the lab. Keep in mind that unmoderated studies require more participants to account for low‑quality responses. Always ensure your prototype is accessible: check color contrast, support keyboard navigation and test with screen readers. Inclusive design broadens your market and aligns with ethical standards.
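Color contrast is one accessibility check you can automate. WCAG 2.x defines the contrast ratio as (L1 + 0.05) / (L2 + 0.05), where L1 and L2 are the relative luminances of the lighter and darker colors, and level AA asks for at least 4.5:1 for normal text and 3:1 for large text. A minimal sketch for 6-digit hex colors (shorthand like `#fff` is not handled):

```python
def _linearize(channel_8bit: int) -> float:
    """Convert one 0-255 sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a #rrggbb color, from 0 (black) to 1 (white)."""
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError("expected a 6-digit hex color like #1a2b3c")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05), from 1:1 up to 21:1."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """WCAG AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(f"{contrast_ratio('#767676', '#ffffff'):.2f}:1", passes_aa("#767676", "#ffffff"))
print(f"{contrast_ratio('#777777', '#ffffff'):.2f}:1", passes_aa("#777777", "#ffffff"))
```

The two grays differ by a single step yet land on opposite sides of the AA threshold on white, which is why checking the ratio beats eyeballing it. Automated contrast checks complement, but do not replace, keyboard and screen-reader testing.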

2) Sustainability and AI‑driven prototyping

Rapid prototyping is evolving. A 2025 trend analysis reports that prototypes have become data‑driven models that influence investment decisions and supply‑chain planning. Artificial intelligence speeds up iteration by predicting stress points and user interactions. Sustainability is a new benchmark—materials and processes are chosen not only for function but also for environmental impact. Micro‑iteration cycles and low‑code platforms allow non‑engineers to prototype. When thinking about how to test a prototype, consider these shifts: AI tools can simulate interactions, and customers increasingly value sustainable practices.

3) Common pitfalls and mitigation

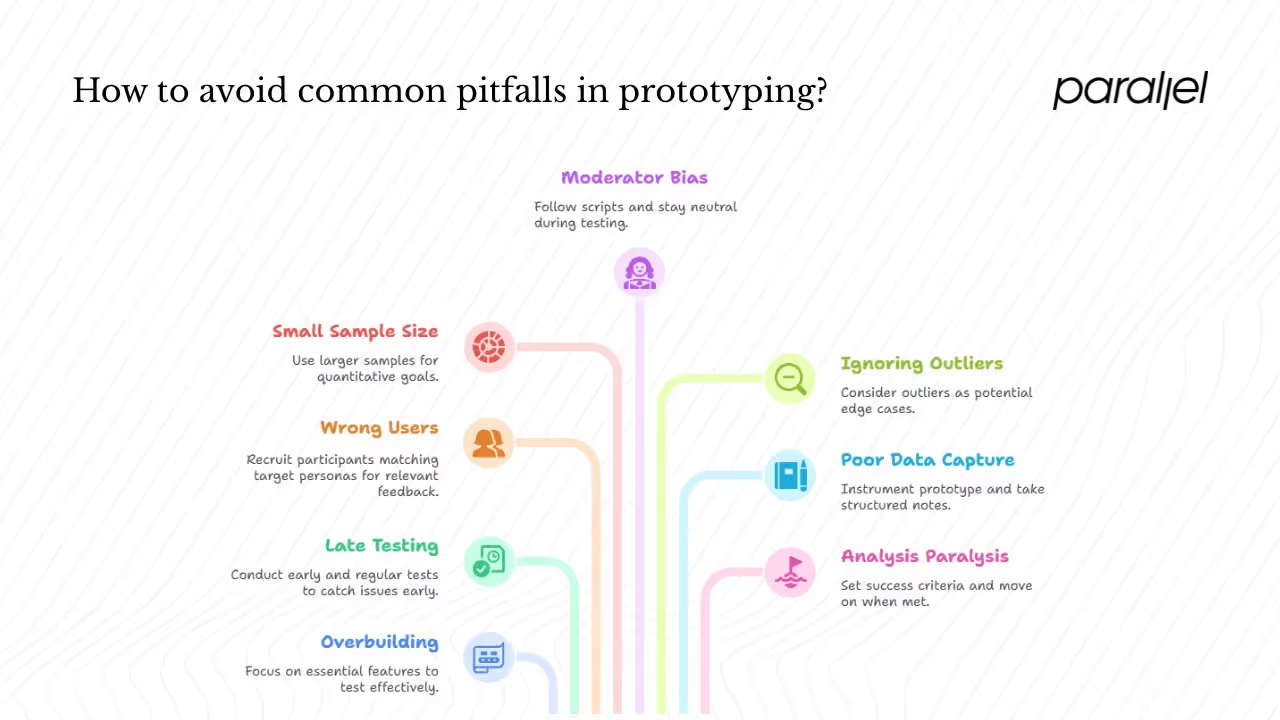

Even with a solid plan, teams stumble. Watch out for these:

- Overbuilding: Scope the prototype to what you intend to test.

- Testing too late or too infrequently: Early and regular tests catch issues when they’re cheap to fix.

- Recruiting the wrong users: Ensure participants match your target personas; otherwise feedback is noise.

- Small sample size for quantitative goals: Qualitative insights may come from five users, but metrics need larger samples.

- Moderator bias: Follow scripts and stay neutral.

- Ignoring outliers: A single outlier may reveal an edge case worth addressing.

- Poor data capture: Instrument your prototype and take structured notes.

- Analysis paralysis: Set success criteria and move on when you meet them.

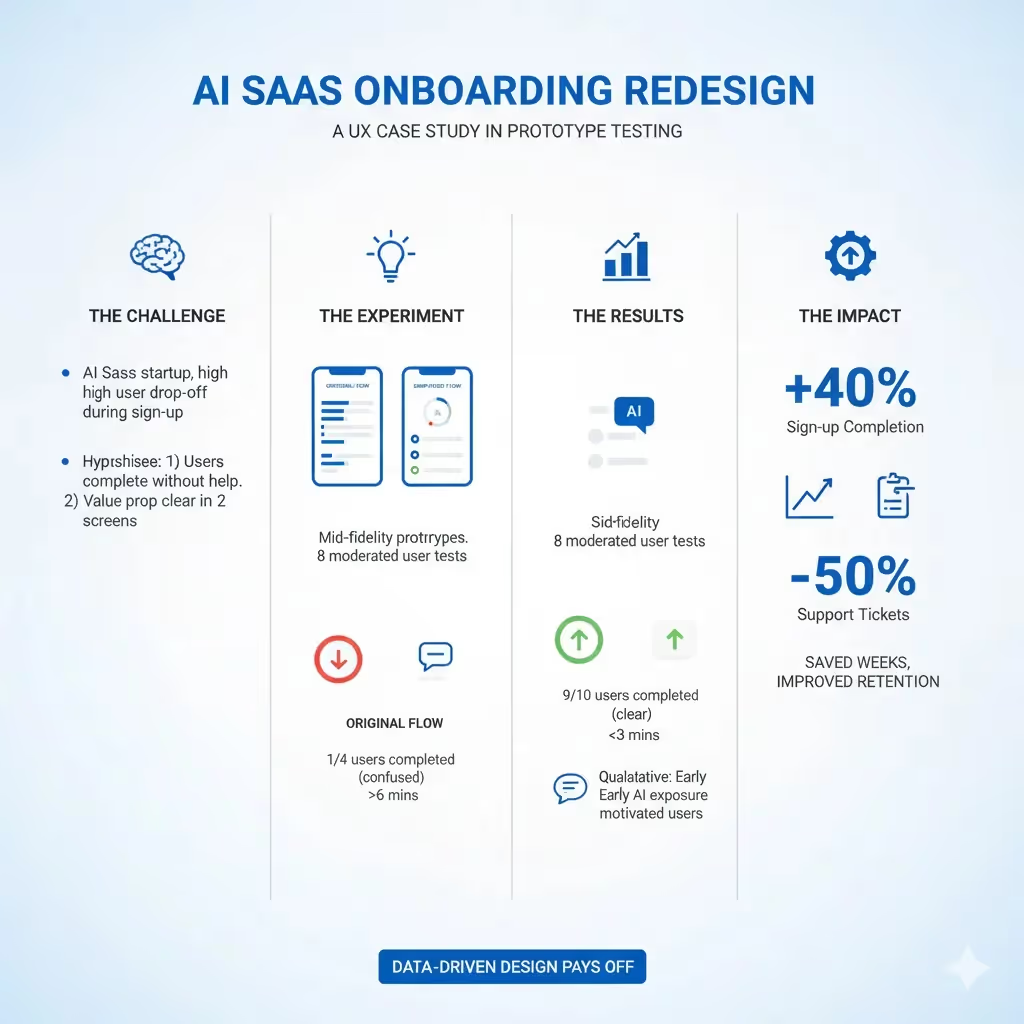

A brief case study

We recently worked with an AI SaaS startup struggling with onboarding: users were dropping off during signup. We defined two hypotheses: users can complete onboarding without help, and they understand the value proposition within two screens. We built two mid‑fidelity prototypes, the original flow and a simplified variant, and ran moderated tests with eight participants from the target segment. Only one in four participants completed the original flow without confusion, and completing it took over six minutes. With the simplified flow, nearly nine in ten finished in under three minutes. The qualitative takeaway was that early exposure to the AI’s capabilities motivated users to continue. Armed with this data, the team redesigned onboarding; sign‑up completion increased by roughly 40% and support tickets dropped by half. Knowing how to test a prototype saved weeks of development and improved retention.

Conclusion

Mastering how to test a prototype is about embracing evidence‑based iteration. Start with clear objectives and hypotheses. Choose appropriate methods and metrics. Recruit participants who mirror your users and decide sample sizes based on whether you seek qualitative insights or quantitative benchmarks. Build prototypes at the right fidelity, simulate missing features and polish them for testing. Run tests with neutrality, capture both behavioral data and subjective feedback, and analyze holistically. Iterate quickly, stop when metrics meet thresholds and document everything.

Frequently asked questions

1) What are the four steps of testing a prototype?

- Define objectives and hypotheses. Decide what you need to learn.

- Build a testable prototype. Scope it to the flows or features you need to validate and simulate missing parts.

- Run the test with users. Choose methods, recruit participants and execute sessions.

- Analyze feedback and iterate. Consolidate data, prioritize issues, refine the prototype and repeat until success criteria are met.

2) How do we test a prototype?

Plan your goals, decide methods, recruit the right users, build a prototype, run tests (moderated or unmoderated), capture data and iterate. Repeat this loop until you’ve met your success thresholds. That’s the essence of how to test a prototype.

3) Which method is commonly used to test prototypes?

Usability testing is the most common method. It may be moderated—where a facilitator observes and probes—or unmoderated, which scales to larger samples. Teams often supplement with surveys, A/B tests or benchmarks depending on the question.

4) What is the checklist for prototype testing?

- Objectives and hypotheses defined

- Metrics and success criteria set

- Participant recruitment plan in place

- Test plan and script ready, pilot‑tested

- Prototype polished and instrumented

- Recording setup prepared

- Facilitation guidelines drafted

- Data capture templates ready

- Issue severity schema defined

- Iteration plan prepared

By following this checklist and integrating prototype evaluation into your culture, you’ll not only know how to test a prototype but also build products that users love and businesses can trust.

Your next step is to make prototype testing habitual. Resist the temptation to build without validation; remember that early feedback saves both time and money. When questions arise in your product journey, ask yourself how to validate your prototype rather than relying on guesswork. That mindset separates teams that flounder from those that deliver value consistently. Be curious, be humble, and let your users guide you.
