How to Train an AI Model: Complete Guide (2026)

Understand the process of training AI models, including data collection, preprocessing, algorithm selection, and evaluation.

Many founders and product managers want to know how to train an AI model because custom models can improve speed, differentiation and product fit. Yet the process can seem hidden. I'm Robin Dhanwani from Parallel, and I’ll share a practical, step‑by‑step perspective drawn from our work with early‑stage teams.

You’ll see what matters at each stage—from handling data and selecting architectures to validation and deployment—and where the trade‑offs lie. By the end, you’ll have a grounded view of how to train an AI model that supports decision‑making without hype.

Why it matters for startups

Running your own model isn’t about vanity; it’s about solving real business problems. Early‑stage companies gain three advantages when they understand how to train an AI model:

- Speed and autonomy: Off‑the‑shelf models can be heavy and slow. A focused model trained on your data runs faster and cuts dependency on vendors. A cloud‑hosted model with pruning or quantization can even run on modest hardware, reducing costs.

- Differentiation: Startups succeed by doing something unique. Training your own model allows you to encode domain‑specific knowledge and behavioural patterns, making the experience feel distinctive to your users.

- Ownership: Your data often contains sensitive patterns. Handling training in‑house reduces privacy risk and compliance overhead. You decide how much bias mitigation, fairness and explainability to implement.

However, there are trade‑offs. Small teams may struggle with compute budgets, engineering skill and time. That’s why this guide stresses minimal viable models—starting simple and iterating. When you appreciate how to train an AI model, you can make informed choices about investing in people, hardware or partnerships. In other words, understanding how to train an AI model becomes a multiplier for early‑stage teams seeking speed and clarity.

What are the core concepts of AI model training?

Before diving into steps, let’s clarify a few ideas:

Machine learning vs neural networks

Machine learning covers a broad family of algorithms that learn patterns from data. Linear and logistic regression remain popular because they’re simple, interpretable and require little data. Neural networks, inspired by biological neurons, excel at capturing non‑linear relationships and complex patterns but demand more data, compute and tuning. When choosing a model, consider the nature of your problem, dataset size and the interpretability your stakeholders need.

Data preparation & preprocessing

Raw data is messy—missing values, noise, duplicated entries and inconsistent formats. Analysts spend about 80% of their time cleaning and preparing data. Preprocessing involves evaluating, filtering, encoding, scaling and removing outliers to make data usable. Good preprocessing reduces compute time and improves model performance. We’ll walk through these steps later.

Feature engineering, loss functions & optimization

- Feature engineering: selecting or transforming inputs to highlight patterns. Thoughtful engineering can significantly improve model performance, especially with structured data.

- Loss function: measures the difference between predicted and actual values. For regression tasks, mean squared error (MSE) calculates the average squared difference, always positive due to randomness or bias. Classification uses cross‑entropy, and generative tasks use other metrics like FID.

- Optimization: methods like gradient descent adjust model parameters to minimize the loss. You’ll tune learning rates, batch sizes and other hyperparameters to find a good minimum.

With these basics, let’s break down how to train an AI model into manageable steps.

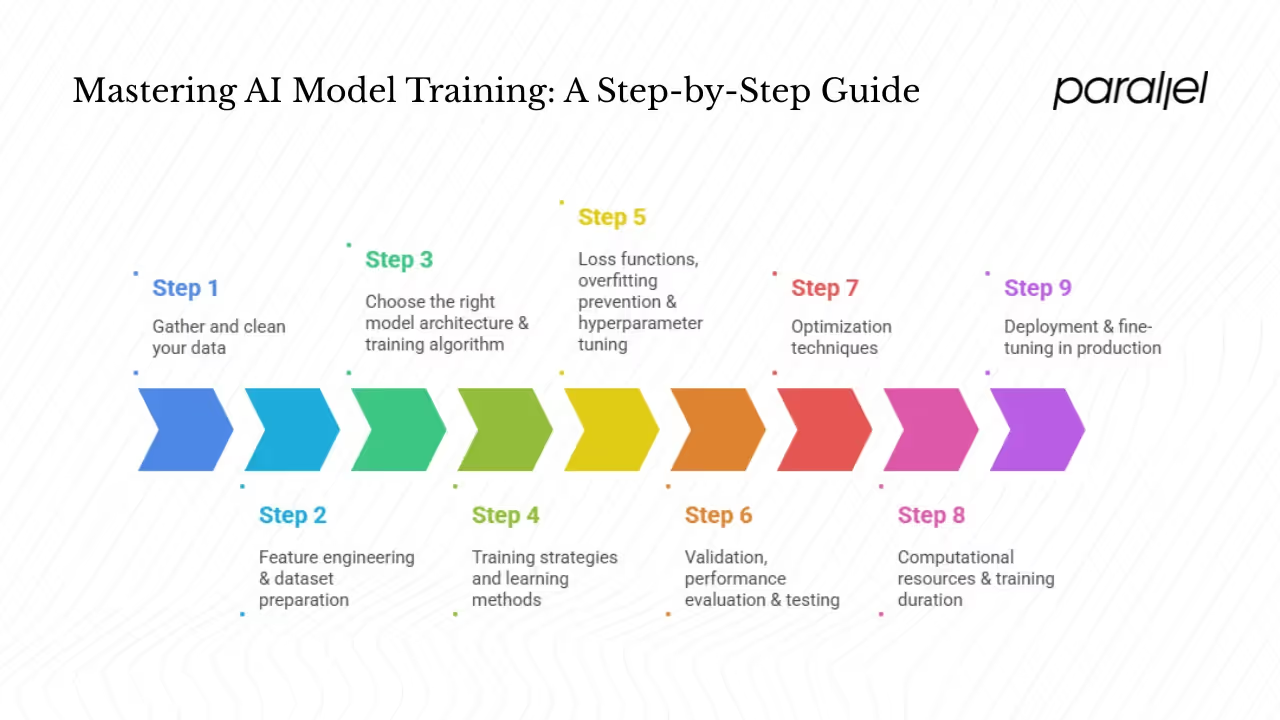

How to train an AI model: Step‑by‑step

Step 1: gather and clean your data

Start by collecting data relevant to your problem: user interactions, transaction logs, images, text or audio. Then prepare it:

- Cleaning and normalization: remove duplicates, fix inconsistent units and scale values. Normalization ensures features with different scales don’t dominate the model. Data wrangling has a big impact—preprocessing reduces training time and compute demands.

- Handling missing values: decide whether to drop rows, impute missing data or use models that handle missing values. Always document the choice and its rationale.

- Annotate or label: for supervised learning, data needs labels. Use in‑house annotators or platforms. Watch out for noisy labels; they can mislead the model.

- Bias and noise checks: examine representation across demographics to avoid unfair outcomes. Bias is not just an ethical issue; it also affects performance.

- Split into sets: divide into training, validation and test sets. A common ratio is 70/15/15, but it can vary. The evaluation sets should reflect the real‑world distribution.

Step 2: feature engineering & dataset preparation

Features are the signals the model learns from. For tabular data, you might derive ratios, differences or time‑based features. For text, use tokenization and embeddings. For images, you might compute edges or use raw pixels. Good features simplify the learning problem and help the model converge faster. Even when using modern neural architectures that learn features automatically, performing basic normalization and filtering remains crucial.

Step 3: choose the right model architecture & training algorithm

Model choice depends on the task and resources:

- Regression or classification with structured data: start with linear or logistic regression or decision trees. They provide interpretability, quick training and a baseline. Random forests and gradient boosting capture non‑linear interactions with moderate complexity.

- Support Vector Machines (SVMs): effective for high‑dimensional data; they work well on smaller datasets but can be slow on large ones.

- Neural networks: these excel at image, speech and text tasks. Convolutional neural networks (CNNs) handle images; recurrent or transformer architectures manage sequences. Large language models (LLMs) like GPT rely on transformers.

When selecting, weigh interpretability against performance. A simpler model may suffice for many early‑stage needs and is easier to explain to stakeholders.

Step 4: training strategies and learning methods

Different methods suit different data scenarios:

- Supervised learning: trains on labeled data. Suitable when you have enough annotated examples.

- Unsupervised learning: finds patterns in unlabeled data—clustering, anomaly detection and dimensionality reduction.

- Semi‑supervised learning: leverages a small labeled dataset with a larger unlabeled set, useful when labeling is expensive.

For language models:

- Pretraining: train a model on large unlabeled corpora, learning general patterns of language. This stage uses unsupervised objectives like predicting the next word.

- Fine‑tuning: adapt the pretrained model to a specific task with labeled data. Fine‑tuning is cheaper than training from scratch and yields better performance.

- Reinforcement Learning from Human Feedback (RLHF): optimize language models toward human preferences using a reward model; this approach improves alignment and has been used to refine ChatGPT.

Step 5: loss functions, overfitting prevention & hyperparameter tuning

- Loss functions: classification problems often use cross‑entropy to measure divergence between predicted probabilities and true labels. Regression uses MSE, while generative models may use adversarial losses plus FID to gauge realism.

- Prevent overfitting: overfitting happens when your model learns noise instead of signal. Use holdout data, regularization (L1/L2 penalties), dropout in neural networks and early stopping when validation loss stops improving.

- Hyperparameter tuning: adjust learning rate, number of layers, hidden units, batch size and regularization strength. Start with manual grid search, then move to automated methods like Bayesian optimization when resources allow. Use small experiments to narrow the range before scaling up.

Step 6: validation, performance evaluation & testing

- Validation set: evaluate your model during training to tune hyperparameters and detect overfitting.

- Test set: assess final generalization to unseen data. Keep it untouched during training.

- Metrics: choose metrics aligned with your objective. For classification: accuracy measures the proportion of correct predictions but is misleading on imbalanced data. Precision and recall capture trade‑offs: precision is true positives divided by predicted positives, while recall measures the fraction of actual positives detected. The F1 score balances them, especially for imbalanced datasets. For generative models, use FID to compare the distribution of generated and real images.

Step 7: optimization techniques

Once your baseline model is ready, you can optimize it:

- Pruning: remove parameters or neurons with little impact on accuracy to reduce model size. Structured pruning removes entire neurons or filters, while unstructured pruning removes individual weights. This shrinks memory footprint and speeds inference.

- Quantization: convert floating‑point parameters (FP32) to lower precision (FP16 or INT8). Quantization aware training incorporates quantization during training, preserving accuracy better than post‑training quantization. These techniques make deployment on edge devices feasible.

- Retraining and incremental learning: as your application evolves, re‑train the model with new data. Use techniques like continual learning to avoid forgetting earlier knowledge.

Step 8: computational resources & training duration

Model training demands vary widely. Simple regressions run on laptops; LLMs require clusters. Training computation is measured in floating‑point operations per second (FLOPs), often petaFLOPs. The computational load depends on dataset size, model complexity and parallel processing. Hardware choices include GPUs and TPUs for parallelism; renting them from cloud providers reduces capital expenditure but adds ongoing costs.

Be mindful of environmental and social costs. Generative models consume immense electricity and water for cooling; they also require high‑performance hardware whose production has environmental impact. Startups should weigh whether the benefits justify the resource use and consider less resource‑intensive models when possible.

Step 9: deployment & fine‑tuning in production

After training, prepare your model for real‑world use:

- Packaging: export the model in a portable format (e.g., ONNX or TensorFlow SavedModel). Build a simple API endpoint for inference. Containerize the service so it can run consistently across environments.

- Monitoring: implement logging to track prediction confidence, latency and usage. Monitor for drift: if input distributions change, performance may degrade.

- Feedback loops: collect user interactions or annotations to retrain the model. Use strategies like active learning to choose the most informative samples. Periodic fine‑tuning keeps the model relevant.

Business & user experience considerations

1) Explainability & transparency

As models influence decisions, stakeholders need to trust them. Explainability tools (e.g., SHAP values, attention maps) highlight which inputs contributed to a decision. Clear documentation about data sources, training methods and limitations helps maintain trust. The Stanford AI Index notes that fairness in AI involves ensuring systems are equitable and avoid discrimination; however, only 29% of organizations consider fairness risks, and the average adoption of fairness measures is 1.97 out of five. Startups must prioritise fairness early to avoid future trust problems.

2) UX implications: trust, sycophancy risk, behaviour in interfaces

Models influence user experience. For chat or recommendation products, a model may mimic user preferences too closely (sycophancy) or entrench biases. Design interfaces that allow users to provide corrections and see alternative recommendations. Provide fallback options when the model’s confidence is low.

3) Objective evaluation & versioning workflows

Implement version control for datasets and models. Use tools that track which dataset and code produced each model, enabling reproducibility. Define evaluation metrics at the beginning and stick to them; avoid shifting metrics after seeing results. Peer reviews and cross‑functional involvement (design, engineering, ethics) provide different perspectives and catch blind spots.

Challenges & best practices

Training a model is both technical and organizational. Common challenges include:

- Data quality: messy, biased or incomplete data leads to poor models. Invest early in cleaning and data governance.

- Privacy & regulatory constraints: user data may be governed by regulations like GDPR. Anonymize data, implement consent management and respect user rights.

- Compute costs: training on GPUs or TPUs can be expensive. Use cloud credits, optimize models via pruning and quantization, and consider small models initially.

- Ethical issues: fairness, transparency and environmental impact cannot be afterthoughts. Build them into your requirements.

Best practices to navigate these challenges:

- Start small and iterate. Build a baseline with simple models, test quickly and expand only when necessary. Early experiments reveal whether a complex approach is justified.

- Define clear evaluation metrics upfront. Pick metrics aligned with your user impact (e.g., recall for fraud detection). Consider fairness and user outcomes, not just accuracy.

- Version datasets and models. Use tools like DVC or MLflow to track data and models. Knowing which data produced which model prevents confusion later.

- Maintain bias and drift audits. Regularly test your model for unfair outcomes across groups. Monitor performance over time to catch concept drift.

By following these practices, you increase the chance of delivering value while avoiding common pitfalls.

Conclusion

Founders and product leaders shouldn’t fear how to train an AI model. Start with a clear problem, gather the right data and choose a simple approach. Then iterate and refine. Training a model is not a one‑off event but a continuous practice of learning from data and feedback. With careful attention to fairness, energy use and user experience, you can build models that not only perform well but also earn trust. This is the heart of how to train an AI model: thoughtful choices, grounded experimentation and responsible deployment.

FAQ

1. Can I train my own model?

Yes. With modern frameworks like PyTorch and cloud services, you can train small models on your laptop or rent compute in the cloud. Focus on a narrow problem and use open‑source tooling.

2. How is an AI model trained?

First, you collect and prepare data. Then you choose a model architecture, train it using optimization algorithms to minimize a loss function, validate it on holdout data and tune hyperparameters. Finally, you test and deploy it.

3. How do you teach your model?

You “teach” it by feeding it examples. In supervised learning, each example has a label. The algorithm adjusts internal parameters to reduce errors between its predictions and the true labels.

4. What training methods teach a model?

Methods include supervised, unsupervised and semi‑supervised learning. For language models, pretraining on unlabeled corpora, fine‑tuning on task‑specific data and RLHF are common.

.avif)