How to Use AI for Concept Testing: Guide (2026)

Explore techniques for leveraging AI in concept testing, from surveys to sentiment analysis, to validate ideas quickly.

The biggest risk in product development isn’t technical feasibility; it’s releasing something people don’t want. Launching a new feature or product consumes time and cash, and too often teams build on untested assumptions. A statistic widely attributed to Harvard Business School professor Clayton Christensen holds that around 30 000 new products launch each year and 95% of them fail. The lesson is clear: before betting months of design and engineering on an idea, we need to know whether it appeals to the people we’re building for. That’s where concept testing, increasingly supported by AI, comes in.

As a founder and product person at Parallel, I’ve seen how artificial intelligence can shorten feedback cycles and add confidence to product decisions. Using AI for concept testing isn’t about replacing human intuition; it’s about augmenting our understanding with data-driven insights. In the startup context, where resources are scarce and iteration cycles are fast, automated analysis helps us pick the right ideas and refine them quickly. This article breaks down why concept testing matters, how AI speeds it up, and how to adopt these tools in your own process.

What is concept testing?

At its core, concept testing is the practice of presenting a proposed product or service idea to target users and measuring their reactions. It takes many forms—an illustrated storyboard, a clickable prototype, a simple description—but the goal is always to answer fundamental questions:

- Does the concept solve a real problem for the audience?

- Does it seem useful or desirable?

- Would people pay for it?

- Which aspects excite them and which parts fall flat?

Concept testing happens early, before large engineering investments. Looppanel’s 2024 piece explains that concept testing “evaluates an idea or concept with the target audience before the production stage.” By gathering feedback early, teams can identify weak points and pivot quickly instead of sinking resources into something that won’t stick.

Why it matters

Misaligned products are costly. When Ford ignored research and invested US$250 million in the Edsel in the 1950s, it ended up losing more than US$350 million. Today’s software teams face the same risk on a smaller scale. Fixing a design flaw after launch can cost up to 100× more than addressing it in the initial stages. Concept testing surfaces such issues early, saving time and money. Strategyn, a consultancy focused on outcome‑driven innovation, points out that concept testing should validate that a product idea helps customers get their job done better than alternatives. It’s not about adding trendy features; it’s about ensuring the concept aligns with actual needs.

Traditional methods and their limitations

Historically, concept testing relied on focus groups, in‑depth interviews, or surveys. These methods offer valuable qualitative insights but come with drawbacks:

- Small sample sizes – Recruiting a dozen participants gives limited confidence when you’re about to invest heavily.

- Slow turnaround – Scheduling interviews and transcribing responses can take weeks.

- Costs – Professional researchers, participant incentives and facilities add up.

- Bias – Group dynamics can skew feedback, and manual analysis is subject to researcher bias.

These methods still have a role, but they struggle to support the pace of modern product cycles. That gap has created room for AI‑assisted approaches to concept testing.
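To make the sample-size limitation concrete, here is a minimal Python sketch that estimates the margin of error on a measured proportion (for example, the share of participants who say they would buy). It assumes simple random sampling and the normal approximation, which is rough for very small groups; the function name and the sample sizes are illustrative, not taken from any tool mentioned in this article.

```python
import math

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion p measured on n respondents.

    Assumes simple random sampling and the normal approximation
    (a rough guide only when n is very small).
    """
    return z * math.sqrt(p * (1 - p) / n)

# Illustrative sample sizes: a focus group, a small survey, a larger panel.
for n in (12, 100, 400):
    print(f"n={n}: +/- {margin_of_error(n):.1%}")
```

Under these assumptions, a dozen participants leaves an uncertainty of roughly 28 percentage points on a 50% result, while 400 respondents brings it to about 5 points.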

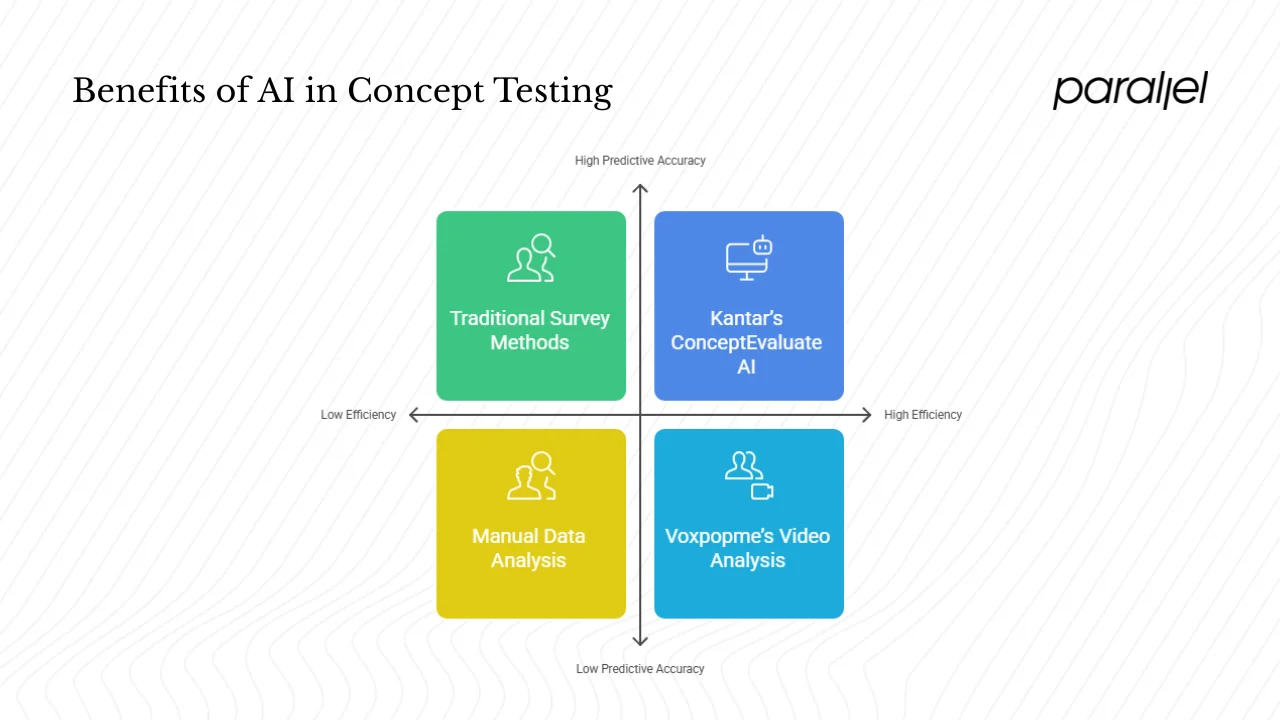

The shift toward artificial intelligence–enabled concept testing

Recent advances in machine learning have transformed how we can analyse user reactions. Artificial intelligence lets us collect data from more participants, process qualitative feedback at scale and even predict in‑market performance before full development. For example, Kantar’s ConceptEvaluate AI tool, launched in July 2024, is trained on nearly 40 000 real innovation tests and over 6 million consumer evaluations. The company reports that its model is close to 90 % accurate compared with traditional survey results in predicting which concepts will succeed. This tool can screen 10–100 concepts in as little as 24 hours, giving innovators a way to test many ideas quickly.

Voxpopme’s case study illustrates another benefit of automation: a global brewing company used video surveys to gather rich qualitative feedback on 150 concepts in just 12 hours. Participants recorded their reactions overnight, and artificial intelligence analysed the sentiment and themes, enabling the company to make informed decisions within a week. This combination of scale and speed is impossible with purely manual research.

Why use artificial intelligence for concept testing?

1) Automation tools

Modern platforms combine participant recruitment, stimulus presentation and automatic analysis. Instead of manually coding open‑ended responses, machine learning algorithms transcribe and categorise them. The result is faster analysis and lower costs. For example, Kantar’s automated service uses an artificial intelligence model to evaluate concepts without requiring a survey panel, making it suitable for sensitive topics. Voxpopme’s platform captures short video responses and uses machine learning to pull out themes and emotions.

2) Predictive analytics

One of the most exciting features of AI‑based concept testing is the ability to forecast real‑world performance. Kantar’s ConceptEvaluate AI claims to be nearly 90 % consistent with survey‑based results. The model uses outcomes from previous launches to predict purchase intent, uniqueness and relevance of new ideas. This predictive layer helps teams prioritise concepts with the highest potential before committing to prototypes and marketing campaigns.
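If you pilot a predictive tool alongside a traditional survey, you can check how often the two agree on your own concepts. The sketch below is one plausible way to read a "consistency" figure: the share of concepts where the model and the survey reach the same verdict. How any vendor actually computes its number is not described here, and the data is hypothetical.

```python
def agreement_rate(predicted: list[bool], observed: list[bool]) -> float:
    """Share of concepts where the model's verdict matches the survey's verdict."""
    if not predicted or len(predicted) != len(observed):
        raise ValueError("Both lists must be non-empty and the same length.")
    matches = sum(p == o for p, o in zip(predicted, observed))
    return matches / len(predicted)

# Hypothetical pilot: ten concepts, True means "flagged as a likely winner".
model_verdicts = [True, True, False, False, True, False, True, False, False, True]
survey_verdicts = [True, True, False, True, True, False, True, False, False, True]

rate = agreement_rate(model_verdicts, survey_verdicts)
print(f"Model and survey agree on {rate:.0%} of concepts")
```

A pilot this small only gives a rough signal, but it shows whether the tool's benchmarks behave sensibly for your category before you rely on them.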

3) User feedback analysis at scale

Qualitative data is messy. Traditional methods might generate hours of audio or pages of notes. Machine learning can process large volumes of text or video and extract sentiment, topics and emotions. Voxpopme’s tool processes video surveys to identify common themes and emotional responses. Looppanel explains that concept testing can be conducted through surveys, interviews or A/B tests, but with artificial intelligence those responses can be processed automatically, pointing out trends and outliers quickly.

4) Data‑driven decisions and faster iteration

When you can test many ideas quickly and at low cost, you’re more likely to iterate rather than commit prematurely. Kantar’s system allows up to 100 concepts to be screened simultaneously. That means you can try variations—different feature sets, pricing tiers or value propositions—and pick the most promising combinations. With each round of feedback, you refine the concept, narrow in on what matters and save resources for engineering features that people will actually value. For startups, this pace can make the difference between catching a market opportunity and missing it.

How to use artificial intelligence for concept testing: a step‑by‑step guide

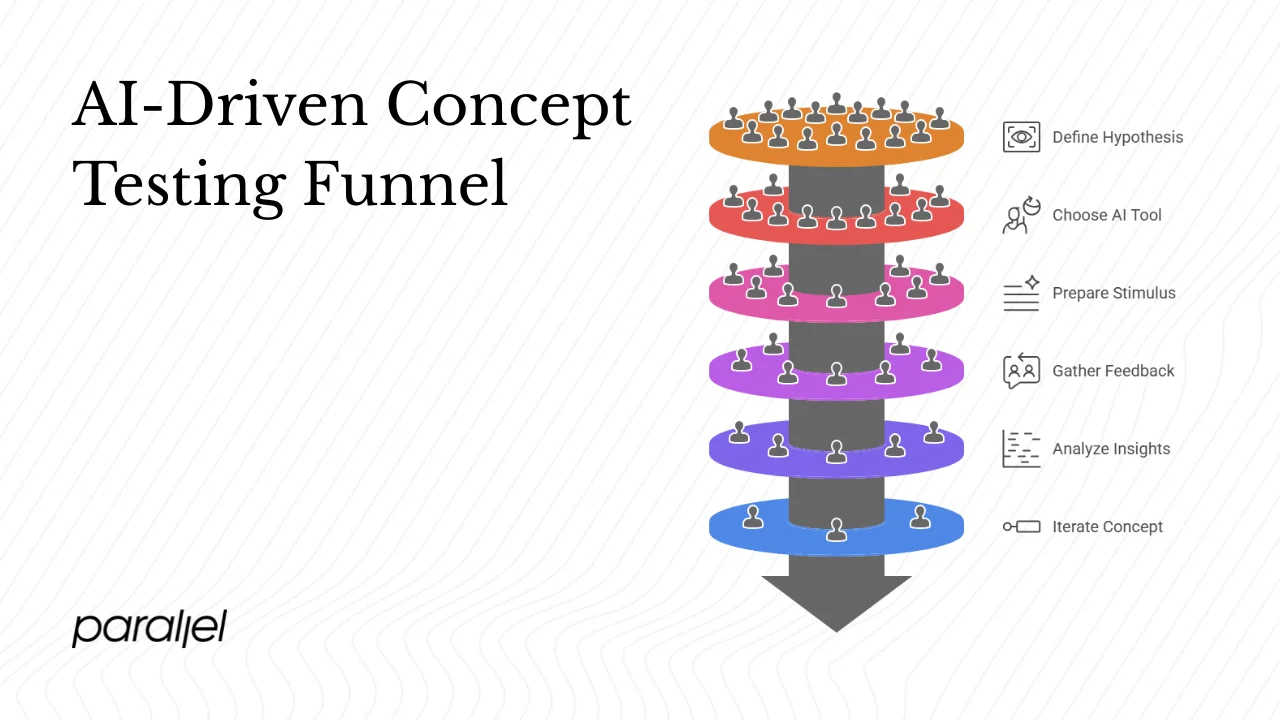

1. Define your product idea and hypothesis

Good concept testing starts with clarity. Write a brief statement describing the problem you’re solving, the target users and the core value proposition. Then articulate your assumptions. For example, you might believe that freelancers want a single dashboard to manage multiple clients, that they’d pay a monthly fee and that integration with accounting software is critical. These hypotheses become the focal points of your test.

Decide what you want to validate: messaging (“Does ‘All your projects in one place’ appeal to freelancers?”), pricing, packaging, feature set or even the market need itself. The more specific your hypotheses, the more actionable your feedback.

2. Choose an artificial intelligence–enabled concept testing tool

Picking the right platform depends on your goals:

- High‑volume screening: If you have dozens of ideas and need to narrow them down quickly, a predictive analytics tool like Kantar’s ConceptEvaluate AI can test 10–100 concepts at once and deliver results in a day. Pricing starts around US$5 500, which may be acceptable when you consider the cost of wrong decisions.

- Qualitative depth: When you want to hear your users speak about the concept, look for tools that capture video or open‑text responses and apply sentiment analysis. Voxpopme excels here: in the case study above, its platform let a global brewing company show 150 concepts to groups of 10 people and collect feedback overnight.

- DIY platforms: Smaller teams might start with platforms like Looppanel or Lyssna that support surveys and prototype tests with built‑in analysis. These aren’t predictive, but they help you surface patterns in user reactions.

Criteria to consider include sample size, analytics capabilities (e.g., sentiment or emotion detection), turnaround time, cost, and whether the participant pool matches your target market.

3. Prepare your stimulus or prototype

Your concept needs to be clear enough for participants to understand it without a lengthy explanation. Depending on your budget and the stage of your idea, the stimulus can be:

- A written description outlining the problem, the proposed solution and main features.

- Sketches or storyboards illustrating how a user interacts with the idea.

- Mock‑ups or interactive prototypes built with tools like Figma.

If you’re short on time, generative design tools can help produce multiple variations quickly. At Parallel, we often start with simple slide decks or clickable prototypes that show the core flows. According to Looppanel, concept testing can present ideas in “mock‑ups, sketches, written descriptions and interactive demos”. The critical point is clarity—participants should grasp the concept quickly so their feedback addresses your hypotheses.

4. Launch the test and gather feedback

Recruit participants who match your intended audience. Some platforms provide access to consumer panels; others require your own outreach. Show the concept and collect responses. Use rating scales to measure purchase intent, uniqueness and relevance, and ask open‑ended questions like “What stands out?” and “What confuses you?” to capture qualitative reactions.

Artificial intelligence can assist with data collection and analysis here. Video‑based surveys allow participants to share spontaneous reactions and body language, which algorithms then analyse for sentiment and themes. Voxpopme’s case shows that a global brewer completed an overnight study of 150 concepts by recruiting participants through the platform’s on‑demand community. For large portfolios of concepts, predictive screening tools like Kantar’s can handle high volumes without human moderators.
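Rating-scale answers are easy to summarise yourself before handing anything to a platform. A common survey convention is the "top-two-box" score: the share of respondents who pick one of the two highest points on the scale. The sketch below assumes a 1 to 5 purchase-intent scale; the concept names and responses are hypothetical.

```python
from collections import defaultdict

# Hypothetical responses: (concept, purchase intent on a 1-5 scale).
responses = [
    ("dashboard", 5), ("dashboard", 4), ("dashboard", 2), ("dashboard", 4),
    ("invoicing", 3), ("invoicing", 2), ("invoicing", 5), ("invoicing", 1),
]

def top_two_box(rows, scale_max=5):
    """Return, per concept, the share of ratings in the top two scale points."""
    counts = defaultdict(lambda: [0, 0])  # concept -> [top-two count, total count]
    for concept, rating in rows:
        counts[concept][1] += 1
        if rating >= scale_max - 1:
            counts[concept][0] += 1
    return {concept: top / total for concept, (top, total) in counts.items()}

scores = top_two_box(responses)
for concept, share in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{concept}: {share:.0%} rated 4 or 5")
```

The same function works for uniqueness or relevance ratings; only the input rows change.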

5. Analyse the data and interpret the insights

The value of AI in concept testing comes through in analysis. For quantitative questions, algorithms compute purchase intent scores, uniqueness and relevance. Kantar’s model uses a dataset of over 6 million consumer evaluations to predict outcomes. For qualitative data, natural language processing identifies themes, emotions and common issues. Voxpopme’s AI Insights tool processes video responses to surface sentiment and points out recurring topics.

In addition to the numbers, context matters. Look for patterns: which features drive excitement? Are participants confused about the value proposition? Does the concept feel too similar to existing solutions? Compare demographic segments to see if certain groups respond differently. Use the predictive component to prioritise concepts with the highest estimated potential. Then synthesise these insights into concrete changes—tweak messaging, adjust features, rethink pricing or refine your target audience.
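For the qualitative side of this step, a simple first pass is to tag each open-ended comment with the themes it touches and count them. The sketch below uses plain keyword matching as a crude stand-in for the language models commercial platforms apply; the theme names, keyword stems and comments are all hypothetical and would need tuning for a real study.

```python
from collections import Counter

# Hypothetical themes, each with keyword stems matched as lowercase substrings.
THEMES = {
    "pricing": ("pric", "expensive", "cost"),
    "confusion": ("confus", "unclear", "not sure"),
    "integration": ("integrat", "accounting", "sync"),
}

# Hypothetical open-ended responses to "What stands out? What confuses you?"
comments = [
    "The monthly plan feels expensive for a solo freelancer.",
    "I'm not sure what the dashboard actually replaces.",
    "Would need it to sync with my accounting software.",
    "Love the idea, but pricing is unclear.",
]

def tag_themes(comment: str) -> set[str]:
    """Return every theme whose keywords appear in the comment."""
    text = comment.lower()
    return {theme for theme, stems in THEMES.items() if any(s in text for s in stems)}

theme_counts = Counter(theme for c in comments for theme in tag_themes(c))
for theme, count in theme_counts.most_common():
    print(f"{theme}: mentioned in {count} of {len(comments)} comments")
```

Keyword matching misses sarcasm, synonyms and context, so treat the counts as a prompt for reading the underlying comments rather than as a finding.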

6. Iterate and refine

Concept testing is rarely a one‑off activity. Use the insights to revise your concept, then test again. Because predictive tools deliver results quickly, you can repeat the cycle several times without delaying your roadmap. For example, you might start with five variations of a feature, narrow down to two based on feedback, adjust the messaging, and run a second test within days. This lean approach is particularly valuable for startups; it prevents over‑investing in unproven ideas and helps you adapt to user feedback before moving into detailed development.

7. Move to prototyping and full user testing

Once a concept receives positive signals, build a more detailed prototype—an MVP or a high‑fidelity mock‑up. This is where usability testing complements concept testing. Whereas concept testing asks “Should we build this?”, usability testing asks “Can people use it effectively?” Looppanel emphasises that usability testing focuses on how easily users interact with the product. Combining both methods ensures you’re building the right thing and building it well. At this stage, tools such as heat‑map analytics and behavioural tracking can give further insights, and artificial intelligence continues to help by highlighting patterns in user behaviour.

Best practices for startups and product managers

- Recruit the right participants. Data is only as useful as the people you gather it from. Make sure your sample matches your target market, both demographically and psychographically.

- Balance automation with human judgment. Artificial intelligence accelerates analysis, but humans still need to interpret context and subtle details. Use automated tools to surface patterns, then rely on your team’s experience to decide what actions to take.

- Consider ethics and privacy. When collecting user data—in particular video or open‑text responses—you must respect participants’ privacy. Choose vendors with strong privacy policies and get consent for recording and analysis.

- Manage expectations. Predictive models provide probabilities, not certainties. Kantar’s tool is about 90 % consistent with survey results, but that still leaves room for surprises. Use artificial intelligence as one input among several, not as an oracle.

- Don’t skip real user interactions. Automated analysis is powerful, but for complex interactions and edge cases, human observation is irreplaceable. Reddit discussions in UX and game development communities often remind us that AI handles basics well but struggles with nuanced flows; simple prototypes with real users surface issues algorithms may miss.

- Plan your budget. While artificial intelligence reduces testing costs overall, quality prototypes and participant incentives still require spending. Consider what stage warrants which investment.

- Integrate concept testing into your product workflow. Make concept testing a standard step in your discovery process. Document insights and share them with design, engineering and marketing teams to bring the roadmap together and reduce misalignment.

Use cases: real‑world examples

- Voxpopme case: A global brewing company used Voxpopme’s video survey platform to test 150 concepts across small groups overnight. The platform delivered transcripts, sentiment analysis and thematic clusters by morning, enabling the innovation team to incorporate customer reactions into their annual planning workshop within the same week.

- Kantar’s ConceptEvaluate AI: Launched in July 2024, this tool draws on a proprietary dataset of 40 000 innovation tests and more than 6 million consumer evaluations. It predicts outcomes with about 90 % accuracy compared to traditional surveys and can screen 10–100 concepts in 24 hours. This makes it ideal for early‑stage screening and large concept portfolios.

- Reddit UX and game development discussions: Practitioners often share that generative tools help them quickly draft mock‑ups or prototypes. A contributor in r/UXDesign noted that rapid prototyping with generative tools makes prototypes feel more realistic and improves client feedback. A game developer in r/gamedev wrote that using a tool like ChatGPT to simulate player conversations helps refine early game concepts but emphasised that testing with actual players is still crucial. These anecdotes echo the broader insight that artificial intelligence accelerates ideation but doesn’t replace human validation.

Challenges and limitations

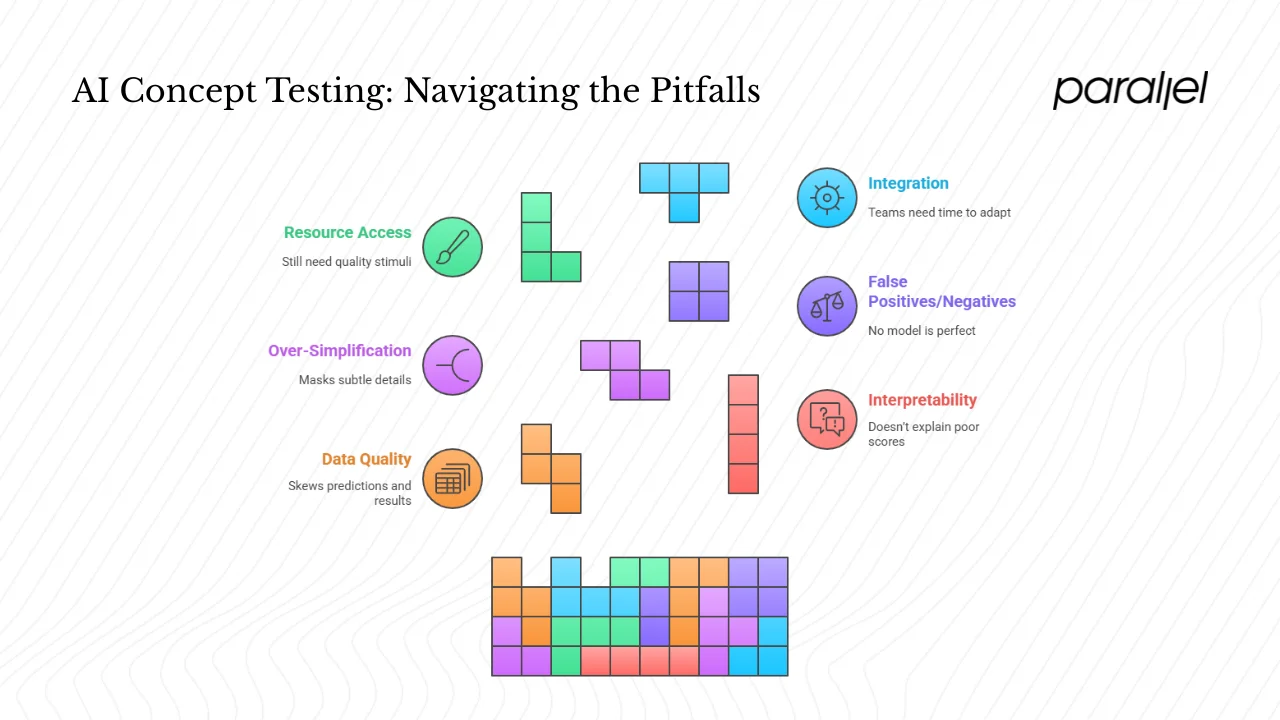

While using AI for concept testing offers many advantages, it isn’t a panacea.

1) Data quality and bias

Artificial intelligence models are only as good as the data they’re trained on. If the training dataset skews towards certain demographics or industries, predictions may not generalise to your market. Early‑stage startups with niche audiences must be careful to validate that the model’s benchmarks apply to their context.

2) Interpretability

Predictive scores and sentiment ratings are helpful, but they don’t explain why a concept scores poorly. It’s important to dig into the underlying comments or video clips to understand the reasons behind a negative sentiment. Pair quantitative results with qualitative analysis and team discussions.

3) Over‑simplification

Quantitative scores can mask subtle details. A concept might score moderately overall but excite a specific niche segment. Without careful segmentation and human judgement, you might discard an idea that could succeed within a smaller market.
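A small numeric sketch shows how an average can hide a niche. The segments and ratings below are hypothetical; the point is only that a middling overall score can sit on top of one lukewarm group and one enthusiastic one.

```python
from statistics import mean

# Hypothetical 1-5 appeal ratings, grouped by respondent segment.
ratings = {
    "agencies": [2, 3, 2, 3, 2, 3],
    "solo freelancers": [5, 4, 5],
}

overall = mean(r for segment in ratings.values() for r in segment)
by_segment = {segment: mean(values) for segment, values in ratings.items()}

print(f"overall: {overall:.2f}")
for segment, score in by_segment.items():
    print(f"{segment}: {score:.2f} (n={len(values) if (values := ratings[segment]) else 0})")
```

With only a handful of respondents per segment the gap could be noise, so a split like this is a reason to run a follow-up test with that segment, not a verdict on its own.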

4) False positives and negatives

No model is perfect. A concept predicted to succeed may flop due to factors outside the test (e.g., competitor moves, marketing execution). Conversely, a concept predicted to perform poorly might appeal if communicated differently or targeted to a different audience. Use predictive results to guide but not dictate your roadmap.

5) Resource access

Artificial intelligence helps reduce analysis costs, but it doesn’t remove the need for quality stimuli and participant incentives. You still need designers to craft mock‑ups and a plan to recruit participants who reflect your users.

6) Integration into existing workflows

Product teams accustomed to traditional research methods may need time to adapt to data‑driven processes. Ensure everyone understands how to interpret artificial intelligence outputs and incorporate them into decision‑making.

Future trends and what’s next

Looking ahead, the combination of concept testing and artificial intelligence will continue to advance. We can expect:

- More sophisticated models: Larger datasets and improved algorithms will enhance predictive accuracy and reduce false positives. Tools may incorporate behavioral data from prototypes to predict long‑term engagement.

- Integration with prototype analytics: Imagine a single platform that runs a concept test, generates a high‑fidelity prototype and tracks user interactions, all analysed by machine learning. This would blur the line between concept testing and usability testing.

- Continuous validation: Rather than one‑off tests, tools will support ongoing concept evaluation, allowing teams to receive feedback as they iterate. This matches continuous discovery practices.

- Accessibility for startups: As tools mature and competition grows, costs should drop, making predictive concept testing accessible to early‑stage teams.

- Feedback loops with live products: Combining pre‑launch concept testing data with post‑launch analytics will create powerful feedback loops, improving future ideation and reducing risk.

Conclusion

In product development, ideas are plentiful but validation is invaluable. Concept testing helps founders and product managers avoid costly missteps by ensuring their ideas match user needs and market realities. AI amplifies this practice by providing speed, scale and predictive power. Platforms like Kantar’s ConceptEvaluate AI and Voxpopme show that we can test dozens of concepts in hours rather than weeks. But we must pair these tools with human judgement, careful sampling and ethical data practices.

At Parallel, we’ve witnessed how fast feedback loops enable better decisions and sharper product‑market fit. By adopting AI for concept testing thoughtfully, you can reduce risk, strengthen your innovation pipeline and, most importantly, build products that people genuinely value.

FAQ

1) How can artificial intelligence be used in testing?

Artificial intelligence supports many types of product testing. In concept testing, tools like Kantar’s ConceptEvaluate AI predict in‑market performance and identify which ideas appeal most. In prototype testing, algorithms track user behaviour—click paths, dwell time, errors—and point out usability issues. For user‑feedback analysis, natural language processing can summarise themes and emotions from open‑ended responses or video surveys. In market research, predictive models synthesise demographic, behavioural and sentiment data to guide decisions. The benefit is faster, more scalable analysis compared to manual coding.

2) Can artificial intelligence be used for quality assurance testing?

Yes—but this is a different application. In software quality assurance, machine learning tools generate test scripts, simulate user interactions and detect anomalies. For example, natural language processing can interpret user stories and automatically create test cases. While this improves efficiency, concept testing focuses on validating the desirability and viability of an idea, not debugging code. Startups might use both: predictive concept tests to select ideas, then automated QA tools to ensure the product works as intended.

3) What is the best artificial intelligence tool for concept testing?

There’s no one‑size‑fits‑all answer; the right choice depends on your objectives. For early‑stage screening of many ideas, predictive tools like Kantar’s ConceptEvaluate AI offer speed and statistical confidence. For rich qualitative feedback, consider platforms like Voxpopme that use video surveys and sentiment analysis. Evaluate each option based on sample access, analytics depth, turnaround time and cost. Match the tool to your concept testing goals: are you comparing feature sets, refining messaging or testing pricing? Piloting a test with one concept can reveal whether a platform fits your context.

4) How to use artificial intelligence for test questions?

Generative models can assist with survey and interview design by suggesting clear, unbiased questions and identifying potential phrasing issues. Start by defining what you want to learn—e.g., initial reactions, perceived benefits, willingness to pay—and then ask the model to draft questions. After collecting responses, use natural language processing to group open‑ended answers into themes and quantify sentiment. Keep in mind the human touch: test your questions with a colleague or a small user group to ensure clarity and relevance.
