Prompt Engineering for Designers: Mastering AI Workflows

What is prompt engineering for designers? Discover the best 2026 techniques for using AI in UI/UX design, including real-world examples and expert prompt tips.

Generative models can already brainstorm concepts, sketch layouts and draft micro‑copy. For founders and product leads, the challenge is turning those raw outputs into useful design. That’s where prompt engineering for designers comes in: crafting and optimising instructions so models deliver results we can use. Google calls this the practice of asking the right question, and DataCamp notes that it involves designing and refining inputs to elicit specific responses. Mastering this skill helps us automate tasks, develop concepts faster and improve our work. In this guide, I share what we’ve learned at Parallel and how you can adopt similar practices.

What is prompt engineering (and why designers should care)?

At its core, prompt engineering is about intentional communication. The Harvard University information technology group notes that the information, sentences, or questions you enter into a generative artificial intelligence tool influence the quality of outputs. DataCamp’s explanation frames it as designing and refining prompts—questions or instructions—to elicit specific responses from artificial intelligence models. Parloa, a platform specialising in conversational interfaces, adds that prompt engineering guides not just what a model says but how it reasons, formats and prioritises information. For design teams, these definitions mean more than semantics. Prompting is an interface design task: how do we structure inputs so the system understands what we need?

Large models respond differently to vague questions and detailed briefs. A simple instruction like “make me a landing page” often results in generic layouts. When we instead define the audience, purpose and visual intent, the results become more usable. Designers have always been responsible for flows and communication. Prompt engineering for designers extends that responsibility into how we interact with artificial intelligence. It’s not a replacement for craft; it’s a companion to it. Jakob Nielsen calls prompt engineering a new wave of intent‑based outcome specification—we no longer tell the machine how to build something step by step; we describe the outcome we want. A design team from a job board compares prompt engineering to managing a colleague: we give detailed instructions to ensure high‑quality results.

From a product perspective, this skill opens up practical benefits:

- Efficiency: we automate repetitive tasks such as drafting micro‑copy or summarising user interviews.

- Concept development: using generative tools to create multiple design directions in minutes rather than days.

- Competitive edge: early‑stage startups that master prompting can consider more ideas and iterate faster.

- Collaboration: better prompts improve communication between design and product because outputs match intent.

McKinsey’s 2025 survey shows that over three‑quarters of organisations use artificial intelligence in at least one function, and 21% have redesigned workflows to accommodate it. As adoption grows, designers who can guide these systems will be critical.

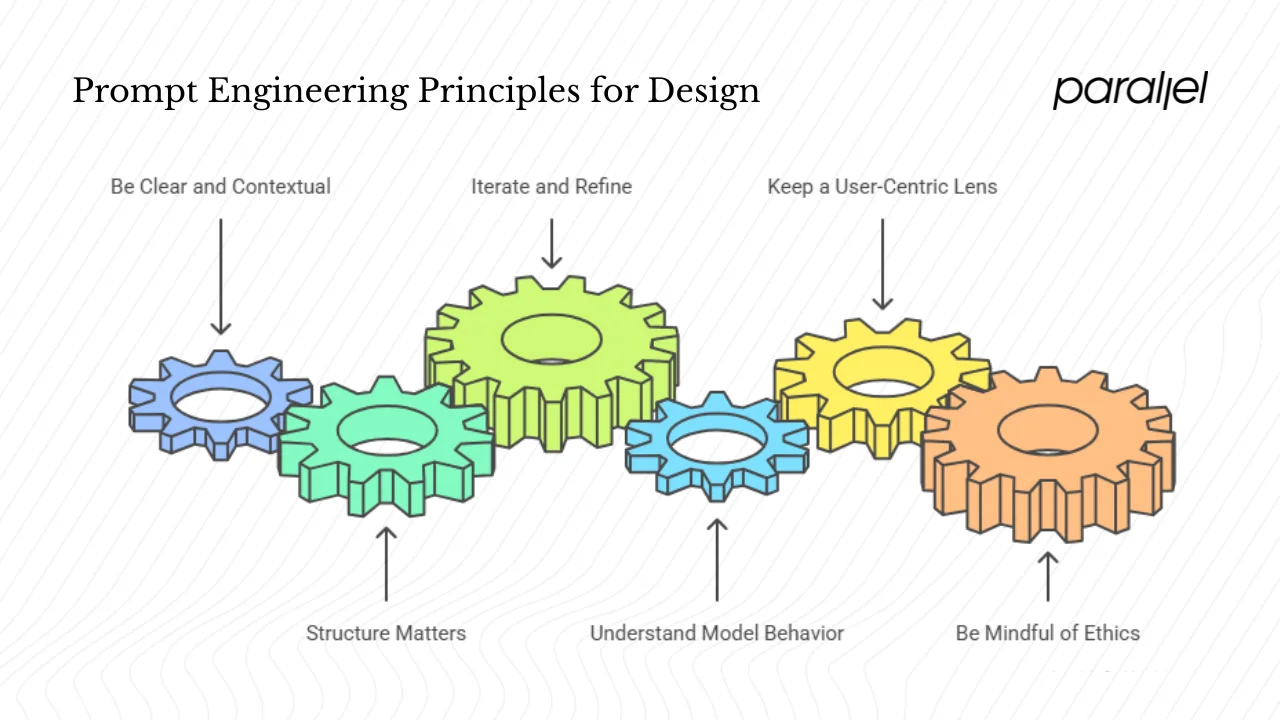

Core principles for prompt engineering in design

For anyone practising prompt engineering for designers, the following principles apply.

1) Be clear and contextual

Generative models aren’t mind readers. Harvard’s guideline reminds us that more descriptive prompts improve the quality of outputs. Likewise, a design article from the job board demonstrates how a vague error‑message prompt yields a robotic response, while a clear, context‑rich prompt produces more human‑like copy. When we write prompts for visual tasks, include the user, task, medium and tone. For example, instead of “design a dashboard,” try “create a dashboard wireframe for a fintech app aimed at freelance designers, prioritising income tracking and expense categorisation.”

2) Structure matters

Google advocates structuring prompts by defining role, context and instruction. That might sound technical, but it’s simply how we brief collaborators. We often start by assigning a role—“You are a senior UX strategist.” Then we provide context—“The product helps small businesses manage invoices.” Finally, we state the instruction—“Outline a user flow for sending an invoice.” Parloa points out that well‑engineered prompts reduce ambiguity, guide reasoning and improve consistency.
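Here is a minimal Python sketch of the role, context and instruction structure. The function name `build_prompt` and the “Context:” and “Task:” labels are our own illustrative choices, not a format Google prescribes. The point is that each part has a fixed slot.

```python
def build_prompt(role: str, context: str, instruction: str) -> str:
    """Assemble a prompt in role -> context -> instruction order."""
    parts = [
        f"You are {role}.",
        f"Context: {context}",
        f"Task: {instruction}",
    ]
    # Blank lines between sections keep each part visually distinct for the model.
    return "\n\n".join(parts)


prompt = build_prompt(
    role="a senior UX strategist",
    context="The product helps small businesses manage invoices.",
    instruction="Outline a user flow for sending an invoice.",
)
print(prompt)
```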

3) Iterate and refine

Prompt crafting is iterative. Google’s guidance encourages breaking complex tasks into smaller steps and refining prompts based on feedback. In practice, we often start with a broad task, review the output and adjust one variable at a time. My team uses spreadsheets to track prompt versions, noting what changed and how the output evolved. This experimental mindset echoes our design process—sketch, test, iterate.
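If your team reviews prompt versions in a spreadsheet, a small script can keep that log consistent. The sketch below assumes a hypothetical `PromptVersion` record with one `changed` field per version, which nudges the team toward changing one variable at a time. It writes the log as CSV, which any spreadsheet tool can open.

```python
import csv
import io
from dataclasses import dataclass, asdict


@dataclass
class PromptVersion:
    version: int
    prompt: str
    changed: str  # the single variable adjusted in this version (hypothetical field)
    notes: str    # how the output changed, in the reviewer's words


def to_csv(versions: list[PromptVersion]) -> str:
    """Serialise a prompt log as CSV so it can be opened in a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["version", "prompt", "changed", "notes"])
    writer.writeheader()
    for v in versions:
        writer.writerow(asdict(v))
    return buffer.getvalue()


log = [
    PromptVersion(1, "Design a dashboard.", "baseline", "Generic layout"),
    PromptVersion(2, "Design a dashboard for freelance designers.", "audience", "More relevant widgets"),
]
print(to_csv(log))
```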

4) Understand model behaviour

Designers don’t need to become machine‑learning engineers, but a basic grasp of how models work helps. A Developer Nation article explains that prompt engineering benefits from knowing model architecture, tokenisation and sampling settings. These factors affect cost, speed and randomness. For instance, lowering the temperature yields more deterministic results, while raising it invites more creative but riskier suggestions. Parloa’s article on frameworks lists guardrails such as limiting temperature, enforcing banned terms and defining output formats. These guardrails reduce the risk of hallucinations and help with compliance, although they cannot eliminate either problem.
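Temperature changes randomness through a simple mechanism, shown here in its standard textbook form. The model produces raw scores (logits) for each candidate next token, these scores are divided by the temperature, and a softmax turns them into probabilities. The logits below are made up, and the sketch illustrates the idea rather than reproducing any provider's actual sampler.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw model scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a temperature of 0.2, almost all the probability lands on the top candidate. At 2.0, the three options are much closer together, which is why higher settings produce more varied and less predictable copy.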

5) Keep a user‑centric lens

Prompt engineering should match user needs and ethics. The Arsturn blog emphasises that the words used in prompts have a big influence on output quality. It also argues that user‑centric design principles (empathy, inclusivity and iteration) should guide prompt development. Many people struggle to articulate detailed specifications, so our prompts should use plain language and avoid jargon. Consider accessibility: if we’re designing a chatbot for older users, we should instruct the model to propose larger font sizes and high‑contrast layouts, and to write in a friendly tone. And always test prompts with real users to catch biases or confusing phrasing.

6) Be mindful of ethics

As generative models become integral to design, we must recognise potential harms. McKinsey reports that only 27% of organisations using generative artificial intelligence review all generated content before it goes live. That gap matters because models sometimes hallucinate or produce biased outputs. Include constraints in prompts such as “avoid stereotypes” or “cite reliable sources,” and always review outputs manually before incorporating them into products.
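Constraints and review can be partly scripted. This sketch appends a constraint block to any prompt and flags outputs that contain terms a team has banned. The constraint wording and the `BANNED_TERMS` list are hypothetical placeholders. A keyword check is only a coarse first filter: an empty result does not mean the output is safe, so manual review still applies.

```python
import re

# Hypothetical house constraints; adapt the wording to your own guidelines.
ETHICS_CONSTRAINTS = [
    "Avoid stereotypes about age, gender, ethnicity or ability.",
    "If you state a fact, name a reliable source for it.",
]

# Hypothetical terms a brand or legal team has asked never to publish.
BANNED_TERMS = ["guaranteed", "risk-free"]


def add_constraints(prompt: str) -> str:
    """Append the constraint list to a prompt as a bulleted block."""
    rules = "\n".join(f"- {rule}" for rule in ETHICS_CONSTRAINTS)
    return f"{prompt}\n\nConstraints:\n{rules}"


def flag_for_review(output: str) -> list[str]:
    """Return banned terms found in the output (case-insensitive, whole words only)."""
    return [
        term for term in BANNED_TERMS
        if re.search(rf"\b{re.escape(term)}\b", output, flags=re.IGNORECASE)
    ]


print(add_constraints("Write onboarding copy for a savings app."))
print(flag_for_review("Our plan is Guaranteed to save you time."))
```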

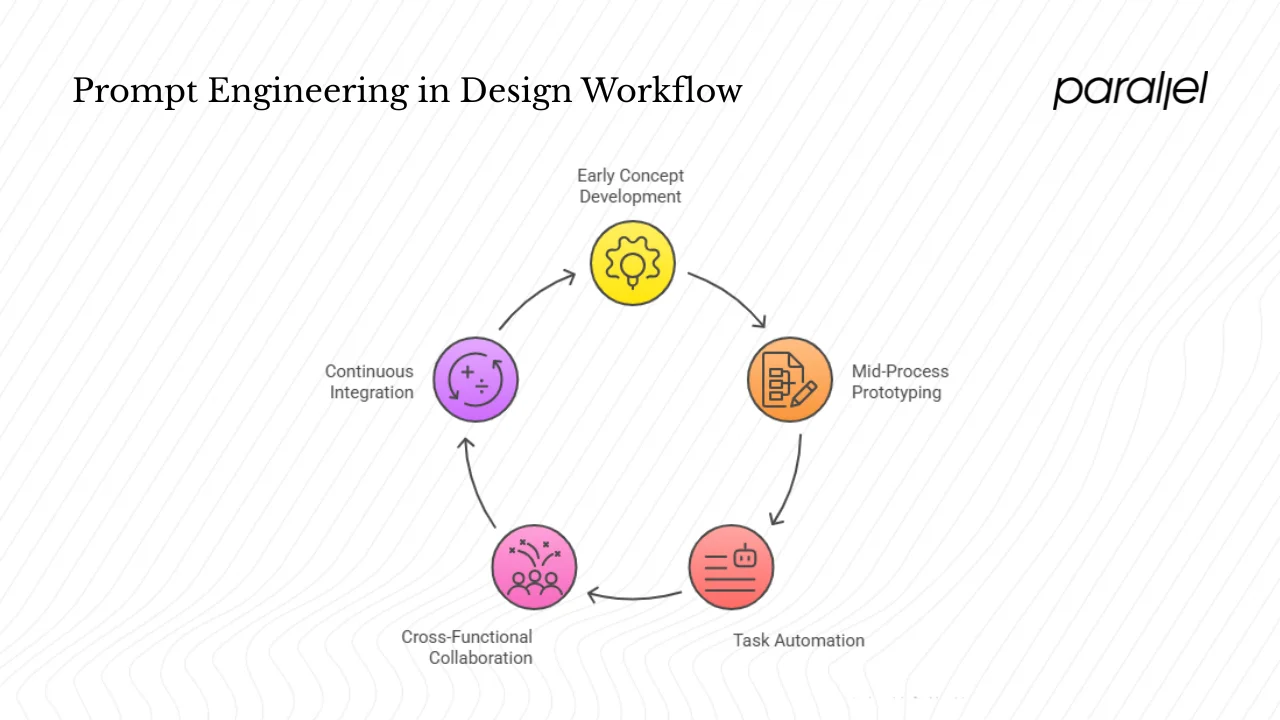

Where prompt engineering fits into the design workflow

Understanding where prompt engineering for designers fits into the design and product workflow helps teams integrate the practice effectively.

1) Early concept development

During ideation, generative tools can broaden our horizons. We use prompts like “generate five distinct layout ideas for a budgeting app targeting recent graduates” or “suggest three alternative onboarding flows for an education platform.” These prompts produce lists of options we might not have considered on our own. Classic Informatics notes that generative artificial intelligence adoption jumped from 32% to 65% of organisations in a single year, reflecting how quickly teams are adopting such tools for content creation and design. By crafting good prompts, we turn these tools into brainstorming partners rather than random suggestion machines.

2) Mid‑process prototyping

Prompting can accelerate wireframes, user flows and even mood boards. Tools like Figma, Photoshop and Sketch are integrating generative features. My colleagues often ask ChatGPT: “Describe the complete user flow for ordering coffee in our app, including decision points and error states.” Another prompt might be: “Generate a mood board description for a minimalist, playful SaaS dashboard; include colour palette suggestions and typography recommendations.” We then convert these descriptions into visual prototypes.

3) Task automation and documentation

Once a design concept is validated, we move into execution. Here, prompts save time by automating micro‑copy, summarising user research, documenting design systems and generating release notes. We might prompt: “Here is our design system description. Summarise it in three reusable component descriptions.” or “Summarise these interview notes, highlighting main pain points for small business users.” Automating these tasks frees designers to focus on problem solving.

4) Collaboration across functions

Well‑structured prompts serve as shared artefacts between design, product and engineering. They capture assumptions and constraints. For example, a product manager can write: “You are a QA specialist. Based on this user flow, list test cases covering success and error states.” The resulting test cases help the QA team prepare. Prompt frameworks such as COSTAR—Context, Objective, Style, Tone, Audience and Response—provide a common vocabulary for cross‑functional teams. By embedding prompt engineering for designers into our workflow, we create a bridge that improves communication and clarity.
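One way to turn COSTAR into a shared artefact is a simple data structure with one field per letter. This sketch rests on our own assumptions: the class name `CostarPrompt` and the upper‑case section labels are illustrative, not part of any official COSTAR specification.

```python
from dataclasses import dataclass


@dataclass
class CostarPrompt:
    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str  # the expected output format

    def render(self) -> str:
        """Render the six COSTAR fields as labelled sections, in order."""
        sections = [
            ("Context", self.context),
            ("Objective", self.objective),
            ("Style", self.style),
            ("Tone", self.tone),
            ("Audience", self.audience),
            ("Response", self.response),
        ]
        return "\n\n".join(f"{label.upper()}:\n{value}" for label, value in sections)


qa = CostarPrompt(
    context="Checkout flow for a coffee-ordering app.",
    objective="List test cases covering success and error states.",
    style="Concise QA checklist",
    tone="Neutral",
    audience="The QA team",
    response="A numbered list of test cases",
)
print(qa.render())
```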

5) Continuous integration

We don’t just prompt once and forget it. As we learn more about our users or adjust the product, we revise prompts. At Parallel, we consider prompts like any other asset: they live in version control, with comments about what changed and why. When we update our brand voice, we adjust prompts to reflect that voice. This maintenance ensures that prompts develop alongside the product.

Practical techniques and patterns

Zero‑shot vs few‑shot

Zero‑shot prompts provide no examples; they simply state the instruction. They’re useful for straightforward tasks: “List five human‑computer interaction conferences in Asia in 2025.” Few‑shot prompts include examples to guide the model. For a tone‑setting activity, we might supply one positive and one negative error message and then ask the model to generate three more. Google’s guide lists direct, one‑shot and few‑shot prompting techniques, while chain‑of‑thought prompts encourage the model to reason step by step. For complex design problems—such as mapping an onboarding flow or comparing multiple personas—chain‑of‑thought prompts yield more structured output.
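The difference between zero‑shot and few‑shot prompting is easy to see when the prompt is assembled programmatically. The hypothetical helper below places labelled examples between the instruction and the new request. Calling it with an empty example list produces a zero‑shot prompt.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], request: str) -> str:
    """Instruction, then labelled examples (if any), then the new request."""
    sections = [instruction]
    if examples:
        sections.append("\n".join(f"{label}: {text}" for label, text in examples))
    sections.append(request)
    return "\n\n".join(sections)


prompt = few_shot_prompt(
    instruction="Write error messages for a budgeting app in the style of the GOOD example.",
    examples=[
        ("GOOD", "We couldn't save your budget. Check your connection and try again."),
        ("BAD", "Error 500: request failed."),
    ],
    request="Now write three GOOD messages for a failed bank sync.",
)
print(prompt)
```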

Role and scenario prompts

Assigning a role anchors the model’s perspective. For design tasks, we often begin with “You are a senior UX strategist” or “You are a brand historian.” Scenario prompts add context: “Imagine you are a content designer at a health‑tech startup; write three micro‑copy examples for a failed payment.” Role‑based instructions help the model adopt the right tone and vocabulary. Harvard’s guide encourages the “act as” pattern, where we ask the model to behave like a specific persona.

Step‑by‑step decomposition

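Many design challenges involve multiple stages: understanding the problem, generating ideas, refining them. Chain‑of‑thought prompts ask the model to reason through a problem step by step. A closely related technique, prompt chaining, breaks the task into a sequence of smaller prompts that each build on the previous answer. For example: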

- “List three features for a habit‑tracking app targeting parents.”

- “For each feature, describe the user’s motivation and potential friction.”

- “Design a user flow that addresses the friction in feature two.”

By sequencing prompts, we encourage the model to think through the problem rather than jumping to conclusions.
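The sequenced prompts above can be run as a simple chain in which each step sees the previous step’s output. In this sketch, `Model` is any function that takes a prompt and returns text. `echo_model` is a hypothetical stand‑in that lets the example run without network access; in practice you would replace it with a call to your team’s model client.

```python
from typing import Callable

# A "model" here is any function that takes a prompt string and returns text.
Model = Callable[[str], str]


def run_chain(model: Model, steps: list[str]) -> list[str]:
    """Run prompts in order, prefixing each step with the previous step's output."""
    outputs: list[str] = []
    previous = ""
    for step in steps:
        prompt = step if not previous else f"Previous result:\n{previous}\n\n{step}"
        previous = model(prompt)
        outputs.append(previous)
    return outputs


def echo_model(prompt: str) -> str:
    """Stand-in for a real model: returns the last line of the prompt."""
    return prompt.splitlines()[-1]


steps = [
    "List three features for a habit-tracking app targeting parents.",
    "For each feature, describe the user's motivation and potential friction.",
    "Design a user flow that addresses the friction in feature two.",
]
results = run_chain(echo_model, steps)
print(results)
```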

Templates for common tasks

Having reusable prompt templates reduces time spent reinventing instructions. Here are a few we use regularly:

- Concept generation: “Generate five distinct interface concepts for [task] in [product], targeting [user persona]. For each concept, describe the core interaction and one unique visual element.”

- User flow mapping: “Describe the complete user flow for [task] in [product], including decision points, error states and recovery paths. Present the flow as a numbered list.”

- Visual style: “Describe a mood board for a [adjective] [product type], suggesting colours, typography and imagery. The mood should match [brand attributes].”

- Component suggestions: “Here is our design system: [insert description]. Summarise it into three reusable component categories with names and brief descriptions.”

Templates should include variables for context, tone and output format. Parloa’s framework emphasises defining context, objective, style, tone, audience and response. Adopting a similar structure in templates improves consistency.
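Placeholders such as [task] can be filled programmatically, so nobody ships a prompt with a variable left blank. This sketch uses Python’s standard `string.Template`, which writes placeholders as `$name` rather than in brackets. Its `substitute` method raises a `KeyError` when a value is missing. The variable names are our own.

```python
from string import Template

# The user-flow template from the list above, with $name placeholders instead of [brackets].
USER_FLOW = Template(
    "Describe the complete user flow for $task in $product, including decision "
    "points, error states and recovery paths. Present the flow as $output_format."
)


def fill(template: Template, **values: str) -> str:
    """Fill every placeholder; raises KeyError if any variable is missing."""
    return template.substitute(**values)


prompt = fill(
    USER_FLOW,
    task="ordering coffee",
    product="our mobile app",
    output_format="a numbered list",
)
print(prompt)
```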

Prompt refinement tips

- Track variations: keep a table of prompts and outputs to see what changes produce better results.

- Change one variable at a time: adjust context, tone or format separately to understand each effect.

- Use feedback loops: ask the model to critique its output—“Rate your response on clarity and brevity”—and revise accordingly.

- Limit randomness: adjust sampling parameters or instruct the model to stick to factual information when necessary.
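The “change one variable at a time” tip can be automated by generating variants that each differ from a baseline in exactly one field. The `PromptConfig` fields and wording below are hypothetical. The technique is simply `dataclasses.replace` applied to one field per variant.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PromptConfig:
    audience: str
    tone: str
    output_format: str

    def render(self) -> str:
        return (
            f"Write onboarding micro-copy for {self.audience}. "
            f"Use a {self.tone} tone. Format: {self.output_format}."
        )


def single_change_variants(
    base: PromptConfig, options: dict[str, list[str]]
) -> list[tuple[str, PromptConfig]]:
    """Create variants that each differ from the base in exactly one field."""
    variants = []
    for field_name, values in options.items():
        for value in values:
            if value != getattr(base, field_name):  # skip values identical to the baseline
                variants.append((field_name, replace(base, **{field_name: value})))
    return variants


base = PromptConfig("new freelancers", "friendly", "three bullet points")
variants = single_change_variants(
    base, {"tone": ["friendly", "formal", "playful"], "output_format": ["one sentence"]}
)
for changed, config in variants:
    print(changed, "->", config.render())
```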

Collaboration prompts

Prompts can facilitate collaboration across disciplines. For example:

- “Act as a project manager. Summarise the following meeting notes into action items and assign owners.”

- “You are an engineering lead. Based on the current design, outline potential technical challenges and suggest mitigations.”

- “Act as a marketing copywriter. Rewrite this headline for a social post aimed at early adopters.”

These prompts shift the model’s focus according to stakeholder roles, making its output more relevant.

Tools and technologies designers should know

Generative platforms

The big players—GPT‑4, Claude, Gemini and others—constitute the backbone of prompting. Each has its quirks. Large models like GPT‑4o have improved contextual understanding and can handle more complex prompts. Multimodal models accept text and images, enabling tasks like generating interface ideas from sketches.

Text‑to‑image and visual models

Tools such as Midjourney, DALL‑E and Adobe Firefly translate textual prompts into imagery. They’re useful for mood boards or conceptual illustrations. Prompt augmentation features, such as style galleries or prompt rewrite functions, help users refine inputs. Jakob Nielsen identifies six patterns—Style Galleries, Prompt Rewrite, Targeted Prompt Rewrite, Related Prompts, Prompt Builders and Parametrization—that lower the articulation barrier for users.

Prompt management tools

As prompts become assets, teams need ways to organise and version them. Tools like PromptLayer, PromptHub and internal databases allow you to store templates, track performance and collaborate across teams. Parloa’s article highlights that frameworks provide naming conventions, shared vocabulary and evaluation standards. Integrating prompts into design tools like Figma or Sketch via plugins can streamline workflows.

Metrics and evaluation

How do you know if a prompt works? Assess outputs on relevance, accuracy, creativity and tone. In some cases, we run small user tests—asking colleagues if the generated copy fits the brand or if the layout concept meets user goals. Parloa stresses defining what “good output” means and aligning evaluation across teams.
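Reviewer ratings are easier to compare across prompt versions when everyone scores the same criteria. This sketch averages hypothetical 1 to 5 scores for the four criteria named above. The scale and the equal weighting in the overall score are our own assumptions, not a standard.

```python
from statistics import mean

CRITERIA = ("relevance", "accuracy", "creativity", "tone")


def score_output(ratings: list[dict[str, int]]) -> dict[str, float]:
    """Average reviewer ratings per criterion, plus an equally weighted overall mean."""
    for r in ratings:
        missing = set(CRITERIA) - set(r)
        if missing:
            raise ValueError(f"rating is missing {sorted(missing)}")
    summary = {c: mean(r[c] for r in ratings) for c in CRITERIA}
    summary["overall"] = mean(summary[c] for c in CRITERIA)
    return summary


ratings = [
    {"relevance": 4, "accuracy": 5, "creativity": 3, "tone": 4},
    {"relevance": 5, "accuracy": 4, "creativity": 3, "tone": 5},
]
print(score_output(ratings))
```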

Cost and scalability

Generative models have token limits and associated costs. Developer Nation warns that understanding model architecture, parameters and sampling settings matters because they influence output quality and cost. For startups, selecting a model with favourable pricing and adequate context length can save money while still enabling creative exploration. Keep an eye on token usage when iterating prompts; sometimes a shorter, clearer prompt yields better results and costs less.
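A rough estimate before each run helps keep iteration costs visible. The sketch below uses the common rule of thumb of about four characters per token for English text. Real counts depend on each model’s tokenizer, and the per‑million‑token prices are placeholders to replace with your provider’s current rates.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Approximate token count from character length (a heuristic, not a tokenizer)."""
    return round(len(text) / chars_per_token)


def estimate_cost(prompt: str, expected_output_tokens: int,
                  input_price_per_million: float, output_price_per_million: float) -> float:
    """Estimated cost of one call, given placeholder per-million-token prices."""
    input_tokens = estimate_tokens(prompt)
    return (input_tokens * input_price_per_million
            + expected_output_tokens * output_price_per_million) / 1_000_000


short = "Write three failed-payment messages: formal, casual, humorous."
padded = short + " " + "Please be thorough and detailed in every respect. " * 10
for name, p in (("short", short), ("padded", padded)):
    print(name, estimate_tokens(p), f"{estimate_cost(p, 150, 2.50, 10.00):.6f}")
```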

Real‑world applications

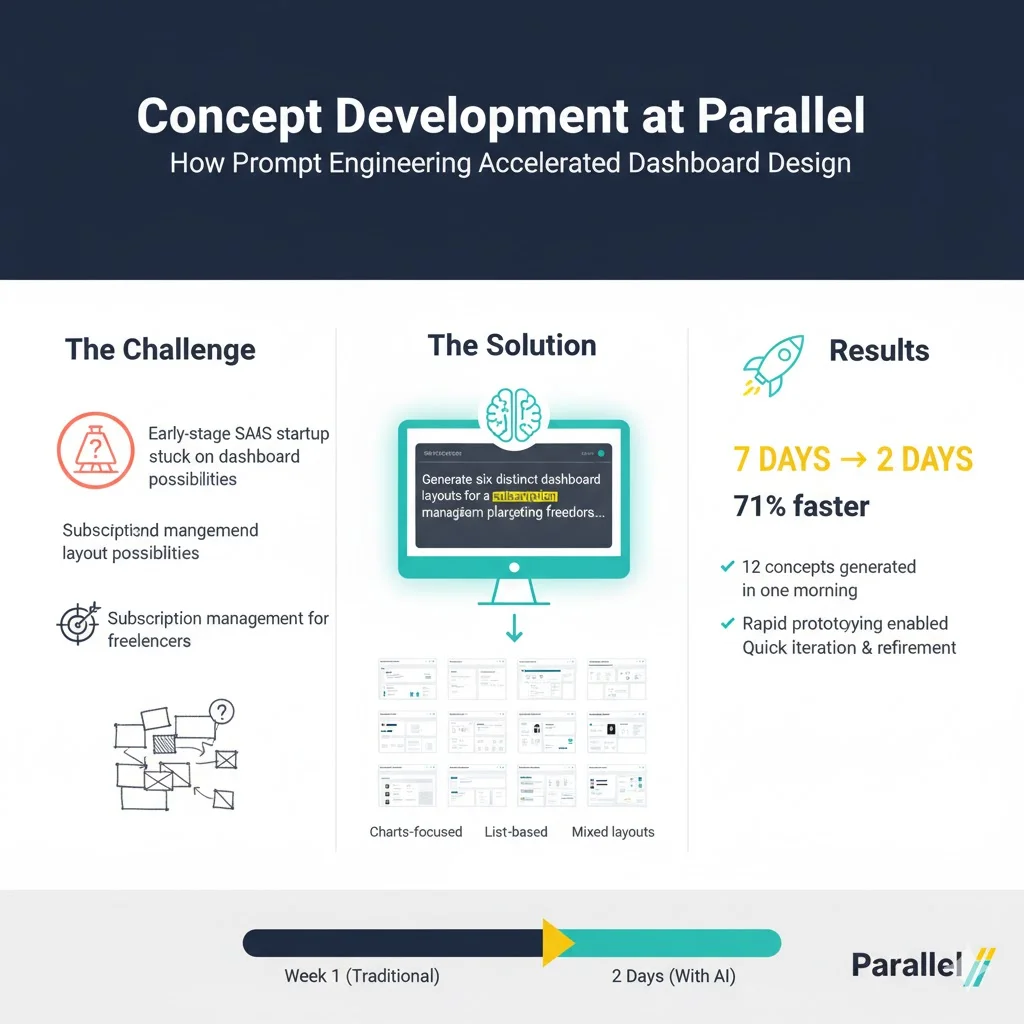

Concept development at Parallel

At Parallel, we recently helped an early‑stage SaaS startup develop their dashboard product. The team felt stuck on layout possibilities. Using structured prompts, we generated a dozen layout concepts within a morning. One of the prompts was: “Generate six distinct dashboard layouts for a subscription management platform targeting freelancers. Each should prioritise revenue metrics, upcoming invoices and quick actions.” The model returned a variety of structures: some emphasised charts, others lists. We quickly prototyped the most promising ones, then iterated with our own refinements. This approach shortened the concept phase from a week to two days.

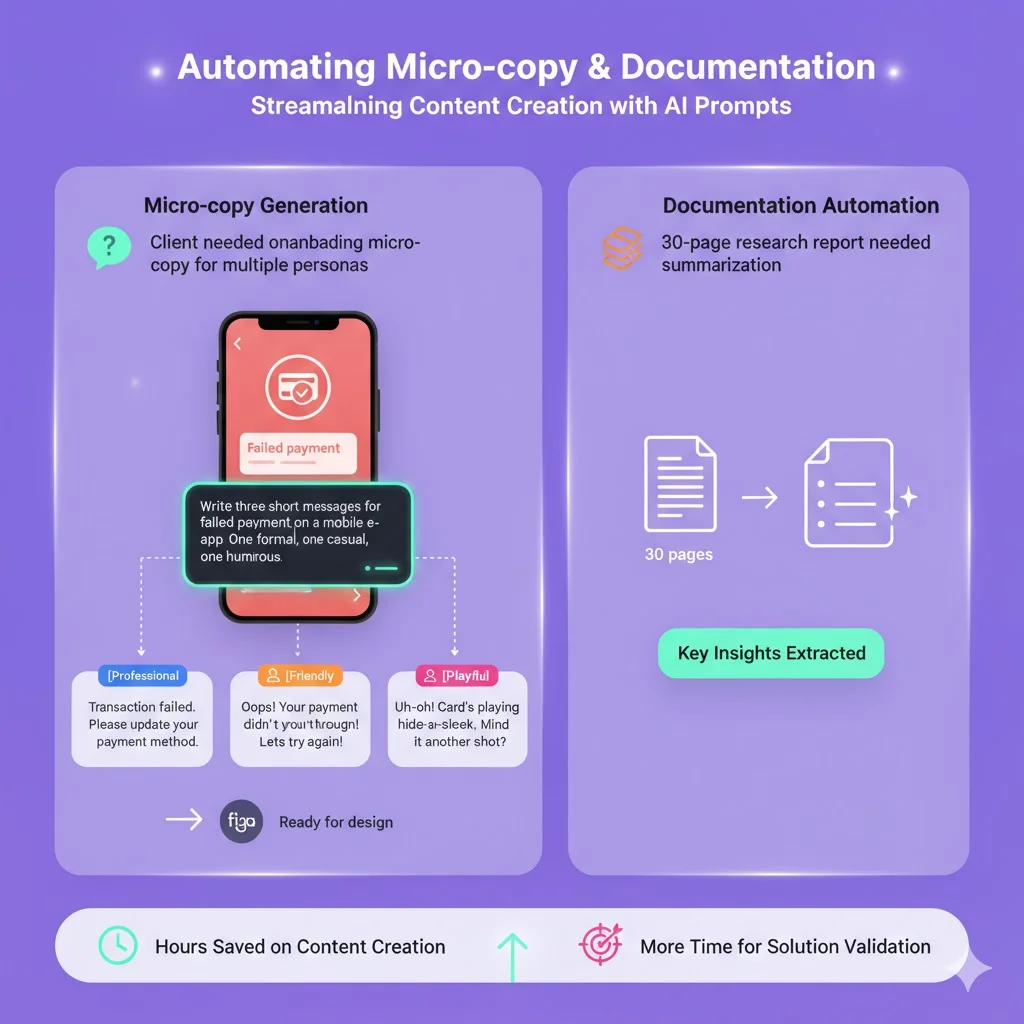

Automating micro‑copy and documentation

Another client needed onboarding micro‑copy for different user personas. We wrote prompts specifying tone (professional, friendly or playful), user type, and action. For example: “Write three short messages for a failed payment on a mobile e‑commerce app. One should sound formal, one casual and one humorous.” The outputs served as first drafts. After a designer’s edit, the micro‑copy went straight into Figma. We also used prompts to summarise a 30‑page research report into bullet‑point insights. The time saved on documentation allowed the team to spend more energy validating solutions.

Collaborative prototyping

In a workshop, we had designers, product managers and engineers jointly prompt a model to design a new feature. Starting with a broad description, each team member added constraints—security requirements from the engineer, brand guidelines from the designer, and user goals from the PM. The final prompt read like a thorough design brief. The resulting output wasn’t perfect, but it gave us a shared starting point and revealed where our assumptions differed. This workshop improved collaboration and highlighted the value of clear, structured prompts.

Lessons learned

Prompt engineering is not magic. We’ve had sessions where prompts were too vague or lacked constraints, leading to off‑topic outputs. We’ve seen the model hallucinate features or suggest patterns that violate accessibility guidelines. The crucial point is to treat prompts as prototypes: test, refine, and never take outputs at face value. Also, don’t let models replace your judgement. Use them to augment your thinking, not to outsource it.

Challenges, limitations and best practices

Common pitfalls

- Vague instructions: generic prompts yield generic results. Always specify audience, purpose and format.

- Over‑reliance on artificial intelligence: models can’t replace user research or design judgement. Use them as partners, not decision‑makers.

- Cost and latency: long prompts, verbose outputs and requests for many alternative responses increase token usage and response time. Be concise.

- Model limitations: large models sometimes hallucinate or misinterpret context. Always review outputs.

- Bias and ethics: prompts may inadvertently encode biases. Include constraints that discourage stereotypes and harmful content.

Limits specific to design

Generative systems lack an intrinsic sense of brand. They may propose colours or typography that conflict with guidelines. Micro‑interactions and motion details often need human crafting. Visual outputs from text‑to‑image models can be inconsistent or require significant post‑processing. Tools may not handle complex user flows or localisation nuances. Recognising these limitations will prevent frustration.

Best practices

- Collaborate across functions: involve engineers and product leads when crafting prompts; cross‑disciplinary perspectives improve clarity.

- Keep humans in the loop: always review and refine artificial intelligence outputs before implementation.

- Document and version prompts: track changes and outcomes so you can reproduce and improve them.

- Assess design quality: evaluate artificial intelligence outputs against design principles—hierarchy, contrast, accessibility—and not just factual correctness.

- Stay current: tools develop quickly. Follow updates from platforms and adjust your prompts accordingly.

- Be mindful of ethics: avoid prompts that could produce discriminatory content; include guidelines for fairness and accessibility.

Looking ahead, models are getting better at handling vague prompts, and more tools are shipping built‑in prompt templates, which may reduce the need for manual engineering. However, human context and design intuition will remain crucial.

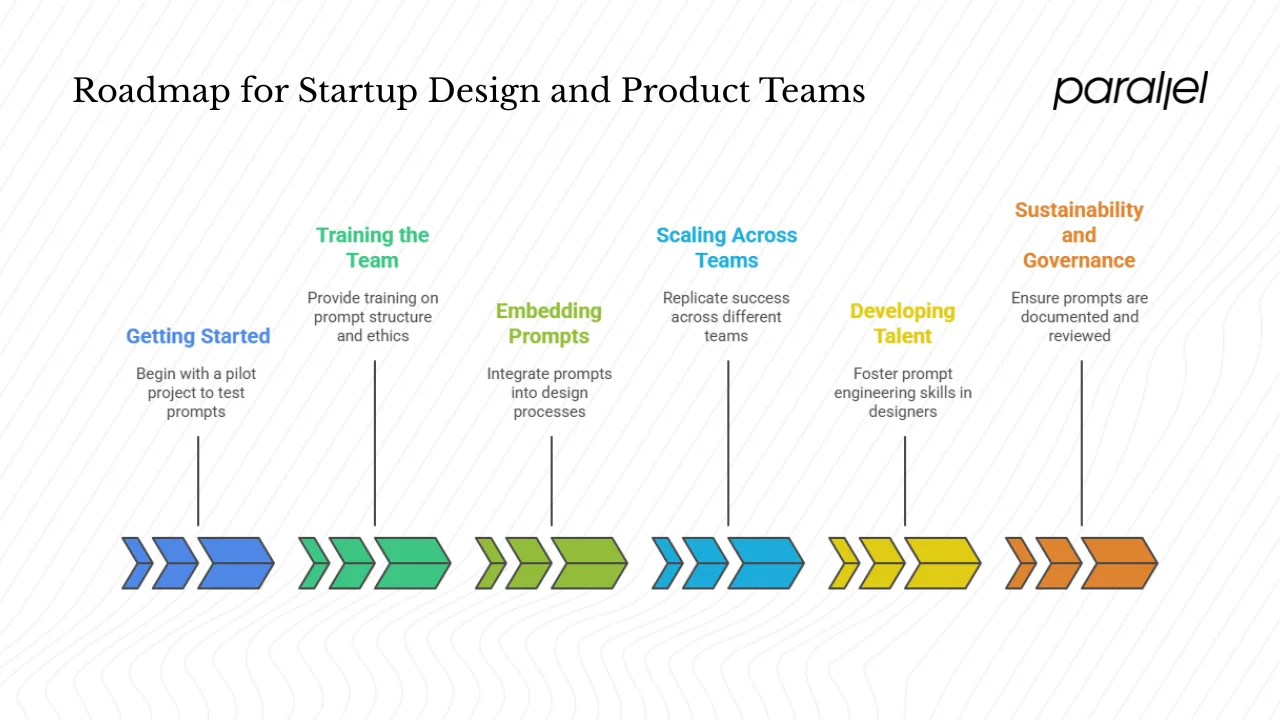

A roadmap for startup design and product teams

1) Getting started

If you’re a founder or product manager, begin with a pilot. Choose one workflow—perhaps generating micro‑copy or producing concept sketches—and work with your design team to craft prompts. Invest a few hours experimenting. Track the time saved and quality of outputs. Classic Informatics reports that generative artificial intelligence adoption rose from 32% to 65% of organisations in a single year; even small wins can put you ahead.

2) Training the team

Provide basic training on model behaviour, prompt structure and ethical considerations. Encourage designers to practise writing and iterating prompts. Run mini‑hackathons where teams compete to produce the best output from the same brief. Share learnings in regular retrospectives.

3) Embedding prompts into processes

Define who writes and owns prompts. Create a library of templates for tasks such as user flows, concept generation and research summaries. Store them in a version‑controlled repository accessible to the whole team. Use frameworks like COSTAR to structure prompts and agree on context, objective, style, tone, audience and response. Make prompt reviews part of design critiques.

4) Scaling across teams

As your organisation grows, replicate success across products. Encourage cross‑team sharing of prompt techniques. Create guidelines for integrating brand voice into prompts. Establish governance to oversee ethics and quality. McKinsey’s survey highlights that CEO oversight of artificial intelligence governance is correlated with higher impact—assign clear responsibility for prompt usage and review.

5) Developing talent

Designers who master prompt engineering combine creative problem solving with technical curiosity. Pair prompt writing with design thinking. Ask “Who is the user? What is the need? How might a model help?” Practise writing prompts that articulate these aspects. Stay curious about new models, frameworks and visual tools.

6) Sustainability and governance

When prompts become part of production, consider them like code: document, review, and ensure they match policy. Monitor usage to avoid runaway costs. Plan for versioning and rollback if a prompt change causes unintended outputs. Create channels for feedback so designers can report issues or share improvements.
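Teams normally handle versioning and rollback with the same tools they use for code, such as a Git repository. For illustration, the in‑memory registry below shows the minimal behaviour a team would want: publish a new version with a reason, read the current one, and roll back. The class and method names are hypothetical, and unlike Git, this toy discards the rolled‑back version.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """In-memory store of prompt versions per name, with rollback (illustrative only)."""
    history: dict[str, list[tuple[str, str]]] = field(default_factory=dict)

    def publish(self, name: str, text: str, reason: str) -> int:
        """Add a new version with the reason for the change; return its 1-based number."""
        versions = self.history.setdefault(name, [])
        versions.append((text, reason))
        return len(versions)

    def current(self, name: str) -> str:
        """Return the text of the version currently in use."""
        return self.history[name][-1][0]

    def rollback(self, name: str) -> str:
        """Drop the latest version and return the one now in use."""
        versions = self.history[name]
        if len(versions) < 2:
            raise ValueError(f"no earlier version of {name!r} to roll back to")
        versions.pop()
        return versions[-1][0]


registry = PromptRegistry()
registry.publish("error-copy", "Write friendly error messages.", "initial version")
registry.publish("error-copy", "Write playful error messages.", "new brand voice")
print(registry.rollback("error-copy"))
```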

Conclusion

Generative models are rewriting how we design. For startups and product teams, prompt engineering for designers isn’t a passing fad; it’s a skill that blends communication, systems thinking and creativity. By learning to craft clear, structured, contextual instructions, we invite machines into our practice in a way that enhances, rather than replaces, human judgement. The statistics are clear: artificial intelligence adoption is soaring, and the organisations that rework their workflows around artificial intelligence see tangible benefits. The only real risk is standing still while others learn to converse with machines. My invitation is simple: start with one prompt today. Iterate, share, and let it inform your process. You’ll be surprised at how quickly it becomes second nature.

FAQ

1) What does a prompt designer do?

A prompt designer crafts and optimises the text or other input given to models so the model produces the desired output. In a design context, this might mean creating prompts that generate wireframe suggestions, micro‑copy, layout ideas, user flows or even visual assets. It’s akin to writing a concise design brief for an intelligent collaborator.

2) Are prompt engineers still in demand?

Yes. As organisations embed generative tools into workflows, people who can communicate with models, structure interactions and integrate outputs into business processes are increasingly valuable. A design team from a job board notes that prompt engineering is well‑suited to creative professionals, and adoption rates are climbing.

3) What is a prompt engineer’s salary?

Salaries vary widely based on region, company stage and responsibilities. Some roles focus solely on prompt writing; others combine design, research and technical tasks. In my experience advising startups, compensation can range from mid‑level designer salaries to senior product roles. For current figures in your region, check job‑market sites.

4) What is replacing prompt engineering?

There is no full replacement yet. Models are improving at understanding vague instructions, and more tools include built‑in templates, which may reduce manual tuning. Agentic workflows and fine‑tuning may handle more tasks automatically. However, for design and product teams, the human element remains essential. We still need to understand user context, design thinking and communication to guide these tools responsibly.
