Thematic AI: Complete Guide (2026)

Discover Thematic AI and how it uses natural language processing to analyze feedback and extract themes for better decision‑making.

Trying to make sense of hundreds of interview transcripts or support tickets can feel like drinking from a firehose. Early‑stage teams often drown in text while looking for the signal that will drive their next product iteration. Over the past few years, smart technologies have appeared to help people sift through these narratives.

In this guide, I’ll talk about thematic AI, a set of tools and methods that use machine learning and language models to group and summarise qualitative data. If you lead a startup or run product or design, you’ll learn what it is, how it differs from traditional thematic analysis, how it works under the hood, and how to use it without losing the human touch. I’ll also share real examples from teams we’ve worked with at Parallel.

What is thematic AI?

Thematic AI is the use of machine learning models—particularly large language models (LLMs)—to automatically identify, group, and summarize themes in qualitative text data.

Traditional thematic analysis involves manually reading and coding responses from interviews, surveys, or feedback. Thematic AI automates the early stages of this process. It analyzes unstructured text like support tickets or transcripts, finds patterns, and clusters similar ideas together, producing an initial draft of themes for human analysts to review.

Unlike older topic modeling tools that rely on word frequency, thematic AI uses contextual embeddings. That means it understands that “the app crashed” and “it broke on me” belong to the same theme. Tools like NVivo, ATLAS.ti, Thematic, and Looppanel now integrate these capabilities.

This method doesn’t replace researchers. Instead, it speeds up repetitive coding and lets people focus on interpreting insights.

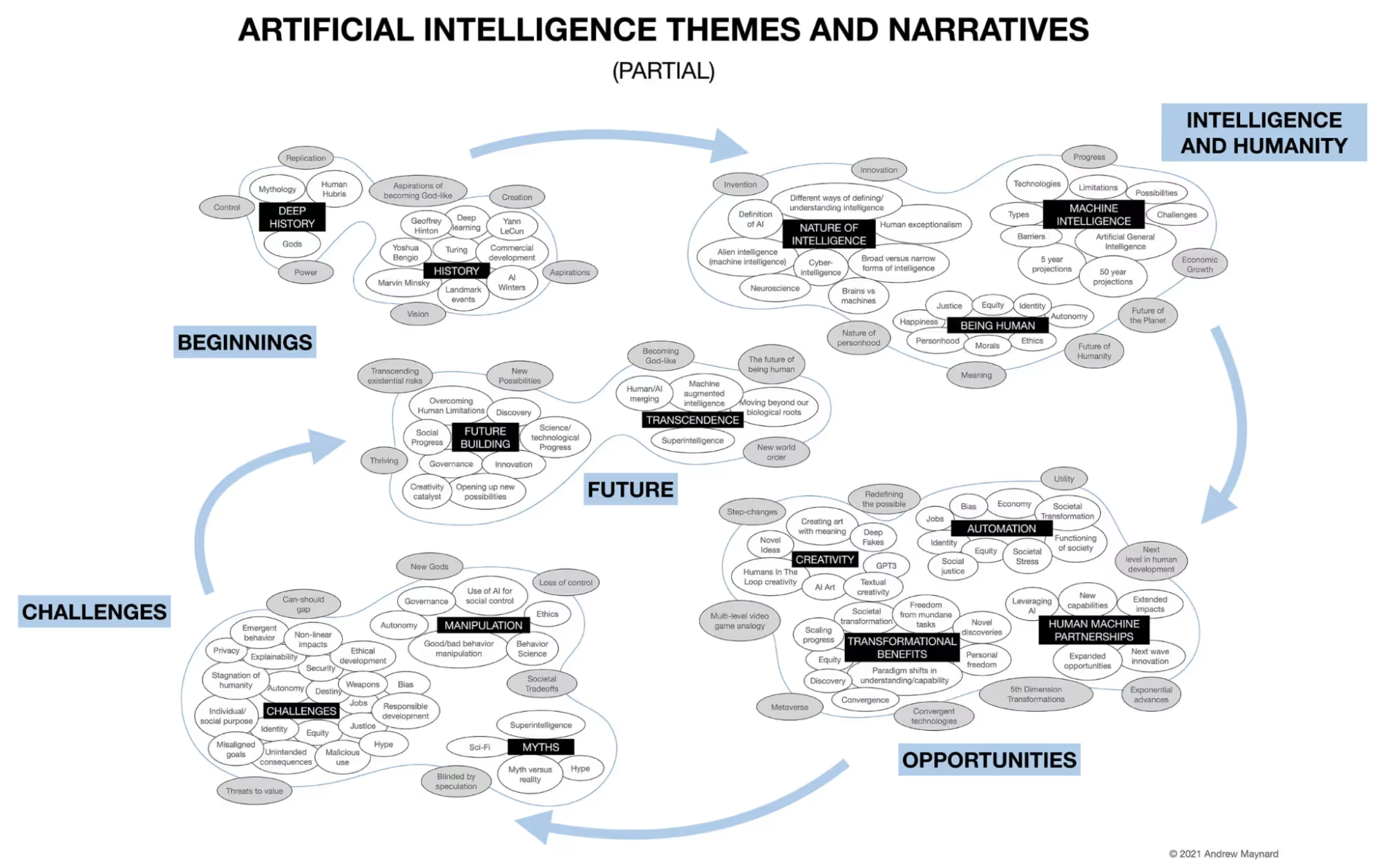

Modern machine‑assisted analysis systems rely on large language models and contextual embeddings. These models look at words in relation to each other and capture meaning rather than simple frequency, which is what lets them place differently worded comments in the same theme. The “AI” part refers to the underlying machine learning; in this guide I’ll also call it smart algorithms or machine‑assisted analysis. At its core, this approach combines language models with pattern recognition to generate a first draft of themes that a human analyst then refines.
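To see why word overlap alone falls short, here is a minimal sketch that scores two phrases by the share of words they have in common (Jaccard similarity). The function names are my own for illustration and are not taken from any of the tools mentioned. The two "crash" phrases share no words at all, while an unrelated positive comment scores higher simply because it repeats "the app".

```python
import re

def word_set(text: str) -> set:
    """Lowercase a phrase and return the set of words it contains."""
    return set(re.findall(r"[a-z']+", text.lower()))

def jaccard(a: str, b: str) -> float:
    """Share of distinct words two phrases have in common (0.0 to 1.0)."""
    words_a, words_b = word_set(a), word_set(b)
    if not words_a and not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)

# Same complaint, completely different wording: no shared words.
overlap_paraphrase = jaccard("the app crashed", "it broke on me")

# Different sentiment, but two shared words ("the", "app").
overlap_unrelated = jaccard("the app crashed", "the app is great")

print(overlap_paraphrase, overlap_unrelated)
```

A purely word-based measure ranks the wrong pair as more similar here, which is the gap that contextual embeddings are meant to close.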

Important terms and building blocks

Before diving deeper, it’s helpful to unpack a few concepts.

- Machine learning: Algorithms that learn patterns from data rather than following explicit rules. In this context, the model learns from text and finds commonalities across responses. Language models such as GPT‑3.5 or Claude 3.5 can process thousands of tokens quickly; the cost of running these models has dropped dramatically since 2022.

- Natural language processing (NLP) and text mining: Techniques that let computers work with human language. NLP includes tasks such as tokenization (splitting text into words or sub‑word units), part‑of‑speech tagging and entity recognition. These steps turn raw text into structured input for clustering.

- Clustering and topic modeling: Unsupervised methods like Latent Dirichlet Allocation (LDA) or neural topic models group similar text segments without predefined labels. They help detect hidden topics across thousands of lines of feedback.

- Unsupervised learning: A type of machine learning where models discover patterns from data without labelled examples. Many smart analysis tools start with unsupervised clustering before human reviewers tweak the results.

- Semantic understanding: Instead of looking at word counts, modern models use word and sentence embeddings to represent meaning in a high‑dimensional space. This helps them group synonyms and paraphrases into themes.

- Pattern recognition: The ability to spot recurring phrases or ideas across data. Traditional thematic analysis relies on the analyst’s cognitive ability; algorithms can speed up this pattern‑matching step.

- Data analysis in the modern stack: This approach sits alongside analytics tools, survey platforms and customer feedback systems. Integrating with Slack or Notion makes it easier to pull qualitative insights into product roadmaps.

How Thematic AI Works

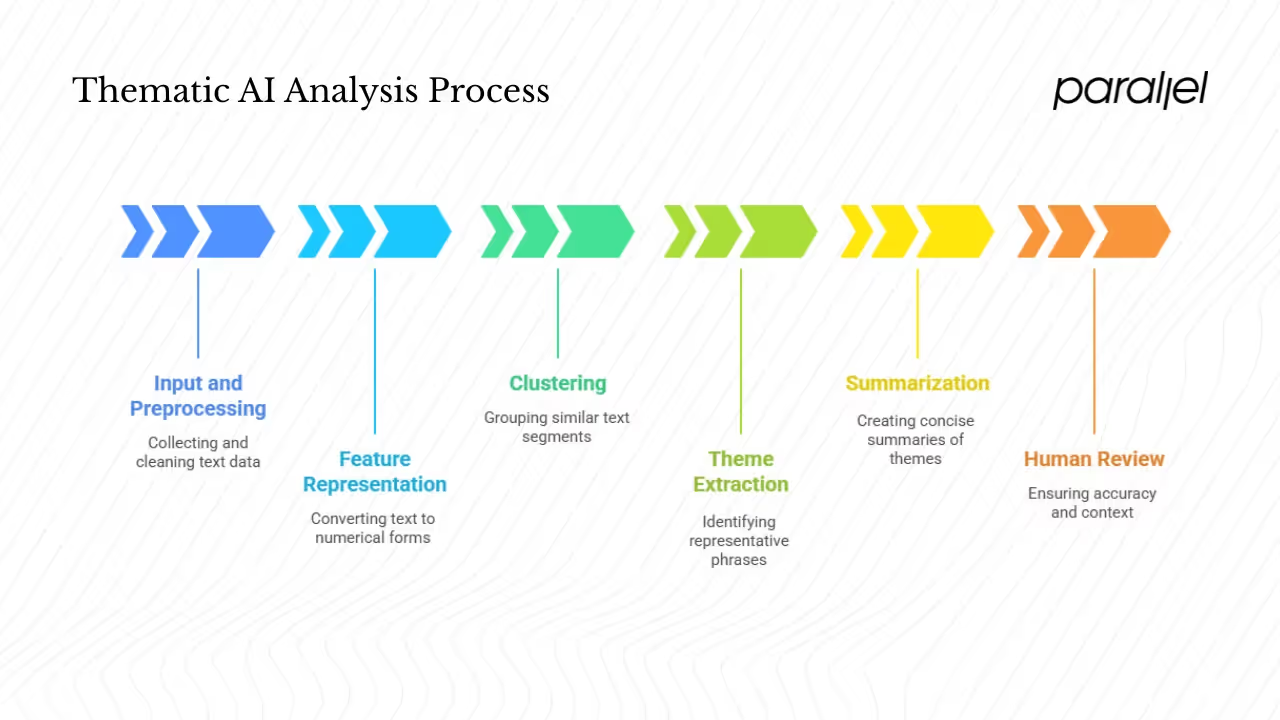

Thematic AI follows a similar process to manual thematic analysis but automates the repetitive parts. Here's a simplified breakdown:

1. Collect and Clean the Text

You start with qualitative data—interview transcripts, open-ended survey responses, support tickets, chat logs, etc. The system removes noise like greetings or filler words and standardizes the text for analysis.
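A minimal sketch of this cleaning step, using only the Python standard library. The greeting and filler patterns are hypothetical examples; a real pipeline would tune them to its own data and would likely do more (for instance, stripping email signatures).

```python
import re

# Hypothetical noise patterns, chosen for illustration only.
GREETING = re.compile(r"^(hi|hello|hey)[,!.\s]+", re.IGNORECASE)
FILLER = re.compile(r"\b(um+|uh+|you know)\b,?\s*", re.IGNORECASE)

def clean(text: str) -> str:
    """Strip a leading greeting and filler words, collapse whitespace, lowercase."""
    text = GREETING.sub("", text.strip())
    text = FILLER.sub("", text)
    text = re.sub(r"\s+", " ", text)
    return text.strip().lower()

def prepare(responses):
    """Clean every response, then drop empties and exact duplicates (order kept)."""
    seen = set()
    cleaned = []
    for response in responses:
        text = clean(response)
        if text and text not in seen:
            seen.add(text)
            cleaned.append(text)
    return cleaned

raw = [
    "Hi, um, the app crashed   when I tried to pay.",
    "hello! The app crashed when I tried to pay.",
    "Uh, I couldn't find the export button",
    "   ",
]
prepared = prepare(raw)
print(prepared)
```

After cleaning, the first two responses become identical, so only one copy is kept, and the blank entry is dropped.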

2. Convert Text into Vectors

Using models like transformers, the system turns words and sentences into numerical representations called embeddings. These embeddings capture meaning and context, not just word frequency.
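Once text is represented as vectors, "similar meaning" becomes a number you can compute. The sketch below uses cosine similarity, a common choice for comparing embeddings. The three-dimensional vectors are made up by hand for illustration; real embeddings come from a model and typically have hundreds of dimensions.

```python
import math

def cosine(u, v):
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

# Hand-made toy vectors standing in for model output (assumption, not real data).
toy_embeddings = {
    "the app crashed": [0.9, 0.1, 0.0],
    "it broke on me": [0.8, 0.2, 0.1],
    "love the new dashboard": [0.0, 0.2, 0.9],
}

sim_same = cosine(toy_embeddings["the app crashed"], toy_embeddings["it broke on me"])
sim_diff = cosine(toy_embeddings["the app crashed"], toy_embeddings["love the new dashboard"])
print(round(sim_same, 2), round(sim_diff, 2))
```

The two crash reports point in nearly the same direction, while the dashboard comment does not, even though none of the phrases share a word.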

3. Cluster Similar Responses

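Algorithms group similar responses together based on their semantic meaning. For instance, “credit card declined” and “couldn’t pay” might get grouped under “payment issues.” In an embedding‑based pipeline this is usually done by running a clustering algorithm, such as k‑means or hierarchical clustering, over the vectors from the previous step. Topic models like LDA or neural topic models are an alternative route to similar groupings, although classic LDA works from word co‑occurrence rather than embeddings.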
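Here is a minimal sketch of the grouping step. It uses a simple greedy "leader" clustering, which I picked because it fits in a few lines; it is a stand-in for illustration, not the algorithm any particular tool uses. The two-dimensional vectors and the 0.8 threshold are assumptions.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norms if norms else 0.0

def leader_cluster(vectors, threshold=0.8):
    """Put each item in the first cluster whose leader (first member) is
    similar enough; otherwise start a new cluster with this item as leader."""
    clusters = []  # each cluster is a list of indices; index 0 is the leader
    for i, vec in enumerate(vectors):
        for members in clusters:
            if cosine(vec, vectors[members[0]]) >= threshold:
                members.append(i)
                break
        else:
            clusters.append([i])
    return clusters

responses = ["credit card declined", "couldn't pay", "app is slow", "pages load slowly"]
# Hand-made toy vectors standing in for real embeddings (assumption).
toy_vectors = [[0.9, 0.1], [0.8, 0.3], [0.1, 0.9], [0.2, 0.8]]

clusters = leader_cluster(toy_vectors)
grouped = [[responses[i] for i in members] for members in clusters]
print(grouped)
```

The greedy approach is order-dependent and sensitive to the threshold, which is one reason the human review step later in the process matters.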

4. Generate Themes and Summaries

Once clusters are formed, generative models produce summary phrases that represent each group. These summaries help analysts quickly scan and refine the themes.
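A sketch of how a cluster might be handed to a generative model for labelling. It only builds the prompt text; the call to a model is left out because it depends on the provider you use. The prompt wording and the `build_theme_prompt` helper are hypothetical.

```python
def build_theme_prompt(quotes, max_quotes=5):
    """Build a labelling prompt from up to `max_quotes` comments in one cluster."""
    lines = [
        "You are helping a researcher label a group of customer comments.",
        "Reply with one short theme name (five words or fewer),",
        "followed by a one-sentence summary.",
        "",
        "Comments:",
    ]
    lines += [f"- {quote}" for quote in quotes[:max_quotes]]
    return "\n".join(lines)

prompt = build_theme_prompt(["credit card declined", "couldn't pay"])
print(prompt)
```

Capping the number of quotes keeps the prompt short for large clusters; sampling representative quotes rather than taking the first few would be a reasonable refinement.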

5. Human Review and Refinement

A human analyst reviews the output, merges overlapping themes, renames clusters, and ensures that the generated themes are accurate. This step is critical to catch subtle errors or misclassifications.
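The review step can be as simple as a few explicit operations on the draft themes. This sketch assumes themes are stored as a mapping from theme name to quotes; the helper names and the sample data are hypothetical. Both helpers return new mappings so the machine-generated draft stays available for comparison.

```python
def merge_themes(themes, keep, absorb):
    """Return a new mapping with `absorb`'s quotes folded into `keep`."""
    if keep == absorb:
        raise ValueError("keep and absorb must be different themes")
    merged = {name: list(quotes) for name, quotes in themes.items() if name != absorb}
    merged[keep] = merged[keep] + list(themes[absorb])
    return merged

def rename_theme(themes, old, new):
    """Return a new mapping with one theme renamed; order is preserved."""
    return {(new if name == old else name): quotes for name, quotes in themes.items()}

draft = {
    "payment issues": ["credit card declined"],
    "checkout failures": ["couldn't pay"],
    "cluster 3": ["pages load slowly"],
}

# The analyst decides two clusters overlap and gives the unnamed one a label.
reviewed = merge_themes(draft, keep="payment issues", absorb="checkout failures")
reviewed = rename_theme(reviewed, old="cluster 3", new="performance")
print(reviewed)
```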

Traditional thematic analysis vs. thematic AI

Where thematic AI adds value

Early‑stage teams often lack the resources to manually code every interview. In my own work with SaaS startups, we’ve seen machine‑assisted analysis reduce time to insights dramatically. Here are some areas where these tools shine:

- Qualitative user research: When you run a series of usability studies or discovery interviews, you end up with hours of transcripts. Smart clustering can bring out pain points and recurring desires faster than a manual pass.

- Customer feedback and reviews: Products with user feedback channels (app stores, NPS comments) can generate thousands of entries. Platforms like Thematic or Looppanel group similar comments and surface top issues. For example, a 2024 article reports that 77.1% of UX researchers use machine‑assisted tools in at least some of their research, showing widespread adoption.

- Support tickets and chat logs: Support teams deal with unstructured text daily. Grouping tickets by theme helps product teams see which bugs or feature requests are most critical.

- Market research and trend spotting: Analysts can feed in social posts, news articles or forum discussions to detect new topics without reading everything manually.

- Voice‑of‑customer programs: Companies running continuous feedback loops rely on thematic grouping to identify which initiatives drive satisfaction. Thematic’s case studies with DoorDash and Atlassian show how automated analysis helps them create structure out of thousands of comments.

- Competitive intelligence: By ingesting public reviews or support transcripts from competitors, teams can discover what users complain about and what features are appreciated.

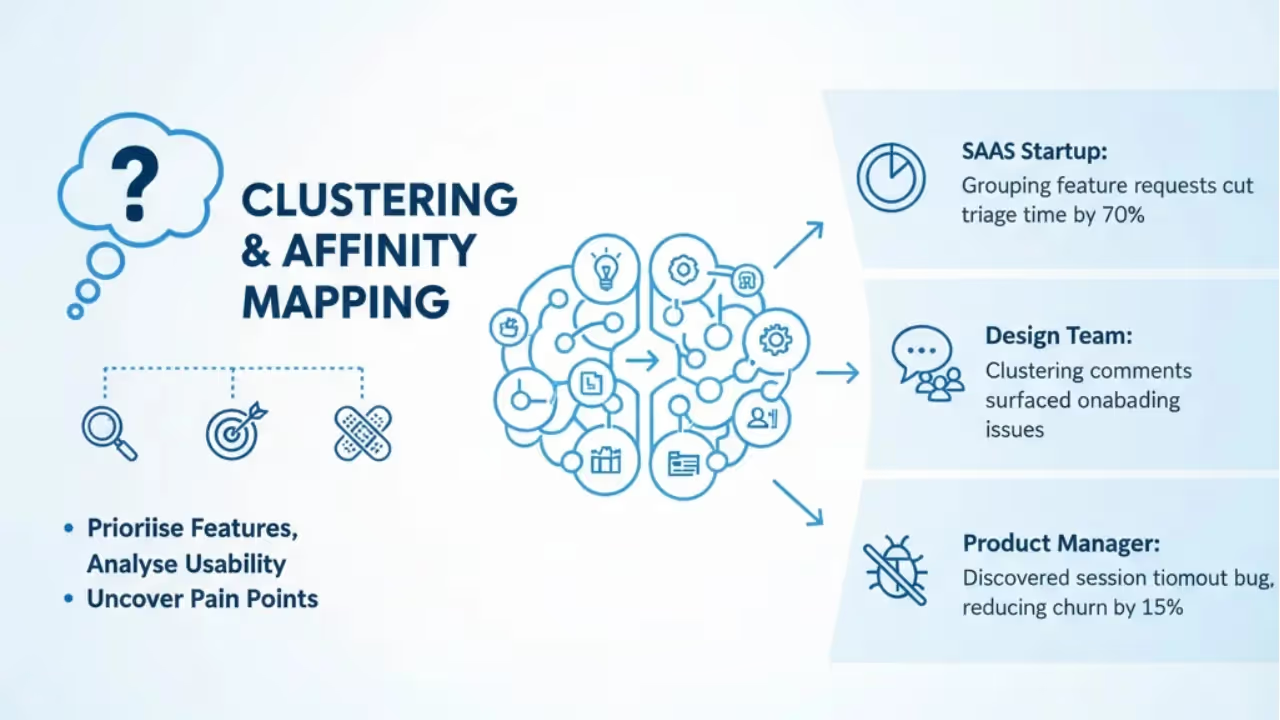

Example workflows

Teams use this method to prioritise features, analyse usability tests and uncover hidden pain points. In one SaaS startup, grouping feature requests cut triage time by 70%. A design team quickly surfaced onboarding issues by clustering comments, and a product manager discovered a session timeout bug whose fix reduced churn by 15%.

Tools in the ecosystem

Tools range from Looppanel, which transcribes, tags and groups feedback and reports that most researchers now use machine assistance, to Insight7, a no‑code platform with visual dashboards. Established qualitative analysis tools such as NVivo, ATLAS.ti and MAXQDA now offer summarisation and code suggestions. Researchers are also experimenting with multi‑agent models that achieve perfect concordance with experts in some cases, while emphasising the need for transparency and standardised checklists. When choosing a tool, weigh factors like cost, transparency and integration with your workflow.

Implementation and best practices

When we roll out thematic AI for teams, we follow a few main steps:

- Prepare data: clean, deduplicate and normalise text; translate if needed; anonymise sensitive information.

- Choose and configure models: decide between pre‑trained or custom models and craft clear prompts; multi‑stage workflows can improve results.

- Validate and refine: review clusters manually, merge or split themes and rename them; human oversight remains essential.

- Share insights: summarise themes with representative quotes and quantify frequency; use visualisations and dashboards.

- Be aware of pitfalls: avoid spurious patterns, hallucinated themes, loss of subtlety and bias; choose transparent tools.
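For the "share insights" step above, quantifying frequency can start with a simple count. This is a minimal sketch that assumes each comment has already been assigned to one theme; the `theme_report` helper and the sample data are hypothetical.

```python
from collections import Counter

def theme_report(assignments):
    """Turn (theme, quote) pairs into rows sorted by how often each theme occurs.
    The first quote seen for a theme is used as its representative quote."""
    counts = Counter(theme for theme, _ in assignments)
    first_quote = {}
    for theme, quote in assignments:
        first_quote.setdefault(theme, quote)
    total = len(assignments)
    return [
        {"theme": theme, "count": n, "share": round(n / total, 2), "quote": first_quote[theme]}
        for theme, n in counts.most_common()
    ]

assignments = [
    ("payment issues", "credit card declined"),
    ("performance", "pages load slowly"),
    ("payment issues", "couldn't pay"),
    ("payment issues", "charged twice"),
]
report = theme_report(assignments)
for row in report:
    print(row)
```

Rows like these can feed a dashboard or a short summary table. In practice you would probably pick the representative quote by hand rather than taking the first one.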

Research into multi‑agent systems and domain‑specific models continues; some models achieve expert concordance, and open models are becoming more affordable and accessible.
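If you want to check concordance on your own data, one common way to quantify agreement between two coders is Cohen's kappa, which corrects raw agreement for the agreement expected by chance: kappa = (observed - expected) / (1 - expected). The sketch below is my own illustration and is not necessarily the measure used in the research mentioned above; the labels are made up.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two label sequences of equal length."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Probability that both coders pick the same label by chance.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical theme labels for the same six comments.
model_labels = ["pay", "pay", "perf", "perf", "ux", "ux"]
expert_labels = ["pay", "pay", "perf", "ux", "ux", "ux"]

kappa = cohens_kappa(model_labels, expert_labels)
print(round(kappa, 2))
```

Here the model and the expert agree on five of six comments (raw agreement of about 0.83), but because a third of that agreement would be expected by chance, kappa comes out at 0.75.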

Conclusion

For founders, product managers and designers juggling endless feedback, thematic AI can be a force multiplier. Instead of spending days coding transcripts, you can let smart algorithms take the first pass, then apply your judgment to refine the results. It's important to see these tools as assistants, not oracles. Human oversight remains vital to preserve subtlety and avoid false patterns.

In my experience at Parallel, the teams that benefit most start small: they pilot a tool on a limited dataset, refine their prompts and review process, then scale up once confident. If you’re curious about adopting this method, consider running a pilot on your next batch of interviews or support tickets. Keep your mind open to the insights that come out, and be ready to step in when the machine misses something subtle.

Frequently asked questions (FAQ)

1) What is thematic AI?

It refers to the use of language models and clustering algorithms to group and summarise qualitative data into themes. Unlike traditional thematic analysis, which is entirely manual, it automates the initial coding and clustering stages, letting researchers focus on interpretation.

2) What are the seven types of artificial intelligence?

Scholars often describe seven categories: reactive machines, limited memory systems, theory of mind, self‑aware systems, narrow intelligence, general intelligence and superintelligence. In practice, current tools fall into the narrow category: they excel at specific tasks like summarising text but lack human understanding. When we talk about this approach, we’re dealing with narrow language models trained to process text and recognise patterns.

3) What is thematic intelligence?

In the context of research, thematic intelligence refers to the ability to understand and act on themes that come from data. Tools in this category provide machine‑assisted thematic intelligence by clustering, summarising and quantifying patterns, while humans provide the strategic context and decision‑making.

4) What is a woven‑named tool often mentioned in discussions?

Some tools with names inspired by weaving offer generative features for creating content or user personas. These products are typically unrelated to thematic analysis. When evaluating tools, focus on whether they support clustering, coding and transparent workflows rather than being swayed by naming trends.
