What Is AI Ethics? Complete Guide (2026)

Understand AI ethics and the principles guiding responsible AI development, including fairness, transparency and accountability.

In early‑stage product teams, the decisions we make about artificial intelligence (AI) have far‑reaching consequences. A neural network that filters applicants or a recommendation engine that nudges people into buying something influences people’s lives. This guide unpacks what AI ethics is, shows why it matters for founders, product managers and design leaders, and offers pragmatic tools. By the end, you will understand core moral principles, how they apply to artificial intelligence systems, and what to watch as you build.

What is AI ethics?

Ethics is the system of moral principles that help us discern right from wrong. AI ethics studies how those principles apply to artificial intelligence systems. IBM explains that AI ethics is a multidisciplinary field aiming to optimize the benefits of AI while reducing risks and adverse outcomes. It sits at the intersection of technology, philosophy, human rights and public policy. Key dimensions include responsibility, human values, autonomy and trust.

Why does this matter? As artificial intelligence systems increasingly drive decisions—who gets a mortgage, which news stories you see, which diagnosis your doctor considers—their effects scale rapidly. IBM notes that poor research design and biased data have already led to unforeseen consequences, including unfair outcomes and reputational or legal exposure. Ethical guidelines are not abstract; they are guardrails for safer and more sustainable products.

Why it matters for startups & product teams

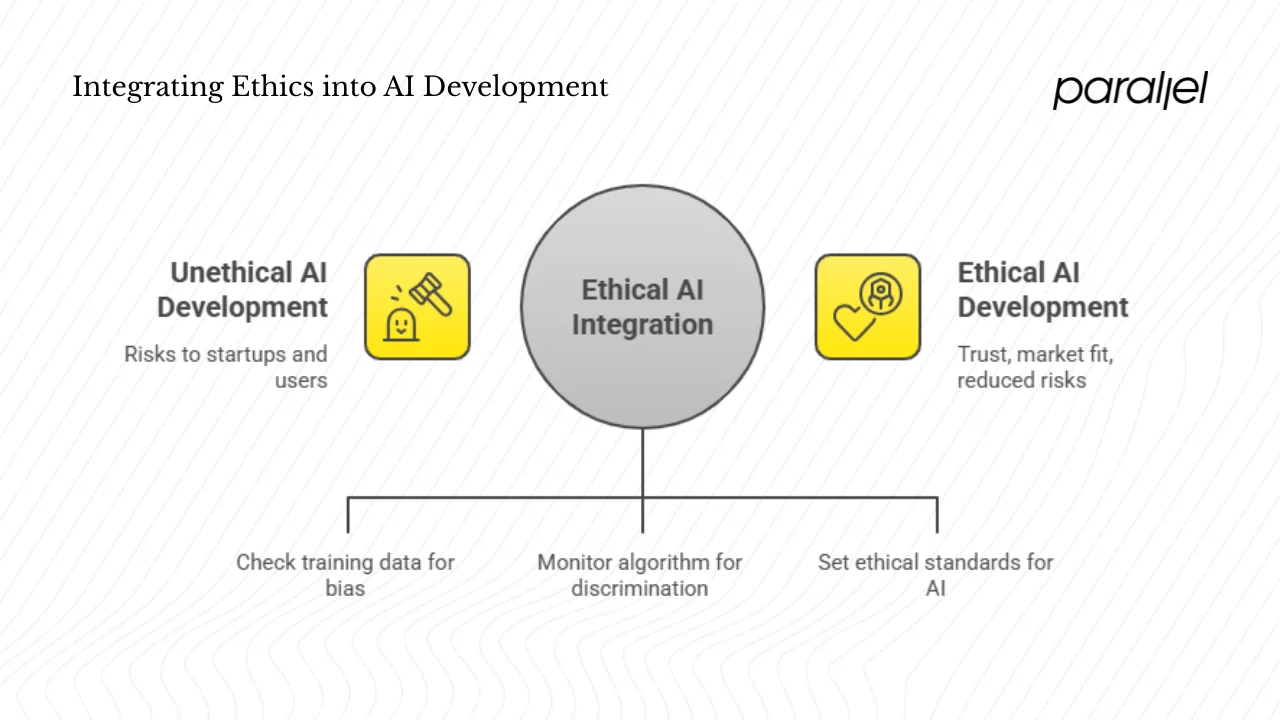

To understand AI ethics in practice, we need to explore how ethical questions play out in startups and product teams. Ethical considerations are concrete at this stage: they shape hiring algorithms, recommendation systems and customer data practices.

Ignoring ethics comes at a cost. Bias embedded in training data can produce unfair outcomes; inadequate privacy protections can leak sensitive information; opaque models erode user trust; and regulatory failures can trigger backlash or fines. The Nielsen Norman Group (NN/g) emphasises that risk management should be integrated into design decisions because every design choice carries the potential for harm. Failing to evaluate those risks can lead to long‑term harm to the organisation and its customers.

On the other hand, weaving ethics into the product from the start strengthens trust and yields better fit with market needs. It reduces surprises later, such as last‑minute legal reviews that delay launches. As founders and product leaders, we are building systems that influence lives, make recommendations and collect personal data. Our role is to set ethical guardrails that ensure those systems augment rather than undermine human agency. IBM warns that companies that neglect ethics risk reputational, regulatory and legal exposure. In contrast, products grounded in ethical principles earn lasting trust.

A case in point: In 2020 a large recruitment platform rolled out an automated applicant screening algorithm trained on historical hiring data. It quickly emerged that the model penalised applicants with certain gendered phrases on their résumés. Because the team had not audited the training data for bias and had no process for monitoring fairness, the algorithm discriminated unintentionally. The backlash led to public apologies, lawsuits and a costly rebuild. Ethical considerations at ideation and training could have prevented this outcome.

Key principles of AI ethics

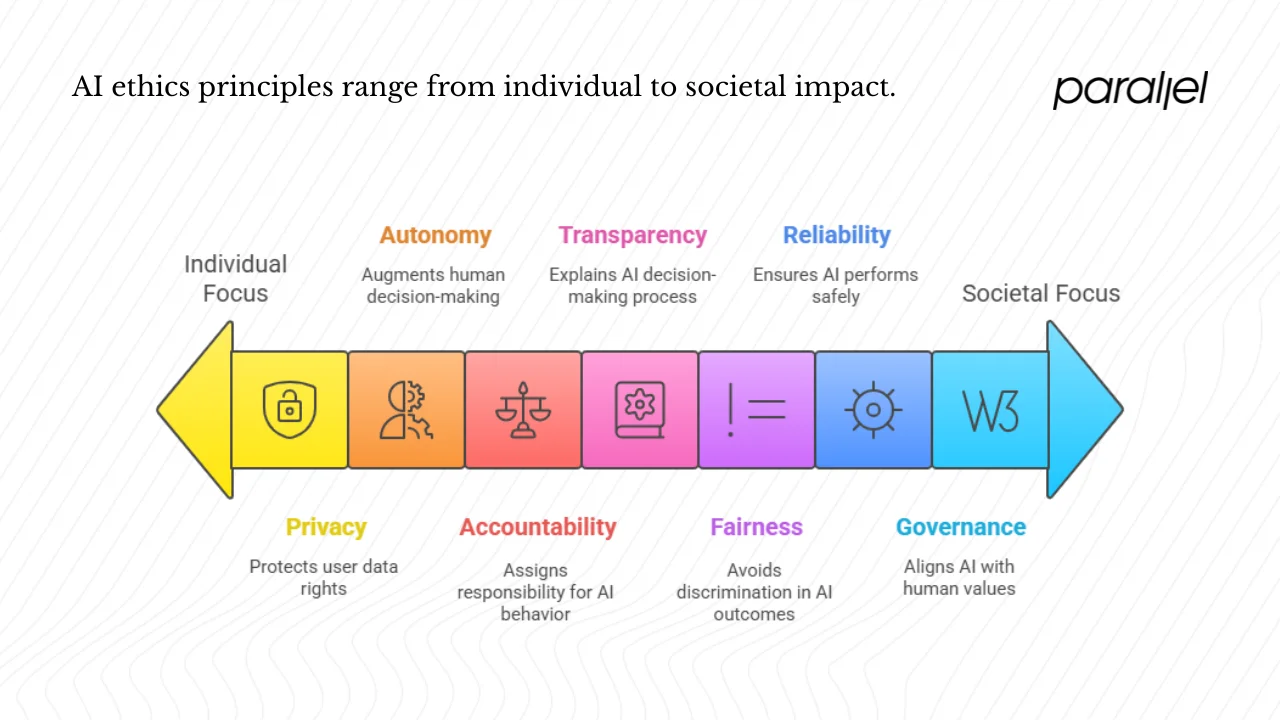

Looking at AI ethics beyond definitions, we arrive at a set of guiding principles. These principles translate moral values into actionable design and engineering practices.

Various organisations have proposed frameworks for ethical artificial intelligence. Here is a synthesis of core principles, explained through a startup/product lens.

1) Fairness / non‑discrimination

AI systems should not unfairly disadvantage individuals or groups based on sensitive attributes such as race, gender or age. UNESCO’s global ethics guidelines call for fairness and non‑discrimination to ensure AI benefits are accessible to all and to avoid biased outcomes. For product teams, this means auditing training data, testing models for disparate impact, and designing features that treat users equitably. For example, if your recommendation engine suggests job openings, run fairness metrics to ensure it does not steer certain demographics towards lower‑paying roles.
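One common starting point for the fairness audit described above is the disparate impact ratio: the lowest group selection rate divided by the highest. The sketch below is a minimal illustration in plain Python; the group labels, the data and the 0.8 cutoff (the "four-fifths" rule of thumb from U.S. employment guidance) are assumptions for illustration, not a complete fairness assessment.

```python
from collections import defaultdict

def selection_rates(groups, outcomes):
    """Share of positive outcomes (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(groups, outcomes):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(groups, outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group selected at all: no disparity to measure
    return min(rates.values()) / highest

# Hypothetical data: 1 = recommended for a higher-paying role.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]

ratio = disparate_impact_ratio(groups, outcomes)
print(f"disparate impact ratio: {ratio:.2f}")  # 0.33
if ratio < 0.8:  # assumed threshold (four-fifths rule of thumb)
    print("Possible disparate impact: review data and model")
```

A low ratio is a signal to investigate, not proof of discrimination; which metric is appropriate depends on the product context.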

2) Transparency / explainability

Users and stakeholders need to understand how AI decisions are made. IBM’s trust principles emphasise that new technology must be transparent and explainable; companies should be clear about who trains the models, what data is used and what informs the algorithm’s recommendations. For startups, this means documenting data sources, exposing reasoning where possible and providing clear user interfaces. When a credit‑scoring model rejects an applicant, the interface should offer an explanation. At a minimum, internal teams must be able to audit model logic.
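For simple linear scoring models, one way to produce the explanation mentioned above is "reason codes": rank the features that pulled an applicant's score down the most relative to a baseline applicant. The weights, baseline values and feature names below are hypothetical, and non-linear models need dedicated explanation methods instead.

```python
# Hypothetical linear scorecard weights; not taken from any real lender.
WEIGHTS = {
    "payment_history": 2.0,
    "credit_utilisation": -1.5,
    "account_age_years": 0.3,
    "recent_inquiries": -0.8,
}
# Hypothetical baseline (e.g. population averages) to compare against.
BASELINE = {
    "payment_history": 0.9,
    "credit_utilisation": 0.3,
    "account_age_years": 6.0,
    "recent_inquiries": 1.0,
}

def reason_codes(applicant, top_n=2):
    """Names of the features that lowered the score most versus the baseline."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }
    negatives = [(name, c) for name, c in contributions.items() if c < 0]
    negatives.sort(key=lambda item: item[1])  # most negative first
    return [name for name, _ in negatives[:top_n]]

applicant = {
    "payment_history": 0.8,
    "credit_utilisation": 0.9,
    "account_age_years": 2.0,
    "recent_inquiries": 4.0,
}
print(reason_codes(applicant))  # ['recent_inquiries', 'account_age_years']
```

The interface can then map each feature name to plain-language text such as "several recent credit inquiries".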

3) Accountability / responsibility

Someone must be answerable for an AI system’s behaviour. UNESCO’s guidelines say AI systems should be auditable and traceable, with oversight, impact assessments and due diligence. SAP’s updated ethics policy likewise emphasises responsibility and accountability. For product teams, assign a leader who is accountable for ethical outcomes. Create an incident response process for addressing harm and empower cross‑functional reviews that include legal, design and engineering perspectives.

4) Privacy / data protection

Respecting users’ data rights is fundamental. SAP’s policy highlights the right to privacy and data protection as a guiding principle. IBM’s trust principles assert that data and insights belong to their creators. Implement consent flows, minimise data collection to what is necessary and follow applicable data protection regulations. When collecting user feedback to improve a model, clearly explain how data will be used and stored.
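The data-minimisation practice above can be sketched as two steps: keep only an allowlist of fields, and replace the raw user ID with a keyed hash (HMAC-SHA256 from Python's standard library). The field names and key handling are assumptions for illustration. Pseudonymised data generally still counts as personal data under GDPR, so this reduces risk rather than removing obligations.

```python
import hashlib
import hmac

# Hypothetical allowlist: only the fields the feedback model actually needs.
ALLOWED_FIELDS = {"rating", "comment", "app_version"}

def pseudonymise(user_id: str, secret_key: bytes) -> str:
    """Keyed SHA-256 hash, so raw IDs never reach the training store."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def minimise(record: dict, secret_key: bytes) -> dict:
    """Keep allowlisted fields only and replace the user ID with a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    cleaned["user_ref"] = pseudonymise(record["user_id"], secret_key)
    return cleaned

# Placeholder key for the example; in production, load it from a secrets manager.
key = b"example-key-do-not-use"
raw = {"user_id": "u-123", "email": "a@example.com", "rating": 4,
       "comment": "Helpful", "app_version": "2.1", "location": "Berlin"}
print(minimise(raw, key))  # email and location are dropped
```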

5) Human autonomy / human‑centric design

AI should augment human decision‑making, not replace or undermine it. IBM’s trust principles state that the purpose of AI is to augment human intelligence. UNESCO underscores the importance of human oversight. For product teams, keep a human in the loop for high‑impact decisions and design interfaces that empower users. In content moderation tools, allow moderators to review algorithmic flags and override when needed.
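A human-in-the-loop policy for the moderation example can be expressed as a small routing rule. This is a minimal sketch: the labels, the 0.98 threshold and the route names are hypothetical policy choices, not recommended values.

```python
from dataclasses import dataclass

@dataclass
class Flag:
    item_id: str
    label: str         # e.g. "spam", "harassment"
    confidence: float  # model score in [0, 1]

# Hypothetical policy values; tune them to your own risk tolerance.
AUTO_ACTION_THRESHOLD = 0.98
HIGH_IMPACT_LABELS = {"harassment", "self_harm"}

def route(flag: Flag) -> str:
    """Decide whether a flag may be actioned automatically or needs a moderator."""
    if flag.label in HIGH_IMPACT_LABELS:
        return "human_review"  # high-impact categories always go to a person
    if flag.confidence >= AUTO_ACTION_THRESHOLD:
        return "auto_action_with_appeal"  # still reviewable and reversible
    return "human_review"

print(route(Flag("post-1", "spam", 0.99)))  # auto_action_with_appeal
```

Defaulting to human review keeps the system conservative: automation has to earn its way in, rather than people having to opt in.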

6) Trust / reliability / robustness

AI must perform reliably and safely. IBM notes that ethical concerns include robustness. UNESCO’s guidelines highlight safety and security. Build models that are resilient to adversarial inputs, test them under edge cases and include fail‑safe mechanisms. Establish monitoring to detect drift or anomalies after deployment.
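A fail-safe mechanism can be as simple as validating inputs and degrading to a safe default (here, referring the case to a human) instead of returning a guess. The sketch assumes the model is any Python callable that takes a dict of numeric features; `toy_model` is a hypothetical stand-in.

```python
import logging
import math

logger = logging.getLogger(__name__)

def safe_predict(model, features, fallback="refer_to_human"):
    """Validate inputs and fall back to a safe default instead of guessing."""
    # Reject non-numeric or non-finite values (NaN, infinity) before they reach the model.
    for value in features.values():
        if not isinstance(value, (int, float)) or not math.isfinite(value):
            return fallback
    try:
        return model(features)
    except Exception:
        # Log the failure and degrade to the safe default rather than crash or guess.
        logger.exception("model failed; using fallback")
        return fallback

def toy_model(features):  # hypothetical stand-in for a real model
    return "approve" if features["score"] > 0.5 else "decline"

print(safe_predict(toy_model, {"score": 0.7}))           # approve
print(safe_predict(toy_model, {"score": float("nan")}))  # refer_to_human
```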

7) Governance and regulation / alignment with human values

Ethical AI should align with human rights and societal values. UNESCO’s core values include respect for human rights and dignity, peaceful and just societies, diversity and environmental flourishing. SAP aligns its policy with UNESCO to reinforce proportionality, safety, fairness, sustainability, privacy, human oversight and transparency. Startups should adopt internal governance frameworks: define prohibited uses, create ethics checklists, document decisions and engage stakeholders. Recognise that regulations are evolving: the AI Index report notes that U.S. federal agencies introduced 59 AI‑related regulations in 2024, more than double the number in 2023, while legislative mentions of AI rose 21.3% across 75 countries. Keeping abreast of emerging rules is essential.

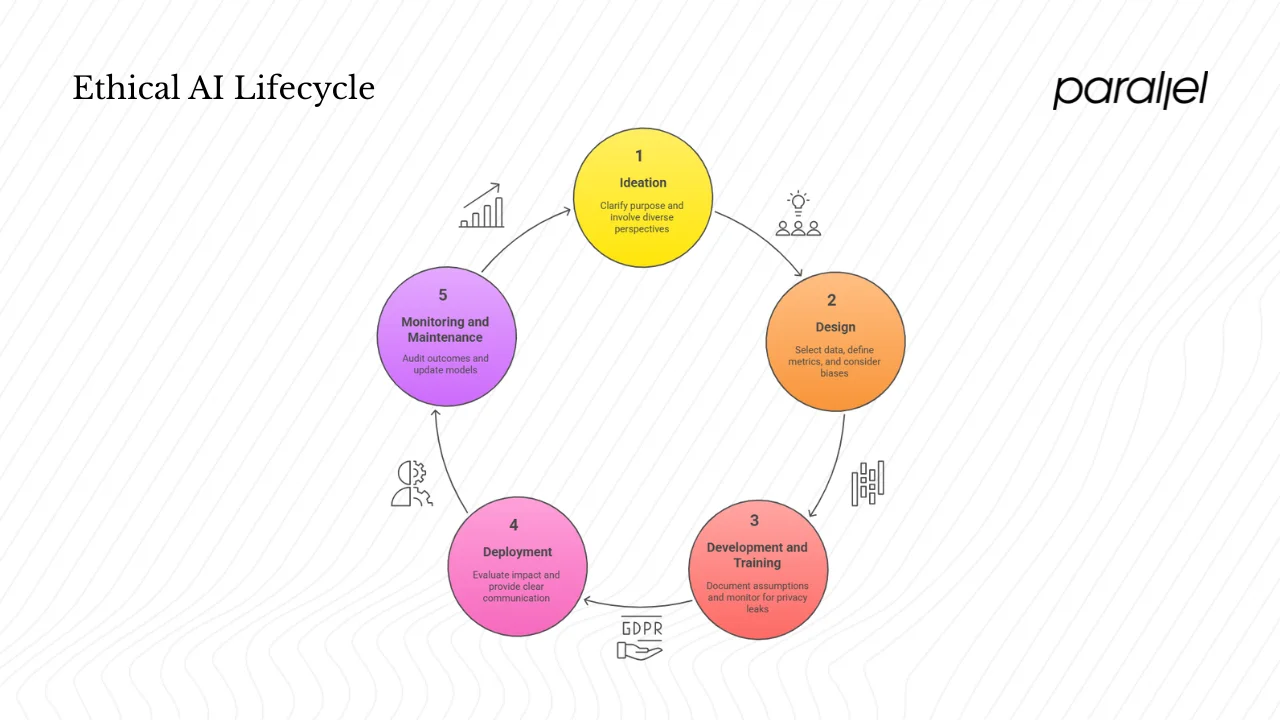

The lifecycle of an AI system: where ethics comes in

Keeping AI ethics in mind throughout the lifecycle, from ideation through monitoring, helps teams build responsibly. Every phase invites reflection on purpose, impact and accountability.

Ethics is not a bolt‑on at the end; it is woven through the entire development process.

1) Ideation

Clarify the purpose: What problem are you solving? Who is affected? Could automated decisions cause harm? At this stage, involve diverse perspectives, including those who may be impacted. Ask whether the problem needs an AI solution at all—NN/g warns that adding AI for novelty rarely produces real value.

2) Design

Select representative data and define metrics. Identify potential biases in data sources. Define fairness, privacy and robustness criteria. Consider edge cases and misuse scenarios. When designing user interfaces, provide transparency and options for user control. The IDEO AI ethics cards encourage teams to consider unintended consequences and to prioritise humanistic and culturally considerate design.
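Fairness, privacy and robustness criteria are easier to enforce when they are written down as explicit thresholds that a release must meet. The metric names and thresholds below are hypothetical placeholders for whatever your team agrees during design; the design choice worth copying is that an unmeasured criterion fails the gate rather than passing silently.

```python
# Hypothetical acceptance criteria agreed during design: (direction, threshold).
CRITERIA = {
    "disparate_impact_ratio": ("min", 0.8),
    "max_group_error_gap": ("max", 0.05),
    "accuracy_under_noise": ("min", 0.90),
    "pii_fields_in_training_set": ("max", 0),
}

def release_gate(measured: dict) -> list:
    """Return the criteria that failed; an empty list means the gate passes."""
    failures = []
    for name, (kind, threshold) in CRITERIA.items():
        value = measured.get(name)
        if value is None:
            failures.append(f"{name}: not measured")
        elif kind == "min" and value < threshold:
            failures.append(f"{name}: {value} < {threshold}")
        elif kind == "max" and value > threshold:
            failures.append(f"{name}: {value} > {threshold}")
    return failures

print(release_gate({"disparate_impact_ratio": 0.85, "max_group_error_gap": 0.08}))
```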

3) Development and training

Document assumptions and decisions. Monitor for privacy leaks and ensure data is obtained lawfully. Use fairness metrics and adversarial testing. IBM’s article notes that data responsibility, fairness, explainability and robustness are critical considerations.

4) Deployment

Evaluate impact on users. Provide clear communication about how the system works and how to contest decisions. Set up feedback mechanisms. Ensure compliance with privacy regulations and that users can opt out where feasible. Transparent consent flows build trust.

5) Monitoring and maintenance

Continuously audit outcomes and model performance. Use dashboards to monitor fairness, accuracy and drift. Establish processes to update or retrain models when unfairness emerges or when data distribution shifts. UNESCO’s ethical impact assessment suggests structured processes to identify and mitigate harms.
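Drift monitoring often starts with the Population Stability Index (PSI), which compares the distribution of a feature in live traffic against a reference sample: PSI = Σ (live share − reference share) × ln(live share / reference share) over bins. This is a minimal sketch using equal-width bins over the combined range (many teams bin on reference quantiles instead). The commonly quoted reading of PSI below 0.1 as stable and above 0.25 as significant drift is an industry rule of thumb, not a standard.

```python
import math

def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample and a live sample of one numeric feature."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # put the max value in the last bin
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical samples: live values have shifted into the upper half of the range.
reference = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]
print(round(population_stability_index(reference, live), 2))
```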

Common ethical challenges & risks in AI

Even with the best intentions, teams encounter recurring challenges. Recognising them helps you plan proactively.

- Bias & discrimination: Historical data reflects societal inequities. Without careful curation, AI models can reinforce those inequities. For example, predictive policing algorithms trained on biased arrest records may disproportionately target certain neighbourhoods. Use techniques like reweighing, sampling and fairness constraints to mitigate bias and audit outcomes for disparate impact.

- Privacy & surveillance: Collecting vast amounts of personal data can expose individuals to privacy breaches or surveillance. GDPR and similar laws require a lawful basis (such as consent) for processing personal data, data minimisation and, in many cases, erasure on request (the "right to be forgotten"). Anonymisation techniques and privacy‑preserving machine learning (e.g., federated learning) can reduce risk.

- Opacity & lack of explainability: Many models, especially deep learning systems, operate as black boxes. This hinders trust and accountability. Invest in explainability methods (e.g., LIME, SHAP) and consider using simpler models when accuracy benefits are marginal.

- Autonomy & manipulation: AI can nudge or manipulate human behaviour subtly. Recommendation systems may encourage addictive usage patterns; generative models can create deepfakes that mislead. Design for user agency and disclose nudges. Avoid exploitative patterns.

- Accountability gaps: When outcomes are harmful, it may be unclear who is responsible—the developer, the model, or the organisation. Clear governance structures and documentation can close this gap.

- Regulatory & legal uncertainty: The regulatory environment is evolving; compliance is challenging. The AI Index’s finding that AI‑related regulations are growing quickly highlights the need for proactive tracking of new rules.

- Value misalignment: Product goals may conflict with societal values. For example, optimising engagement at the expense of users’ wellbeing can lead to negative consequences. Ethical reviews can help align objectives with broader human values.
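Reweighing, listed above as a bias mitigation technique, gives each training example the weight P(group) × P(label) / P(group, label), so that group membership and outcome become statistically independent in the weighted data (the approach described by Kamiran and Calders). The sketch below computes those weights in plain Python; the data is hypothetical, and the weights would then be passed to a learner that accepts per-sample weights.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]

# Hypothetical data: group A has a higher positive rate (3/4) than group B (1/2).
groups = ["A", "A", "A", "A", "B", "B"]
labels = [1, 1, 1, 0, 1, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])  # [0.889, 0.889, 0.889, 1.333, 1.333, 0.667]
```

After weighting, both groups have the same weighted positive rate, which is what the technique is designed to achieve.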

Governance, regulation & ethical guidelines

No single global regulator exists for AI, but many guidelines and frameworks are emerging.

Global guidelines

UNESCO produced the first global standard on AI ethics in 2021. Its guidelines emphasise human rights, dignity and fairness, and call for human oversight. Four core values underpin the framework: respect for human rights and dignity, living in peaceful and just societies, ensuring diversity, and sustaining the environment. It sets out ten core principles: proportionality and do no harm, safety and security, privacy, multi‑stakeholder governance, responsibility and accountability, transparency and explainability, human oversight, sustainability, awareness and literacy, and fairness and non‑discrimination.

Corporate policies

SAP’s updated AI ethics policy aligns with UNESCO’s framework and includes ten guiding principles: proportionality and do no harm; safety and security; fairness and non‑discrimination; sustainability; right to privacy and data protection; human oversight and determination; transparency and explainability; responsibility and accountability; awareness and literacy; and multi‑stakeholder and adaptive governance. The policy emphasises that aligning with a widely recognised standard allows teams to confidently develop and deploy AI systems. The fairness principle requires not only protecting fairness but promoting it and putting safeguards in place to avoid discriminatory outcomes.

Internal governance for startups

Large corporations can assign dedicated ethics boards. Startups cannot always replicate that, but they can adopt lightweight practices:

- Define and document ethical principles for the organisation, drawing from UNESCO or corporate policies.

- Establish a cross‑functional ethics review process during ideation and before launch. Include design, product, engineering, legal and domain experts.

- Assign responsibility: a product manager or design lead should own ethical outcomes.

- Create checklists for data sourcing, privacy, fairness and explainability. Use them at each stage.

- Maintain documentation of decisions, assumptions and trade‑offs to enable auditing and learning.

Emerging regulation

Regulations are proliferating. In 2024, U.S. federal agencies introduced 59 AI‑related regulations, more than double the previous year's total, and legislative mentions of AI rose by more than 21% across 75 countries. The European Union’s AI Act, which entered into force in August 2024, categorises AI systems by risk and imposes obligations on high‑risk categories, with requirements phasing in over several years. India, Canada and other countries are drafting policies, and the Chinese government is actively funding AI research while imposing restrictions on generative content. Startups should monitor these developments, especially if operating internationally. Aligning with UNESCO’s values and principles can provide a solid foundation.

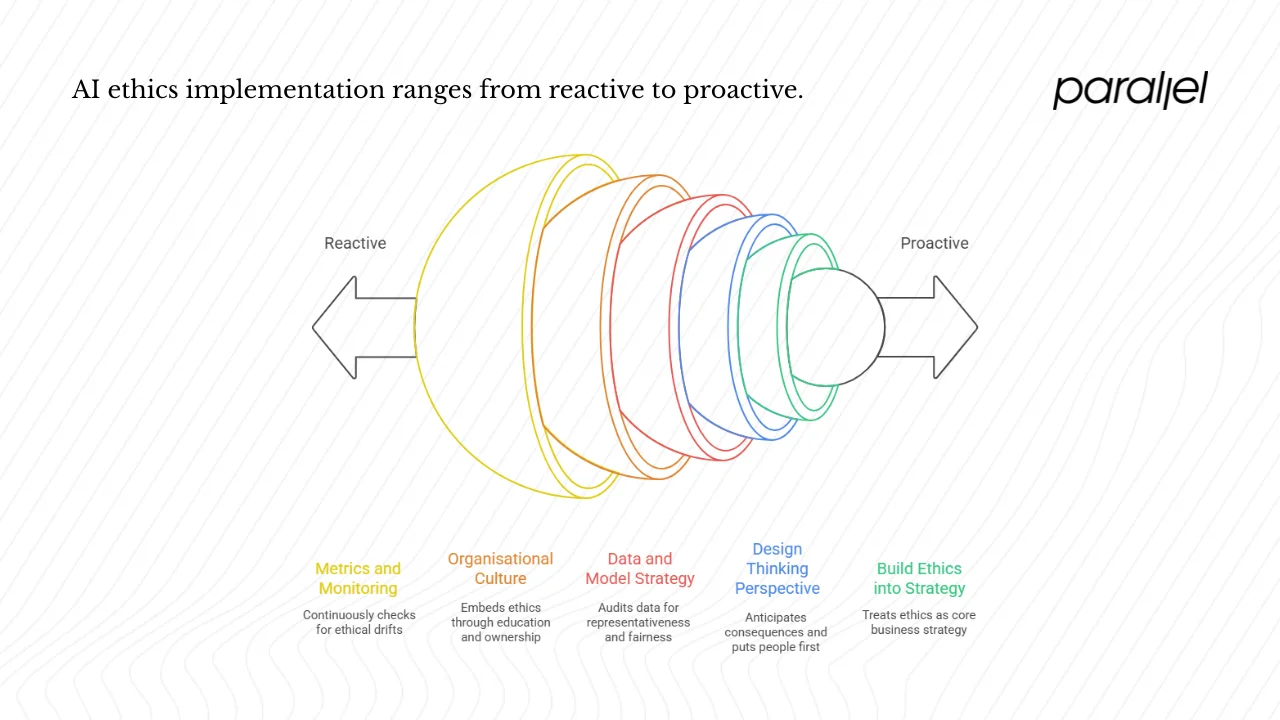

Applying AI ethics in product design & strategy

Ethical concerns can seem abstract, but they translate into concrete product decisions. Here are practical strategies for founders, product managers and design leaders:

1) Build ethics into product strategy

Treat ethics as part of the business strategy, not a side project. When defining your vision and roadmap, ask: Does this feature respect users’ autonomy? How might it be misused? What data do we truly need? Document these considerations alongside product requirements. Resist pressure to add AI features because competitors are doing so—NN/g warns that “powered by AI” is not a value proposition.

2) Adopt a design thinking perspective

Design processes are powerful for anticipating unintended consequences. IDEO’s AI ethics cards encourage teams to consider how data systems and algorithms can have unintended consequences and to always put people first. In practice:

- Involve a broad range of stakeholders—including users, subject matter experts, and people from marginalized communities.

- Map potential harms and benefits. For a generative tool, ask how it might propagate stereotypes or misinformation.

- Prototype safeguards. If building a recommendation system, test versions that show explanations or allow user feedback.

IDEO’s business case for responsible design argues that responsible design is not a nice‑to‑have but a critical facet of our evolving global environment, and notes that designing for a wide range of people and their needs is a commitment many designers live by. The message is clear: designing responsibly is not a "cherry‑on‑top" but a core practice.

3) Data & model strategy

Ethics is inseparable from data strategy. Audit datasets for representativeness; remove or annotate sensitive attributes where necessary; and test models across demographic slices. Use fairness metrics appropriate for your context. Document data lineage and model versions for traceability. IBM highlights that issues like data responsibility, fairness, explainability and robustness are core ethical concerns.
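Testing across demographic slices usually means computing error rates per group rather than a single overall accuracy figure. This minimal sketch reports false positive and false negative rates for each slice; the slice labels and data are hypothetical, and a slice with no positive or no negative examples reports `None` rather than a misleading zero.

```python
from collections import defaultdict

def error_rates_by_slice(slices, y_true, y_pred):
    """False positive and false negative rates for each demographic slice."""
    stats = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for s, t, p in zip(slices, y_true, y_pred):
        if t == 1:
            stats[s]["pos"] += 1
            stats[s]["fn"] += int(p == 0)  # missed a true positive
        else:
            stats[s]["neg"] += 1
            stats[s]["fp"] += int(p == 1)  # flagged a true negative
    return {
        s: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            "fnr": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for s, c in stats.items()
    }

# Hypothetical labels and predictions for two slices.
slices = ["x", "x", "x", "x", "y", "y", "y", "y"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
print(error_rates_by_slice(slices, y_true, y_pred))
```

Here overall accuracy is 75%, but all errors fall on slice "y", which is exactly what an aggregate metric hides.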

4) Organisational culture

Embed ethics into culture through education and ownership. Designate an ethics champion, such as a senior product manager or designer, who ensures that ethical considerations are raised. Offer training sessions on privacy, fairness and responsible machine learning. Encourage open discussion of ethical dilemmas, so team members feel empowered to voice concerns.

5) Metrics and monitoring

Define success metrics that go beyond engagement or revenue. Include fairness indicators, error rates across user groups, user satisfaction and complaints. Build transparency logs that record decisions and model changes. Provide channels for user feedback, and allocate time in the roadmap for ethical maintenance. Continuous monitoring helps you catch drifts or emerging harm.
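Transparency logs are more useful for audits if past entries cannot be edited silently. One simple technique is hash chaining: each entry stores a SHA-256 hash covering its content and the previous entry's hash. The event fields below are hypothetical, and the chain only detects tampering if the latest hash is also kept somewhere the log's editors cannot change.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: dict) -> str:
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append_entry(log: list, event: dict) -> dict:
    """Append an event whose hash also covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"timestamp": time.time(), "event": event, "prev_hash": prev_hash}
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry

def verify(log: list) -> bool:
    """Recompute every hash; editing any past entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: entry[k] for k in ("timestamp", "event", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, {"type": "model_change", "model_version": "v2", "approved_by": "pm-lead"})
append_entry(log, {"type": "decision", "request_id": "r-1", "outcome": "declined"})
print(verify(log))  # True
```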

Tips for early‑stage teams:

For startups with limited resources, keep governance lightweight yet deliberate. Use checklists and simple review templates. Consider periodic internal audits rather than large committees. When releasing new features, run small pilots to gather feedback and adjust before full scale. Iterate ethically just as you iterate on product/market fit.
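Small pilots are easier to evaluate when the same users consistently see the new feature. A common approach is deterministic hash bucketing, sketched below; the function name and the 10,000-bucket granularity are assumptions, not any specific feature-flag product's API.

```python
import hashlib

def in_pilot(user_id: str, feature: str, percent: float) -> bool:
    """Deterministically place a stable share of users in a feature pilot."""
    # Hashing feature + user ID gives each feature an independent, stable split.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # bucket in 0..9999
    return bucket < percent * 100          # percent=5 -> buckets 0..499

print(in_pilot("user-42", "new_ranker", percent=5))
```

Because assignment depends only on the user and feature, widening the pilot from 5% to 20% keeps the original participants enrolled, which makes before-and-after feedback comparable.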

Real‑world example: IBM’s pillars of trust

IBM summarises ethical AI under five pillars: fairness, explainability, robustness, transparency and privacy. These pillars echo the principles outlined earlier. As an example, a startup building a personal finance assistant could adopt these pillars: ensuring the model makes fair recommendations across socioeconomic groups, providing explanations for budgeting suggestions, testing the model against adversarial prompts, clearly communicating data usage and safeguarding user data.

Future trends in AI ethics & what to watch

Looking ahead, the practical meaning of AI ethics evolves as new technologies emerge. Anticipating future dilemmas helps product teams stay ahead of societal expectations and regulatory shifts.

Artificial intelligence technologies continue to evolve rapidly, bringing new ethical questions.

- Generative models and deepfakes: The ability to generate realistic text, images and audio raises concerns about misinformation, consent and authenticity. UNESCO’s guidelines call for transparency and explainability; developers of generative tools should label synthetic content and give users control.

- Autonomous decision systems: Self‑driving cars, drones and autonomous agents raise questions about liability and safety. Who is responsible when an autonomous vehicle causes harm? IBM’s article highlights that the question of responsibility for self‑driving car accidents is an ongoing ethical debate.

- Large language models in high‑stakes domains: As generative models are used for medical advice, legal analysis or hiring, the risk of harm increases. Ensure human oversight, domain‑expert review and compliance with sector‑specific regulations.

- Environmental impact: Training large models consumes significant energy. UNESCO’s principle of sustainability calls for assessing environmental impacts. Startups should consider energy‑efficient architectures and renewable energy sources.

- Democracy and human rights: AI can influence public opinion, voting and media consumption. UNESCO’s human rights‑centred approach emphasises protection of rights and dignity. Developers should avoid deploying models in ways that undermine democratic processes or amplify hate speech.

- Regulatory convergence: Governments are moving from guidance to enforcement. The AI Index report’s data on increasing regulations suggests that compliance will become table stakes. Aligning with global principles such as UNESCO’s ensures readiness for varied jurisdictions.
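Assessing the environmental impact of training can start with back-of-the-envelope arithmetic: energy ≈ GPUs × average power × hours × PUE (data-centre overhead), and emissions ≈ energy × grid carbon intensity. All inputs below are hypothetical. The estimate ignores CPUs, networking and hardware manufacturing, but it makes the effect of more efficient architectures or cleaner energy sources visible.

```python
def training_footprint(num_gpus, avg_power_kw, hours, pue, grid_kg_co2_per_kwh):
    """Rough energy (kWh) and emissions (kg CO2e) for one training run."""
    energy_kwh = num_gpus * avg_power_kw * hours * pue
    emissions_kg = energy_kwh * grid_kg_co2_per_kwh
    return energy_kwh, emissions_kg

# Hypothetical inputs: 8 GPUs drawing 0.4 kW each for 72 hours,
# a data-centre PUE of 1.2 and grid intensity of 0.4 kg CO2e/kWh.
energy, emissions = training_footprint(8, 0.4, 72, 1.2, 0.4)
print(f"{energy:.1f} kWh, {emissions:.1f} kg CO2e")  # 276.5 kWh, 110.6 kg CO2e
```

Rerunning the same calculation with a lower grid intensity or fewer GPU-hours gives a quick comparison between options.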

Treating ethics as a strategic advantage will differentiate companies. Products that embody fairness, transparency and respect will attract customers and partners and earn the confidence of regulators. Research shows that values‑driven consumers increasingly prefer brands that prioritise responsible design.

Conclusion

AI ethics is not a checklist to tick off at the last minute; it is a discipline woven into the way we build technology. In this guide, we defined AI ethics as the application of moral principles to artificial intelligence systems, and we explored why it matters. We surveyed key principles (fairness, transparency, accountability, privacy, human‑centred design, reliability and governance), drawing on respected sources like IBM, UNESCO and SAP. We mapped these principles to the product lifecycle and common challenges, and we outlined governance models and practical steps for startups.

As founders, product managers and design leaders, our call to action is clear: embed ethical thinking from day one, involve those affected by our decisions and hold ourselves accountable. The goal is not to stifle innovation but to create solutions that empower people, respect their rights and contribute to a more just society. Ethics is not merely compliance; it is a strategic choice to build trust and long‑term resilience.

FAQ

1. What is AI ethics in simple words?

It is the study of how we apply moral principles—such as fairness, transparency, responsibility and respect for human rights—to artificial intelligence systems. The aim is to maximise benefits and minimise harms.

2. What are the five principles of AI ethics?

Five commonly referenced principles are fairness (avoiding discrimination), transparency (explaining how decisions are made), accountability (assigning responsibility for outcomes), privacy (protecting personal data) and human‑centricity (keeping people in control).

3. What are the seven principles of ethical AI?

A broader set, matching the seven principles covered in this guide, adds reliability/robustness and governance aligned with human values to the five above. This list emphasises building models that are safe and resilient and ensuring the system promotes human rights and dignity. Frameworks such as UNESCO’s extend it further with sustainability and multi‑stakeholder governance.

4. What are the three main concerns about AI ethics?

Common concerns are bias and discrimination (models reinforcing existing inequities), privacy and surveillance (misuse or leakage of personal data) and opacity and accountability (difficulty in understanding and assigning responsibility for automated decisions). Addressing these concerns requires auditing data and models, protecting user information and adopting transparent, accountable practices.
