What Is Natural Language Processing? Guide (2026)

Learn about natural language processing (NLP), its techniques, and how it enables machines to understand human language.

Running an early‑stage startup means dealing with mountains of feedback, customer conversations and support tickets. Much of that data is unstructured: emails, chat logs, social‑media posts and voice recordings. You’ve probably heard the buzzwords and may have asked yourself what natural language processing is and why it matters. I’ve spent years working with product and design teams and have seen both the promise and the pitfalls of language‑centric technology.

This article explains in simple terms what natural language processing (NLP) is, how it works at a high level, why it belongs on your strategic radar and how to get started without the hype. Along the way we’ll look at the opportunities for product thinking, design and user experience, the technical trade‑offs, and the emerging trends shaping the field.

What is NLP and why should you care?

At its core, natural language processing is the study and engineering of giving computers the ability to recognise, interpret and generate human language. Coursera’s 2025 explainer calls NLP a subset of artificial intelligence, computer science and linguistics focused on making human communication comprehensible to computers. Put differently, NLP enables your software to convert spoken or written language into structured information and back again. It powers voice‑activated assistants, chatbots, translation tools and sentiment analysis. The global market for NLP reflects its importance: Precedence Research estimates the market will be worth USD 42.47 billion in 2025 and grow to around USD 791.16 billion by 2034, a compound annual growth rate of about 38.4%.
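As a quick sanity check on the market figures, the growth rate implied by the two estimates can be recomputed. This is a minimal sketch that assumes nine compounding years between 2025 and 2034; the function name `cagr` is illustrative and does not come from the cited report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1

# Market estimates quoted above, in USD billions.
# Assumption: 2025 -> 2034 is treated as nine compounding years.
rate = cagr(42.47, 791.16, years=2034 - 2025)
print(f"Implied CAGR: {rate:.1%}")
```

The result lands very close to the quoted 38.4%, which suggests the two estimates and the growth rate are consistent with each other.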

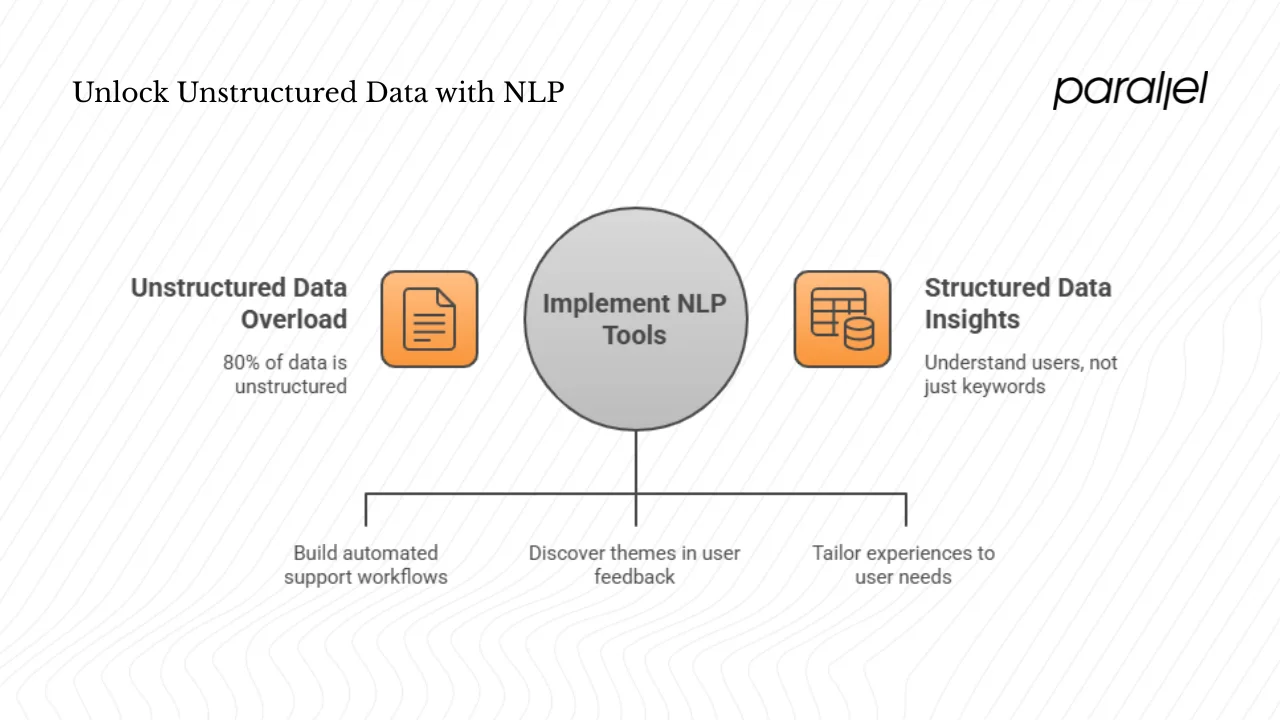

Why should founders and product leaders care? Because nearly 80% of the world’s data is unstructured. Emails, tweets, reviews and voice recordings can hold clues about user needs, pain points and preferences. Without a way to process them at scale, teams rely on anecdote or manual tagging. NLP tools unlock that data and let you build conversational interfaces, automate support workflows, discover themes in feedback and personalise experiences. As of 2025, roughly 20.5% of internet users conduct voice searches and more than 8.4 billion voice assistants are in use globally. With voice and chat becoming the default interaction patterns, ignoring language understanding means ceding ground to competitors.

NLP isn’t just fancy keyword matching. Older rule‑based systems relied on handcrafted patterns and struggled with nuance. Modern NLP combines computational linguistics with machine learning and deep learning. Models learn statistical representations of words and phrases from large datasets and use those representations to infer meaning, sentiment and intent. That difference is why NLP can answer questions, summarise documents or translate idioms, whereas a simple search would fail. Think of natural language processing as the intersection of language study and data‑driven algorithms that enables your software to understand users rather than merely count keywords.

In practice, instead of matching exact phrases, modern NLP models learn from examples and capture context and relationships between words. NLP sits within the broader family of AI techniques that also includes vision and decision‑making.

Why NLP matters for product and design teams

Unstructured data everywhere

According to IDC, roughly 80% of the world’s information is unstructured. Edge Delta notes that unstructured data is growing four times faster than structured data. Startups accumulate this data through emails, chat logs, reviews, support tickets, surveys and voice messages. Manually reading, categorising and prioritising these inputs is nearly impossible once your user base grows. When we worked with an early‑stage SaaS company, they were drowning in bug reports and feature requests. By training a simple NLP classifier on a few hundred labelled tickets, we automatically routed critical issues to the right team and reduced response time from days to hours.

Emerging patterns and competitive advantage

Voice and conversational interfaces are becoming common; DemandSage reports that about 20.5% of internet users conduct voice searches and more than 8.4 billion voice assistants are in use. Effective voice interfaces need clear guidance, audio cues and error handling to avoid frustration.

Beyond interaction, adopting NLP early lets you respond to feedback faster and build features like semantic search or document automation. UX research shows that roughly half of designers were experimenting with AI by late 2024, but they emphasise that tools work best when paired with human judgment.

Foundations: linguistics, machine learning and AI

Linguistics and computational linguistics

Language has structure at multiple levels. Syntax governs how words combine into sentences. Semantics deals with meaning, and pragmatics concerns context. Computational linguistics applies linguistic theory to build algorithms that parse and interpret language. For example, tokenization splits text into smaller units (words, sub‑words or sentences); lemmatisation reduces words to their base form; part‑of‑speech tagging labels each word as a noun, verb or adjective. These processes help computers understand language beyond raw character strings.
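To make these three steps concrete, here is a minimal, standard‑library sketch. The lookup tables `LEMMAS` and `POS_TAGS` are hand‑written assumptions for illustration; production libraries ship much larger resources and use the surrounding context to decide a word’s tag.

```python
import re

# Toy lookup tables (hypothetical): real systems learn or ship far larger resources.
LEMMAS = {"crashed": "crash", "crashes": "crash", "uploading": "upload", "files": "file"}
POS_TAGS = {
    "the": "DET", "app": "NOUN", "crash": "VERB", "while": "SCONJ",
    "upload": "VERB", "large": "ADJ", "file": "NOUN",
}

def tokenize(text):
    """Tokenization: split text into lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def lemmatize(token):
    """Lemmatisation: map a token to its base form, if we know one."""
    return LEMMAS.get(token, token)

def tag(lemma):
    """Part-of-speech tagging by lookup; unknown words get 'UNK'."""
    return POS_TAGS.get(lemma, "UNK")

sentence = "The app crashed while uploading large files."
tokens = tokenize(sentence)
lemmas = [lemmatize(t) for t in tokens]
tagged = [(lemma, tag(lemma)) for lemma in lemmas]

print(tokens)
print(lemmas)
print(tagged)
```

A lookup‑based tagger cannot tell whether "crash" is a noun or a verb in a given sentence, which is one reason real taggers are trained models rather than dictionaries.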

Machine learning and deep learning

Machine learning models learn patterns from data rather than relying on fixed rules. In NLP, supervised learning uses labelled datasets to train models to classify sentiment, extract entities or translate text. Unsupervised learning finds structures like topics or clusters in unlabelled data. Eesel’s article on supervised vs unsupervised NLP notes that supervised models can label support tickets or detect spam, but they require large amounts of annotated data; unsupervised methods discover themes without labels. Many modern NLP systems blend both approaches. Deep learning, particularly transformer architectures, has revolutionised language modelling by allowing models to attend to context across entire sequences, leading to breakthroughs in translation, summarisation and generation.
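As an illustration of the supervised case, the sketch below trains a tiny multinomial Naive Bayes classifier on labelled support tickets using only the standard library. The tickets and labels are invented for the example, and Naive Bayes is just one simple technique among many; it is not taken from the articles cited above.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

def train(examples):
    """Count words per label. `examples` is a list of (text, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocab

def predict(text, model):
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        # Log prior plus log likelihood with add-one (Laplace) smoothing.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Made-up labelled tickets; a real project would need far more examples.
tickets = [
    ("App crashes when I upload a file", "bug"),
    ("Login page shows an error after update", "bug"),
    ("Export button throws an error", "bug"),
    ("Please add dark mode", "feature"),
    ("Would love an integration with Slack", "feature"),
    ("Can you add support for CSV export", "feature"),
]
model = train(tickets)
print(predict("The dashboard crashes with an error", model))
print(predict("Please add calendar integration", model))
```

With six examples this is only a toy, but the shape is the same at scale: labelled text in, word statistics learned, a label out.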

NLP within the AI umbrella

Artificial intelligence is the broader field focused on building systems that perform tasks that normally require human intelligence. NLP is one branch. Others include computer vision, reinforcement learning and robotics. Within NLP, tasks include speech recognition (converting audio to text), language understanding (identifying intent, entities and sentiment), and language generation (producing human‑like text). Large language models (LLMs) such as GPT‑4 demonstrate how far generation has come, but they also highlight the importance of data quality, bias mitigation and responsible deployment.

Key concepts and terminology

Here are a few core terms to know:

- Tokenization and lemmatisation. Breaking text into units and normalising them.

- Named‑entity recognition (NER). Identifying names of people, organisations or places.

- Sentiment analysis. Detecting polarity in text to understand how users feel.

- Text classification and topic modelling. Grouping documents by category or finding themes.

- Embeddings. Representing words or sentences as vectors that capture similarity; these power search and recommendation.
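To show how embeddings capture similarity, here is a small sketch using cosine similarity. The three‑dimensional vectors in `EMBEDDINGS` are hand‑written assumptions; real embeddings come from a trained model and typically have hundreds of dimensions.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-written for illustration only.
EMBEDDINGS = {
    "refund": [0.9, 0.1, 0.0],
    "invoice": [0.8, 0.3, 0.1],
    "payment": [0.7, 0.2, 0.1],
    "login": [0.1, 0.9, 0.2],
    "password": [0.0, 0.8, 0.3],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word, k=2):
    """Return the k words whose vectors are closest to `word`."""
    query = EMBEDDINGS[word]
    scored = [
        (other, cosine_similarity(query, vector))
        for other, vector in EMBEDDINGS.items()
        if other != word
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

print(most_similar("refund"))
print(most_similar("login", k=1))
```

Semantic search and recommendations follow the same idea: embed the query, embed the candidates, and rank by similarity.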

How NLP works

Rule‑based, statistical and deep‑learning approaches

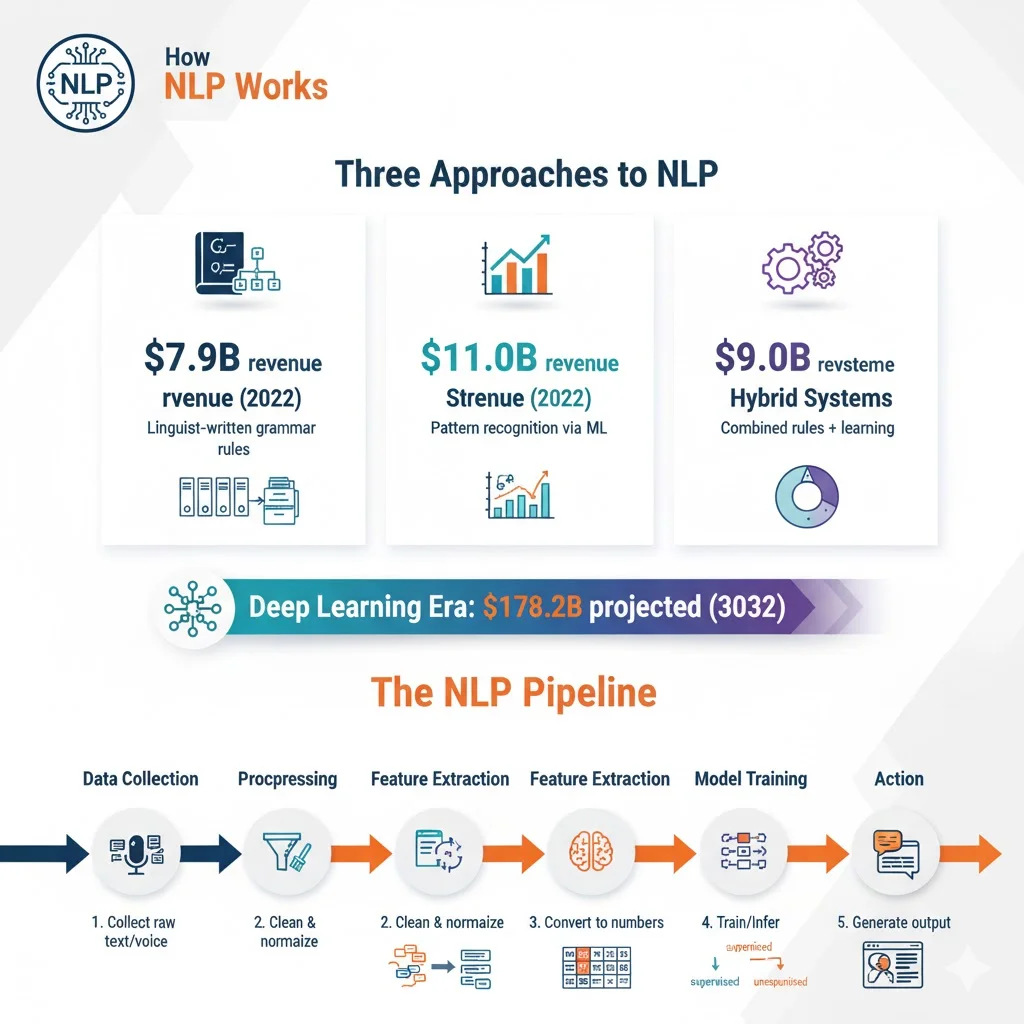

When people ask what natural language processing is, they often picture a monolithic technology. In reality there are several approaches. Rule‑based NLP relies on linguists writing grammar rules. It excels in domains with predictable language (such as form letters or simple logs) and contributed about USD 7.9 billion in revenue in 2022. Statistical NLP uses machine‑learning models to find patterns in large datasets. It powered many early breakthroughs and accounted for USD 11.0 billion in revenue in 2022. Hybrid systems combine both approaches to leverage rules and learned patterns, bringing in about USD 9.0 billion.
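A rule‑based approach can be as simple as a list of handwritten patterns. The sketch below routes support messages with regular expressions; the labels and patterns in `RULES` are hypothetical and would need tuning for any real product.

```python
import re

# Hypothetical hand-written rules: (label, pattern). The first match wins.
RULES = [
    ("billing", re.compile(r"\b(invoice|refund|charged?|payment)\b", re.IGNORECASE)),
    ("outage", re.compile(r"\b(down|outage|cannot (log ?in|connect))\b", re.IGNORECASE)),
    ("bug", re.compile(r"\b(crash(es|ed)?|error|broken)\b", re.IGNORECASE)),
]

def route(message, default="general"):
    """Return the label of the first rule that matches, else the default."""
    for label, pattern in RULES:
        if pattern.search(message):
            return label
    return default

print(route("I was charged twice, please refund me"))
print(route("The app crashed on startup"))
print(route("My money never came back"))
```

The last message is clearly about billing, yet no rule fires because none of the expected keywords appear. That brittleness is the main reason statistical and hybrid approaches took over in less predictable domains.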

Deep learning has since transformed NLP. Transformers and attention mechanisms allow models to capture context across long sequences. This shift has enabled systems like GPT‑4 and voice assistants to handle idioms and multi‑turn dialogue. The artsmart statistics page notes that hybrid and statistical approaches are projected to contribute USD 178.2 billion and USD 146.4 billion by 2032, underscoring how data‑driven methods dominate the future.
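For readers curious about what an attention mechanism computes, here is a minimal sketch of scaled dot‑product attention in plain Python. It leaves out the learned projection matrices, multiple heads and batching found in a real transformer, and the token vectors are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors (made up). Self-attention uses them as Q, K and V.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row shows how much one token "looks at" every token in the sequence. Similar tokens receive higher weights, which is how context from anywhere in a sentence can influence each word’s representation.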

Typical pipeline

At a high level, most NLP applications follow a similar pipeline:

- Data collection. Gather raw text or voice. For voice interfaces this starts with speech recognition, converting audio to text using models trained on large corpora.

- Preprocessing. Clean and normalise the text. Steps include tokenization and lemmatisation, lowercasing and removing noise. For voice, this includes handling pauses and fillers.

- Feature extraction. Convert text into numeric representations. Traditional approaches use bag‑of‑words or n‑grams; modern systems use embeddings and contextual vectors.

- Model training or inference. Use labelled data to train supervised models or unlabelled data for unsupervised approaches. The Aisera article explains that supervised learning requires well‑labelled data and iterative optimisation cycles, while unsupervised learning discovers structure in unlabelled text.

- Post‑processing and action. Interpret the model’s output and feed it into your product. In a chatbot this could mean generating a reply; in analytics it might update a dashboard.

This pipeline is often wrapped in a broader user experience. For example, a voice assistant listens, converts speech to text, identifies intent, performs the requested action and speaks the result back. Understanding the pipeline helps non‑technical teams collaborate effectively with engineers when exploring natural language processing solutions.
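The pipeline stages can be sketched end to end in a few dozen lines. This is an illustrative toy, not a production design: `STOPWORDS`, `INTENT_WEIGHTS` and the reply strings are assumptions, and the weight table stands in for a trained model.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "an", "to", "my", "is", "i", "please"}

def preprocess(text):
    """Preprocessing: lowercase, strip punctuation, drop stopwords."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [t for t in tokens if t not in STOPWORDS]

def extract_features(tokens, n=2):
    """Feature extraction: bag-of-words counts plus n-gram counts."""
    ngrams = [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return Counter(tokens) + Counter(ngrams)

# Stand-in for a trained model: hypothetical per-intent feature weights.
INTENT_WEIGHTS = {
    "reset_password": {"reset": 1.0, "password": 1.0, "reset password": 2.0},
    "cancel_plan": {"cancel": 1.5, "subscription": 1.0, "cancel subscription": 2.0},
}

def infer(features):
    """Inference: score each intent and return the best, or 'unknown'."""
    scores = {
        intent: sum(weight * features[name] for name, weight in weights.items())
        for intent, weights in INTENT_WEIGHTS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"

def act(intent):
    """Post-processing: map the model output to a product action."""
    replies = {
        "reset_password": "I have sent you a password reset link.",
        "cancel_plan": "I can help you cancel. Could you confirm your plan?",
    }
    return replies.get(intent, "Let me connect you with a human agent.")

def handle(message):
    return act(infer(extract_features(preprocess(message))))

print(handle("Please reset my password!"))
print(handle("I want to cancel the subscription"))
print(handle("Where is my order?"))
```

Keeping each stage as its own function mirrors how teams usually divide the work: data cleaning, feature choices and the model can each be swapped out without touching the product‑facing action step.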

Limitations and challenges

Language is messy. Different languages have distinct syntax and idioms, and slang evolves quickly. Models can be sensitive to biases in the training data. Without enough diverse examples they may misinterpret minority dialects or amplify harmful stereotypes. Domain adaptation is another hurdle: a model trained on movie reviews may perform poorly on medical records. Finally, many teams focus on English and ignore local languages. As we’ve seen working with Indian startups, multilingual support is vital—your first NLP feature might need to handle Hindi and Marathi as well as English. Recognising these challenges early helps you set realistic expectations when people ask what natural language processing is and what it can do for your users.

Common use‑cases in product and business

Natural language processing isn’t just about chatbots. Startups can apply it to:

- Conversational agents and voice interfaces. Bots that answer common questions and handle voice commands free up support teams while giving users a natural way to interact.

- Feedback mining. Automatically detect sentiment and themes in customer reviews, surveys and support logs to prioritise improvements.

- Document automation. Extract names, dates and other entities from contracts, invoices and résumés, enabling faster workflows.

- Search and recommendations. Understand the intent behind queries to surface semantically relevant results instead of simple keyword matches.

When describing these examples to stakeholders, remind them that NLP is simply a toolbox for extracting meaning and structure from language, not a silver bullet.

Practical considerations for startups and product leaders

Before diving into NLP, clarify the problem and audit your data. Decide whether to use an off‑the‑shelf API or build a custom model—supervised methods require labels, while unsupervised methods can discover patterns without them. Off‑the‑shelf tools are quick to integrate but may struggle with domain‑specific jargon; custom models require more expertise but can provide better accuracy.

Performance, maintenance and bias matter too. Track precision and latency, watch for model drift and test across diverse user groups to avoid unfair outcomes. Good user experience is as important as the model: voice assistants need to handle errors gracefully and chatbots should allow hand‑off to humans. Always respect privacy, anonymise sensitive data and put guardrails in place to avoid harmful output. Start small, keep your scope narrow and prioritise features that move your core metrics.
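Tracking precision and latency does not require heavy tooling to begin with. The sketch below computes precision and recall for one class and times a call; the labels are invented, and the helper names `precision_recall` and `timed` are illustrative.

```python
import time

def precision_recall(y_true, y_pred, positive):
    """Precision and recall for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    fp = sum(1 for t, p in pairs if p == positive and t != positive)
    fn = sum(1 for t, p in pairs if p != positive and t == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, (time.perf_counter() - start) * 1000

# Hypothetical labels from a hand-checked sample of tickets.
y_true = ["urgent", "normal", "urgent", "normal", "urgent", "normal"]
y_pred = ["urgent", "urgent", "urgent", "normal", "normal", "normal"]

(precision, recall), elapsed_ms = timed(precision_recall, y_true, y_pred, "urgent")
print(f"precision={precision:.2f} recall={recall:.2f} ({elapsed_ms:.3f} ms)")
```

Running the same calculation separately for each language or user segment is a simple way to spot groups the model serves poorly, and repeating it on fresh samples over time helps reveal drift.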

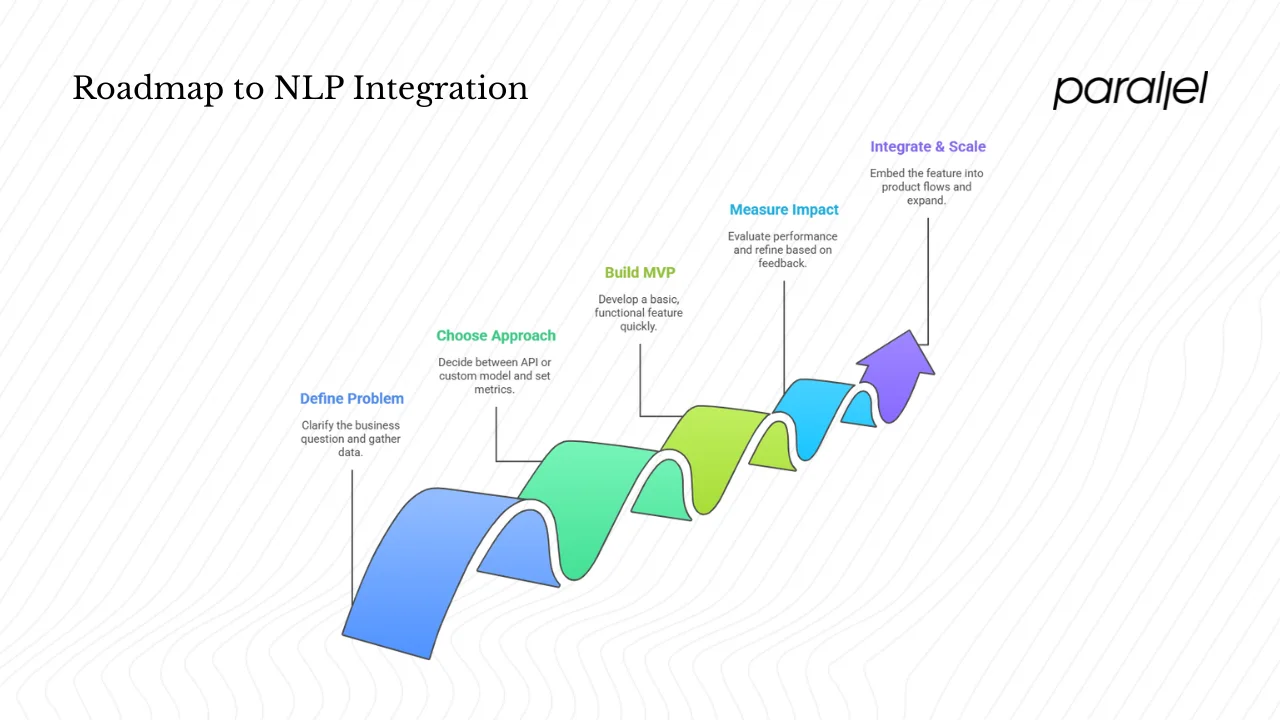

Roadmap: getting started with NLP at your startup

The best way to learn is to build something. Here is a practical roadmap:

- Define the problem and audit your data. Clarify the business question you’re solving and gather enough text or audio samples to work with. A basic classifier can be built with a few hundred labelled examples.

- Choose an approach and set metrics. Decide between using an off‑the‑shelf API or building your own model. Supervised methods need labels, while unsupervised methods can find patterns. Pick metrics such as accuracy or response time before you start.

- Build a minimum viable feature. Start small—auto‑tag support tickets or surface insights from reviews. Don’t aim for perfection; focus on delivering something useful quickly.

- Measure impact and iterate. Compare metrics before and after your feature goes live and refine based on user feedback. Add languages, improve labels and adjust thresholds as you learn.

- Integrate and scale. Once the MVP works, embed it into your product flows. Use sentiment scores to prioritise features or route tickets and add voice or search when needed.

Following this roadmap will help you focus your efforts and invest your limited resources wisely.

Trends and what’s next

Generative AI and large language models such as GPT‑4 have made NLP mainstream. They can write text and code, but they still hallucinate and reproduce biases. Many teams pair them with smaller, domain‑specific models to keep responses on track.

NLP is also becoming more multilingual and multimodal. Models that support many languages at once will help startups serve global users. Voice, text and images are converging, and hardware optimisations—like Intel’s oneAPI integration with IBM’s Watson NLP Library, which improved function throughput by up to 35% on 3rd Gen processors and 165% on 4th Gen processors—will make real‑time applications more responsive. Edge deployment will bring some processing onto devices, reducing latency and improving privacy.

Ethical and domain‑specific NLP will stay front of mind. Teams must mitigate bias and protect user data. Containerised libraries such as IBM Watson’s allow developers to embed NLP into systems while filtering out hate speech and profanity. Domain‑specific models tuned for fields like health care or finance will continue to emerge.

Conclusion: bringing NLP into your startup

If you’ve wondered what natural language processing is, the answer is simple: it’s about teaching computers to understand and generate human language. For early‑stage startups, NLP unlocks insights from unstructured data, powers new interfaces and creates a competitive edge. The real value comes from solving specific user problems. Start small, pick a focused use case, build or integrate a tool, and measure its impact. Keep your design human‑centered. Combine automated processing with human judgment. With the right mindset, NLP can help your team work smarter and your product feel more personal.

Frequently asked questions

1) What do you mean by natural language processing?

It’s the field that combines linguistics, machine learning and computer science to give computers the ability to process, interpret and generate human language, whether written or spoken. If you’ve ever used a voice assistant or seen a support ticket auto‑categorised, you’ve seen it in action.

2) Is ChatGPT an NLP tool?

ChatGPT uses techniques from NLP and is built on large language models. It can understand and generate text. However, NLP as a field encompasses many tasks beyond chat, such as speech recognition, translation and information extraction. So ChatGPT is an advanced application of NLP, but it isn’t the whole field.

3) What is NLP, with an example?

Natural language processing enables machines to understand and act on human language. Examples include automatically classifying customer feedback into “positive” or “negative”, extracting names and dates from emails, or allowing someone to speak a command into a smart speaker and have it translated into an action.

4) Is NLP a form of AI?

Yes. NLP is a branch of artificial intelligence that deals specifically with language understanding and generation. Other branches of AI address vision, decision‑making and robotics. When people ask what natural language processing is, they’re really asking about one important piece of the broader AI puzzle.
